Fatemeh Noroozi

Industry 4.0 and Prospects of Circular Economy: A Survey of Robotic Assembly and Disassembly

Jun 14, 2021

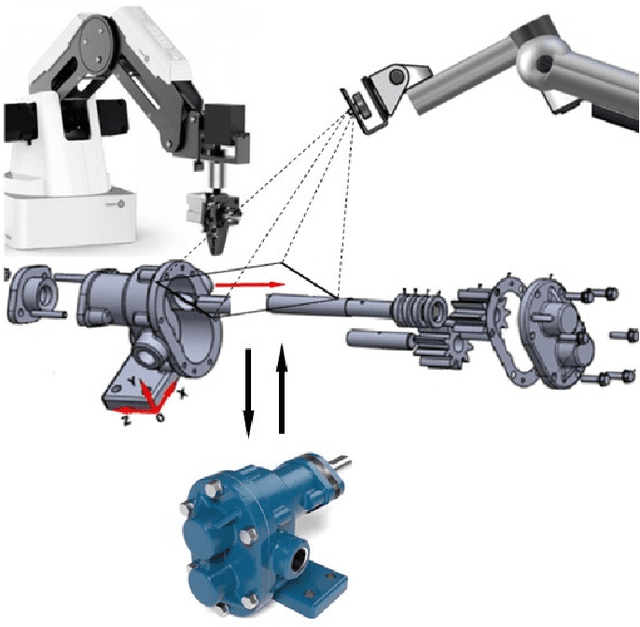

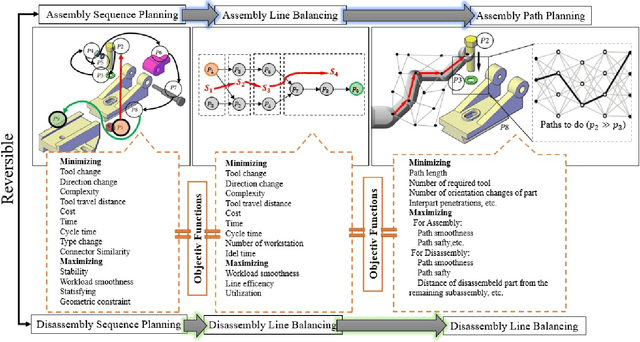

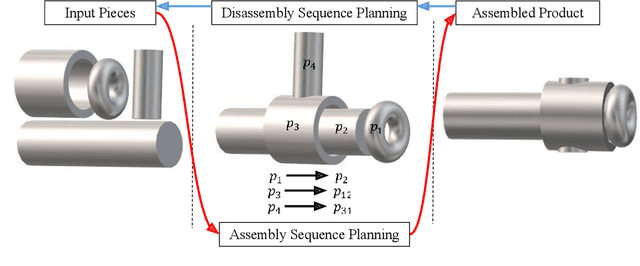

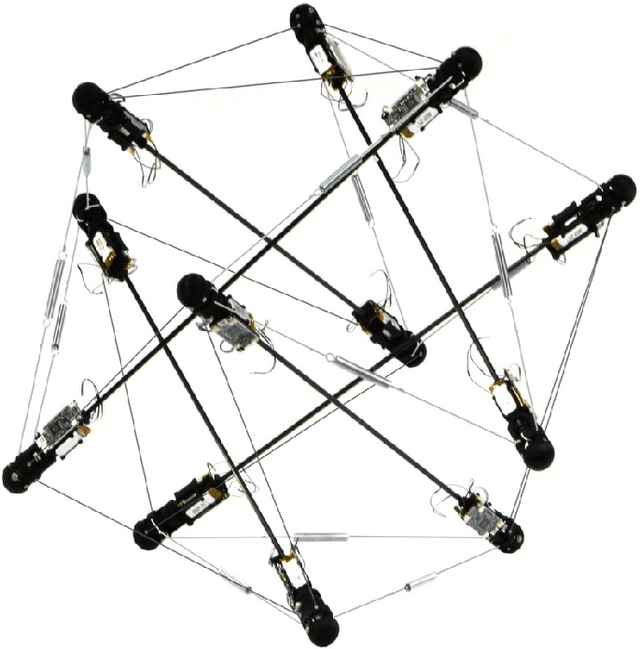

Abstract: Despite their contributions to the financial efficiency and environmental sustainability of industrial processes, robotic assembly and disassembly have been understudied in the existing literature. This is at odds with their importance in realizing the Fourth Industrial Revolution. More specifically, although most of the literature has extensively discussed how to optimally assemble or disassemble given products, the role of other factors has been overlooked. One example is the types of robots involved in implementing the sequence plans, which should ideally be taken into account throughout the whole chain consisting of design, assembly, disassembly and reassembly. Isolating the foregoing operations from the rest of the components of the relevant ecosystems may lead to erroneous inferences about both the necessity and efficiency of the underlying procedures. In this paper, we try to alleviate these shortcomings by comprehensively investigating the state of the art in robotic assembly and disassembly. We consider and review various aspects of manufacturing and remanufacturing frameworks while particularly focusing on their desirability for supporting a circular economy.

A Study of Language and Classifier-independent Feature Analysis for Vocal Emotion Recognition

Nov 14, 2018

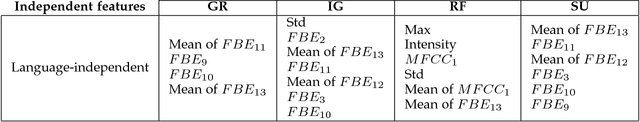

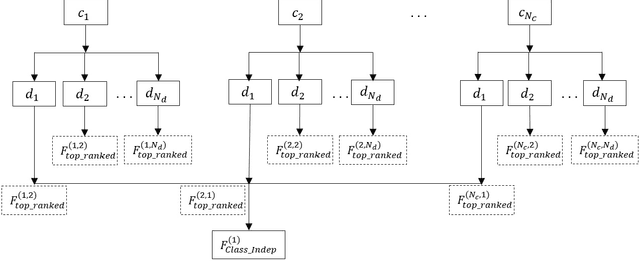

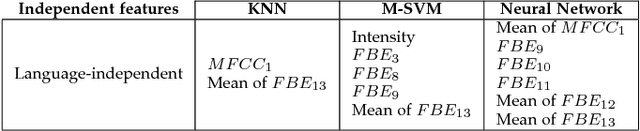

Abstract: Every speech signal carries implicit information about the speaker's emotions, which can be extracted by speech processing methods. In this paper, we propose an algorithm for extracting features that are independent of the spoken language and the classification method, so as to achieve comparatively good recognition performance across different languages regardless of the classifier employed. The proposed algorithm is composed of three stages. In the first stage, we propose a feature ranking method analyzing the state-of-the-art voice quality features. In the second stage, we propose a method for finding the subset of the common features for each language and classifier. In the third stage, we compare our approach with the recognition rate of the state-of-the-art filter methods. We use three databases in different languages, namely, Polish, Serbian and English. Three different classifiers, namely, nearest neighbour, support vector machine and gradient descent neural network, are also employed. It is shown that our method for selecting the most significant language-independent and method-independent features outperforms state-of-the-art filter methods in many cases.
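The first two stages described above (rank features, then keep those that rank highly in every language) can be illustrated with a minimal sketch. This is not the paper's method: the Fisher score is a standard filter criterion swapped in here as an assumption, and the function names, the toy datasets `lang_a` and `lang_b`, and the cutoff `k` are all hypothetical.

```python
from statistics import mean, pvariance


def fisher_scores(samples, labels):
    """Return one Fisher score per feature column (higher = more discriminative).

    Assumption: the Fisher score stands in for the paper's own ranking criterion.
    """
    classes = sorted(set(labels))
    scores = []
    for j in range(len(samples[0])):
        column = [row[j] for row in samples]
        overall_mean = mean(column)
        between = 0.0  # spread of the class means around the overall mean
        within = 0.0   # spread of the samples inside each class
        for c in classes:
            values = [row[j] for row, y in zip(samples, labels) if y == c]
            between += len(values) * (mean(values) - overall_mean) ** 2
            within += len(values) * pvariance(values)
        if within > 0:
            scores.append(between / within)
        else:
            # Zero within-class spread: perfectly separable or constant feature.
            scores.append(float("inf") if between > 0 else 0.0)
    return scores


def top_k_features(samples, labels, k):
    """Indices of the k highest-scoring features for one dataset."""
    scores = fisher_scores(samples, labels)
    ranked = sorted(range(len(scores)), key=lambda j: scores[j], reverse=True)
    return set(ranked[:k])


def common_features(datasets, k):
    """Features that appear in the top k of every dataset (e.g. every language)."""
    selected = [top_k_features(x, y, k) for x, y in datasets]
    return sorted(set.intersection(*selected))


# Hypothetical toy data: two "languages", three features, two emotion classes.
lang_a = (
    [[1.0, 5.0, 0.3], [1.2, 5.1, 0.9], [3.0, 9.0, 0.4], [3.1, 9.2, 0.8]],
    [0, 0, 1, 1],
)
lang_b = (
    [[2.0, 4.0, 10.0], [2.2, 6.0, 10.5], [5.0, 4.1, 20.0], [5.3, 5.9, 21.0]],
    [0, 0, 1, 1],
)

shared = common_features([lang_a, lang_b], k=2)
print(shared)
```

In this toy setup, feature 0 separates the classes in both datasets, while features 1 and 2 are each informative in only one, so only feature 0 survives the intersection. Extending the idea to classifier independence would need a wrapper-style score per classifier, which this sketch does not attempt.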

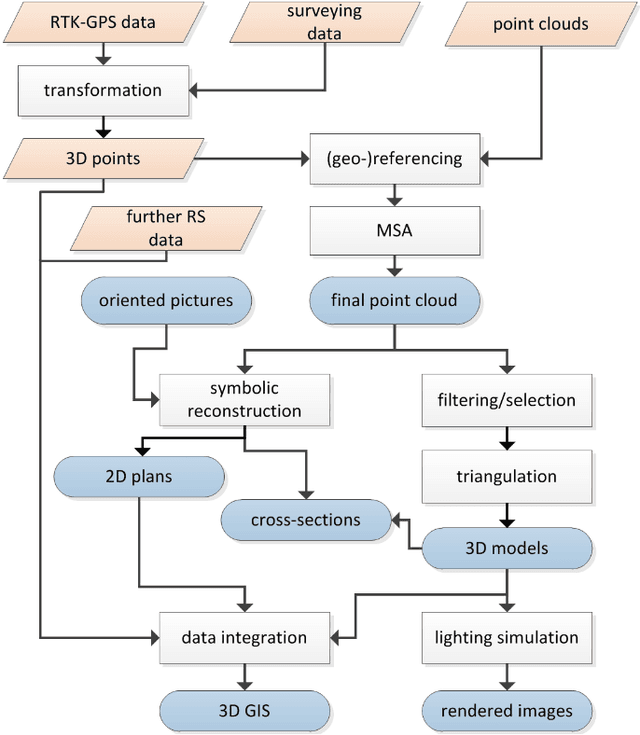

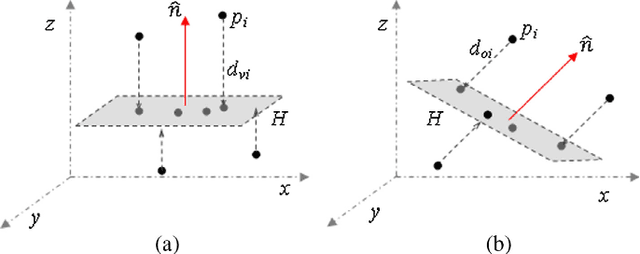

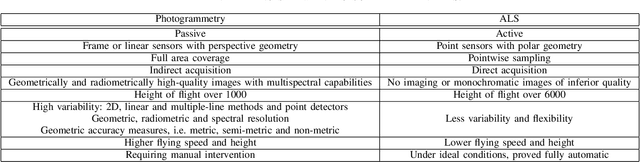

3D Scanning: A Comprehensive Survey

Jan 24, 2018

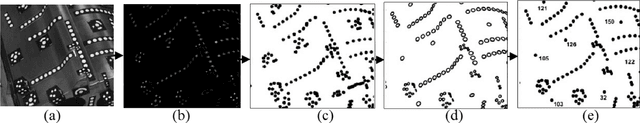

Abstract: This paper provides an overview of 3D scanning methodologies and technologies proposed in the existing scientific and industrial literature. Throughout the paper, various types of related techniques are reviewed, which consist mainly of close-range, aerial, structure-from-motion and terrestrial photogrammetry, and mobile, terrestrial and airborne laser scanning, as well as time-of-flight, structured-light and phase-comparison methods, along with comparative and combinational studies, the latter being intended to help clarify the relevance and reliability of the possible choices. Moreover, outlier detection and surface fitting procedures, which are necessary post-processing stages, are discussed concisely.

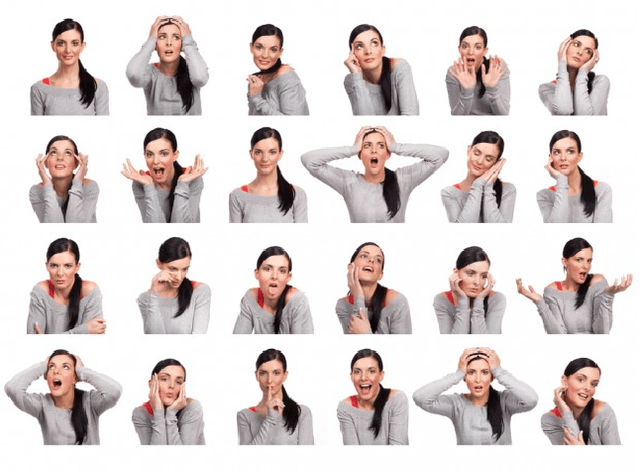

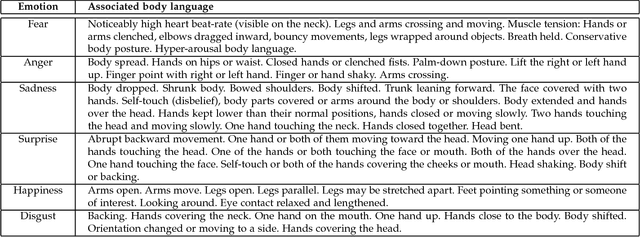

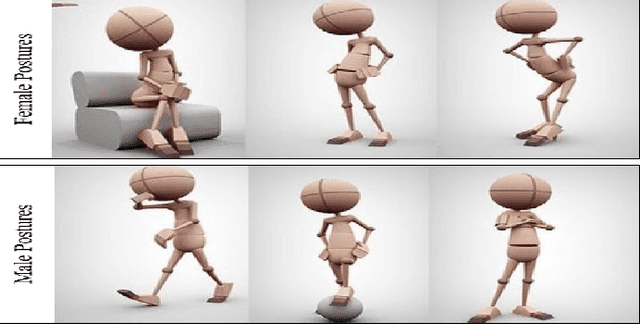

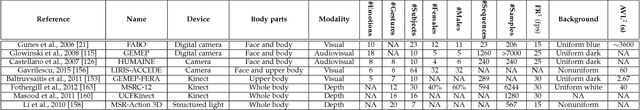

Survey on Emotional Body Gesture Recognition

Jan 23, 2018

Abstract: Automatic emotion recognition has become a trending research topic in the past decade. While works based on facial expressions or speech abound, recognizing affect from body gestures remains a less explored topic. We present a new comprehensive survey hoping to boost research in the field. We first introduce emotional body gestures as a component of what is commonly known as "body language" and comment on general aspects such as gender differences and culture dependence. We then define a complete framework for automatic emotional body gesture recognition. We introduce person detection and comment on static and dynamic body pose estimation methods in both RGB and 3D. We then comment on the recent literature related to representation learning and emotion recognition from images of emotionally expressive gestures. We also discuss multi-modal approaches that combine speech or face with body gestures for improved emotion recognition. While pre-processing methodologies (e.g. human detection and pose estimation) are nowadays mature technologies fully developed for robust large-scale analysis, we show that for emotion recognition the quantity of labelled data is scarce, there is no agreement on clearly defined output spaces, and the representations are shallow and largely based on naive geometrical representations.
