Cédric Pradalier

Evaluating Robustness of Deep Reinforcement Learning for Autonomous Surface Vehicle Control in Field Tests

May 15, 2025

Abstract: Despite significant advancements in Deep Reinforcement Learning (DRL) for Autonomous Surface Vehicles (ASVs), their robustness in real-world conditions, particularly under external disturbances, remains insufficiently explored. In this paper, we evaluate the resilience of a DRL-based agent designed to capture floating waste under various perturbations. We train the agent using domain randomization and evaluate its performance in real-world field tests, assessing its ability to handle unexpected disturbances such as asymmetric drag and an off-center payload. We assess the agent's performance under these perturbations in both simulation and real-world experiments, quantifying performance degradation and benchmarking it against an MPC baseline. Results indicate that the DRL agent performs reliably despite significant disturbances. Along with the open-source release of our implementation, we provide insights into effective training strategies, real-world challenges, and practical considerations for deploying DRL-based ASV controllers.

PolygoNet: Leveraging Simplified Polygonal Representation for Effective Image Classification

Apr 01, 2025

Abstract: Deep learning models have achieved significant success in various image-related tasks. However, they often encounter challenges related to computational complexity and overfitting. In this paper, we propose an efficient approach that leverages polygonal representations of images using dominant points or contour coordinates. By transforming input images into these compact forms, our method significantly reduces computational requirements, accelerates training, and conserves resources, making it suitable for real-time and resource-constrained applications. These representations inherently capture essential image features while filtering noise, providing a natural regularization effect that mitigates overfitting. The resulting lightweight models achieve performance comparable to state-of-the-art methods using full-resolution images while enabling deployment on edge devices. Extensive experiments on benchmark datasets validate the effectiveness of our approach in reducing complexity, improving generalization, and facilitating edge computing applications. This work demonstrates the potential of polygonal representations in advancing efficient and scalable deep learning solutions for real-world scenarios. The code for the experiments in the paper is provided at https://github.com/salimkhazem/PolygoNet.

Hyperspectral Neural Radiance Fields

Mar 21, 2024

Abstract: Hyperspectral Imagery (HSI) has been used in many applications to non-destructively determine the material and/or chemical compositions of samples. There is growing interest in creating 3D hyperspectral reconstructions, which could provide both spatial and spectral information while also mitigating common HSI challenges such as non-Lambertian surfaces and translucent objects. However, traditional 3D reconstruction with HSI is difficult due to technological limitations of hyperspectral cameras. In recent years, Neural Radiance Fields (NeRFs) have seen widespread success in creating high-quality volumetric 3D representations of scenes captured by a variety of camera models. Leveraging recent advances in NeRFs, we propose computing a hyperspectral 3D reconstruction in which every point in space and view direction is characterized by wavelength-dependent radiance and transmittance spectra. To evaluate our approach, a dataset containing nearly 2000 hyperspectral images across 8 scenes and 2 cameras was collected. We perform comparisons against traditional RGB NeRF baselines and apply ablation testing with alternative spectra representations. Finally, we demonstrate the potential of hyperspectral NeRFs for hyperspectral super-resolution and imaging sensor simulation. We show that our hyperspectral NeRF approach enables creating fast, accurate volumetric 3D hyperspectral scenes and enables several new applications and areas for future study.

Trust and Acceptance of Multi-Robot Systems "in the Wild". A Roadmap exemplified within the EU-Project BugWright2

Dec 13, 2023

Abstract: This paper outlines a roadmap to effectively leverage shared mental models in multi-robot, multi-stakeholder scenarios, drawing on experiences from the BugWright2 project. The discussion centers on an autonomous multi-robot system designed for ship inspection and maintenance. A significant challenge in the development and implementation of this system is the calibration of trust. To address this, the paper proposes that trust calibration can be managed and optimized through the creation and continual updating of shared and accurate mental models of the robots. Strategies to promote these mental models, including cross-training, briefings, debriefings, and task-specific elaboration and visualization, are examined. Additionally, the crucial role of an adaptable, distributed, and well-structured user interface (UI) is discussed.

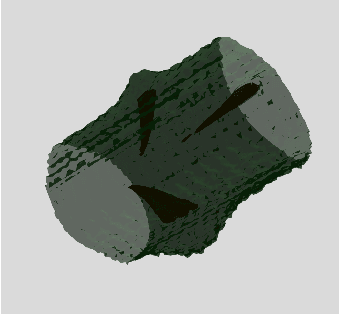

Improving Knot Prediction in Wood Logs with Longitudinal Feature Propagation

Aug 22, 2023

Abstract: The quality of a wood log in the wood industry depends heavily on the presence of both outer and inner defects, including inner knots that are a result of the growth of tree branches. Today, locating the inner knots requires the use of expensive equipment such as X-ray scanners. In this paper, we address the task of predicting the location of inner defects from the outer shape of the logs. The dataset is built by extracting both the contours and the knots with X-ray measurements. We propose to solve this binary segmentation task by leveraging convolutional recurrent neural networks. Once the neural network is trained, inference can be performed from the outer shape measured with cheap devices such as laser profilers. We demonstrate the effectiveness of our approach on fir and spruce tree species and perform ablation on the recurrence to demonstrate its importance.

Integrating Visual and Semantic Similarity Using Hierarchies for Image Retrieval

Aug 16, 2023

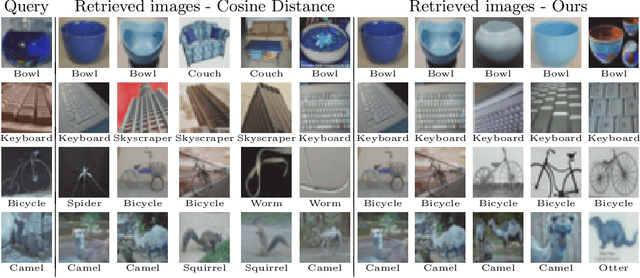

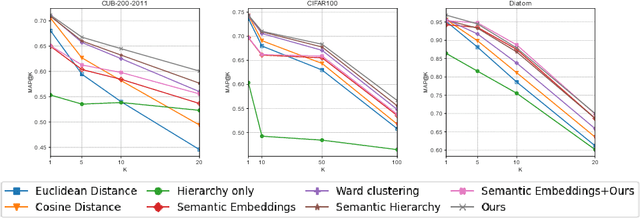

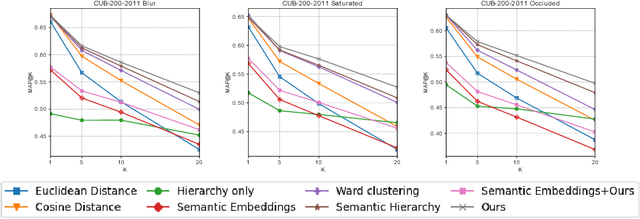

Abstract: Most research in content-based image retrieval (CBIR) focuses on developing robust feature representations that can effectively retrieve instances from a database of images that are visually similar to a query. However, the retrieved images are sometimes not semantically related to the query. To address this, we propose a method for CBIR that captures both visual and semantic similarity using a visual hierarchy. The hierarchy is constructed by merging classes with overlapping features in the latent space of a deep neural network trained for classification, assuming that overlapping classes share high visual and semantic similarities. Finally, the constructed hierarchy is integrated into the distance calculation metric for similarity search. Experiments on the standard datasets CUB-200-2011 and CIFAR100, and on a real-life use case using diatom microscopy images, show that our method achieves superior performance compared to existing image retrieval methods.
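The abstract does not say how the hierarchy enters the distance metric. As a purely illustrative sketch, and not the authors' formulation, one simple possibility is to add a weighted tree distance between class labels to the visual feature distance. The names `hierarchy_distance`, `combined_distance`, the `parent` map, and the `weight` parameter are all hypothetical.

```python
import math


def ancestors(node, parent):
    """Return the path from `node` up to the root, inclusive."""
    path = [node]
    while node in parent:
        node = parent[node]
        path.append(node)
    return path


def hierarchy_distance(a, b, parent):
    """Number of edges between classes a and b via their lowest common ancestor."""
    path_a = ancestors(a, parent)
    path_b = ancestors(b, parent)
    steps_in_b = {n: i for i, n in enumerate(path_b)}
    for steps_in_a, n in enumerate(path_a):
        if n in steps_in_b:
            return steps_in_a + steps_in_b[n]
    raise ValueError("classes do not share a common ancestor")


def combined_distance(feat_q, feat_x, cls_q, cls_x, parent, weight=0.5):
    """Assumed form: Euclidean feature distance plus weighted hierarchy distance."""
    visual = math.dist(feat_q, feat_x)
    semantic = hierarchy_distance(cls_q, cls_x, parent)
    return visual + weight * semantic


# Toy hierarchy (child -> parent); the class names are illustrative only.
parent = {"sparrow": "bird", "finch": "bird", "bird": "root", "truck": "root"}
same_branch = combined_distance([0.0, 0.0], [3.0, 4.0], "sparrow", "finch", parent)
other_branch = combined_distance([0.0, 0.0], [3.0, 4.0], "sparrow", "truck", parent)
```

With identical visual distance, the database image from the sibling class ranks ahead of the one from an unrelated branch. In practice the query's class would have to come from the classifier's prediction, which is a further assumption of this sketch.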

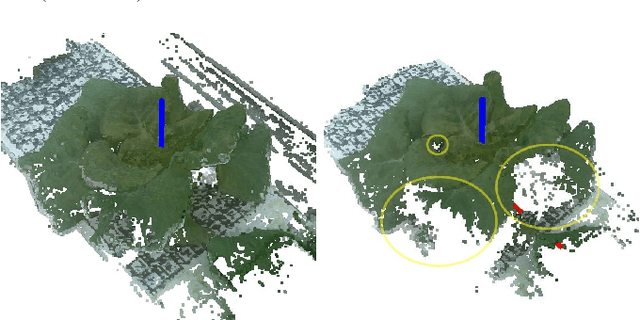

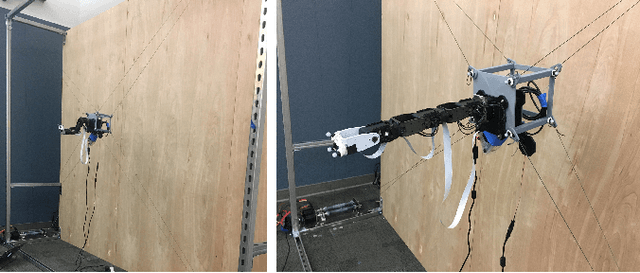

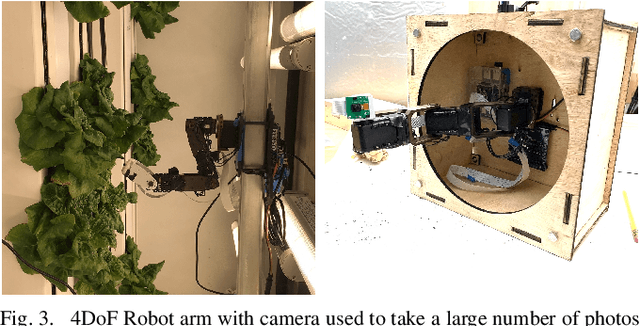

A Hybrid Cable-Driven Robot for Non-Destructive Leafy Plant Monitoring and Mass Estimation using Structure from Motion

Sep 19, 2022

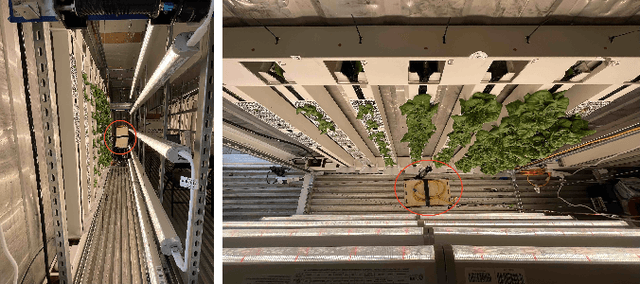

Abstract: We propose a novel hybrid cable-based robot with manipulator and camera for high-accuracy, medium-throughput plant monitoring in a vertical hydroponic farm and, as an example application, demonstrate non-destructive plant mass estimation. Plant monitoring with high temporal and spatial resolution is important to both farmers and researchers to detect anomalies and develop predictive models for plant growth. The availability of high-quality, off-the-shelf structure-from-motion (SfM) and photogrammetry packages has enabled a vibrant community of roboticists to apply computer vision for non-destructive plant monitoring. While existing approaches tend to focus on either high-throughput (e.g. satellite, unmanned aerial vehicle (UAV), vehicle-mounted, conveyor-belt imagery) or high-accuracy/robustness to occlusions (e.g. turn-table scanner or robot arm), we propose a middle-ground that achieves high accuracy with a medium-throughput, highly automated robot. Our design pairs the workspace scalability of a cable-driven parallel robot (CDPR) with the dexterity of a 4-degree-of-freedom (DoF) robot arm to autonomously image many plants from a variety of viewpoints. We describe our robot design and demonstrate it experimentally by collecting daily photographs of 54 plants from 64 viewpoints each. We show that our approach can produce scientifically useful measurements, operate fully autonomously after initial calibration, and produce better reconstructions and plant property estimates than those of over-canopy methods (e.g. UAV). As example applications, we show that our system can successfully estimate plant mass with a Mean Absolute Error (MAE) of 0.586 g and, when used to perform hypothesis testing on the relationship between mass and age, produces p-values comparable to ground-truth data (p=0.0020 and p=0.0016, respectively).

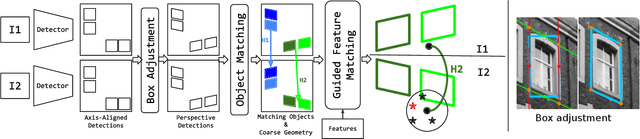

Object-Guided Day-Night Visual Localization in Urban Scenes

Feb 09, 2022

Abstract: We introduce Object-Guided Localization (OGuL) based on a novel method of local-feature matching. Direct matching of local features is sensitive to significant changes in illumination. In contrast, object detection often survives severe changes in lighting conditions. The proposed method first detects semantic objects and establishes correspondences of those objects between images. Object correspondences provide local coarse alignment of the images in the form of a planar homography. These homographies are then used to guide the matching of local features. Experiments on standard urban localization datasets (Aachen, Extended-CMU-Season, RobotCar-Season) show that OGuL significantly improves localization results with local features as simple as SIFT, and its performance is competitive with state-of-the-art CNN-based methods trained for day-to-night localization.
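To illustrate what guiding feature matching with a homography can look like, here is a minimal sketch under stated assumptions. It projects each keypoint of the first image through a given 3x3 homography and only considers candidates in the second image that fall within a search radius of the projection. The function names, the `radius` parameter, and the `descriptor_dist` callback are hypothetical, and the abstract does not confirm that OGuL works exactly this way.

```python
import math


def apply_homography(H, point):
    """Map a 2D point through a 3x3 homography given as nested lists.

    Assumes the projective scale w is non-zero for the given point.
    """
    x, y = point
    u = H[0][0] * x + H[0][1] * y + H[0][2]
    v = H[1][0] * x + H[1][1] * y + H[1][2]
    w = H[2][0] * x + H[2][1] * y + H[2][2]
    return (u / w, v / w)


def guided_matches(H, keypoints_a, keypoints_b, descriptor_dist, radius=10.0):
    """For each keypoint in A, pick the best-descriptor candidate in B
    among those lying within `radius` pixels of its projection H * a."""
    matches = []
    for i, pa in enumerate(keypoints_a):
        px, py = apply_homography(H, pa)
        best_j, best_d = None, float("inf")
        for j, pb in enumerate(keypoints_b):
            if math.hypot(pb[0] - px, pb[1] - py) > radius:
                continue  # outside the region predicted by the homography
            d = descriptor_dist(i, j)
            if d < best_d:
                best_j, best_d = j, d
        if best_j is not None:
            matches.append((i, best_j))
    return matches


# Toy example: the homography is a pure 5-pixel shift in x (illustrative values).
H = [[1.0, 0.0, 5.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]
keypoints_a = [(0.0, 0.0), (100.0, 100.0)]
keypoints_b = [(5.0, 1.0), (300.0, 300.0), (6.0, 0.0)]
# Made-up descriptor distances; unlisted pairs default to 1.0.
table = {(0, 0): 0.9, (0, 1): 0.1, (0, 2): 0.2}
matches = guided_matches(H, keypoints_a, keypoints_b,
                         lambda i, j: table.get((i, j), 1.0))
```

In the toy data, the candidate with the smallest descriptor distance lies far from the projected location and is rejected, which is the kind of false match a geometric prior is meant to suppress.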

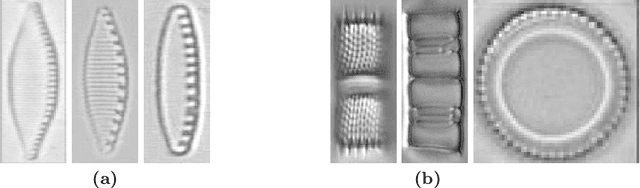

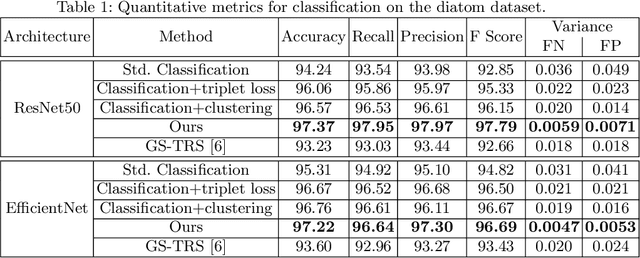

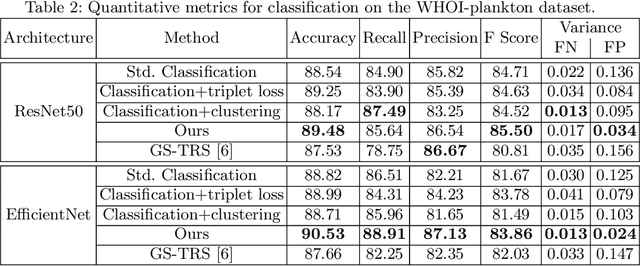

Tackling Inter-Class Similarity and Intra-Class Variance for Microscopic Image-based Classification

Sep 24, 2021

Abstract: Automatic classification of aquatic microorganisms is based on the morphological features extracted from individual images. Current work on their classification does not consider the inter-class similarity and intra-class variance that cause misclassification. We are particularly interested in the case where variance within a class occurs due to discrete visual changes in microscopic images. In this paper, we propose to account for it by partitioning the classes with high variance based on the visual features. Our algorithm automatically decides the optimal number of sub-classes to create and considers each of them as a separate class for training. This way, the network learns finer-grained visual features. Our experiments on two databases of freshwater benthic diatoms and marine plankton show that our method can outperform the state-of-the-art approaches for classification of these aquatic microorganisms.

A Survey On 3D Inner Structure Prediction from its Outer Shape

Feb 11, 2020

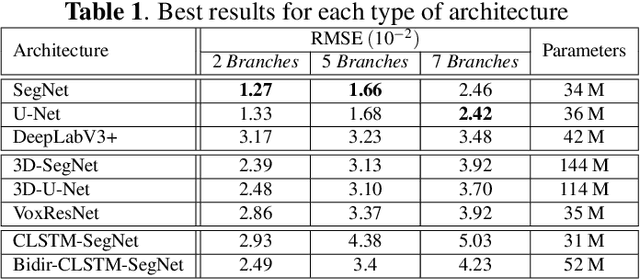

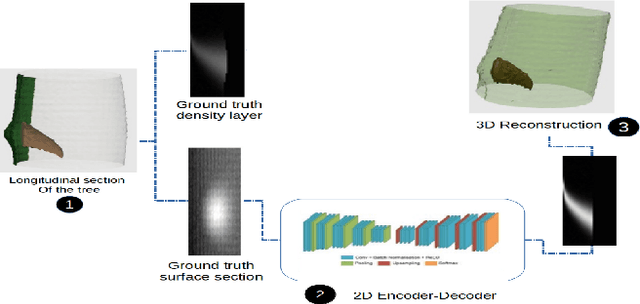

Abstract: The analysis of the internal structure of trees is highly important for forest experts, biological scientists, and the wood industry. Traditionally, CT scanners are considered the most efficient way to get an accurate inner representation of the tree. However, this method requires a significant investment, which reduces the cost-effectiveness of the operation. Our goal is to design neural-network-based methods to predict the internal density of the tree from its external bark shape. This paper compares different image-to-image (2D), volume-to-volume (3D), and Convolutional Long Short-Term Memory based neural network architectures for predicting the defect distribution inside trees from their external bark shape. These models are trained on a synthetic dataset of 1800 CT-scan-like volumetric structures of the internal density of the trees and their corresponding external surfaces.
