Yongsheng Chen

Hyperspectral Gaussian Splatting

May 28, 2025Abstract:Hyperspectral imaging (HSI) has been widely used in agricultural applications for non-destructive estimation of plant nutrient composition and precise determination of nutritional elements in samples. Recently, 3D reconstruction methods have been used to create implicit neural representations of HSI scenes, which can help localize the target object's nutrient composition spatially and spectrally. Neural Radiance Field (NeRF) is a cutting-edge implicit representation that can render hyperspectral channel compositions of each spatial location from any viewing direction. However, it faces limitations in training time and rendering speed. In this paper, we propose Hyperspectral Gaussian Splatting (HS-GS), which combines the state-of-the-art 3D Gaussian Splatting (3DGS) with a diffusion model to enable 3D explicit reconstruction of the hyperspectral scenes and novel view synthesis for the entire spectral range. To enhance the model's ability to capture fine-grained reflectance variations across the light spectrum and leverage correlations between adjacent wavelengths for denoising, we introduce a wavelength encoder to generate wavelength-specific spherical harmonics offsets. We also introduce a novel Kullback--Leibler divergence-based loss to mitigate the spectral distribution gap between the rendered image and the ground truth. A diffusion model is further applied for denoising the rendered images and generating photorealistic hyperspectral images. We present extensive evaluations on five diverse hyperspectral scenes from the Hyper-NeRF dataset to show the effectiveness of our proposed HS-GS framework. The results demonstrate that HS-GS achieves new state-of-the-art performance among all previously published methods. Code will be released upon publication.

Hyperspectral Neural Radiance Fields

Mar 21, 2024Abstract:Hyperspectral Imagery (HSI) has been used in many applications to non-destructively determine the material and/or chemical compositions of samples. There is growing interest in creating 3D hyperspectral reconstructions, which could provide both spatial and spectral information while also mitigating common HSI challenges such as non-Lambertian surfaces and translucent objects. However, traditional 3D reconstruction with HSI is difficult due to technological limitations of hyperspectral cameras. In recent years, Neural Radiance Fields (NeRFs) have seen widespread success in creating high quality volumetric 3D representations of scenes captured by a variety of camera models. Leveraging recent advances in NeRFs, we propose computing a hyperspectral 3D reconstruction in which every point in space and view direction is characterized by wavelength-dependent radiance and transmittance spectra. To evaluate our approach, a dataset containing nearly 2000 hyperspectral images across 8 scenes and 2 cameras was collected. We perform comparisons against traditional RGB NeRF baselines and apply ablation testing with alternative spectra representations. Finally, we demonstrate the potential of hyperspectral NeRFs for hyperspectral super-resolution and imaging sensor simulation. We show that our hyperspectral NeRF approach enables creating fast, accurate volumetric 3D hyperspectral scenes and enables several new applications and areas for future study.

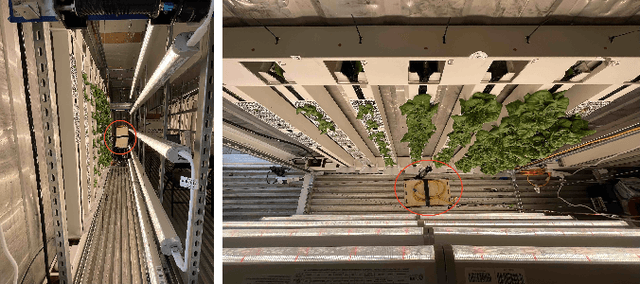

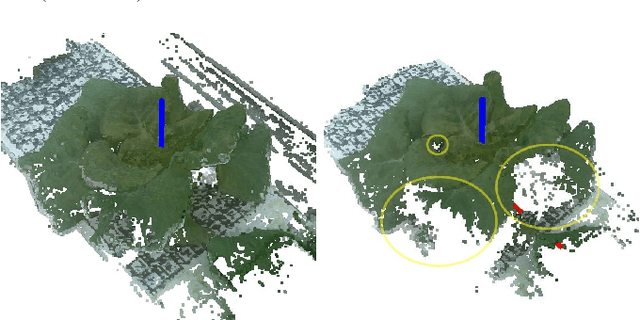

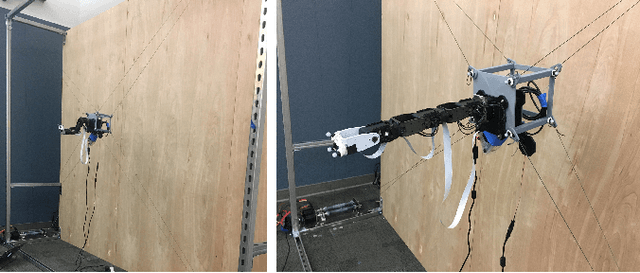

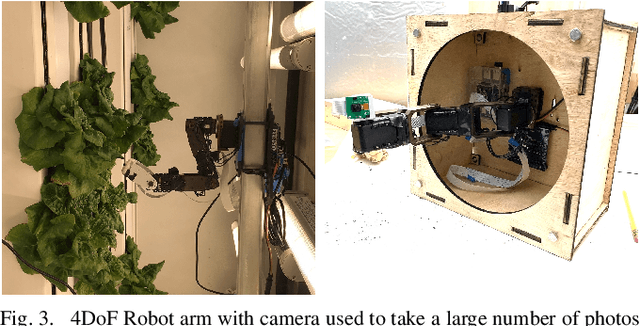

A Hybrid Cable-Driven Robot for Non-Destructive Leafy Plant Monitoring and Mass Estimation using Structure from Motion

Sep 19, 2022

Abstract:We propose a novel hybrid cable-based robot with manipulator and camera for high-accuracy, medium-throughput plant monitoring in a vertical hydroponic farm and, as an example application, demonstrate non-destructive plant mass estimation. Plant monitoring with high temporal and spatial resolution is important to both farmers and researchers to detect anomalies and develop predictive models for plant growth. The availability of high-quality, off-the-shelf structure-from-motion (SfM) and photogrammetry packages has enabled a vibrant community of roboticists to apply computer vision for non-destructive plant monitoring. While existing approaches tend to focus on either high-throughput (e.g. satellite, unmanned aerial vehicle (UAV), vehicle-mounted, conveyor-belt imagery) or high-accuracy/robustness to occlusions (e.g. turn-table scanner or robot arm), we propose a middle-ground that achieves high accuracy with a medium-throughput, highly automated robot. Our design pairs the workspace scalability of a cable-driven parallel robot (CDPR) with the dexterity of a 4 degree-of-freedom (DoF) robot arm to autonomously image many plants from a variety of viewpoints. We describe our robot design and demonstrate it experimentally by collecting daily photographs of 54 plants from 64 viewpoints each. We show that our approach can produce scientifically useful measurements, operate fully autonomously after initial calibration, and produce better reconstructions and plant property estimates than those of over-canopy methods (e.g. UAV). As example applications, we show that our system can successfully estimate plant mass with a Mean Absolute Error (MAE) of 0.586g and, when used to perform hypothesis testing on the relationship between mass and age, produces p-values comparable to ground-truth data (p=0.0020 and p=0.0016, respectively).

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge