Byeong-Yun Ko

DNN based HRIRs Identification with a Continuously Rotating Speaker Array

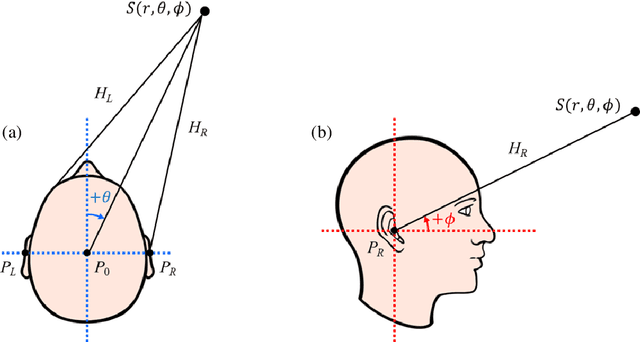

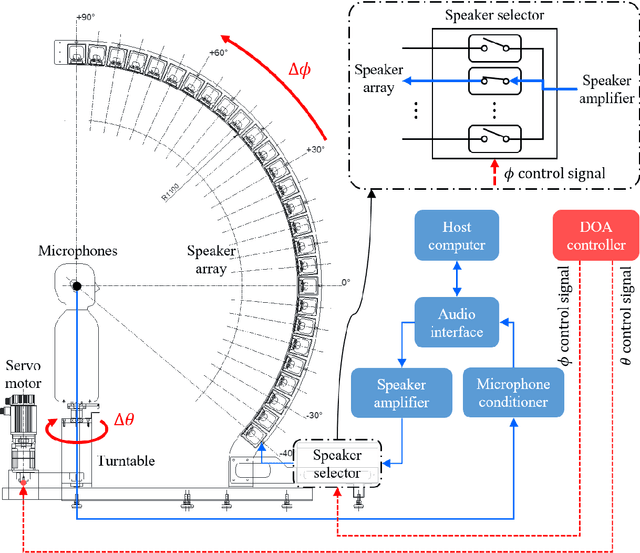

Apr 21, 2025

Abstract:Conventional static measurement of head-related impulse responses (HRIRs) is time-consuming because the speaker array must be repositioned for each azimuth angle. Dynamic approaches using analytical models with a continuously rotating speaker array have been proposed, but their accuracy is significantly reduced at high rotational speeds. To address this limitation, we propose a DNN-based HRIR identification method using sequence-to-sequence learning. The proposed DNN model incorporates fully connected (FC) networks to effectively capture HRIR transitions and includes reset and update gates to identify HRIRs over a whole sequence. The model updates the HRIR coefficient vector based on the gradient of the instantaneous square error (ISE). Additionally, we introduce a learnable normalization process based on the speaker excitation signals to stabilize the gradient scale of the ISE across time. A training scheme, referred to as the whole-sequence updating and optimization scheme, is also introduced to prevent overfitting. We evaluated the proposed method through simulations and experiments. Simulation results using the FABIAN database show that the proposed method outperforms previous analytic models, achieving over 7 dB improvement in normalized misalignment (NM) and maintaining log spectral distortion (LSD) below 2 dB at a rotational speed of 45°/s. Experimental results with a custom-built speaker array confirm that the proposed method preserves accurate sound localization cues, consistent with those from static measurement. Source code is available at https://github.com/byko0810/DNN-based-HRIRs-identification
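As a point of reference for the ISE-gradient update and the NM metric mentioned in the abstract, the following is a minimal sketch of a classical normalized LMS (NLMS) identifier, not the paper's DNN. It assumes a single static impulse response, white-noise excitation, and the common definition of NM as the squared coefficient error relative to the squared norm of the true response, in dB. The function names, step size `mu`, and the toy 4-tap response are hypothetical.

```python
import math
import random


def nlms_identify(x, y, taps, mu=0.5, eps=1e-8):
    """Estimate an FIR response from excitation x and observation y.

    Each step moves the estimate along the negative gradient of the
    instantaneous square error e(n)^2, scaled by the excitation energy.
    """
    h = [0.0] * taps
    buf = [0.0] * taps  # most recent excitation sample first
    for xn, yn in zip(x, y):
        buf = [xn] + buf[:-1]
        e = yn - sum(hk * xk for hk, xk in zip(h, buf))  # a-priori error
        energy = sum(xk * xk for xk in buf) + eps        # normalization term
        h = [hk + mu * e * xk / energy for hk, xk in zip(h, buf)]
    return h


def normalized_misalignment_db(h_true, h_est):
    """NM = 10 log10(||h - h_est||^2 / ||h||^2), floored to avoid log(0)."""
    err = sum((a - b) ** 2 for a, b in zip(h_true, h_est))
    ref = sum(a * a for a in h_true)
    return 10.0 * math.log10(max(err, 1e-30) / ref)


# Toy demonstration with a hypothetical 4-tap response and white noise.
rng = random.Random(0)
h_true = [0.5, -0.3, 0.2, 0.1]
x = [rng.gauss(0.0, 1.0) for _ in range(2000)]
y = [sum(h_true[k] * x[n - k] for k in range(len(h_true)) if n - k >= 0)
     for n in range(len(x))]
h_est = nlms_identify(x, y, taps=len(h_true))
nm_db = normalized_misalignment_db(h_true, h_est)
print(f"NM = {nm_db:.1f} dB")
```

In the rotating-array setting the true response changes from sample to sample, which is where a fixed-step update of this kind loses accuracy; the abstract's learnable normalization and gating are aimed at that case.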

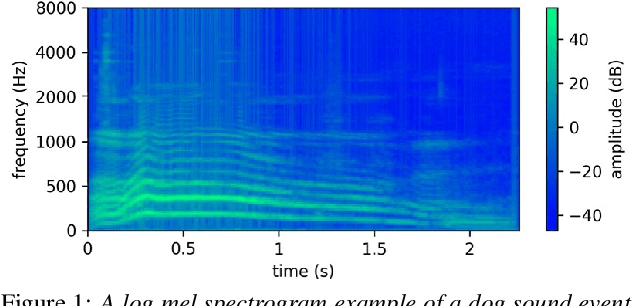

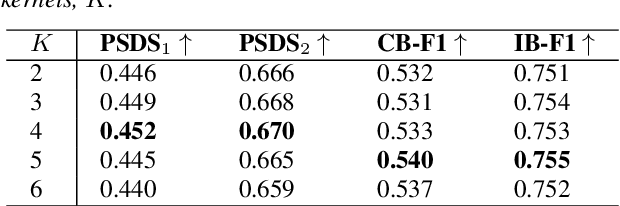

Towards Understanding of Frequency Dependence on Sound Event Detection

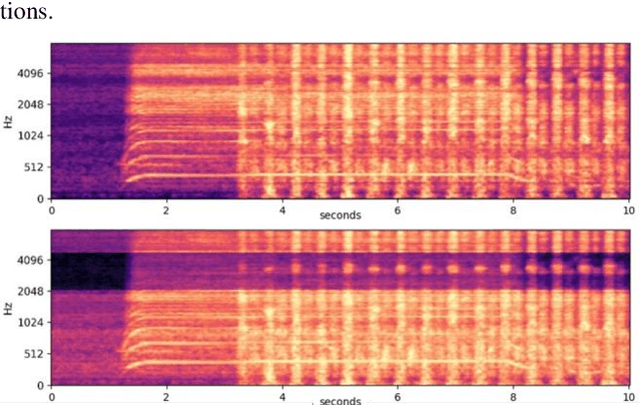

Feb 11, 2025

Abstract:In this work, we apply several analysis methods to frequency-dependent methods for sound event detection (SED) to examine their characteristics and behavior in detail. While SED has advanced rapidly through the adoption of deep learning techniques from other pattern recognition fields, these techniques are often not well suited to SED. To address this issue, two frequency-dependent SED methods were previously proposed: FilterAugment, a data augmentation that randomly weights frequency bands, and frequency dynamic convolution (FDY Conv), an architecture that applies frequency-adaptive convolution kernels. These methods have demonstrated superior performance in SED, and we aim to further analyze their effectiveness and characteristics. We compare class-wise performance to identify the specific pros and cons of FilterAugment and FDY Conv. We apply Gradient-weighted Class Activation Mapping (Grad-CAM), which highlights the time-frequency regions that contribute most to the model's inference, to SED models trained with and without frequency masking and with two types of FilterAugment to observe their detailed characteristics. We propose simpler frequency-dependent convolution methods and compare them with FDY Conv to understand which components of FDY Conv affect SED performance. Lastly, we apply PCA to show how FDY Conv adapts its dynamic kernels across the frequency dimension for different sound event classes. The results and discussion demonstrate that frequency dependency plays a significant role in sound event detection and further confirm the effectiveness of frequency-dependent methods for SED.

Divided spectro-temporal attention for sound event localization and detection in real scenes for DCASE2023 challenge

Jun 05, 2023

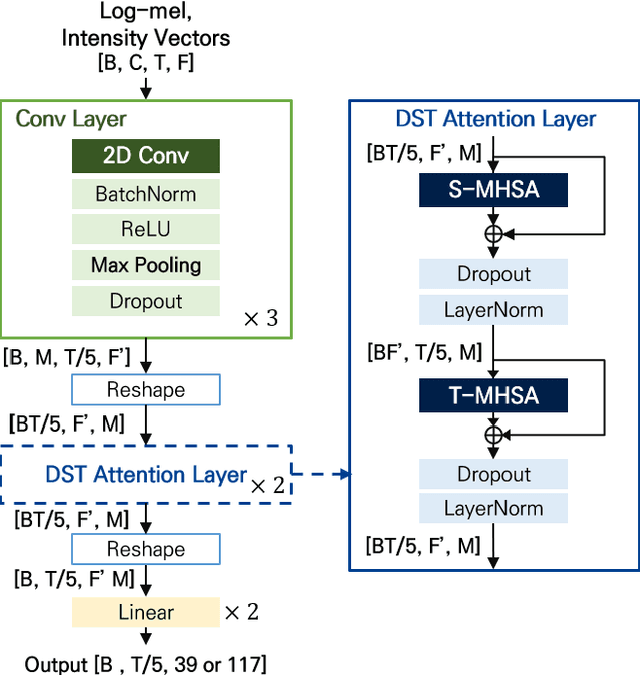

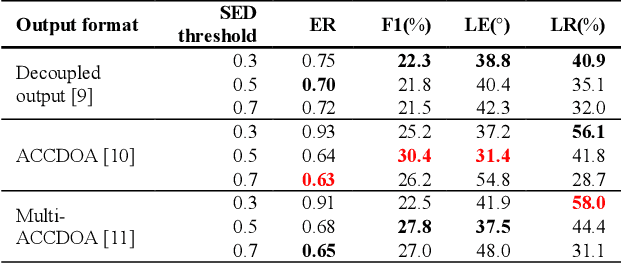

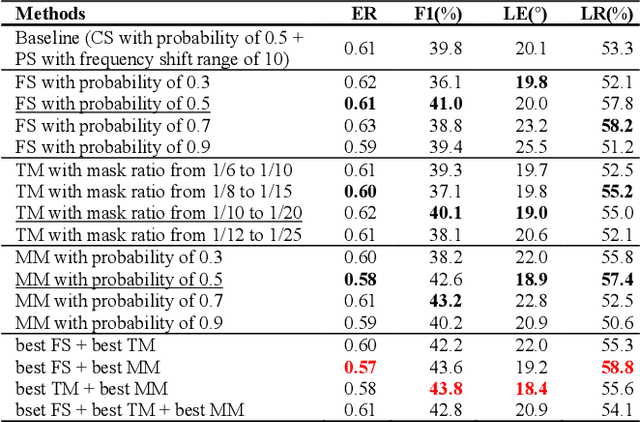

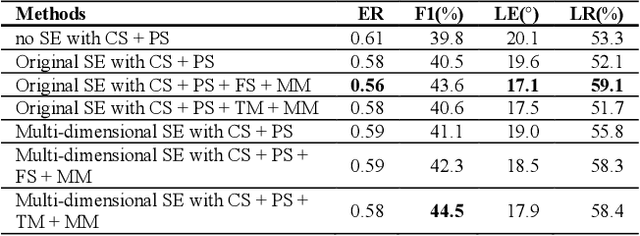

Abstract:Localizing sounds and detecting events in different room environments is a difficult task, mainly due to the wide range of reflections and reverberations. When training neural network models with sounds recorded in only a few room environments, there is a tendency for the models to become overly specialized to those specific environments, resulting in overfitting. To address this overfitting issue, we propose divided spectro-temporal attention. In comparison to the baseline method, which utilizes a convolutional recurrent neural network (CRNN) followed by a temporal multi-head self-attention layer (MHSA), we introduce a separate spectral attention layer that aggregates spectral features prior to the temporal MHSA. To achieve efficient spectral attention, we reduce the frequency pooling size in the convolutional encoder of the baseline to obtain a 3D tensor that incorporates information about frequency, time, and channel. As a result, we can implement spectral attention with channel embeddings, which is not possible in the baseline method dealing with only temporal context in the RNN and MHSA layers. We demonstrate that the proposed divided spectro-temporal attention significantly improves the performance of sound event detection and localization scores for real test data from the STARSS23 development dataset. Additionally, we show that various data augmentations, such as frameshift, time masking, channel swapping, and moderate mix-up, along with the use of external data, contribute to the overall improvement in SELD performance.

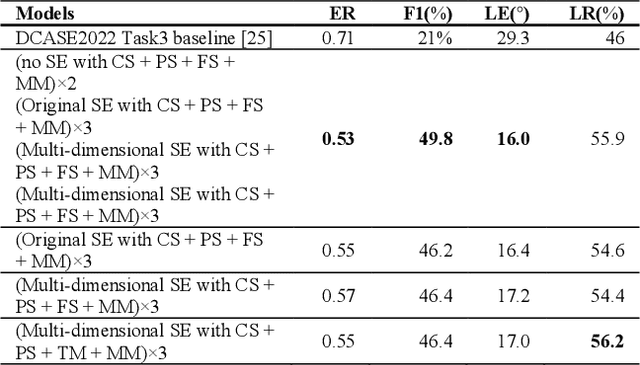

Data Augmentation and Squeeze-and-Excitation Network on Multiple Dimension for Sound Event Localization and Detection in Real Scenes

Jun 24, 2022

Abstract:The performance of sound event localization and detection (SELD) in real scenes is limited by the small size of SELD datasets, owing to the difficulty of obtaining a sufficient amount of realistic multi-channel audio recordings with accurate labels. We used two main strategies to address the problems arising from the small real SELD dataset. First, we applied various data augmentation methods on all data dimensions: channel, frequency, and time. We also propose an original data augmentation method named Moderate Mixup to simulate situations where a noise floor or interfering events exist. Second, we applied Squeeze-and-Excitation blocks on the channel and frequency dimensions to efficiently extract feature characteristics. On the STARSS22 test dataset, our trained models achieved the best ER, F1, LE, and LR of 0.53, 49.8%, 16.0°, and 56.2%, respectively.

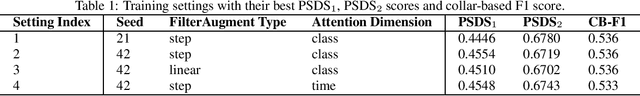

Frequency Dependent Sound Event Detection for DCASE 2022 Challenge Task 4

Jun 23, 2022

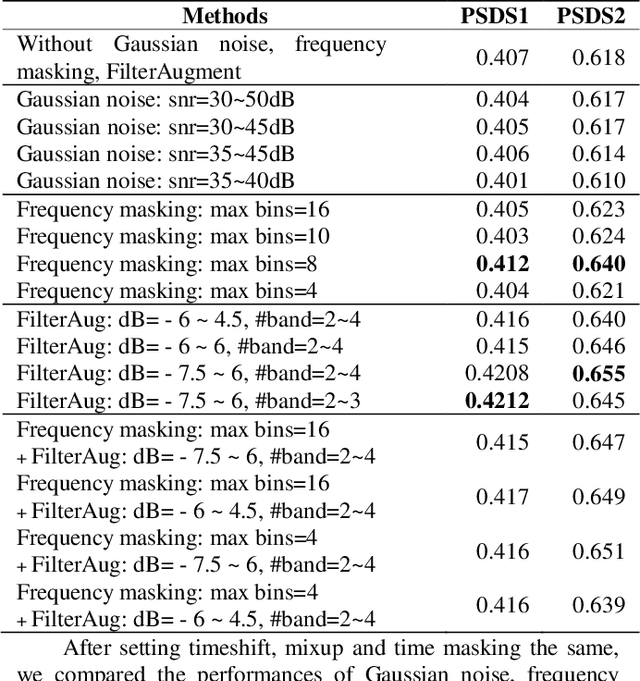

Abstract:While many deep learning methods from other domains have been applied to sound event detection (SED), the differences between the original domains of these methods and SED have not been appropriately considered so far. As SED uses audio data with two dimensions (time and frequency) as input, a thorough understanding of these two dimensions is essential when applying methods from other domains to SED. Previous work showed that methods addressing the frequency dimension are especially powerful in SED. By applying FilterAugment and frequency dynamic convolution, which are frequency-dependent methods proposed to enhance SED performance, our submitted models achieved a best PSDS1 of 0.4704 and a best PSDS2 of 0.8224.

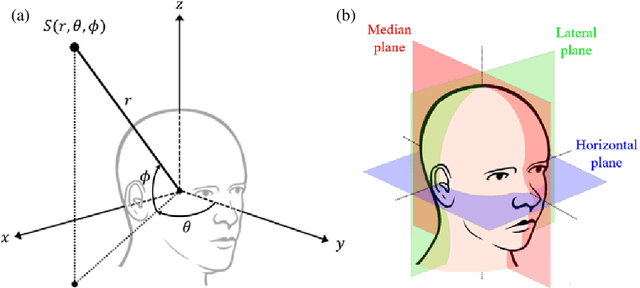

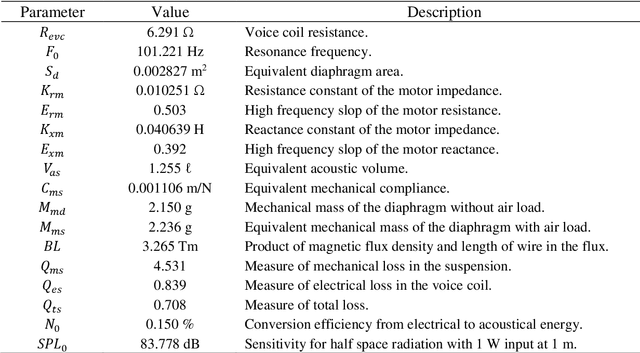

HRTF measurement for accurate sound localization cues

Apr 06, 2022

Abstract:A new database of head-related transfer functions (HRTFs) for accurate sound source localization is presented. It was obtained through precise measurement and post-processing that improve the frequency bandwidth and the causality of head-related impulse responses (HRIRs), yielding accurate spectral cues (SCs) and interaural time differences (ITDs), respectively. The improvement effects of the proposed methods on binaural sound localization cues were investigated. To achieve sufficient frequency bandwidth with a single source, a one-way sealed speaker module was designed to obtain a wide-band frequency response based on electro-acoustics, whereas most existing HRTF databases rely on a two-way vented loudspeaker that has multiple sources. The origin transfer function at the head center was obtained by the proposed measurement scheme using a 0 degree on-axis microphone to ensure an accurate spectral cue pattern of the HRTFs, whereas in previous measurements with a 90 degree off-axis microphone, the magnitude response of the origin transfer function fluctuated and decreased with increasing frequency, causing erroneous SCs of the HRTFs. To prevent discontinuity of the ITD due to non-causality of ipsilateral HRTFs, the obtained HRIRs were circularly shifted by a time delay that accounts for the head radius of the measurement subject. Finally, various sound localization cues such as ITD, interaural level difference (ILD), SC, and horizontal plane directivity (HPD) were derived from the presented HRTFs, and improvements in binaural sound localization cues were examined. As a result, accurate SC patterns of the HRTFs were confirmed through the proposed measurement scheme using the 0 degree on-axis microphone, and continuous ITD patterns were obtained owing to the non-causality compensation. Source code and the presented HRTF database are available to relevant research groups on GitHub (https://github.com/han-saram/HRTF-HATS-KAIST).
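The non-causality compensation described above can be illustrated with a short sketch. It assumes the shift equals the propagation time over one head radius (radius divided by the speed of sound), rounded to whole samples; the abstract does not state the exact rule, so this formula, the function names, and the example values are hypothetical.

```python
SPEED_OF_SOUND = 343.0  # m/s, assumed room-temperature value


def head_delay_samples(head_radius_m, sample_rate_hz, c=SPEED_OF_SOUND):
    """Delay (in samples) for sound to travel one head radius (assumed rule)."""
    return round(head_radius_m / c * sample_rate_hz)


def circular_shift(hrir, shift):
    """Rotate the HRIR to the right by `shift` samples, wrapping around.

    An onset that wrapped to the end of the buffer (negative time) is
    moved back to the start, so the response becomes causal.
    """
    n = len(hrir)
    if n == 0:
        return []
    shift %= n
    return hrir[-shift:] + hrir[:-shift] if shift else list(hrir)


# Hypothetical example: an onset that wrapped into the last two samples.
wrapped = [0.0, 0.0, 0.0, 0.9, 0.4]
delay = head_delay_samples(head_radius_m=0.0875, sample_rate_hz=48000)
causal = circular_shift(wrapped, 2)
```

Applying the same shift to both ears leaves the interaural time difference unchanged, since only a common delay is added.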

Frequency Dynamic Convolution: Frequency-Adaptive Pattern Recognition for Sound Event Detection

Mar 29, 2022

Abstract:2D convolution is widely used in sound event detection (SED) to recognize 2D patterns of sound events in the time-frequency domain. However, 2D convolution enforces translation invariance on sound events along both the time and frequency axes, while sound events exhibit frequency-dependent patterns. To address this physical inconsistency of 2D convolution in SED, we propose frequency dynamic convolution, which applies kernels that adapt to the frequency components of the input. Frequency dynamic convolution outperforms the baseline model by 6.3% on the DESED dataset in terms of polyphonic sound detection score (PSDS). It also significantly outperforms dynamic convolution and temporal dynamic convolution on SED. In addition, by comparing class-wise F1 scores of the baseline model and frequency dynamic convolution, we showed that frequency dynamic convolution is especially effective for detecting non-stationary sound events. From this result, we verified that frequency dynamic convolution is superior in recognizing frequency-dependent patterns, as non-stationary sound events show more intricate time-frequency patterns.
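The idea of a kernel that adapts to frequency can be sketched in a few lines. The version below is a simplified single-channel illustration, not the authors' implementation: it assumes the effective kernel at each frequency bin is a softmax-weighted mix of a few basis kernels, and it replaces the learned attention branch with a hypothetical per-basis affine function (`att_scale`, `att_bias`) of the time-averaged input at that bin.

```python
import math


def conv2d_same(x, kernel):
    """Zero-padded 2D correlation of x[f][t] with a small odd-sized kernel."""
    n_f, n_t = len(x), len(x[0])
    k_f, k_t = len(kernel), len(kernel[0])
    pad_f, pad_t = k_f // 2, k_t // 2
    out = [[0.0] * n_t for _ in range(n_f)]
    for f in range(n_f):
        for t in range(n_t):
            acc = 0.0
            for i in range(k_f):
                for j in range(k_t):
                    ff, tt = f + i - pad_f, t + j - pad_t
                    if 0 <= ff < n_f and 0 <= tt < n_t:
                        acc += kernel[i][j] * x[ff][tt]
            out[f][t] = acc
    return out


def softmax(scores):
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def frequency_dynamic_conv(x, basis_kernels, att_scale, att_bias):
    """Mix basis-kernel outputs with weights that differ per frequency bin."""
    n_t = len(x[0])
    basis_out = [conv2d_same(x, k) for k in basis_kernels]
    out = []
    for f, row in enumerate(x):
        pooled = sum(row) / n_t  # time-average pooling at this frequency bin
        # Hypothetical stand-in for the learned attention branch.
        weights = softmax([a * pooled + b
                           for a, b in zip(att_scale, att_bias)])
        out.append([sum(w * y[f][t] for w, y in zip(weights, basis_out))
                    for t in range(n_t)])
    return out
```

Because the weights sum to one at every bin, the layer reduces to an ordinary 2D convolution when all basis kernels are identical; the frequency dependence comes entirely from how the weights vary across bins.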

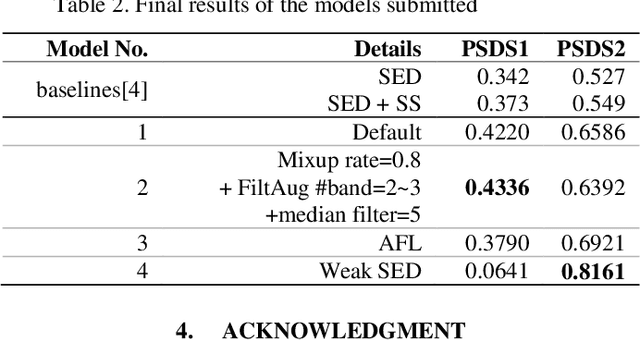

Heavily Augmented Sound Event Detection utilizing Weak Predictions

Jul 20, 2021

Abstract:The performance of Sound Event Detection (SED) systems is greatly limited by the difficulty of generating a large strongly labeled dataset. In this work, we used two main approaches to overcome the lack of strongly labeled data. First, we applied heavy data augmentation to the input features. The data augmentation methods used include not only conventional methods from the speech/audio domains but also our proposed method named FilterAugment. Second, we propose two methods that utilize weak predictions to enhance weakly supervised SED performance. As a result, we obtained the best PSDS1 of 0.4336 and best PSDS2 of 0.8161 on the DESED real validation dataset. This work was submitted to DCASE 2021 Task 4 and ranked 3rd. Code available: https://github.com/frednam93/FilterAugSED.
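To make the idea behind FilterAugment concrete, here is a minimal sketch of a step-type frequency-band weighting on a log-mel spectrogram. It is an illustration under stated assumptions, not the code from the linked repository: the function name, the band-count range, and the ±6 dB gain range are hypothetical, and the spectrogram is assumed to be stored in dB as `log_mel_db[frequency][time]`, so that adding a gain corresponds to scaling the band's energy.

```python
import random


def filter_augment(log_mel_db, n_bands=(2, 5), db_range=(-6.0, 6.0), rng=None):
    """Add a random gain (in dB) to each of several random frequency bands."""
    rng = rng or random.Random()
    n_freq = len(log_mel_db)
    # Number of bands, limited so that every band holds at least one bin.
    k = min(rng.randint(*n_bands), n_freq)
    # k - 1 distinct interior boundaries split the bins into k bands.
    cuts = sorted(rng.sample(range(1, n_freq), k - 1))
    edges = [0] + cuts + [n_freq]
    out = []
    for lo, hi in zip(edges[:-1], edges[1:]):
        gain = rng.uniform(*db_range)  # one gain per band
        for f in range(lo, hi):
            out.append([v + gain for v in log_mel_db[f]])
    return out


# Usage on a hypothetical 8-bin, 3-frame spectrogram of zeros (0 dB).
spec = [[0.0] * 3 for _ in range(8)]
augmented = filter_augment(spec, rng=random.Random(1))
```

The input is left unmodified and a new spectrogram is returned, so the same clip can be augmented differently on each training pass.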
