Jung-Woo Choi

Sound Separation and Classification with Object and Semantic Guidance

Sep 19, 2025

Abstract: The spatial semantic segmentation task focuses on separating and classifying sound objects from multichannel signals. To achieve these two goals, conventional methods fine-tune a large classification model cascaded with the separation model and inject classified labels as separation clues for the next iteration step. However, such integration is not ideal for three reasons: fine-tuning over a smaller dataset loses the diversity of large classification models, features from the source separation model differ from the inputs of the pretrained classifier, and injected one-hot class labels lack semantic depth, often leading to error propagation. To resolve these issues, we propose a Dual-Path Classifier (DPC) architecture that combines object features from a source separation model with semantic representations acquired from a pretrained classification model without fine-tuning. We also introduce a Semantic Clue Encoder (SCE) that enriches the semantic depth of injected clues. Our system achieves a state-of-the-art 11.19 dB CA-SDRi and enhanced semantic fidelity on the DCASE 2025 Task 4 evaluation set, surpassing the top-ranked performance of 11.00 dB. These results highlight the effectiveness of integrating separator-derived features and rich semantic clues.

DISPatch: Distilling Selective Patches for Speech Enhancement

Sep 19, 2025

Abstract: In speech enhancement, knowledge distillation (KD) compresses models by transferring a high-capacity teacher's knowledge to a compact student. However, conventional KD methods train the student to mimic the teacher's output entirely. This forces the student to imitate regions where the teacher performs poorly and applies distillation to regions where the student already performs well, yielding only marginal gains. We propose Distilling Selective Patches (DISPatch), a KD framework for speech enhancement that applies the distillation loss to spectrogram patches where the teacher outperforms the student, as determined by a Knowledge Gap Score. This approach guides optimization toward areas with the most significant potential for student improvement while minimizing the influence of regions where the teacher may provide unreliable instruction. Furthermore, we introduce Multi-Scale Selective Patches (MSSP), a frequency-dependent method that uses different patch sizes across low- and high-frequency bands to account for spectral heterogeneity. We incorporate DISPatch into conventional KD methods and observe consistent gains in compact students. Moreover, integrating DISPatch and MSSP into a state-of-the-art frequency-dependent KD method considerably improves performance across all metrics.
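The patch-selection idea in this abstract can be illustrated with a minimal NumPy sketch. It is not the authors' implementation: the abstract does not define the Knowledge Gap Score, so the score below (per-patch student error minus per-patch teacher error against the clean target) is an assumption, as are the function name `selective_patch_kd_loss`, the fixed patch size, and the use of squared error.

```python
import numpy as np

def selective_patch_kd_loss(student, teacher, clean, patch=(4, 4)):
    """Distillation loss restricted to patches where the teacher beats the student.

    All inputs are magnitude spectrograms of shape (freq, time). The gap score
    (student error minus teacher error per patch) is an assumed stand-in for
    the Knowledge Gap Score, which the abstract does not define.
    """
    pf, pt = patch
    n_f, n_t = student.shape
    # Crop so the spectrogram tiles evenly into non-overlapping patches.
    n_f, n_t = n_f - n_f % pf, n_t - n_t % pt
    s, t, c = (x[:n_f, :n_t] for x in (student, teacher, clean))

    def patch_mean(err):
        # Average a (freq, time) error map within each patch.
        return err.reshape(n_f // pf, pf, n_t // pt, pt).mean(axis=(1, 3))

    student_err = patch_mean((s - c) ** 2)
    teacher_err = patch_mean((t - c) ** 2)
    gap = student_err - teacher_err   # positive where the teacher is better
    mask = gap > 0                    # patches selected for distillation
    kd_err = patch_mean((s - t) ** 2) # student-vs-teacher mismatch per patch
    if not mask.any():
        return 0.0, mask
    return float(kd_err[mask].mean()), mask
```

A frequency-dependent variant in the spirit of MSSP could call this function separately on low- and high-frequency bands with different `patch` sizes, though the band split and sizes would again be assumptions.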

CST-former: Multidimensional Attention-based Transformer for Sound Event Localization and Detection in Real Scenes

Apr 17, 2025

Abstract: Sound event localization and detection (SELD) is a task for the classification of sound events and the identification of direction of arrival (DoA) utilizing multichannel acoustic signals. For effective classification and localization, a channel-spectro-temporal transformer (CST-former) was previously proposed. CST-former employs multidimensional attention mechanisms across the spatial, spectral, and temporal domains to enlarge the model's capacity to learn the domain information essential for event detection and DoA estimation over time. In this work, we present an enhanced version of CST-former with multiscale unfolded local embedding (MSULE), developed to capture and aggregate domain information over multiple time-frequency scales. We also propose fine-tuning and post-processing techniques beneficial for conducting the SELD task over limited training datasets. In-depth ablation studies of the proposed architecture and detailed analysis of the proposed modules are carried out to validate the efficacy of multidimensional attention on the SELD task. Experiments on the STARSS22 and STARSS23 datasets demonstrate the remarkable performance of CST-former and the post-processing techniques without using external data.

3D Room Geometry Inference from Multichannel Room Impulse Response using Deep Neural Network

Jan 19, 2024

Abstract: Room geometry inference (RGI) aims at estimating room shapes from measured room impulse responses (RIRs) and has received considerable attention for its importance in environment-aware audio rendering and virtual acoustic representation of a real venue. Many estimation models utilizing time difference of arrival (TDoA) or time of arrival (ToA) information in RIRs have been proposed. However, an estimation model should be able to handle more general features and complex relations between reflections to cope with various room shapes and uncertainties such as the unknown number of walls. In this study, we propose a deep neural network that can estimate various room shapes without prior assumptions on the shape or number of walls. The proposed model consists of three sub-networks: feature extraction, parameter estimation, and evaluation networks, which extract key features from RIRs, estimate parameters, and evaluate the confidence of estimated parameters, respectively. The network is trained on about 40,000 RIRs simulated in rooms of different shapes using a single source and a spherical microphone array, and is tested on rooms of unseen shapes and dimensions. The proposed algorithm achieves almost perfect accuracy in finding the true number of walls and shows negligible errors in room shapes.

* 5 pages, 2 figures, Proceedings of the 24th International Congress on Acoustics

CST-former: Transformer with Channel-Spectro-Temporal Attention for Sound Event Localization and Detection

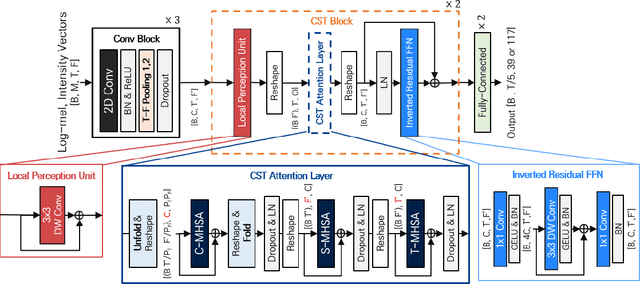

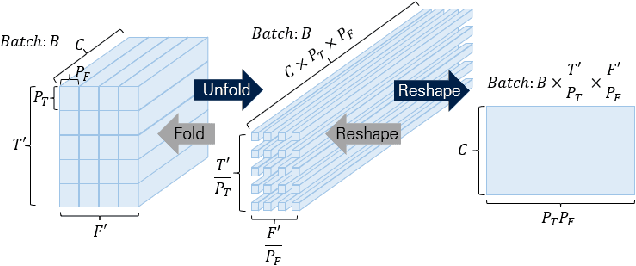

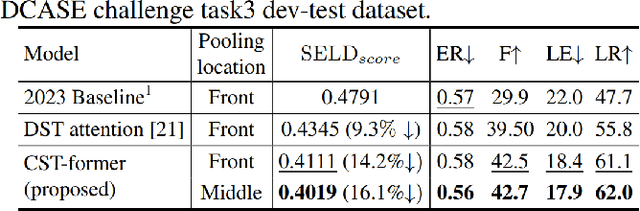

Dec 20, 2023

Abstract: Sound event localization and detection (SELD) is a task for the classification of sound events and the localization of direction of arrival (DoA) utilizing multichannel acoustic signals. Prior studies employ spectral and channel information as the embedding for temporal attention. However, this usage limits the deep neural network from extracting meaningful features from the spectral or spatial domains. We therefore present a novel framework termed the Channel-Spectro-Temporal Transformer (CST-former) that bolsters SELD performance through the independent application of attention mechanisms to distinct domains. The CST-former architecture employs distinct attention mechanisms to independently process channel, spectral, and temporal information. In addition, we propose an unfolded local embedding (ULE) technique for channel attention (CA) to generate informative embedding vectors including local spectral and temporal information. Experiments on the 2022 and 2023 DCASE Challenge Task 3 datasets affirm the efficacy of employing attention mechanisms separated across each domain and the benefit of ULE in enhancing SELD performance.

EchoScan: Scanning Complex Indoor Geometries via Acoustic Echoes

Oct 18, 2023

Abstract: Accurate estimation of indoor space geometries is vital for constructing precise digital twins, whose broad industrial applications include navigation in unfamiliar environments and efficient evacuation planning, particularly in low-light conditions. This study introduces EchoScan, a deep neural network model that utilizes acoustic echoes to perform room geometry inference. Conventional sound-based techniques rely on estimating geometry-related room parameters such as wall position and room size, thereby limiting the diversity of inferable room geometries. In contrast, EchoScan overcomes this limitation by directly inferring room floorplans and heights, thereby enabling it to handle rooms with arbitrary shapes, including curved walls. The key innovation of EchoScan is its ability to analyze the complex relationship between low- and high-order reflections in room impulse responses (RIRs) using a multi-aggregation module. The analysis of high-order reflections also enables it to infer complex room shapes when echoes are unobservable from the position of an audio device. EchoScan was trained and evaluated using RIRs synthesized from complex environments, including the Manhattan and Atlanta layouts, employing a practical audio device configuration compatible with commercial, off-the-shelf devices. Compared with vision-based methods, EchoScan demonstrated outstanding geometry estimation performance in rooms with various shapes.

Noisy-ArcMix: Additive Noisy Angular Margin Loss Combined With Mixup Anomalous Sound Detection

Oct 10, 2023

Abstract: Unsupervised anomalous sound detection (ASD) aims to identify anomalous sounds by learning the features of normal operational sounds and sensing their deviations. Recent approaches have focused on the self-supervised task utilizing the classification of normal data, and advanced models have shown that securing representation space for anomalous data is important through representation learning yielding compact intra-class and well-separated inter-class distributions. However, we show that conventional approaches often fail to ensure sufficient intra-class compactness and exhibit angular disparity between samples and their corresponding centers. In this paper, we propose a training technique aimed at ensuring intra-class compactness and increasing the angle gap between normal and abnormal samples. Furthermore, we present an architecture that extracts features for important temporal regions, enabling the model to learn which time frames should be emphasized or suppressed. Experimental results demonstrate that the proposed method achieves the best performance, giving 0.90%, 0.83%, and 2.16% improvements in terms of AUC, pAUC, and mAUC, respectively, compared to the state-of-the-art method on the DCASE 2020 Challenge Task 2 dataset.
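As a rough illustration of how an additive angular margin can be combined with mixup, the following NumPy sketch applies a generic ArcFace-style margin to each of the two mixed labels and weights the resulting losses by the mixup coefficient. It is not the paper's exact Noisy-ArcMix loss: the function names, the `scale` and `margin` defaults, and the choice to apply the margin to both labels are assumptions, and the mixed embedding is taken as given rather than produced by a network.

```python
import numpy as np

def arc_margin_logits(embeddings, centers, labels, scale=30.0, margin=0.5):
    """ArcFace-style logits: add an angular margin to the target-class angle."""
    e = embeddings / np.linalg.norm(embeddings, axis=1, keepdims=True)
    w = centers / np.linalg.norm(centers, axis=1, keepdims=True)
    cos = np.clip(e @ w.T, -1.0, 1.0)          # cosine to every class center
    theta = np.arccos(cos)
    onehot = np.eye(centers.shape[0])[labels]  # margin only on the target class
    return scale * np.cos(theta + margin * onehot)

def cross_entropy(logits, labels):
    """Per-sample softmax cross-entropy with a stable log-sum-exp."""
    z = logits - logits.max(axis=1, keepdims=True)
    log_prob = z - np.log(np.exp(z).sum(axis=1, keepdims=True))
    return -log_prob[np.arange(len(labels)), labels]

def mixup_margin_loss(emb_mixed, centers, labels_a, labels_b, lam, **kw):
    """Mixup-weighted sum of the margin losses for the two source labels.

    emb_mixed is assumed to be the embedding of lam * x_a + (1 - lam) * x_b.
    """
    loss_a = cross_entropy(arc_margin_logits(emb_mixed, centers, labels_a, **kw), labels_a)
    loss_b = cross_entropy(arc_margin_logits(emb_mixed, centers, labels_b, **kw), labels_b)
    return float((lam * loss_a + (1 - lam) * loss_b).mean())
```

Because the margin lowers the target-class logit, a sample must sit closer in angle to its class center to reach the same loss, which is one way to encourage the intra-class compactness the abstract describes.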

RGI-Net: 3D Room Geometry Inference from Room Impulse Responses in the Absence of First-order Echoes

Sep 04, 2023

Abstract: Room geometry is important prior information for implementing realistic 3D audio rendering. For this reason, various room geometry inference (RGI) methods have been developed by utilizing the time of arrival (TOA) or time difference of arrival (TDOA) information in room impulse responses. However, conventional RGI techniques impose several assumptions, such as convex room shapes, a number of walls known a priori, and the visibility of first-order reflections. In this work, we introduce a deep neural network (DNN), RGI-Net, which can estimate room geometries without the aforementioned assumptions. RGI-Net learns and exploits complex relationships between high-order reflections in room impulse responses (RIRs) and thus can estimate room shapes even when the shape is non-convex or first-order reflections are missing in the RIRs. The network takes RIRs measured from a compact audio device equipped with a circular microphone array and a single loudspeaker, which greatly improves its practical applicability. RGI-Net includes an evaluation network that separately evaluates the presence probability of walls, so geometry inference is possible without prior knowledge of the number of walls.

DeFTAN-II: Efficient Multichannel Speech Enhancement with Subgroup Processing

Aug 30, 2023

Abstract: In this work, we present DeFTAN-II, an efficient multichannel speech enhancement model based on transformer architecture and subgroup processing. Despite the success of transformers in speech enhancement, they face challenges in capturing local relations, reducing the high computational complexity, and lowering memory usage. To address these limitations, we introduce subgroup processing in our model, combining subgroups of locally emphasized features with other subgroups containing original features. The subgroup processing is implemented in several blocks of the proposed network. In the proposed split dense blocks extracting spatial features, a pair of subgroups is sequentially concatenated and processed by convolution layers to effectively reduce the computational complexity and memory usage. For the F- and T-transformers extracting spectral and temporal relations, we introduce cross-attention between subgroups to identify relationships between locally emphasized and non-emphasized features. The dual-path feedforward network then aggregates attended features in terms of the gating of local features processed by dilated convolutions. Through extensive comparisons with state-of-the-art multichannel speech enhancement models, we demonstrate that DeFTAN-II with subgroup processing outperforms existing methods at significantly lower computational complexity. Moreover, we evaluate the model's generalization capability on real-world data without fine-tuning, which further demonstrates its effectiveness in practical scenarios.

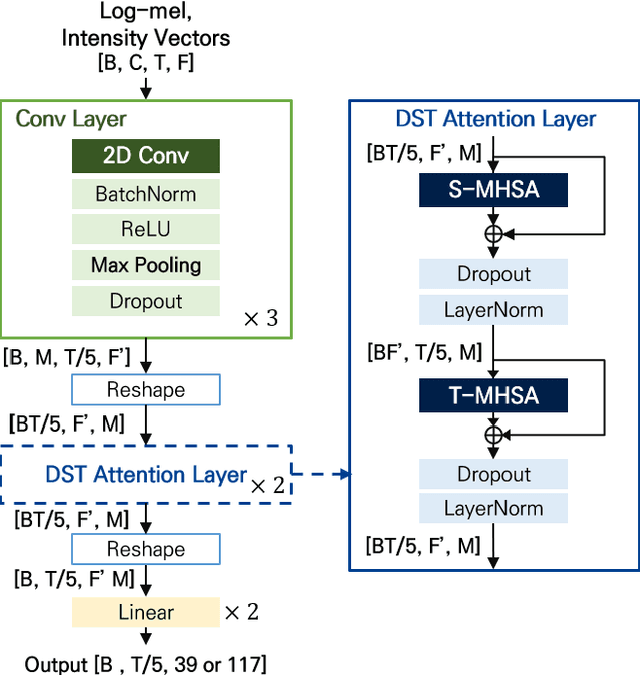

Divided spectro-temporal attention for sound event localization and detection in real scenes for DCASE2023 challenge

Jun 05, 2023

Abstract: Localizing sounds and detecting events in different room environments is a difficult task, mainly due to the wide range of reflections and reverberations. When training neural network models with sounds recorded in only a few room environments, there is a tendency for the models to become overly specialized to those specific environments, resulting in overfitting. To address this overfitting issue, we propose divided spectro-temporal attention. In comparison to the baseline method, which utilizes a convolutional recurrent neural network (CRNN) followed by a temporal multi-head self-attention layer (MHSA), we introduce a separate spectral attention layer that aggregates spectral features prior to the temporal MHSA. To achieve efficient spectral attention, we reduce the frequency pooling size in the convolutional encoder of the baseline to obtain a 3D tensor that incorporates information about frequency, time, and channel. As a result, we can implement spectral attention with channel embeddings, which is not possible in the baseline method dealing with only temporal context in the RNN and MHSA layers. We demonstrate that the proposed divided spectro-temporal attention significantly improves the performance of sound event detection and localization scores for real test data from the STARSS23 development dataset. Additionally, we show that various data augmentations, such as frameshift, time masking, channel swapping, and moderate mix-up, along with the use of external data, contribute to the overall improvement in SELD performance.
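The ordering described in this abstract, spectral attention over a (time, frequency, channel) tensor followed by temporal attention, can be sketched in NumPy as below. This is only an illustration under stated assumptions: learned query/key/value projections, multiple heads, and the CRNN encoder are omitted, and flattening frequency and channel into one embedding for the temporal step is a guess at how the two stages connect rather than a detail given in the abstract.

```python
import numpy as np

def self_attention(x):
    """Unparameterized scaled dot-product self-attention over axis -2.

    x has shape (..., length, channels). Learned projections and multiple
    heads are left out to keep the sketch short.
    """
    scores = x @ np.swapaxes(x, -1, -2) / np.sqrt(x.shape[-1])
    scores = scores - scores.max(axis=-1, keepdims=True)  # numerical stability
    weights = np.exp(scores)
    weights = weights / weights.sum(axis=-1, keepdims=True)
    return weights @ x

def divided_spectro_temporal_attention(feat):
    """feat: (time, freq, channels) tensor from a convolutional encoder."""
    n_t, n_f, n_c = feat.shape
    # Spectral attention: within each time frame, frequency bins attend to
    # each other using the channel axis as the embedding.
    spec = self_attention(feat)                        # (T, F, C)
    # Temporal attention: time frames attend to each other, with frequency
    # and channel flattened into a single embedding (an assumption).
    temp = self_attention(spec.reshape(n_t, n_f * n_c))
    return temp.reshape(n_t, n_f, n_c)
```

For example, `divided_spectro_temporal_attention(np.random.default_rng(0).normal(size=(50, 16, 64)))` returns a tensor with the same (50, 16, 64) shape as its input.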
