Seungchul Lee

EchoScan: Scanning Complex Indoor Geometries via Acoustic Echoes

Oct 18, 2023

Abstract: Accurate estimation of indoor space geometries is vital for constructing precise digital twins, whose broad industrial applications include navigation in unfamiliar environments and efficient evacuation planning, particularly in low-light conditions. This study introduces EchoScan, a deep neural network model that uses acoustic echoes to perform room geometry inference. Conventional sound-based techniques rely on estimating geometry-related room parameters such as wall position and room size, which limits the diversity of room geometries they can infer. In contrast, EchoScan directly infers room floorplans and heights, enabling it to handle rooms with arbitrary shapes, including curved walls. The key innovation of EchoScan is its ability to analyze the complex relationship between low- and high-order reflections in room impulse responses (RIRs) using a multi-aggregation module. The analysis of high-order reflections also enables it to infer complex room shapes when echoes are unobservable from the position of an audio device. EchoScan was trained and evaluated using RIRs synthesized from complex environments, including the Manhattan and Atlanta layouts, employing a practical audio device configuration compatible with commercial, off-the-shelf devices. Compared with vision-based methods, EchoScan demonstrated outstanding geometry estimation performance in rooms with various shapes.

Ensemble Transfer Learning of Elastography and B-mode Breast Ultrasound Images

Feb 17, 2021

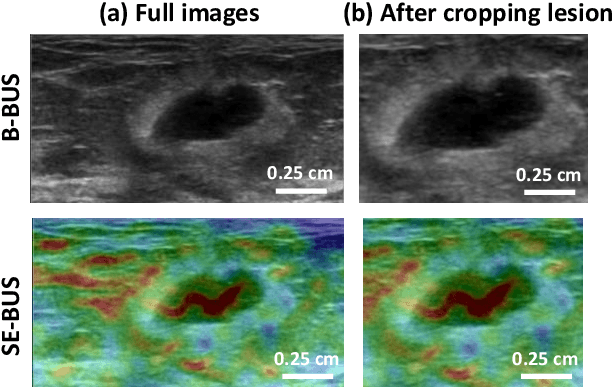

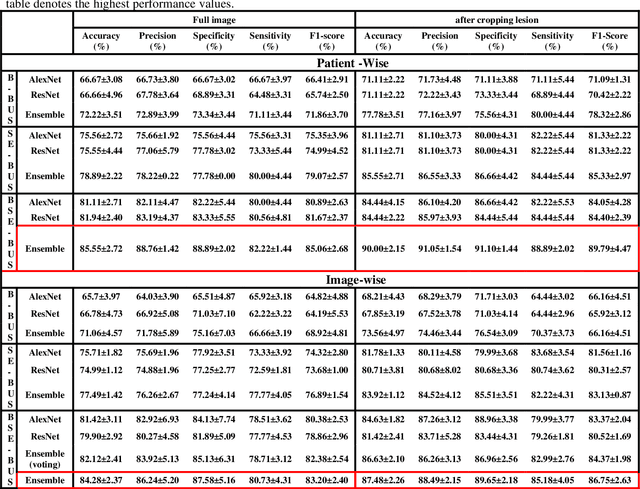

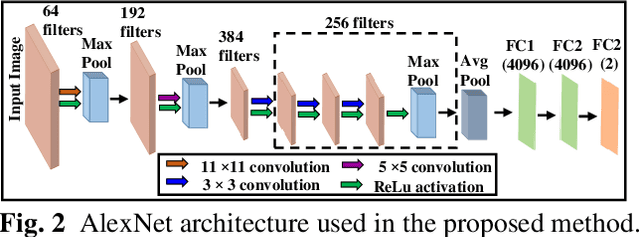

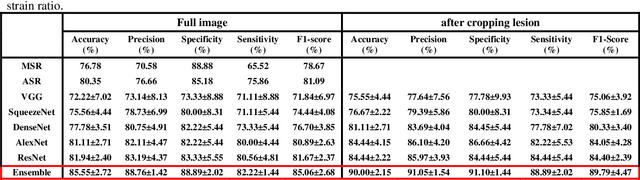

Abstract: Computer-aided detection (CAD) of benign and malignant breast lesions is becoming increasingly essential in breast ultrasound (US) imaging. CAD systems rely on imaging features identified by medical experts for their performance, whereas deep learning (DL) methods automatically extract features from the data. The challenge for DL is the scarcity of breast US images available to train the models. Here, we present an ensemble transfer learning model to classify benign and malignant breast tumors using B-mode breast US (B-US) and strain elastography breast US (SE-US) images. This model combines semantic features from AlexNet and ResNet models to distinguish benign from malignant tumors. We use both B-US and SE-US images to train the model and classify the tumors. We retrospectively gathered data from 85 patients, with 42 benign and 43 malignant cases confirmed by biopsy. Each patient had multiple B-US images and corresponding SE-US images, and the total dataset contained 261 B-US images and 261 SE-US images. Experimental results show that our ensemble model achieves a sensitivity of 88.89% and a specificity of 91.10%. This diagnostic performance is equivalent to or better than manual identification. Thus, our proposed ensemble learning method would facilitate the early detection of breast cancer, reliably improving patient care.
