Ensemble Transfer Learning of Elastography and B-mode Breast Ultrasound Images

Feb 17, 2021

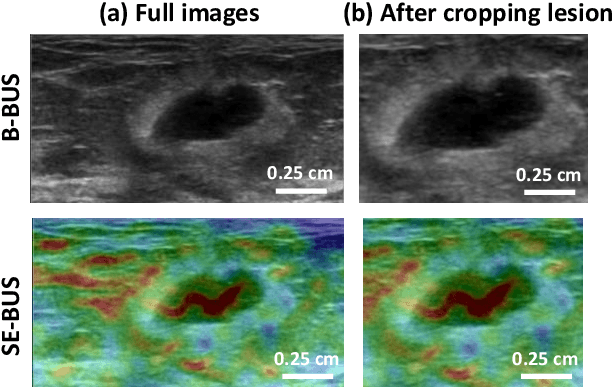

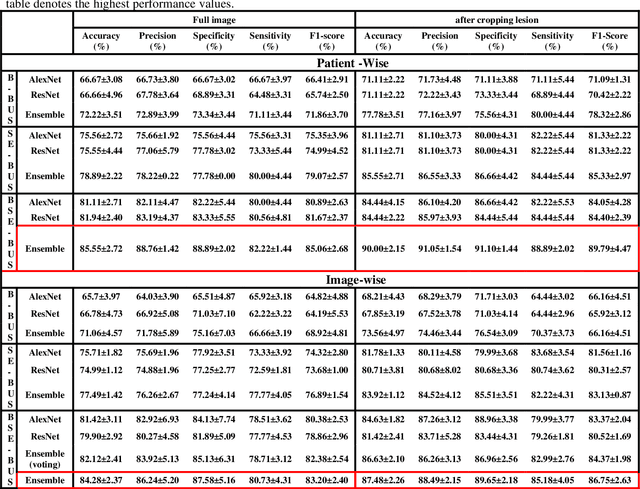

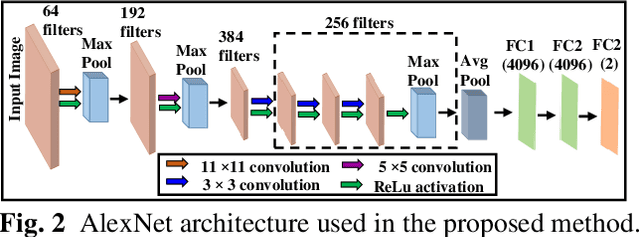

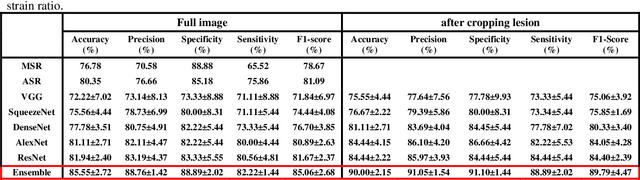

Computer-aided detection (CAD) of benign and malignant breast lesions is becoming increasingly essential in breast ultrasound (US) imaging. Conventional CAD systems depend on imaging features identified by medical experts, whereas deep learning (DL) methods extract features automatically from the data. A key challenge for DL is the scarcity of breast US images available for training. Here, we present an ensemble transfer learning model that classifies breast tumors as benign or malignant using B-mode breast US (B-US) and strain elastography breast US (SE-US) images. The model combines semantic features from AlexNet and ResNet models, and both B-US and SE-US images are used for training and for classification. We retrospectively gathered data from 85 patients, comprising 42 benign and 43 malignant cases confirmed by biopsy. Each patient had multiple B-US images with corresponding SE-US images, and the full dataset contained 261 B-US images and 261 SE-US images. Experimental results show that our ensemble model achieves a sensitivity of 88.89% and a specificity of 91.10%. This diagnostic performance is equivalent to or better than manual identification. Thus, our proposed ensemble learning method would facilitate early detection of breast cancer and reliably improve patient care.
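For readers less familiar with the two metrics reported above, the following is a minimal sketch of how sensitivity and specificity are computed from binary predictions. It is not the authors' evaluation code: the function name `sensitivity_specificity`, the label encoding (1 = malignant, 0 = benign), and the example labels are all assumptions made for illustration, and the numbers are not taken from the study.

```python
from typing import Sequence, Tuple


def sensitivity_specificity(
    y_true: Sequence[int], y_pred: Sequence[int]
) -> Tuple[float, float]:
    """Return (sensitivity, specificity) for binary labels.

    Assumed encoding (not from the paper): 1 = malignant, 0 = benign.
    """
    if len(y_true) != len(y_pred):
        raise ValueError("y_true and y_pred must have the same length")

    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == 1 and p == 1)  # malignant, flagged
    fn = sum(1 for t, p in pairs if t == 1 and p == 0)  # malignant, missed
    tn = sum(1 for t, p in pairs if t == 0 and p == 0)  # benign, cleared
    fp = sum(1 for t, p in pairs if t == 0 and p == 1)  # benign, flagged

    if tp + fn == 0 or tn + fp == 0:
        raise ValueError("both classes must be present in y_true")

    sensitivity = tp / (tp + fn)  # fraction of malignant cases detected
    specificity = tn / (tn + fp)  # fraction of benign cases correctly cleared
    return sensitivity, specificity


# Illustrative labels only; these are NOT data from the study.
y_true = [1, 1, 1, 1, 0, 0, 0, 0, 0]
y_pred = [1, 1, 1, 0, 0, 0, 0, 0, 1]
sens, spec = sensitivity_specificity(y_true, y_pred)
print(f"sensitivity={sens:.2%}, specificity={spec:.2%}")
```

Sensitivity measures how many biopsy-confirmed malignant cases the classifier catches, while specificity measures how many benign cases it correctly leaves unflagged, so the two are reported together to show both kinds of error.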
