Bokyeung Lee

Single Cell Training on Architecture Search for Image Denoising

Dec 13, 2022

Abstract:Neural Architecture Search (NAS) for automatically finding the optimal network architecture has shown some success, with competitive performance in various computer vision tasks. However, NAS in general requires a tremendous amount of computation, so reducing the computational cost has emerged as an important issue. Most attempts so far have been based on manual approaches, and the architectures developed from such efforts often compromise between network optimality and search cost. Additionally, recent NAS methods for image restoration generally do not consider dynamic operations that may transform the dimensions of feature maps, because of the dimensionality mismatch in tensor calculations. This can greatly limit NAS in its search for an optimal network structure. To address these issues, we re-frame the optimal search problem by focusing on the component block level. Previous work has shown that an effective denoising block can be connected in series to further improve network performance. By focusing on the block level, the search space of reinforcement learning becomes significantly smaller and the evaluation process can be conducted more rapidly. In addition, we integrate innovative dimension matching modules for dealing with the spatial and channel-wise mismatch that may occur in the optimal design search. This allows much flexibility in the optimal network search within the cell block. With these modules, we then employ reinforcement learning to search for an optimal image denoising network at the module level. The computational efficiency of our proposed Denoising Prior Neural Architecture Search (DPNAS) was demonstrated by having it complete an optimal architecture search for an image restoration task in just one day with a single GPU.
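To make the idea of dimension matching concrete, the following is a minimal sketch and not the DPNAS modules themselves, whose design the abstract does not specify. It assumes a feature map stored as a nested `[channels][height][width]` list, and it swaps in nearest-neighbour resampling for the spatial mismatch and zero-padding or truncation for the channel mismatch. The function name `match_dims` and its parameters are hypothetical.

```python
def match_dims(x, target_c, target_h, target_w):
    """Reshape a [C][H][W] nested list to [target_c][target_h][target_w].

    Illustrative stand-in only: a real NAS cell would more likely use
    learned layers (e.g. strided or 1x1 convolutions) for this step.
    """
    h, w = len(x[0]), len(x[0][0])

    # Spatial matching: nearest-neighbour resampling of every channel.
    resized = [
        [
            [ch[(i * h) // target_h][(j * w) // target_w] for j in range(target_w)]
            for i in range(target_h)
        ]
        for ch in x
    ]

    # Channel matching: drop surplus channels, or append all-zero channels.
    out = resized[:target_c]
    missing = target_c - len(out)
    out += [[[0.0] * target_w for _ in range(target_h)] for _ in range(missing)]
    return out


# Hypothetical example: a 2-channel 4x4 map matched to a 3-channel 2x2 map.
feature_map = [
    [[float(c * 100 + i * 4 + j) for j in range(4)] for i in range(4)]
    for c in range(2)
]
matched = match_dims(feature_map, target_c=3, target_h=2, target_w=2)
```

With a step like this in place, two candidate operations whose outputs differ in shape can still be combined inside one cell, which is the flexibility the abstract attributes to its dimension matching modules.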

Efficient dynamic filter for robust and low computational feature extraction

May 03, 2022

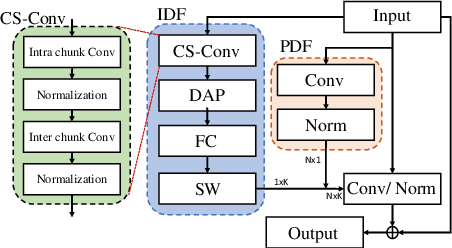

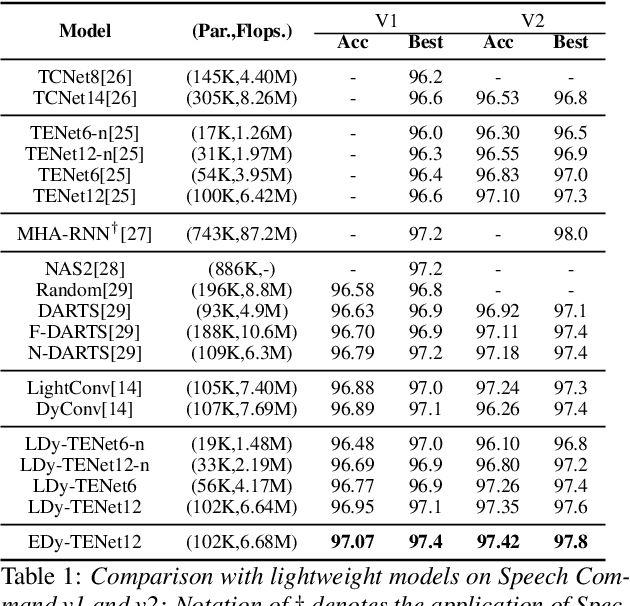

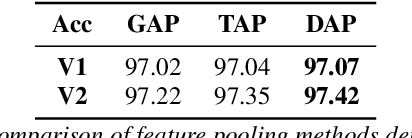

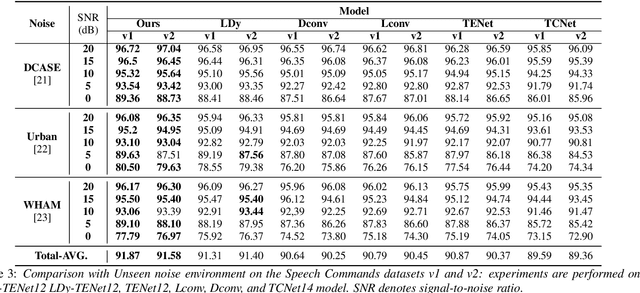

Abstract:Unseen noise, that is, noise not considered during model training, is difficult to anticipate and can lead to performance degradation. Various methods have been investigated to mitigate unseen noise. In our previous work, an Instance-level Dynamic Filter (IDF) and a Pixel Dynamic Filter (PDF) were proposed to extract noise-robust features. However, the performance of the dynamic filter might be degraded, since simple feature pooling is used to reduce the computational cost of the IDF part. In this paper, we propose an efficient dynamic filter to enhance the performance of the dynamic filter. Instead of using the simple feature mean, we split Time-Frequency (T-F) features into non-overlapping chunks and carry out separable convolutions along each feature direction (inter-chunk and intra-chunk). Additionally, we propose Dynamic Attention Pooling, which maps high-dimensional features to low-dimensional feature embeddings. These methods are applied to the IDF for keyword spotting and speaker verification tasks. We confirm that our proposed method performs better in unseen environments (unseen noise and unseen speakers) than state-of-the-art models.
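The two ingredients named in the abstract, non-overlapping chunking and attention-based pooling, can be sketched as follows. This is an illustration under stated assumptions, not the paper's implementation: frames are plain lists of floats, the ragged tail that does not fill a chunk is dropped, and the attention weights come from a fixed, hypothetical `query` vector, whereas the paper's Dynamic Attention Pooling presumably derives its weights from the input. The function names are hypothetical as well.

```python
import math


def split_into_chunks(frames, chunk_len):
    """Split a sequence of frames into non-overlapping chunks of chunk_len.

    Assumption: trailing frames that do not fill a whole chunk are dropped.
    """
    n_chunks = len(frames) // chunk_len
    return [frames[i * chunk_len:(i + 1) * chunk_len] for i in range(n_chunks)]


def attention_pool(frames, query):
    """Pool equal-length frame vectors into one vector via softmax weights.

    Each frame is scored by its dot product with `query` (a hypothetical
    fixed vector); the scores are normalised with a numerically stable softmax.
    """
    scores = [sum(f * q for f, q in zip(frame, query)) for frame in frames]
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    weights = [e / total for e in exps]
    dim = len(frames[0])
    return [sum(wt * frame[d] for wt, frame in zip(weights, frames)) for d in range(dim)]


# Hypothetical example: 10 frames with 3 frequency bins each, chunks of 4 frames.
tf_features = [[float(t + b) for b in range(3)] for t in range(10)]
chunks = split_into_chunks(tf_features, chunk_len=4)
embeddings = [attention_pool(chunk, query=[0.1, 0.0, -0.1]) for chunk in chunks]
```

With an all-zero query, the softmax weights become uniform and the pooling reduces to the simple feature mean that the abstract sets out to replace.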

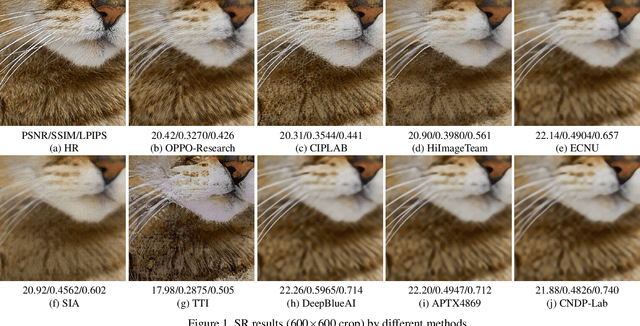

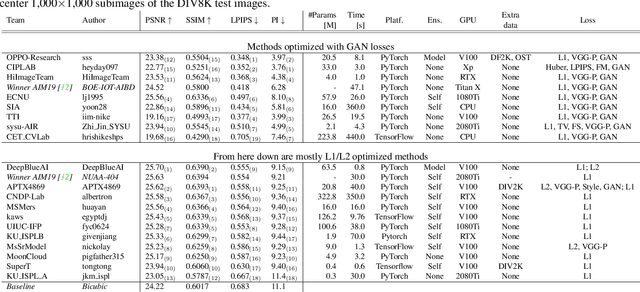

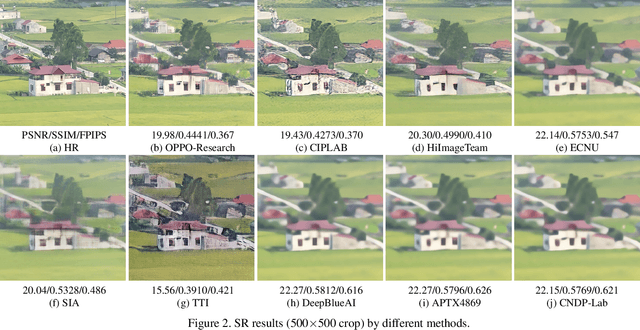

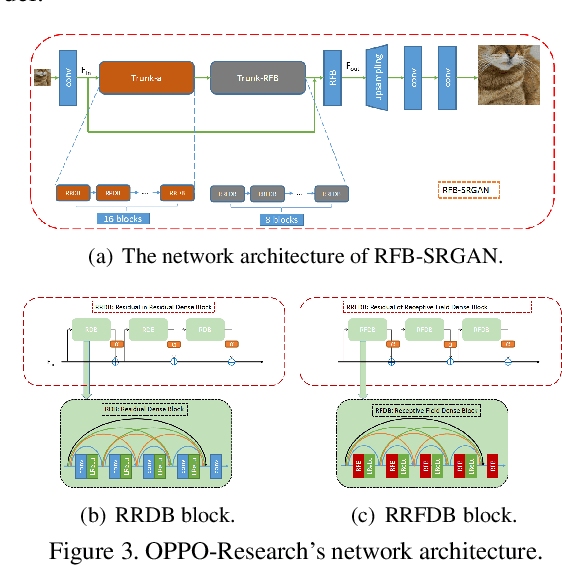

NTIRE 2020 Challenge on Perceptual Extreme Super-Resolution: Methods and Results

May 03, 2020

Abstract:This paper reviews the NTIRE 2020 challenge on perceptual extreme super-resolution, with a focus on the proposed solutions and results. The challenge task was to super-resolve an input image with a magnification factor of 16, based on a set of prior examples of low-resolution and corresponding high-resolution images. The goal is to obtain a network design capable of producing high-resolution results with the best perceptual quality and similar to the ground truth. The track had 280 registered participants, and 19 teams submitted final results. They gauge the state-of-the-art in single image super-resolution.
