Bjoern Haefner

On Neural BRDFs: A Thorough Comparison of State-of-the-Art Approaches

Feb 21, 2025

Abstract: The bidirectional reflectance distribution function (BRDF) is an essential tool to capture the complex interaction of light and matter. Recently, several works have employed neural methods for BRDF modeling, following various strategies, ranging from utilizing existing parametric models to purely neural parametrizations. While all methods yield impressive results, a comprehensive comparison of the different approaches is missing in the literature. In this work, we present a thorough evaluation of several approaches, including results for qualitative and quantitative reconstruction quality and an analysis of reciprocity and energy conservation. Moreover, we propose two extensions that can be added to existing approaches: a novel additive combination strategy for neural BRDFs that split the reflectance into a diffuse and a specular part, and an input mapping that ensures reciprocity exactly by construction, while previous approaches only ensure it by soft constraints.
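As a rough illustration of what "reciprocity by construction" can mean, the sketch below feeds a small network only features that are unchanged when the incoming and outgoing directions are swapped, and adds a constant diffuse term to a direction-dependent specular term. Everything here is an assumption made for illustration: the feature map `symmetric_features`, the untrained random weights, and the diffuse/specular split are hypothetical and are not taken from the paper.

```python
import numpy as np

rng = np.random.default_rng(0)

# Hypothetical, untrained parameters of a tiny MLP (illustration only).
W1 = rng.normal(size=(6, 16))
b1 = rng.normal(size=16)
W_spec = rng.normal(size=(16, 3))
albedo_param = rng.normal(size=3)


def softplus(x):
    """Smooth, strictly positive activation."""
    return np.logaddexp(0.0, x)


def unit(v):
    """Normalize a 3-vector to unit length."""
    v = np.asarray(v, dtype=float)
    return v / np.linalg.norm(v)


def symmetric_features(w_i, w_o):
    """Features invariant to swapping w_i and w_o (sum and elementwise product)."""
    return np.concatenate([w_i + w_o, w_i * w_o])


def neural_brdf(w_i, w_o):
    """RGB reflectance as an additive diffuse + specular combination."""
    hidden = np.tanh(symmetric_features(w_i, w_o) @ W1 + b1)
    specular = softplus(hidden @ W_spec)       # direction-dependent part
    diffuse = softplus(albedo_param) / np.pi   # constant Lambertian-style part
    return diffuse + specular


w_i = unit([0.3, -0.2, 0.9])
w_o = unit([-0.5, 0.1, 0.8])

# Swapping the two directions leaves the network input, and hence the output, unchanged.
print(np.allclose(neural_brdf(w_i, w_o), neural_brdf(w_o, w_i)))
```

Because the symmetry lives in the input mapping rather than in a loss term, it holds for any weights, trained or not. Energy conservation is not addressed by this sketch.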

Sparse Views, Near Light: A Practical Paradigm for Uncalibrated Point-light Photometric Stereo

Mar 29, 2024

Abstract: Neural approaches have shown significant progress on camera-based reconstruction. But they require either a fairly dense sampling of the viewing sphere, or pre-training on an existing dataset, thereby limiting their generalizability. In contrast, photometric stereo (PS) approaches have shown great potential for achieving high-quality reconstruction under sparse viewpoints. Yet, they are impractical because they typically require tedious laboratory conditions, are restricted to dark rooms, and are often multi-staged, making them subject to accumulated errors. To address these shortcomings, we propose an end-to-end uncalibrated multi-view PS framework for reconstructing high-resolution shapes acquired from sparse viewpoints in a real-world environment. We relax the dark room assumption, and allow a combination of static ambient lighting and dynamic near LED lighting, thereby enabling easy data capture outside the lab. Experimental validation confirms that it outperforms existing baseline approaches in the regime of sparse viewpoints by a large margin. This makes it possible to bring high-accuracy 3D reconstruction from the dark room to the real world, while maintaining a reasonable data capture complexity.

SupeRVol: Super-Resolution Shape and Reflectance Estimation in Inverse Volume Rendering

Dec 09, 2022

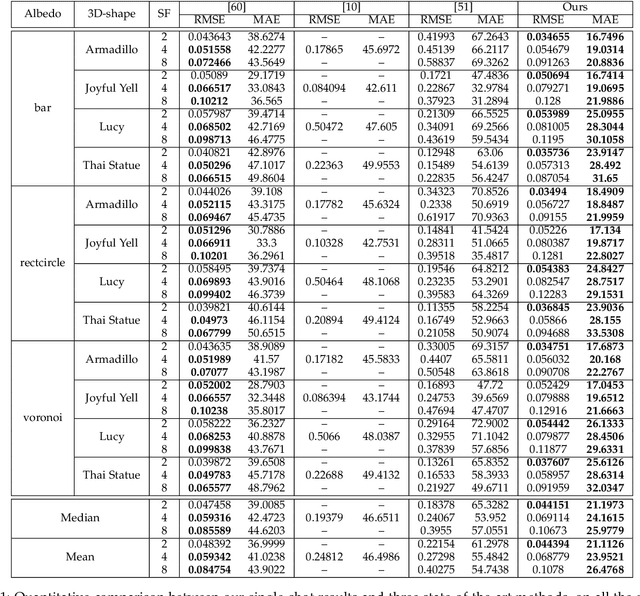

Abstract: We propose an end-to-end inverse rendering pipeline called SupeRVol that allows us to recover 3D shape and material parameters from a set of color images in a super-resolution manner. To this end, we represent both the bidirectional reflectance distribution function (BRDF) and the signed distance function (SDF) by multi-layer perceptrons. In order to obtain both the surface shape and its reflectance properties, we resort to a differentiable volume renderer with a physically based illumination model that allows us to decouple reflectance and lighting. This physical model takes into account the effect of the camera's point spread function, thereby enabling a reconstruction of shape and material in super-resolution quality. Experimental validation confirms that SupeRVol achieves state-of-the-art performance in terms of inverse rendering quality. It generates reconstructions that are sharper than the individual input images, making this method ideally suited for 3D modeling from low-resolution imagery.

Inferring Super-Resolution Depth from a Moving Light-Source Enhanced RGB-D Sensor: A Variational Approach

Dec 13, 2019

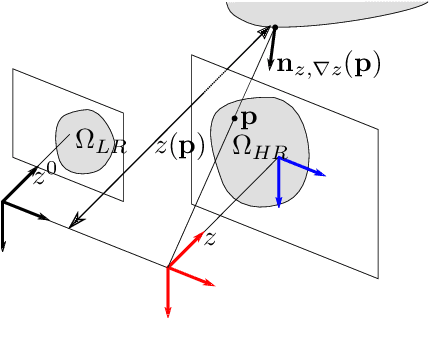

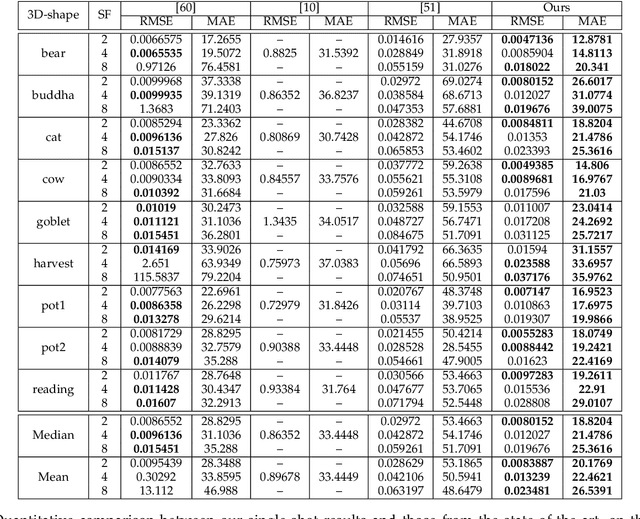

Abstract: A novel approach towards depth map super-resolution using multi-view uncalibrated photometric stereo is presented. Practically, an LED light source is attached to a commodity RGB-D sensor and is used to capture objects from multiple viewpoints with unknown motion. This non-static camera-to-object setup is described with a nonconvex variational approach such that no calibration of lighting or camera motion is required, due to the formulation of an end-to-end joint optimization problem. Solving the proposed variational model results in high-resolution depth, reflectance and camera pose estimates, as we show on challenging synthetic and real-world datasets.

On the well-posedness of uncalibrated photometric stereo under general lighting

Nov 17, 2019

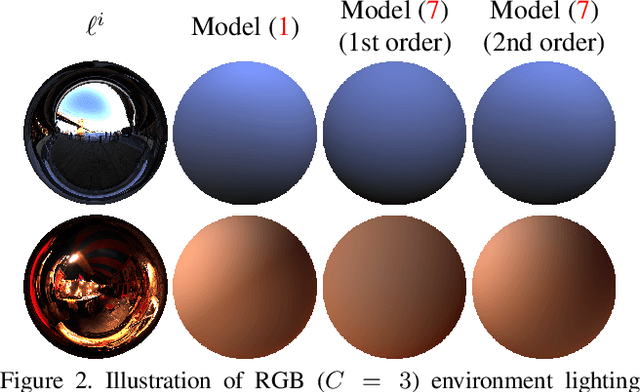

Abstract: Uncalibrated photometric stereo aims at estimating the 3D shape of a surface, given a set of images captured from the same viewing angle, but under unknown, varying illumination. While the theoretical foundations of this inverse problem under directional lighting are well-established, there is a lack of mathematical evidence for the uniqueness of a solution under general lighting. On the other hand, stable and accurate heuristic solutions of uncalibrated photometric stereo under such general lighting have recently been proposed. The quality of the results demonstrated therein tends to indicate that the problem may actually be well-posed, but this still has to be established. The present paper addresses this theoretical issue, considering first-order spherical harmonics approximation of general lighting. Two important theoretical results are established. First, the orthographic integrability constraint ensures uniqueness of a solution up to a global concave-convex ambiguity, which had already been conjectured, yet not proven. Second, the perspective integrability constraint makes the problem well-posed, which generalizes a previous result limited to directional lighting. Finally, a closed-form expression for the unique least-squares solution of the problem under perspective projection is provided, allowing numerical simulations on synthetic data to empirically validate our findings.
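To make the lighting model concrete, the following is a minimal sketch of Lambertian shading under a first-order spherical harmonics approximation, where each image is described by a 4-vector of lighting coefficients acting on `[n_x, n_y, n_z, 1]`. It only covers the forward model and a calibrated sanity check (lighting recovered by least squares when normals and albedo are known); it does not implement the uncalibrated solution or the closed-form expression from the paper. The function name, the synthetic data, and the omission of the usual SH normalization constants are all assumptions.

```python
import numpy as np

rng = np.random.default_rng(0)


def sh1_basis(normals):
    """First-order SH basis per pixel, up to constant factors: [n_x, n_y, n_z, 1]."""
    return np.hstack([normals, np.ones((normals.shape[0], 1))])


def shade_first_order_sh(normals, albedo, light):
    """Intensity per pixel: albedo * <light, [n, 1]> (no shadows, no clamping)."""
    return albedo * (sh1_basis(normals) @ light)


# Synthetic scene: 500 unit normals, per-pixel albedo, 8 lighting vectors.
normals = rng.normal(size=(500, 3))
normals /= np.linalg.norm(normals, axis=1, keepdims=True)
albedo = rng.uniform(0.2, 1.0, size=500)
lights = rng.normal(size=(8, 4))

# One row of intensities per lighting condition.
images = np.stack([shade_first_order_sh(normals, albedo, l) for l in lights])

# Calibrated sanity check: with known shape and albedo, lighting is a linear
# least-squares problem in the 4 coefficients of each image.
design = albedo[:, None] * sh1_basis(normals)
lights_est, *_ = np.linalg.lstsq(design, images.T, rcond=None)

print(np.allclose(lights_est.T, lights))
```

In the uncalibrated setting both the normals and the lighting are unknown, which is where the ambiguity discussed in the abstract comes from and why an integrability constraint is needed.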

Variational Uncalibrated Photometric Stereo under General Lighting

Apr 08, 2019

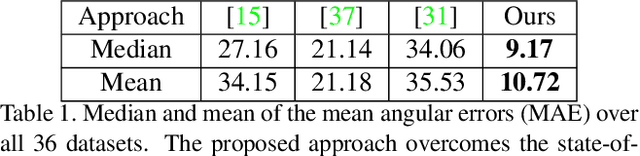

Abstract: Photometric stereo (PS) techniques nowadays remain constrained to an ideal laboratory setup where modeling and calibration of lighting is amenable. This work aims to eliminate such restrictions. To this end, we introduce an efficient, principled variational approach to uncalibrated PS under general illumination, which is approximated through a second-order spherical harmonic expansion. The joint recovery of shape, reflectance and illumination is formulated as a variational problem where shape estimation is carried out directly in terms of the underlying perspective depth map, thus implicitly ensuring integrability and bypassing the need for a subsequent normal integration. We provide a tailored numerical scheme to solve the resulting nonconvex problem efficiently and robustly. On a variety of evaluations, our method consistently reduces the mean angular error by a factor of 2-3 compared to the state-of-the-art.
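The idea of estimating shape "directly in terms of the underlying perspective depth map" can be illustrated by deriving normals from depth under a pinhole camera: normals computed this way always belong to an actual surface, so no separate normal integration step is needed. The sketch below is an assumption-laden illustration rather than the paper's numerical scheme; the function name, the intrinsics `f`, `cx`, `cy`, and the use of `np.gradient` for finite differences are all hypothetical choices.

```python
import numpy as np


def normals_from_perspective_depth(z, f, cx, cy):
    """Unit normals from a depth map z(u, v) under a pinhole camera.

    A pixel (u, v) back-projects to z * [(u - cx) / f, (v - cy) / f, 1].
    The cross product of the two tangent vectors is proportional to
    [f * z_u, f * z_v, -z - (u - cx) * z_u - (v - cy) * z_v],
    with the sign chosen so that normals point towards the camera.
    """
    h, w = z.shape
    v, u = np.mgrid[0:h, 0:w].astype(float)  # row index v, column index u
    z_v, z_u = np.gradient(z)                # finite differences along rows, columns
    n = np.stack(
        [f * z_u, f * z_v, -z - (u - cx) * z_u - (v - cy) * z_v], axis=-1
    )
    return n / np.linalg.norm(n, axis=-1, keepdims=True)


# A fronto-parallel plane at constant depth should face the camera everywhere.
flat = np.full((5, 7), 2.0)
n_flat = normals_from_perspective_depth(flat, f=500.0, cx=3.0, cy=2.0)
print(np.allclose(n_flat, [0.0, 0.0, -1.0]))
```

These normals could then be plugged into a spherical harmonics shading model, so that the photometric error becomes a function of depth alone.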

Photometric Depth Super-Resolution

Sep 26, 2018

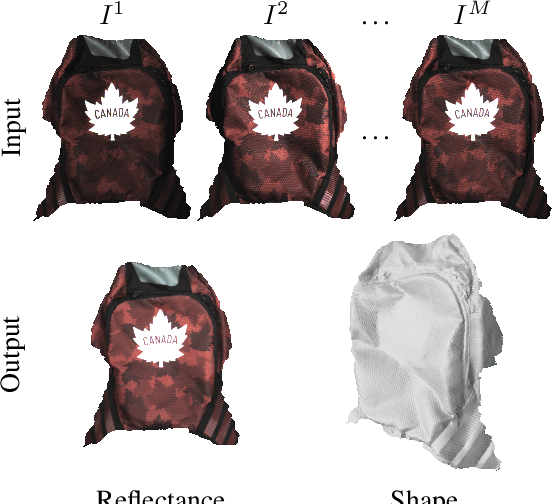

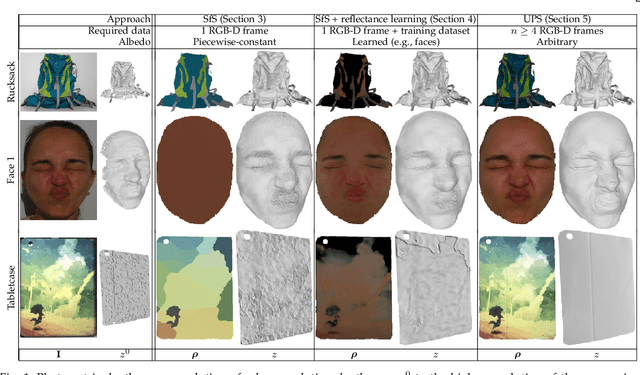

Abstract: This study explores the use of photometric techniques (shape-from-shading and uncalibrated photometric stereo) for upsampling the low-resolution depth map from an RGB-D sensor to the higher resolution of the companion RGB image. A single-shot variational approach is first put forward, which is effective as long as the target's reflectance is piecewise-constant. It is then shown that this dependency upon a specific reflectance model can be relaxed by focusing on a specific class of objects (e.g., faces), and delegating reflectance estimation to a deep neural network. A multi-shot strategy based on randomly varying lighting conditions is finally discussed. It requires no training or prior on the reflectance, yet this comes at the price of a dedicated acquisition setup. Both quantitative and qualitative evaluations illustrate the effectiveness of the proposed methods on synthetic and real-world scenarios.

Depth Super-Resolution Meets Uncalibrated Photometric Stereo

Aug 24, 2017

Abstract: A novel depth super-resolution approach for RGB-D sensors is presented. It disambiguates depth super-resolution through high-resolution photometric cues and, symmetrically, it disambiguates uncalibrated photometric stereo through low-resolution depth cues. To this end, an RGB-D sequence is acquired from the same viewing angle, while illuminating the scene from various uncalibrated directions. This sequence is handled by a variational framework which fits high-resolution shape and reflectance, as well as lighting, to both the low-resolution depth measurements and the high-resolution RGB ones. The key novelty consists in a new PDE-based photometric stereo regularizer which implicitly ensures surface regularity. This makes it possible to carry out depth super-resolution in a purely data-driven manner, without the need for any ad-hoc prior or material calibration. Real-world experiments are carried out using an out-of-the-box RGB-D sensor and a hand-held LED light source.
