Azadeh Mansouri

Compressed image quality assessment using stacking

Feb 01, 2024

Abstract: It is well known that there is no universal metric for image quality evaluation, so distortion-specific metrics can be more reliable. The artifacts introduced by image compression can be considered a combination of various distortions, and this combination can differ depending on the image context. As a result, generalization can be regarded as the major challenge in compressed image quality assessment. In this approach, stacking is employed to provide a reliable method. Both semantic and low-level information are used in the presented IQA method to predict the response of the human visual system. Moreover, the results of Full-Reference (FR) and No-Reference (NR) models are aggregated to improve the proposed Full-Reference method for compressed image quality evaluation. The method achieved an accuracy of 79.6% on the quality benchmark of the CLIC 2024 perceptual image challenge, which illustrates the effectiveness of the proposed fusion-based approach.
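
The stacking idea described in this abstract can be illustrated with a minimal sketch. It is not the authors' implementation: it assumes the FR and NR base models have already produced held-out scores, and it uses a plain least-squares linear meta-model as a stand-in for whatever meta-learner the paper uses. The names `fit_meta_model`, `predict`, `train_scores`, and `train_mos`, and all numeric values, are hypothetical.

```python
def solve_linear_system(a, b):
    """Solve a x = b by Gauss-Jordan elimination with partial pivoting."""
    n = len(b)
    m = [row[:] + [b[i]] for i, row in enumerate(a)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(n):
            if r != col:
                factor = m[r][col] / m[col][col]
                m[r] = [x - factor * y for x, y in zip(m[r], m[col])]
    return [m[i][n] / m[i][i] for i in range(n)]


def fit_meta_model(base_scores, targets):
    """Least-squares fit of targets ~ w . [base scores..., 1] (meta-level)."""
    rows = [list(s) + [1.0] for s in base_scores]  # append a bias term
    k = len(rows[0])
    xtx = [[sum(r[i] * r[j] for r in rows) for j in range(k)] for i in range(k)]
    xty = [sum(r[i] * t for r, t in zip(rows, targets)) for i in range(k)]
    return solve_linear_system(xtx, xty)


def predict(weights, scores):
    """Fuse one (fr_score, nr_score) pair into a single quality score."""
    return sum(w * s for w, s in zip(weights, list(scores) + [1.0]))


# Hypothetical held-out base-model outputs: (fr_score, nr_score) per image.
train_scores = [(0.9, 0.8), (0.4, 0.6), (0.7, 0.3), (0.2, 0.1), (0.5, 0.9)]
# Synthetic subjective ratings, constructed only to make the sketch checkable.
train_mos = [0.7 * fr + 0.3 * nr + 0.1 for fr, nr in train_scores]

weights = fit_meta_model(train_scores, train_mos)
fused = predict(weights, (0.6, 0.5))
```

In practice the meta-model would be trained on out-of-fold predictions of the base models, so that the fusion weights are not fitted to scores the base models produced on their own training data.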

NTIRE 2023 Quality Assessment of Video Enhancement Challenge

Jul 19, 2023

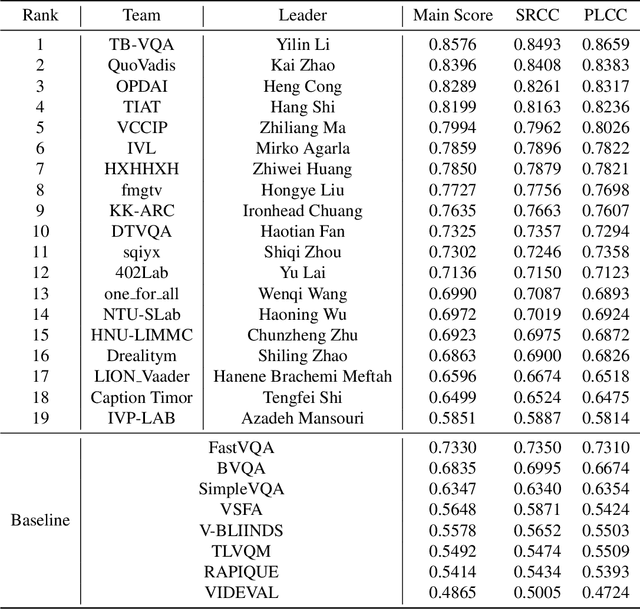

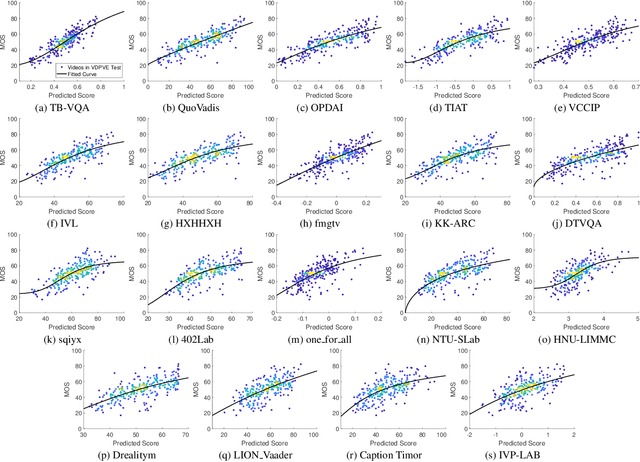

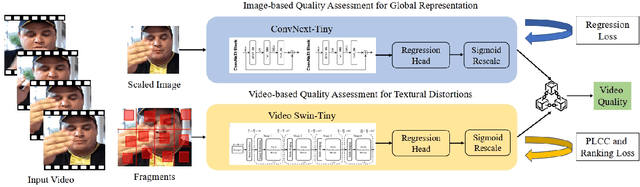

Abstract: This paper reports on the NTIRE 2023 Quality Assessment of Video Enhancement Challenge, which will be held in conjunction with the New Trends in Image Restoration and Enhancement Workshop (NTIRE) at CVPR 2023. The challenge addresses a major problem in the field of video processing, namely video quality assessment (VQA) for enhanced videos. It uses the VQA Dataset for Perceptual Video Enhancement (VDPVE), which contains 1211 enhanced videos: 600 videos with color, brightness, and contrast enhancements, 310 deblurred videos, and 301 deshaked videos. The challenge has a total of 167 registered participants. During the development phase, 61 participating teams submitted prediction results, for a total of 3168 submissions. During the final testing phase, 37 participating teams made a total of 176 submissions. Finally, 19 participating teams submitted their models and fact sheets and detailed the methods they used. Some methods achieved better results than the baseline methods, and the winning methods demonstrated superior prediction performance.

Speeding Up Action Recognition Using Dynamic Accumulation of Residuals in Compressed Domain

Sep 29, 2022

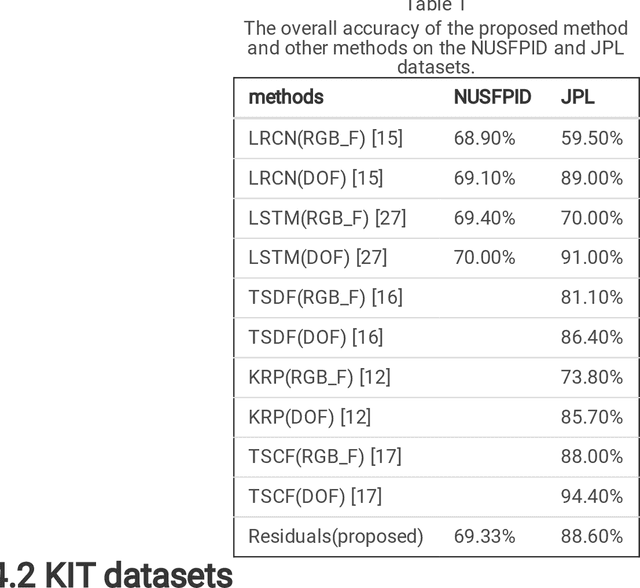

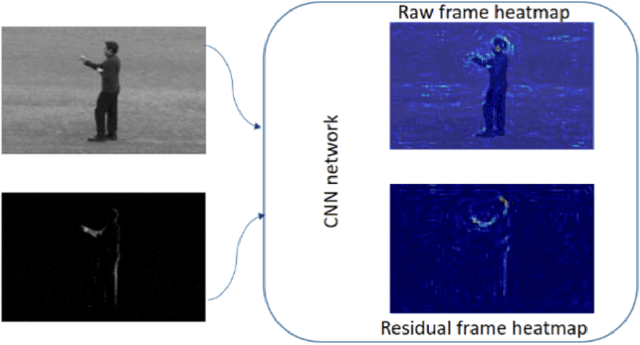

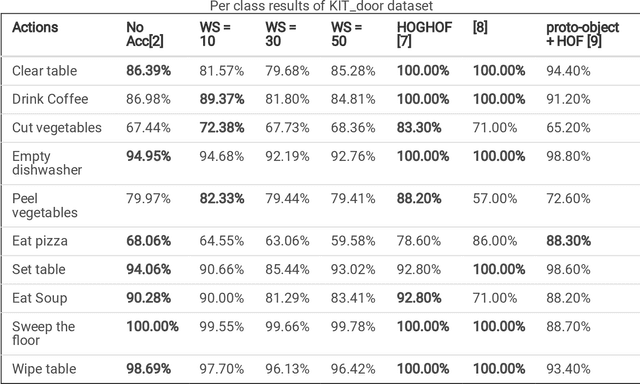

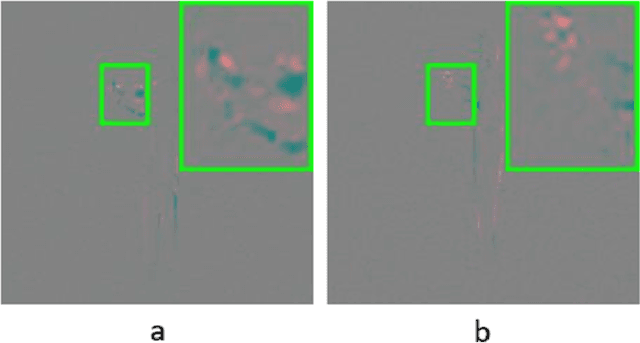

Abstract: With the widespread use of installed cameras, video-based monitoring approaches have attracted considerable attention for purposes such as assisted living. Temporal redundancy and the sheer size of raw videos are the two most common problems for video processing algorithms. Most existing methods focus mainly on increasing accuracy by exploring consecutive frames, which is computationally expensive and unsuitable for real-time applications. Since videos are mostly stored and transmitted in compressed format, compressed videos are available on many devices. They contain a great deal of useful information, such as motion vectors and quantized coefficients, and proper use of this information can greatly improve the performance of video understanding methods. This paper presents an approach that directly uses the residual data available in compressed videos, which can be obtained by a lightweight partial decoding procedure. In addition, a method for accumulating similar residuals is proposed, which dramatically reduces the number of frames processed for action recognition. Applying neural networks exclusively to accumulated residuals in the compressed domain speeds up recognition, while the classification results remain highly competitive with raw-video approaches.
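
The accumulation of similar residuals mentioned in this abstract can be sketched as follows. This is only an illustration under stated assumptions, not the paper's method: residual frames are represented as flat lists of numbers, similarity is measured by mean absolute difference to the first residual of the current group, and the grouping rule and `threshold` value are hypothetical choices. The function names `mean_abs_diff` and `accumulate_residuals` are invented for this example.

```python
def mean_abs_diff(a, b):
    """Mean absolute difference between two equally sized residual frames."""
    return sum(abs(x - y) for x, y in zip(a, b)) / len(a)


def accumulate_residuals(residuals, threshold):
    """Merge runs of consecutive, similar residual frames into one frame each.

    A frame joins the current group if it is close to the group's first
    frame (the anchor); otherwise the group is closed and a new one starts.
    The similarity rule here is an assumption made for illustration.
    """
    groups = []
    anchor, current_sum = None, None
    for res in residuals:
        if anchor is not None and mean_abs_diff(res, anchor) <= threshold:
            # Similar to the anchor: add element-wise into the running sum.
            current_sum = [s + r for s, r in zip(current_sum, res)]
        else:
            if current_sum is not None:
                groups.append(current_sum)
            anchor, current_sum = res, list(res)
    if current_sum is not None:
        groups.append(current_sum)
    return groups


# Five toy residual frames with four values each (hypothetical data).
frames = [
    [1, 0, 2, 0],
    [1, 1, 2, 0],
    [0, 0, 2, 1],
    [9, 8, 0, 7],
    [9, 9, 0, 7],
]
accumulated = accumulate_residuals(frames, threshold=1.0)
```

Here the five input frames collapse into two accumulated frames, so a downstream classifier would process two inputs instead of five.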
