Ashley D. Edwards

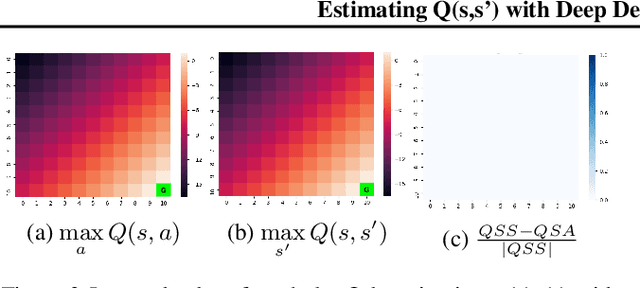

Estimating Q(s,s') with Deep Deterministic Dynamics Gradients

Feb 21, 2020

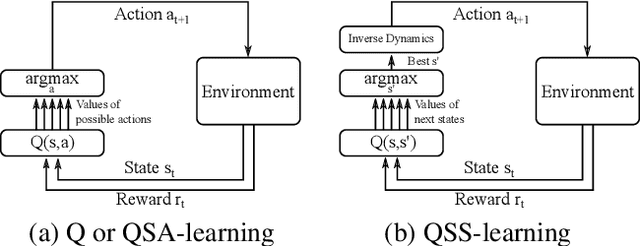

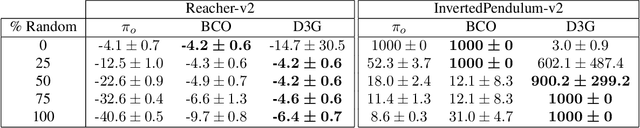

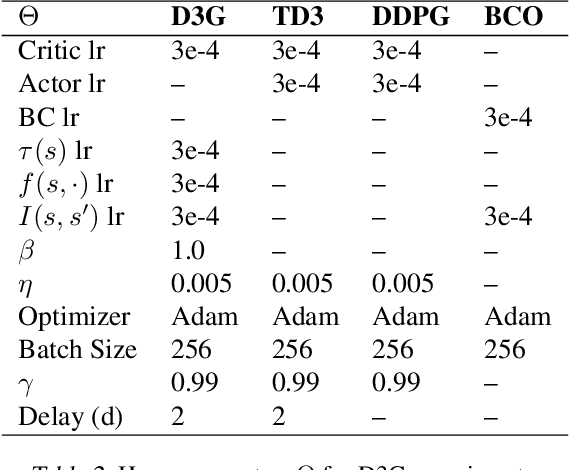

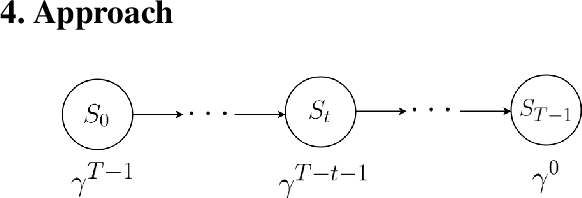

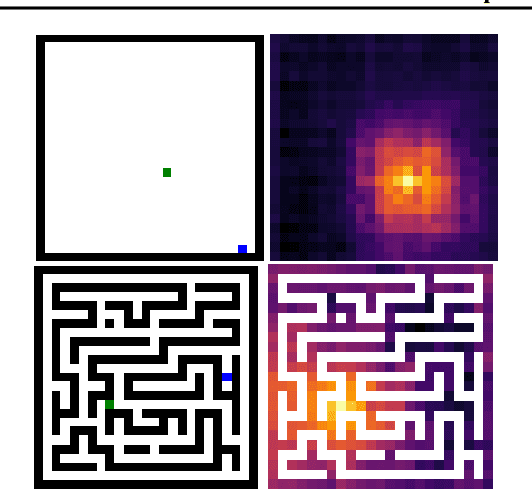

Abstract:In this paper, we introduce a novel form of value function, $Q(s, s')$, that expresses the utility of transitioning from a state $s$ to a neighboring state $s'$ and then acting optimally thereafter. In order to derive an optimal policy, we develop a forward dynamics model that learns to make next-state predictions that maximize this value. This formulation decouples actions from values while still learning off-policy. We highlight the benefits of this approach in terms of value function transfer, learning within redundant action spaces, and learning off-policy from state observations generated by sub-optimal or completely random policies. Code and videos are available at \url{sites.google.com/view/qss-paper}.

Perceptual Values from Observation

May 20, 2019

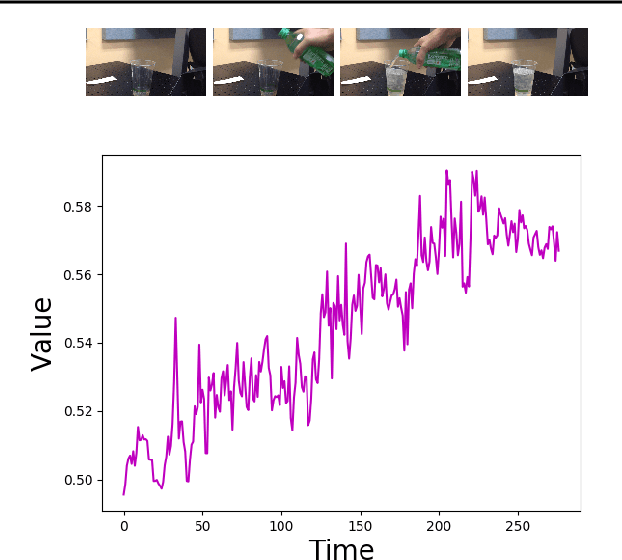

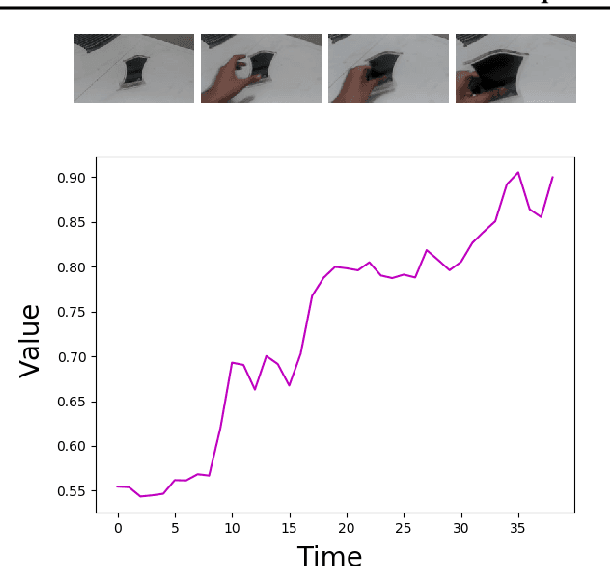

Abstract:Imitation by observation is an approach for learning from expert demonstrations that lack action information, such as videos. Recent approaches to this problem can be placed into two broad categories: training dynamics models that aim to predict the actions taken between states, and learning rewards or features for computing them for Reinforcement Learning (RL). In this paper, we introduce a novel approach that learns values, rather than rewards, directly from observations. We show that by using values, we can significantly speed up RL by removing the need to bootstrap action-values, as compared to sparse-reward specifications.

Imitating Latent Policies from Observation

May 24, 2018

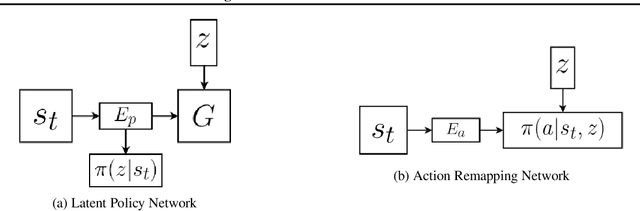

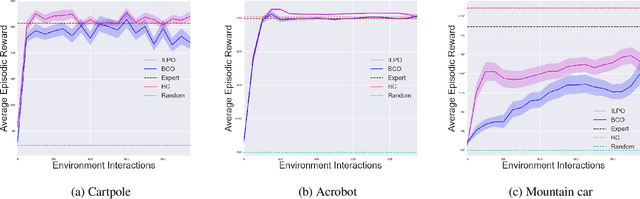

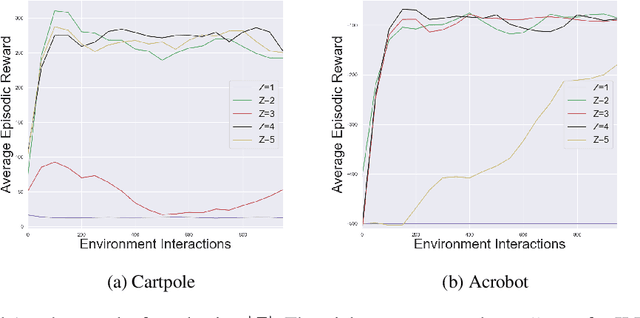

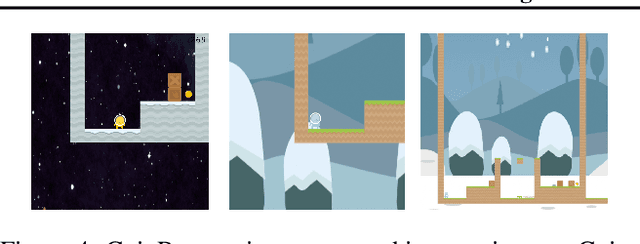

Abstract:We describe a novel approach to imitation learning that infers latent policies directly from state observations. We introduce a method that characterizes the causal effects of unknown actions on observations while simultaneously predicting their likelihood. We then outline an action alignment procedure that leverages a small amount of environment interactions to determine a mapping between latent and real-world actions. We show that this corrected labeling can be used for imitating the observed behavior, even though no expert actions are given. We evaluate our approach within classic control and photo-realistic visual environments and demonstrate that it performs well when compared to standard approaches.

Forward-Backward Reinforcement Learning

Mar 27, 2018

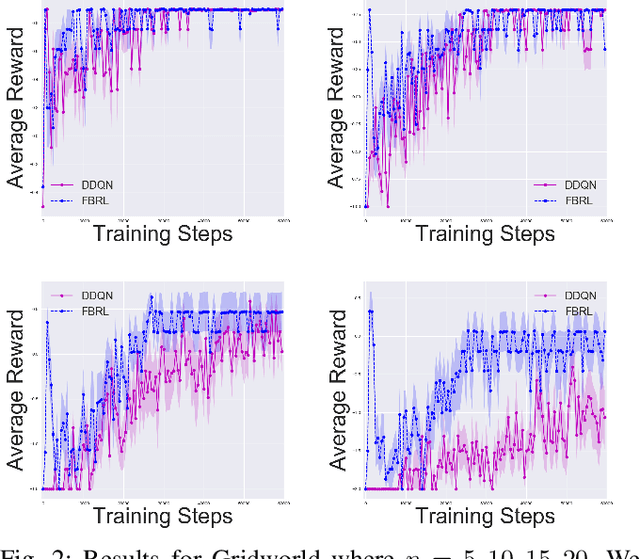

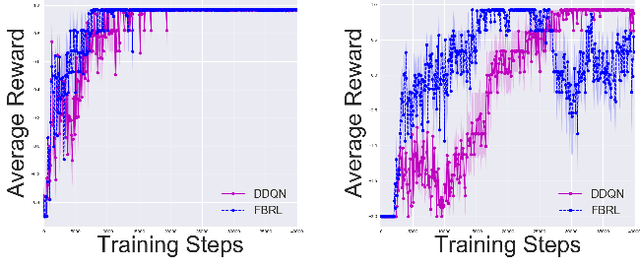

Abstract:Goals for reinforcement learning problems are typically defined through hand-specified rewards. To design such problems, developers of learning algorithms must inherently be aware of what the task goals are, yet we often require agents to discover them on their own without any supervision beyond these sparse rewards. While much of the power of reinforcement learning derives from the concept that agents can learn with little guidance, this requirement greatly burdens the training process. If we relax this one restriction and endow the agent with knowledge of the reward function, and in particular of the goal, we can leverage backwards induction to accelerate training. To achieve this, we propose training a model to learn to take imagined reversal steps from known goal states. Rather than training an agent exclusively to determine how to reach a goal while moving forwards in time, our approach travels backwards to jointly predict how we got there. We evaluate our work in Gridworld and Towers of Hanoi and empirically demonstrate that it yields better performance than standard DDQN.

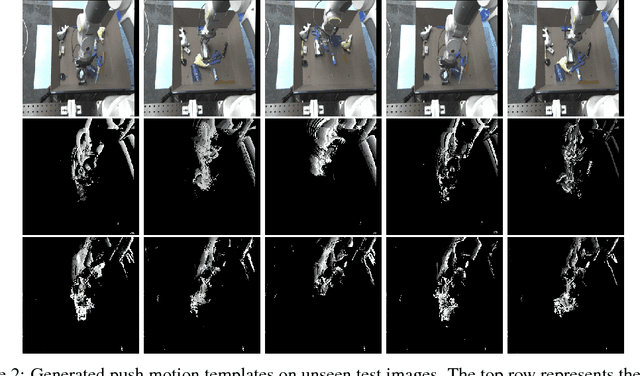

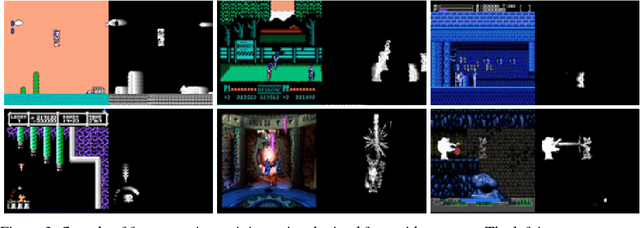

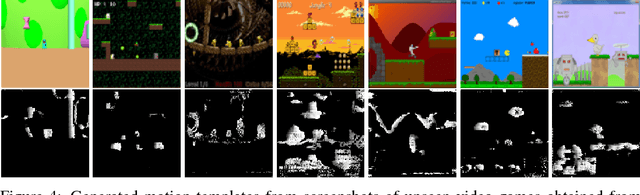

Transferring Agent Behaviors from Videos via Motion GANs

Nov 21, 2017

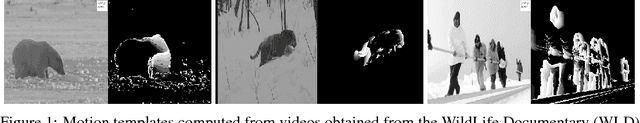

Abstract:A major bottleneck for developing general reinforcement learning agents is determining rewards that will yield desirable behaviors under various circumstances. We introduce a general mechanism for automatically specifying meaningful behaviors from raw pixels. In particular, we train a generative adversarial network to produce short sub-goals represented through motion templates. We demonstrate that this approach generates visually meaningful behaviors in unknown environments with novel agents and describe how these motions can be used to train reinforcement learning agents.

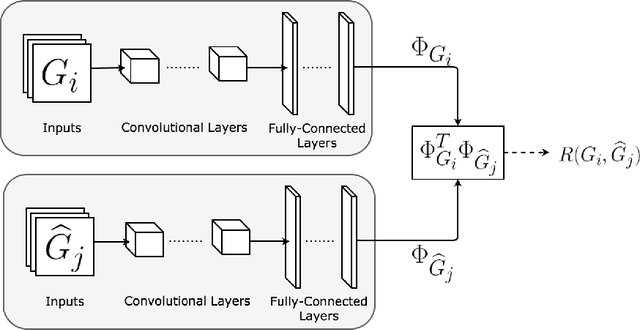

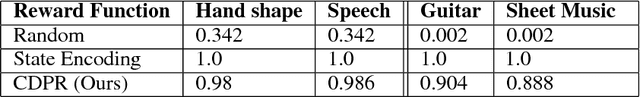

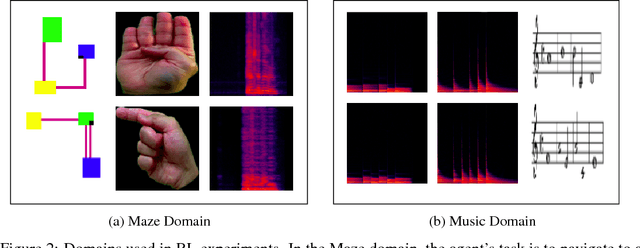

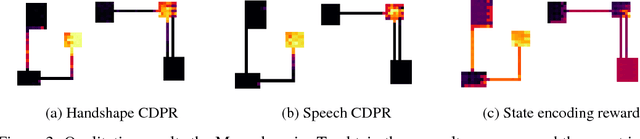

Cross-Domain Perceptual Reward Functions

Jul 25, 2017

Abstract:In reinforcement learning, we often define goals by specifying rewards within desirable states. One problem with this approach is that we typically need to redefine the rewards each time the goal changes, which often requires some understanding of the solution in the agents environment. When humans are learning to complete tasks, we regularly utilize alternative sources that guide our understanding of the problem. Such task representations allow one to specify goals on their own terms, thus providing specifications that can be appropriately interpreted across various environments. This motivates our own work, in which we represent goals in environments that are different from the agents. We introduce Cross-Domain Perceptual Reward (CDPR) functions, learned rewards that represent the visual similarity between an agents state and a cross-domain goal image. We report results for learning the CDPRs with a deep neural network and using them to solve two tasks with deep reinforcement learning.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge