Ashish Agarwal

The Impact of Generative AI on Collaborative Open-Source Software Development: Evidence from GitHub Copilot

Oct 02, 2024

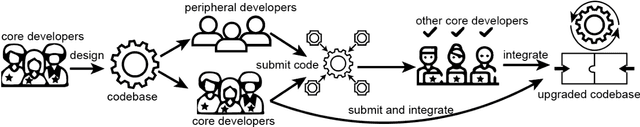

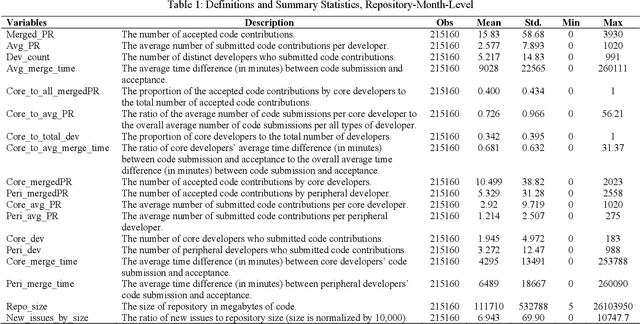

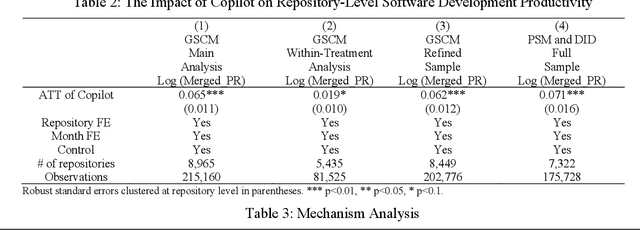

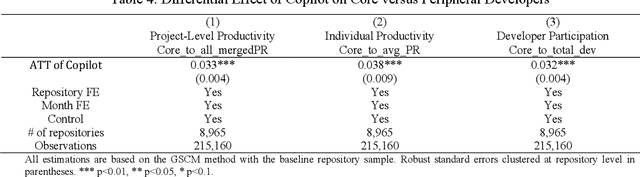

Abstract: Generative artificial intelligence (AI) has opened the possibility of automated content production, including coding in software development, which can significantly influence the participation and performance of software developers. To explore this impact, we investigate the role of GitHub Copilot, a generative AI pair programmer, on software development in the open-source community, where multiple developers voluntarily collaborate on software projects. Using GitHub's dataset for open-source repositories and a generalized synthetic control method, we find that Copilot significantly enhances project-level productivity by 6.5%. Delving deeper, we dissect the key mechanisms driving this improvement. Our findings reveal a 5.5% increase in individual productivity and a 5.4% increase in participation. However, this is accompanied by a 41.6% increase in integration time, potentially due to higher coordination costs. Interestingly, we also observe differential effects among developers. We discover that core developers achieve greater project-level productivity gains from using Copilot, benefiting more in terms of individual productivity and participation compared to peripheral developers, plausibly due to their deeper familiarity with software projects. We also find that the increase in project-level productivity is accompanied by no change in code quality. We conclude that AI pair programmers bring benefits to developers to automate and augment their code, but human developers' knowledge of software projects can enhance the benefits. In summary, our research underscores the role of AI pair programmers in impacting project-level productivity within the open-source community and suggests potential implications for the structure of open-source software projects.

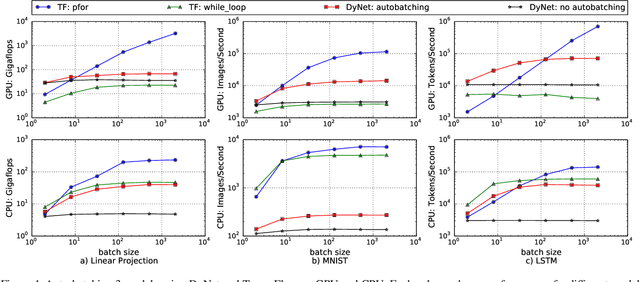

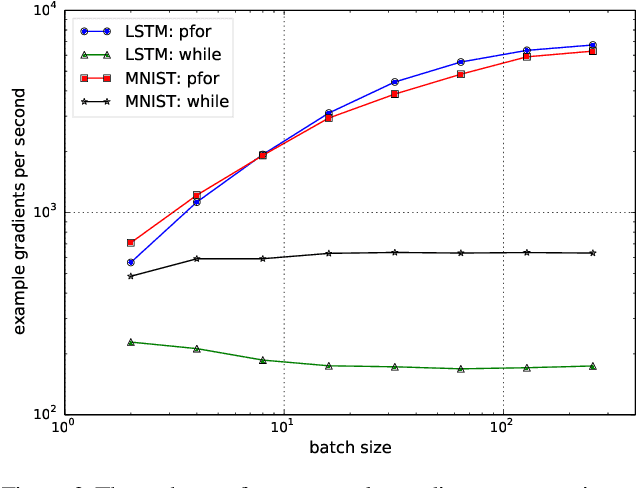

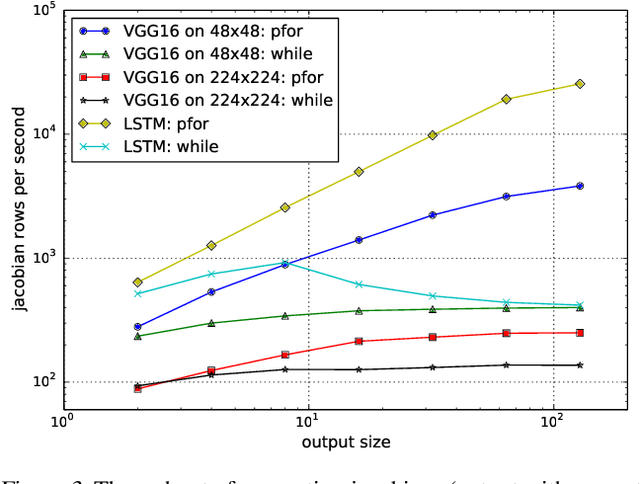

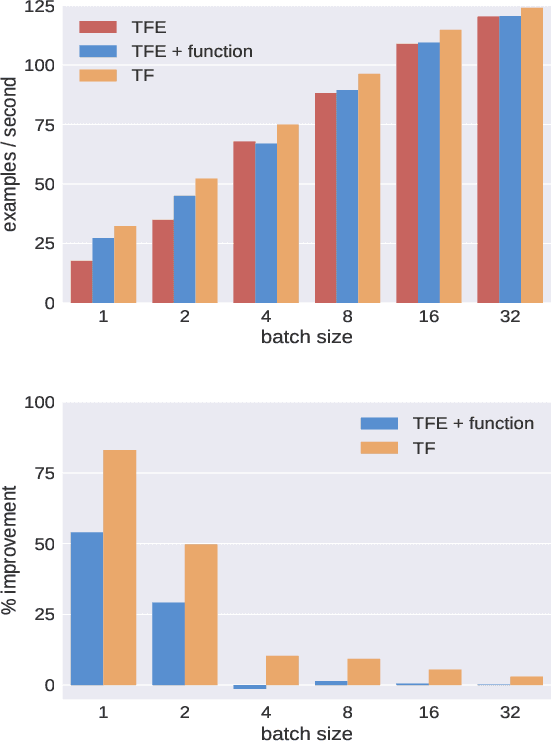

Auto-Vectorizing TensorFlow Graphs: Jacobians, Auto-Batching And Beyond

Mar 08, 2019

Abstract: We propose a static loop vectorization optimization on top of the high-level dataflow IR used by frameworks like TensorFlow. A new statically vectorized parallel-for abstraction is provided on top of TensorFlow, and used for applications ranging from auto-batching and per-example gradients, to Jacobian computation, optimized map functions and input pipeline optimization. We report huge speedups compared to both loop-based implementations and the run-time batching adopted by the DyNet framework.

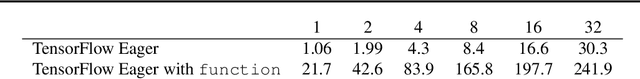

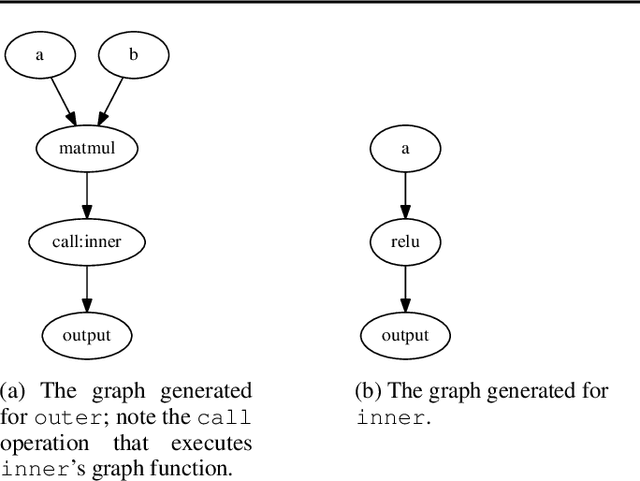

TensorFlow Eager: A Multi-Stage, Python-Embedded DSL for Machine Learning

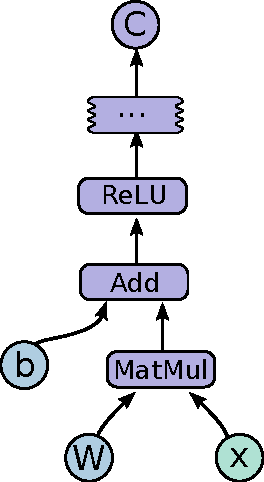

Feb 27, 2019

Abstract: TensorFlow Eager is a multi-stage, Python-embedded domain-specific language for hardware-accelerated machine learning, suitable for both interactive research and production. TensorFlow, which TensorFlow Eager extends, requires users to represent computations as dataflow graphs; this permits compiler optimizations and simplifies deployment but hinders rapid prototyping and run-time dynamism. TensorFlow Eager eliminates these usability costs without sacrificing the benefits furnished by graphs: It provides an imperative front-end to TensorFlow that executes operations immediately and a JIT tracer that translates Python functions composed of TensorFlow operations into executable dataflow graphs. TensorFlow Eager thus offers a multi-stage programming model that makes it easy to interpolate between imperative and staged execution in a single package.
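
The distinction the abstract draws between imperative execution and tracing into a dataflow graph can be illustrated with a toy sketch in plain Python. This is not TensorFlow code and not the paper's implementation: `Graph`, `Symbol`, `trace`, and `run` are hypothetical names introduced only for illustration, and the sketch supports just addition and multiplication. The same Python function `f` is first run immediately on numbers, then run once on placeholder symbols that record each operation as a graph node, and the recorded graph is then executed on concrete inputs.

```python
from dataclasses import dataclass, field
from typing import List, Tuple


@dataclass
class Graph:
    """Hypothetical toy dataflow graph: a list of (op_name, args) nodes."""
    nodes: List[Tuple[str, tuple]] = field(default_factory=list)

    def add(self, op: str, args: tuple) -> int:
        # Append a node and return its id (its index in the list).
        self.nodes.append((op, args))
        return len(self.nodes) - 1


class Symbol:
    """Placeholder value that records operations instead of computing them."""

    def __init__(self, graph: Graph, node_id: int):
        self.graph = graph
        self.node_id = node_id

    def _record(self, op: str, other) -> "Symbol":
        # Plain Python values become constant nodes in the graph.
        if not isinstance(other, Symbol):
            other = Symbol(self.graph, self.graph.add("const", (other,)))
        new_id = self.graph.add(op, (self.node_id, other.node_id))
        return Symbol(self.graph, new_id)

    def __add__(self, other):
        return self._record("add", other)

    def __mul__(self, other):
        return self._record("mul", other)


def trace(fn, num_args: int):
    """Run fn once on symbols to capture the operations it performs."""
    graph = Graph()
    args = [Symbol(graph, graph.add("input", (i,))) for i in range(num_args)]
    output = fn(*args)
    return graph, output.node_id


def run(graph: Graph, output_id: int, *inputs):
    """Execute a recorded graph on concrete inputs, node by node."""
    values = []
    for op, args in graph.nodes:
        if op == "input":
            values.append(inputs[args[0]])
        elif op == "const":
            values.append(args[0])
        elif op == "add":
            values.append(values[args[0]] + values[args[1]])
        elif op == "mul":
            values.append(values[args[0]] * values[args[1]])
    return values[output_id]


def f(x, y):
    return x * y + x


# Imperative ("eager") execution: operations run immediately on real values.
eager_result = f(3.0, 4.0)

# Staged execution: trace once into a graph, then run the graph.
graph, out_id = trace(f, num_args=2)
staged_result = run(graph, out_id, 3.0, 4.0)
```

Both paths compute the same value; the staged path additionally leaves behind an explicit graph that could, in principle, be optimized or serialized, which is the benefit the abstract attributes to dataflow graphs.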

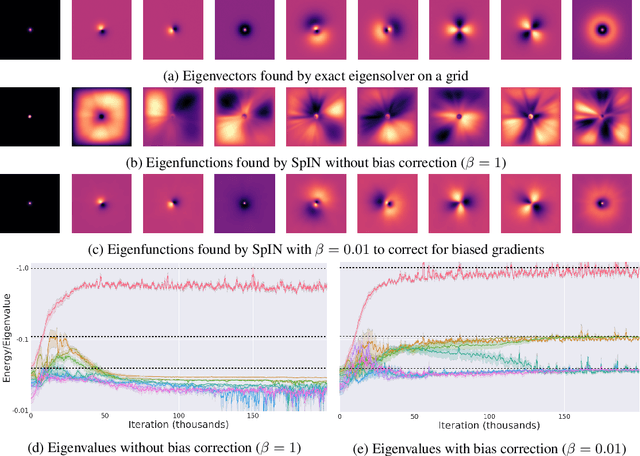

Spectral Inference Networks: Unifying Spectral Methods With Deep Learning

Jun 06, 2018

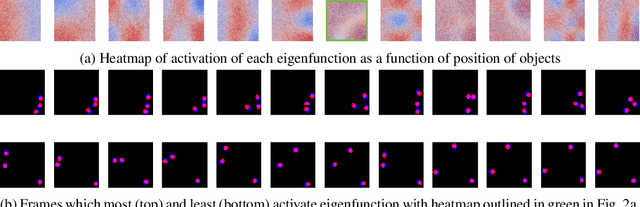

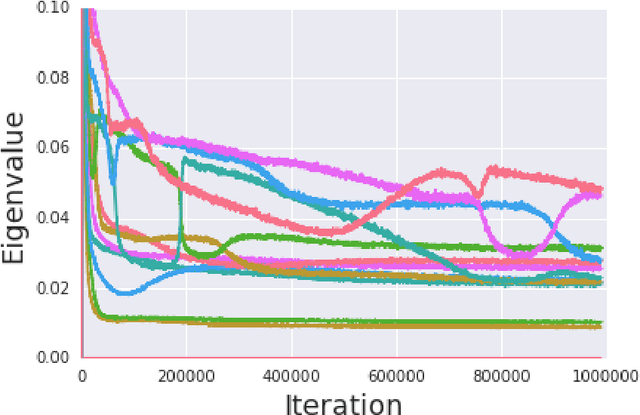

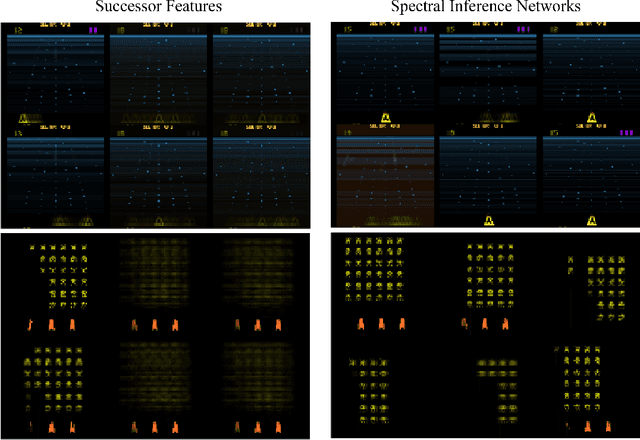

Abstract: We present Spectral Inference Networks, a framework for learning eigenfunctions of linear operators by stochastic optimization. Spectral Inference Networks generalize Slow Feature Analysis to generic symmetric operators, and are closely related to Variational Monte Carlo methods from computational physics. As such, they can be a powerful tool for unsupervised representation learning from video or pairs of data. We derive a training algorithm for Spectral Inference Networks that addresses the bias in the gradients due to finite batch size and allows for online learning of multiple eigenfunctions. We show results of training Spectral Inference Networks on problems in quantum mechanics and feature learning for videos on synthetic datasets as well as the Arcade Learning Environment. Our results demonstrate that Spectral Inference Networks accurately recover eigenfunctions of linear operators, can discover interpretable representations from video and find meaningful subgoals in reinforcement learning environments.

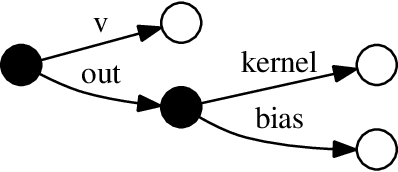

TensorFlow: Large-Scale Machine Learning on Heterogeneous Distributed Systems

Mar 16, 2016

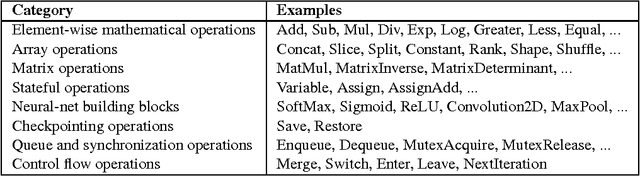

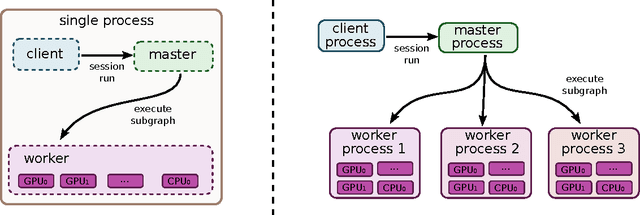

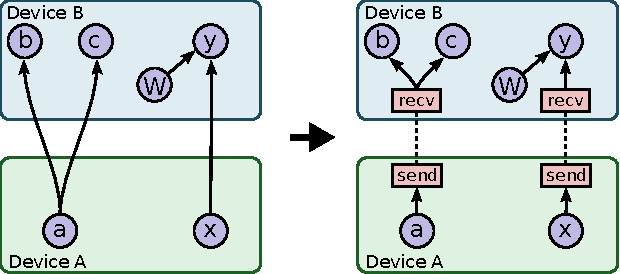

Abstract: TensorFlow is an interface for expressing machine learning algorithms, and an implementation for executing such algorithms. A computation expressed using TensorFlow can be executed with little or no change on a wide variety of heterogeneous systems, ranging from mobile devices such as phones and tablets up to large-scale distributed systems of hundreds of machines and thousands of computational devices such as GPU cards. The system is flexible and can be used to express a wide variety of algorithms, including training and inference algorithms for deep neural network models, and it has been used for conducting research and for deploying machine learning systems into production across more than a dozen areas of computer science and other fields, including speech recognition, computer vision, robotics, information retrieval, natural language processing, geographic information extraction, and computational drug discovery. This paper describes the TensorFlow interface and an implementation of that interface that we have built at Google. The TensorFlow API and a reference implementation were released as an open-source package under the Apache 2.0 license in November 2015 and are available at www.tensorflow.org.
