Arpan Pal

TCS Research, TATA Consultancy Services, Kolkata, India

Photometric Redshift Estimation Using Scaled Ensemble Learning

Jan 12, 2026

Abstract:The development of state-of-the-art telescopic systems capable of performing expansive sky surveys, such as the Sloan Digital Sky Survey, Euclid, and the Rubin Observatory's Legacy Survey of Space and Time (LSST), has significantly advanced efforts to refine cosmological models. These advances offer deeper insight into persistent challenges in astrophysics and our understanding of the Universe's evolution. A critical component of this progress is the reliable estimation of photometric redshifts (Pz). To improve the precision and efficiency of such estimations, the application of machine learning (ML) techniques to large-scale astronomical datasets has become essential. This study presents a new ensemble-based ML framework aimed at predicting Pz for faint galaxies and higher redshift ranges, relying solely on optical (grizy) photometric data. The proposed architecture integrates several learning algorithms, including gradient boosting machine, extreme gradient boosting, k-nearest neighbors, and artificial neural networks, within a scaled ensemble structure. By using bagged input data, the ensemble approach delivers improved predictive performance compared to stand-alone models. The framework demonstrates consistent accuracy in estimating redshifts, maintaining strong performance up to z ~ 4. The model is validated using publicly available data from the Hyper Suprime-Cam Strategic Survey Program by the Subaru Telescope. Our results show marked improvements in the precision and reliability of Pz estimation. Furthermore, this approach closely adheres to, and in certain instances exceeds, the benchmarks specified in the LSST Science Requirements Document. Evaluation metrics include catastrophic outliers, bias, and rms.

* 10 pages, 2 figures, 3 tables
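
The abstract names three evaluation metrics but does not define them. As a rough illustration only, the sketch below uses a convention that is common in photometric redshift work: the normalized residual e_z = (z_phot - z_spec) / (1 + z_spec), with bias as its mean, rms as its root mean square, and a catastrophic outlier counted when |e_z| exceeds a fixed cut. The function name `photoz_metrics` and the default cut of 0.15 are assumptions made for this example, not values taken from the paper.

```python
import math
from statistics import fmean


def photoz_metrics(z_spec, z_phot, outlier_cut=0.15):
    """Summarize photo-z quality (illustrative definitions, not the paper's).

    z_spec: reference (spectroscopic) redshifts
    z_phot: predicted photometric redshifts
    outlier_cut: assumed threshold on |e_z| for a catastrophic outlier
    """
    # Normalized residual for each galaxy.
    e_z = [(zp - zs) / (1.0 + zs) for zs, zp in zip(z_spec, z_phot)]
    return {
        "bias": fmean(e_z),
        "rms": math.sqrt(fmean(e * e for e in e_z)),
        "outlier_fraction": fmean(
            1.0 if abs(e) > outlier_cut else 0.0 for e in e_z
        ),
    }


if __name__ == "__main__":
    print(photoz_metrics([0.0, 1.0, 1.0, 3.0], [0.0, 1.0, 2.0, 3.0]))
```

Other definitions are also in use (for example, a cut that scales with the scatter), so the cut should be matched to whichever requirement is being checked.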

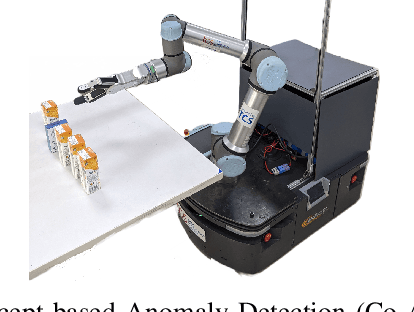

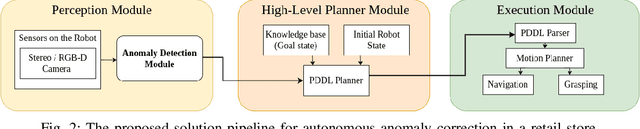

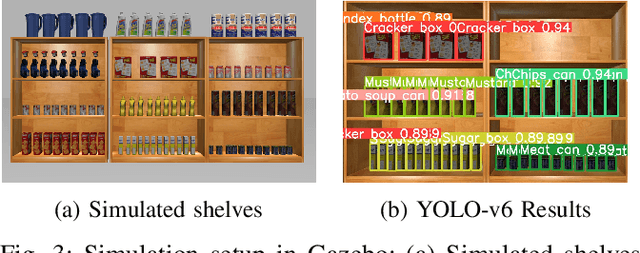

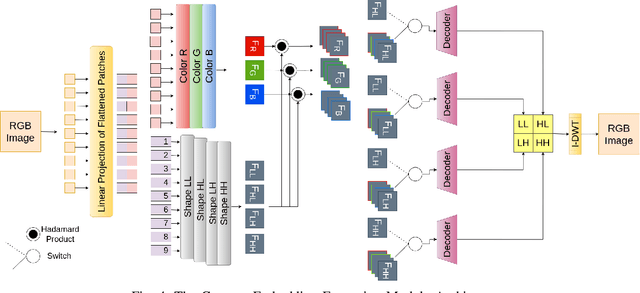

Concept-based Anomaly Detection in Retail Stores for Automatic Correction using Mobile Robots

Oct 21, 2023

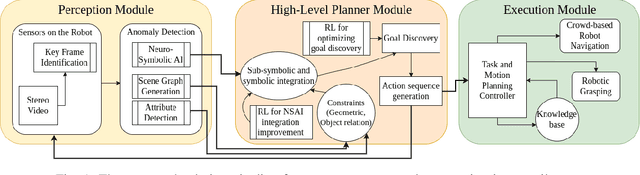

Abstract:Tracking of inventory and rearrangement of misplaced items are some of the most labor-intensive tasks in a retail environment. While there have been attempts at using vision-based techniques for these tasks, they mostly use planogram compliance for detection of any anomalies, a technique that has been found lacking in robustness and scalability. Moreover, existing systems rely on human intervention to perform corrective actions after detection. In this paper, we present Co-AD, a Concept-based Anomaly Detection approach using a Vision Transformer (ViT) that is able to flag misplaced objects without using a prior knowledge base such as a planogram. It uses an auto-encoder architecture followed by outlier detection in the latent space. Co-AD has a peak success rate of 89.90% on anomaly detection image sets of retail objects drawn from the RP2K dataset, compared to 80.81% on the best-performing baseline of a standard ViT auto-encoder. To demonstrate its utility, we describe a robotic mobile manipulation pipeline to autonomously correct the anomalies flagged by Co-AD. This work is ultimately aimed towards developing autonomous mobile robot solutions that reduce the need for human intervention in retail store management.
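
The abstract describes outlier detection in an auto-encoder's latent space without saying which detector is used. The sketch below is one simple possibility, offered purely as an illustration: it flags a latent vector when its distance from the per-dimension median centre exceeds `k` times the median distance. The function name `flag_latent_outliers`, the distance heuristic, and the default `k = 3.0` are all assumptions for this example and are not Co-AD's actual method.

```python
import math
from statistics import median


def flag_latent_outliers(latents, k=3.0):
    """Return one boolean per latent vector: True if it looks anomalous.

    latents: list of equal-length sequences of floats (encoder outputs)
    k: assumed multiplier on the median distance used as the cutoff
    """
    dims = range(len(latents[0]))
    # Robust centre: the median of each latent dimension.
    centre = [median(v[d] for v in latents) for d in dims]
    # Euclidean distance of every vector from that centre.
    dists = [math.dist(v, centre) for v in latents]
    cutoff = k * median(dists)
    return [d > cutoff for d in dists]


if __name__ == "__main__":
    shelf = [[0.0, 0.0], [0.1, 0.0], [0.0, 0.1],
             [-0.1, 0.0], [0.0, -0.1], [5.0, 5.0]]
    print(flag_latent_outliers(shelf))
```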

DOST -- Domain Obedient Self-supervised Training for Multi Label Classification with Noisy Labels

Aug 09, 2023

Abstract:The enormous demand for annotated data brought forth by deep learning techniques has been accompanied by the problem of annotation noise. Although this issue has been widely discussed in machine learning literature, it has been relatively unexplored in the context of "multi-label classification" (MLC) tasks which feature more complicated kinds of noise. Additionally, when the domain in question has certain logical constraints, noisy annotations often exacerbate their violations, making such a system unacceptable to an expert. This paper studies the effect of label noise on domain rule violation incidents in the MLC task, and incorporates domain rules into our learning algorithm to mitigate the effect of noise. We propose the Domain Obedient Self-supervised Training (DOST) paradigm which not only makes deep learning models more aligned to domain rules, but also improves learning performance in key metrics and minimizes the effect of annotation noise. This novel approach uses domain guidance to detect offending annotations and deter rule-violating predictions in a self-supervised manner, thus making it more "data efficient" and domain compliant. Empirical studies, performed over two large scale multi-label classification datasets, demonstrate that our method results in improvement across the board, and often entirely counteracts the effect of noise.

Challenges in Applying Robotics to Retail Store Management

Aug 18, 2022

Abstract:An autonomous retail store management system entails inventory tracking, store monitoring, and anomaly correction. Recent attempts at autonomous retail store management have faced challenges primarily in perception for anomaly detection, as well as new challenges arising in mobile manipulation for executing anomaly correction. Advances in each of these areas along with system integration are necessary for a scalable solution in this domain.

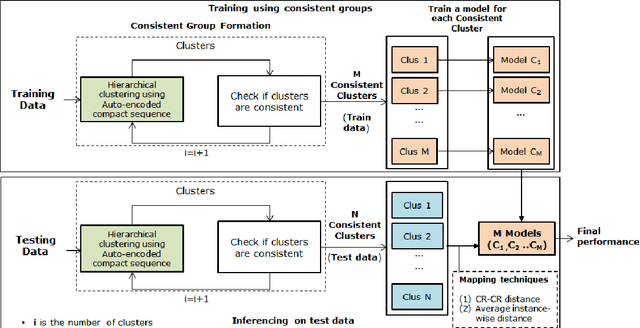

Unsupervised Driving Behavior Analysis using Representation Learning and Exploiting Group-based Training

May 12, 2022

Abstract:Driving behavior monitoring plays a crucial role in managing road safety and decreasing the risk of traffic accidents. Driving behavior is affected by multiple factors such as vehicle characteristics, road types, and traffic but, most importantly, by the driving patterns of individuals. The current work performs a robust driving pattern analysis by capturing variations in driving patterns. It forms consistent groups by learning a compressed representation of time series (Auto Encoded Compact Sequence) using a multi-layer seq-2-seq autoencoder and exploiting hierarchical clustering, along with recommending the choice of best distance measure. Consistent groups aid in identifying variations in the driving patterns of individuals captured in the dataset. These groups are generated for both train and hidden test data. The consistent groups formed using train data are exploited for training multiple instances of the classifier. The best distance measure obtained is used to select the best train-test pair of consistent groups. We have experimented on the publicly available UAH-DriveSet dataset, considering the signals captured from IMU sensors (accelerometer and gyroscope) for classifying driving behavior. We observe that the proposed method significantly outperforms the benchmark performance.

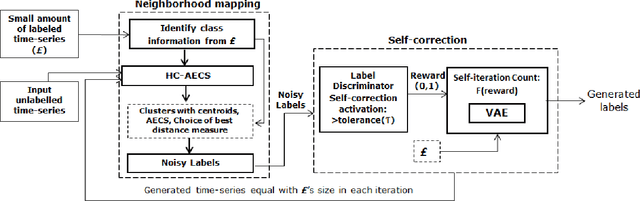

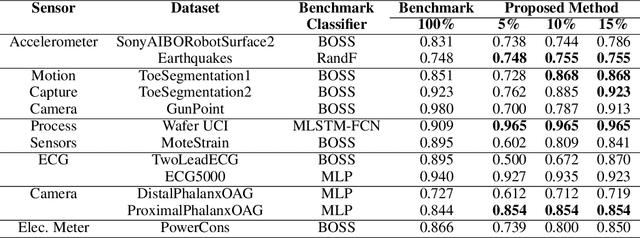

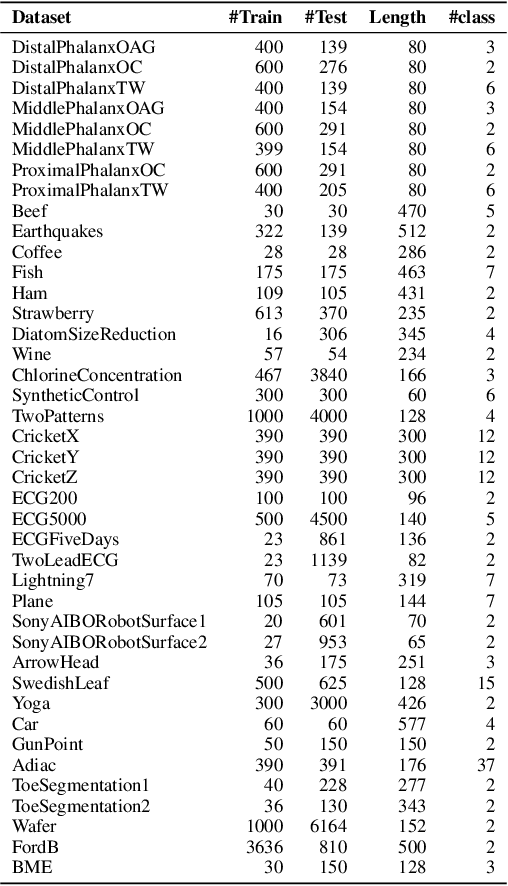

Automated Label Generation for Time Series Classification with Representation Learning: Reduction of Label Cost for Training

Jul 12, 2021

Abstract:Time-series generated by end-users, edge devices, and different wearables are mostly unlabelled. We propose a method to auto-generate labels for unlabelled time-series, exploiting very few representative labelled time-series. Our method is based on representation learning using Auto Encoded Compact Sequence (AECS) with a choice of best distance measure. It performs self-correction in iterations by learning latent structure, and synthetically boosts representative time-series using a Variational-Auto-Encoder (VAE) to improve the quality of labels. We have experimented with public real-world univariate and multivariate time-series from the UCR and UCI archives, taken from different application domains. Experimental results demonstrate that the proposed method comes very close to the performance achieved by fully supervised classification. The proposed method not only produces close to benchmark results but also outperforms the benchmark in some cases.

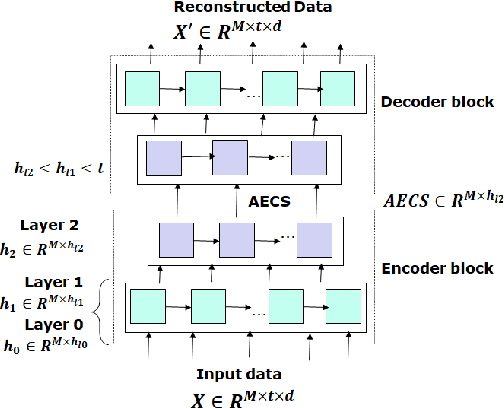

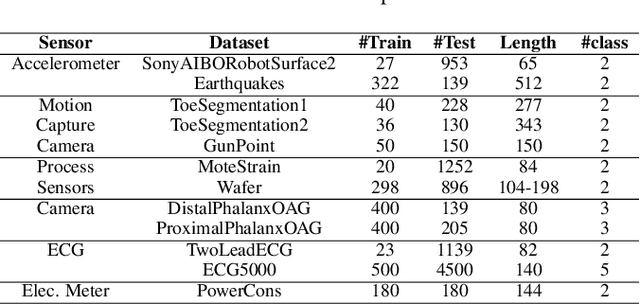

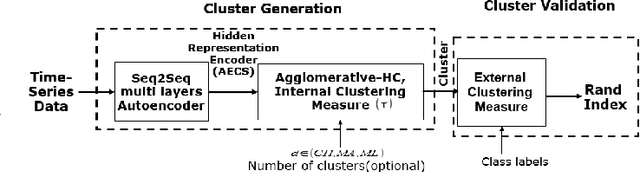

Hierarchical Clustering using Auto-encoded Compact Representation for Time-series Analysis

Jan 11, 2021

Abstract:Obtaining a robust time-series clustering with the best choice of distance measure and an appropriate representation is always a challenge. We propose a novel mechanism to identify clusters by combining a learned compact representation of time-series, Auto Encoded Compact Sequence (AECS), with a hierarchical clustering approach. The proposed algorithm aims to address the large computing time of hierarchical clustering, since the learned latent representation AECS is much shorter than the original time-series, while at the same time enhancing its performance. Our algorithm exploits a Recurrent Neural Network (RNN) based undercomplete Sequence to Sequence (seq2seq) autoencoder and agglomerative hierarchical clustering with a choice of best distance measure to recommend the best clustering. Our scheme selects the best distance measure and corresponding clustering for both univariate and multivariate time-series. We have experimented with real-world time-series from the UCR and UCI archives, taken from diverse application domains like health, smart-city, manufacturing etc. Experimental results show that the proposed method not only produces close to benchmark results but also in some cases outperforms the benchmark.

Enabling human-like task identification from natural conversation

Aug 29, 2020

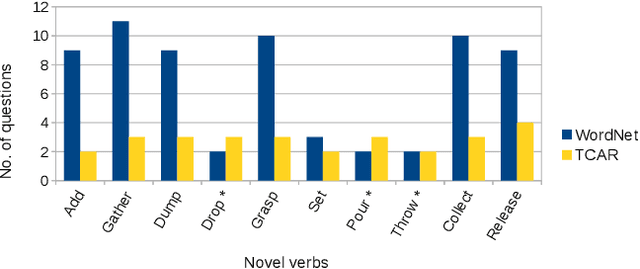

Abstract:A robot as a coworker or a cohabitant is becoming mainstream day-by-day with the development of low-cost sophisticated hardware. However, an accompanying software stack that can aid the usability of the robotic hardware remains the bottleneck of the process, especially if the robot is not dedicated to a single job. Programming a multi-purpose robot requires an on-the-fly mission scheduling capability that involves task identification and plan generation. The problem dimension increases if the robot accepts tasks from a human in natural language. Though recent advances in NLP and planner development can solve a variety of complex problems, their amalgamation for a dynamic robotic task handler is used in a limited scope. Specifically, the problem of formulating a planning problem from natural language instructions has not been studied in detail. In this work, we provide a non-trivial method to combine an NLP engine and a planner such that a robot can successfully identify tasks and all the relevant parameters and generate an accurate plan for the task. Additionally, some mechanism is required to resolve ambiguity or missing pieces of information in a natural language instruction. Thus, we also develop a dialogue strategy that aims to gather additional information with minimal question-answer iterations and only when it is necessary. This work makes a significant stride towards enabling a human-like task understanding capability in a robot.

Demo: Edge-centric Telepresence Avatar Robot for Geographically Distributed Environment

Jul 25, 2020

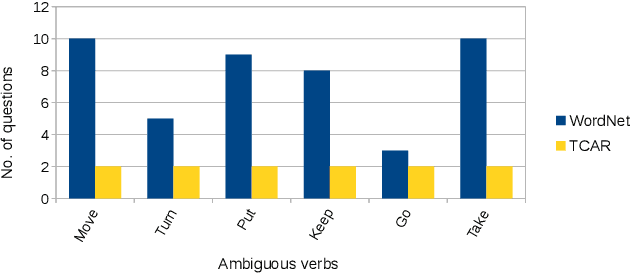

Abstract:Using a robotic platform for telepresence applications has gained paramount importance in this decade. Scenarios such as remote meetings, group discussions, and presentations/talks in seminars and conferences get much attention in this regard. Though some robotic platforms exist for such telepresence applications, they lack efficacy in communication and interaction between the remote person and the avatar robot deployed in another geographic location. Also, such existing systems are often cloud-centric, which adds to their network overhead. In this demo, we develop and test a framework that brings the best of both cloud and edge-centric systems together along with a newly designed communication protocol. Our solution improves on the existing systems in terms of robustness and efficacy in communication for a geographically distributed environment.

I can attend a meeting too! Towards a human-like telepresence avatar robot to attend meeting on your behalf

Jun 28, 2020

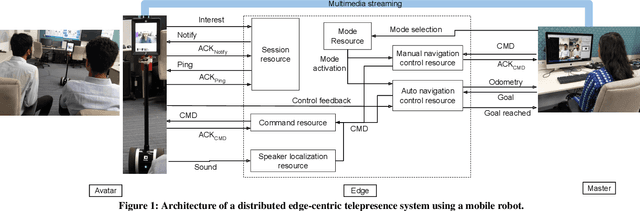

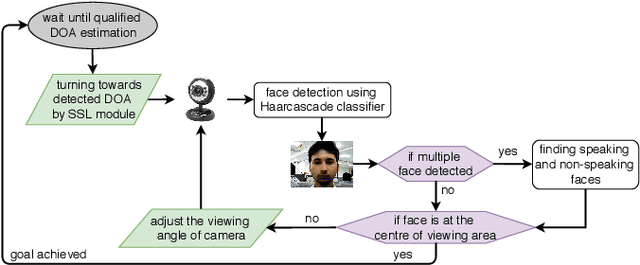

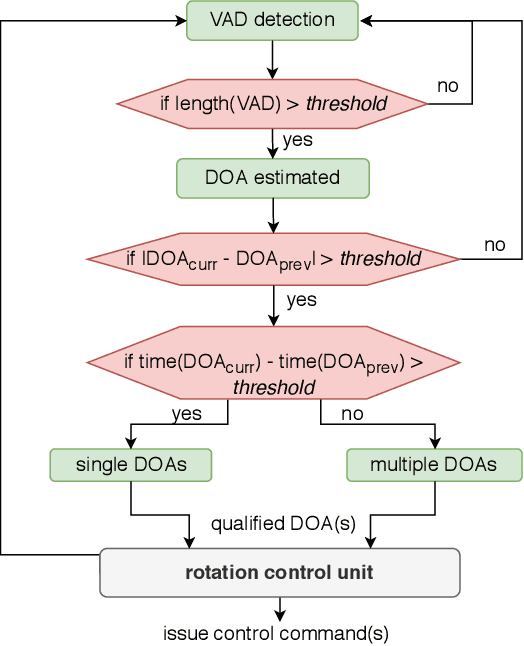

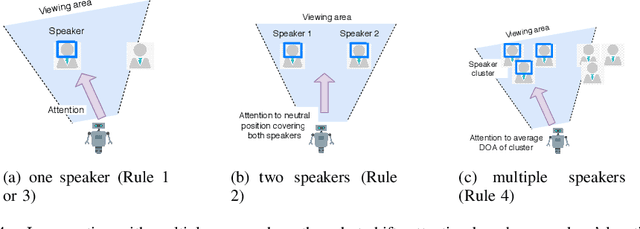

Abstract:Telepresence robots are used in various forms in various use-cases that help to avoid physical human presence at the scene of action. In this work, we focus on a telepresence robot that can be used to attend a meeting remotely with a group of people. Unlike a one-to-one meeting, participants in a group meeting can be located in different parts of the room, especially in an informal setup. As a result, not all of them may be within the viewing angle of the robot, a.k.a. the remote participant. In such a case, to provide a better meeting experience, the robot should localize the speaker and bring the speaker to the center of the viewing angle. Though sound source localization can easily be done using a microphone-array, bringing the speaker or set of speakers into the viewing angle is not a trivial task. First of all, the robot should react only to a human voice, not to random noises. Secondly, if there are multiple speakers, whom should the robot face, or should it rotate continuously with every new speaker? Lastly, most robotic platforms are resource-constrained, and to achieve a real-time response, i.e., avoiding network delay, all the algorithms should be implemented within the robot itself. This article presents a study and implementation of an attention shifting scheme in a telepresence meeting scenario which best suits the needs and expectations of the collocated and remote attendees. We define a policy to decide when a robot should rotate and by how much, based on real-time speaker localization. Using a user satisfaction study, we show the efficacy and usability of our system in the meeting scenario. Moreover, our system can be easily adapted to other scenarios where multiple people are located.
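
The abstract states that a policy decides when the robot rotates and by how much, but gives no details. The sketch below is a hypothetical rule set written only to make the idea concrete: ignore non-human sounds, ignore very short utterances, stay put when the speaker is already inside the field of view, and otherwise turn by the speaker's bearing. The class name `RotationPolicy` and both threshold values are assumptions for this example, not the authors' policy.

```python
from dataclasses import dataclass


@dataclass
class RotationPolicy:
    half_fov_deg: float = 30.0  # assumed half-width of the camera's view
    min_speech_s: float = 1.5   # assumed minimum speech time before reacting

    def decide(self, is_human_voice, speaker_angle_deg, speech_duration_s):
        """Return the rotation in degrees; 0.0 means the robot stays put."""
        if not is_human_voice:
            return 0.0  # react to voices only, not to random noises
        if speech_duration_s < self.min_speech_s:
            return 0.0  # avoid turning for brief interjections
        # Wrap the bearing into [-180, 180) so the robot takes the short way.
        angle = (speaker_angle_deg + 180.0) % 360.0 - 180.0
        if abs(angle) <= self.half_fov_deg:
            return 0.0  # speaker is already in view
        return angle


if __name__ == "__main__":
    policy = RotationPolicy()
    print(policy.decide(True, 270.0, 2.0))
```

The minimum speech duration is one simple way to keep the robot from rotating continuously with every new speaker; a real system would likely need a richer rule for overlapping speakers.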
