Antoine J.-P. Tixier

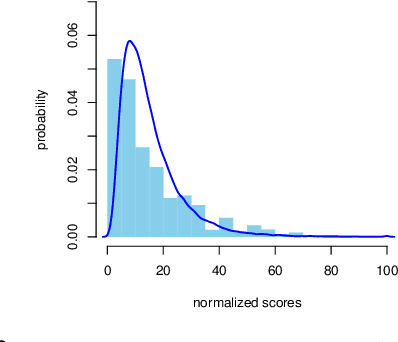

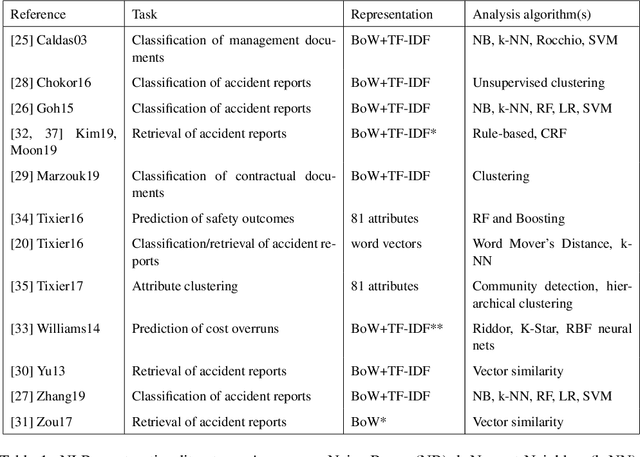

Safer Together: Machine Learning Models Trained on Shared Accident Datasets Predict Construction Injuries Better than Company-Specific Models

Jan 09, 2023

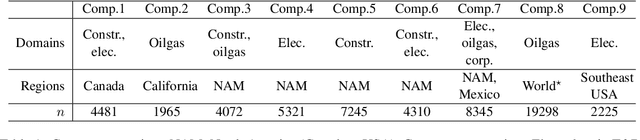

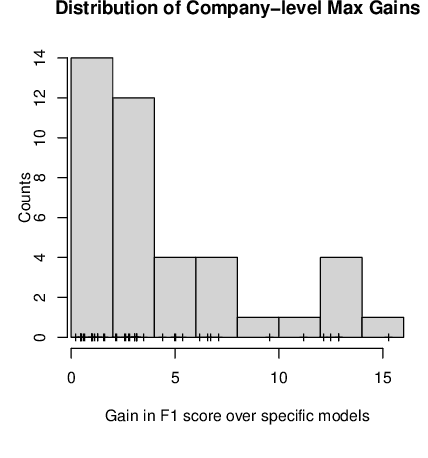

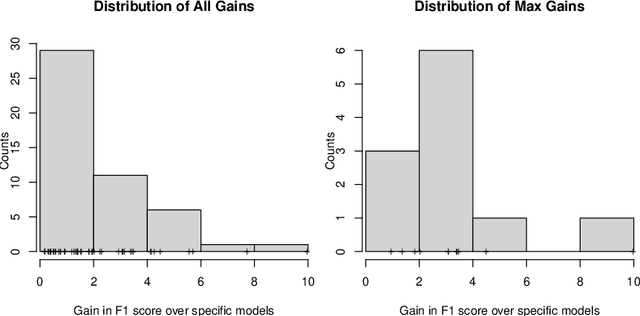

Abstract: In this study, we capitalized on a collective dataset repository of 57k accidents from 9 companies belonging to 3 domains and tested whether models trained on multiple datasets (generic models) predicted safety outcomes better than the company-specific models. We experimented with full generic models (trained on all data), per-domain generic models (construction, electric T&D, oil & gas), and with ensembles of generic and specific models. Results are very positive, with generic models outperforming the company-specific models in most cases while also generating finer-grained, hence more useful, forecasts. Successful generic models remove the need to train company-specific models, saving a lot of time and resources, and give small companies, whose accident datasets are too limited to train their own models, access to safety outcome predictions. It may still, however, be advantageous to train specific models to get an extra boost in performance through ensembling with the generic models. Overall, by learning lessons from a pool of datasets whose accumulated experience far exceeds that of any single company, and making these lessons easily accessible in the form of simple forecasts, generic models tackle the holy grail of safety cross-organizational learning and dissemination in the construction industry.

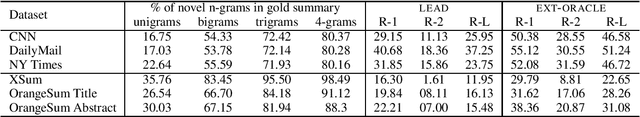

FrugalScore: Learning Cheaper, Lighter and Faster Evaluation Metrics for Automatic Text Generation

Oct 16, 2021

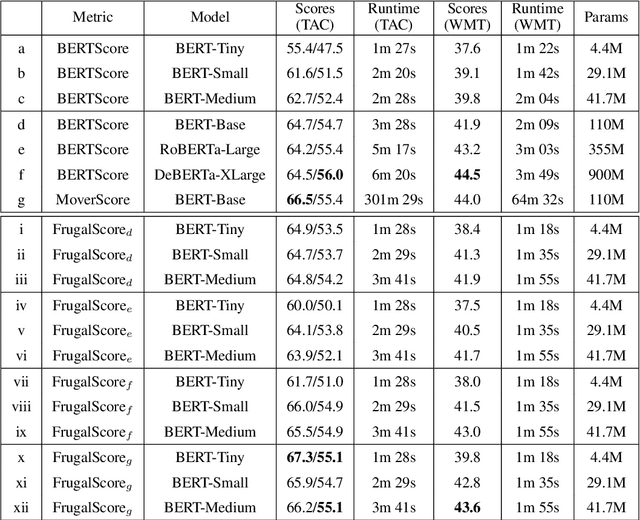

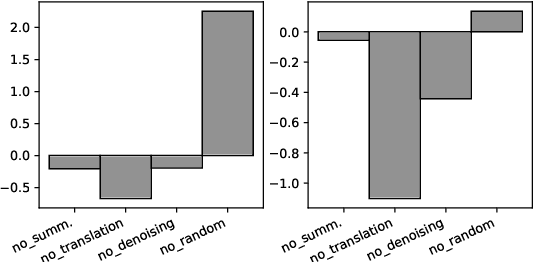

Abstract: Fast and reliable evaluation metrics are key to R&D progress. While traditional natural language generation metrics are fast, they are not very reliable. Conversely, new metrics based on large pretrained language models are much more reliable, but require significant computational resources. In this paper, we propose FrugalScore, an approach to learn a fixed, low-cost version of any expensive NLG metric, while retaining most of its original performance. Experiments with BERTScore and MoverScore on summarization and translation show that FrugalScore is on par with the original metrics (and sometimes better), while having several orders of magnitude fewer parameters and running several times faster. On average over all learned metrics, tasks, and variants, FrugalScore retains 96.8% of the performance, runs 24 times faster, and has 35 times fewer parameters than the original metrics. We make our trained metrics publicly available, to benefit the entire NLP community and in particular researchers and practitioners with limited resources.

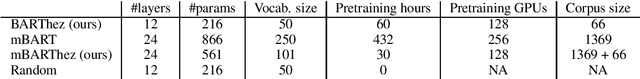

BARThez: a Skilled Pretrained French Sequence-to-Sequence Model

Oct 23, 2020

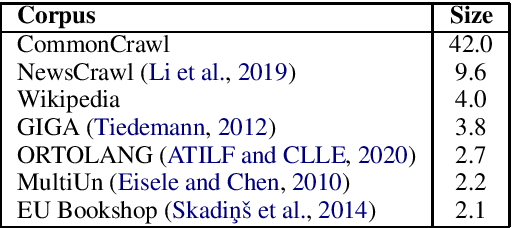

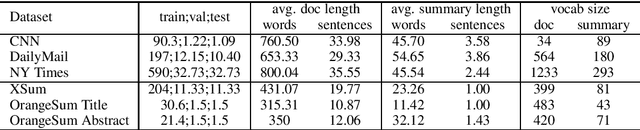

Abstract: Inductive transfer learning, enabled by self-supervised learning, has taken the entire Natural Language Processing (NLP) field by storm, with models such as BERT and BART setting new state of the art on countless natural language understanding tasks. While there are some notable exceptions, most of the available models and research have been developed for the English language. In this work, we introduce BARThez, the first BART model for the French language (to the best of our knowledge). BARThez was pretrained on a very large monolingual French corpus from past research that we adapted to suit BART's perturbation schemes. Unlike already existing BERT-based French language models such as CamemBERT and FlauBERT, BARThez is particularly well-suited for generative tasks, since not only its encoder but also its decoder is pretrained. In addition to discriminative tasks from the FLUE benchmark, we evaluate BARThez on a novel summarization dataset, OrangeSum, that we release with this paper. We also continue the pretraining of an already pretrained multilingual BART on BARThez's corpus, and we show that the resulting model, which we call mBARTHez, provides a significant boost over vanilla BARThez, and is on par with or outperforms CamemBERT and FlauBERT.

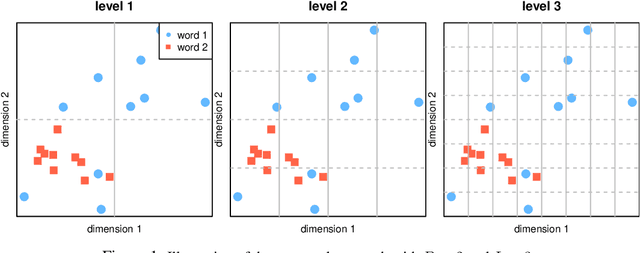

Unsupervised Word Polysemy Quantification with Multiresolution Grids of Contextual Embeddings

Mar 23, 2020

Abstract: The number of senses of a given word, or polysemy, is a very subjective notion, which varies widely across annotators and resources. We propose a novel method to estimate polysemy, based on simple geometry in the contextual embedding space. Our approach is fully unsupervised and purely data-driven. We show through rigorous experiments that our rankings are well correlated (with strong statistical significance) with 6 different rankings derived from famous human-constructed resources such as WordNet, OntoNotes, Oxford, Wikipedia, etc., for 6 different standard metrics. We also visualize and analyze the correlation between the human rankings. A valuable by-product of our method is the ability to sample, at no extra cost, sentences containing different senses of a given word. Finally, the fully unsupervised nature of our method makes it applicable to any language. Code and data are publicly available at https://github.com/ksipos/polysemy-assessment.

Message Passing Attention Networks for Document Understanding

Aug 17, 2019

Abstract: Most graph neural networks can be described in terms of message passing, vertex update, and readout functions. In this paper, we represent documents as word co-occurrence networks and propose an application of the message passing framework to NLP, the Message Passing Attention network for Document understanding (MPAD). We also propose several hierarchical variants of MPAD. Experiments conducted on 10 standard text classification datasets show that our architectures are competitive with the state of the art. Ablation studies reveal further insights about the impact of the different components on performance. Code and data are publicly available.
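To make the document representation concrete, here is a minimal sketch of building a word co-occurrence network with a sliding window. It is an illustration, not the MPAD code: the function name `cooccurrence_network`, the window size, the undirected edges, and the count-based weights are all assumptions chosen for simplicity.

```python
from collections import defaultdict


def cooccurrence_network(tokens, window=2):
    """Build an undirected, weighted word co-occurrence network.

    Two distinct words are linked when they appear within `window`
    positions of each other; the edge weight counts how often that happens.
    (Hypothetical helper: window size and weighting are assumptions.)
    """
    edges = defaultdict(int)
    for i, word in enumerate(tokens):
        # Look ahead at most `window` tokens to avoid double counting pairs.
        for j in range(i + 1, min(i + window + 1, len(tokens))):
            other = tokens[j]
            if other != word:
                edges[tuple(sorted((word, other)))] += 1
    return dict(edges)


tokens = "the cat sat on the mat".split()
graph = cooccurrence_network(tokens, window=2)
```

Each key of `graph` is an alphabetically sorted word pair, so the structure can be handed to a graph library or turned into an adjacency matrix before any message passing is applied.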

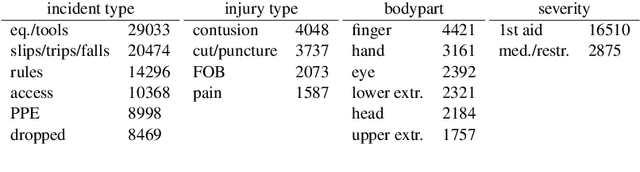

AI Predicts Independent Construction Safety Outcomes from Universal Attributes

Aug 16, 2019

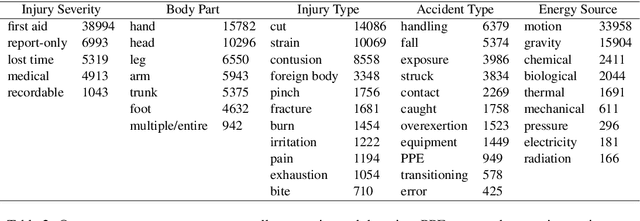

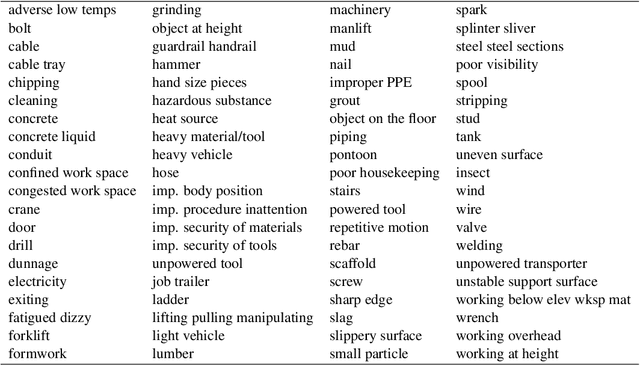

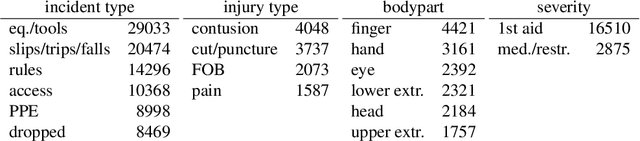

Abstract: This paper significantly improves on, and completes the validation of, the approach proposed in "Application of Machine Learning to Construction Injury Prediction" (Tixier et al. 2016 [1]). As in the original study, we use NLP to extract fundamental attributes from raw incident reports, and machine learning models are trained to predict safety outcomes (here, these outcomes are injury severity, injury type, body part impacted, and incident type). However, in this study, safety outcomes were not extracted via NLP but are independent (human annotations), eliminating any potential source of artificial correlation between predictors and predictands. Results show that attributes are still highly predictive, confirming the validity of the original study. Other improvements brought by the current study include the use of (1) a much larger dataset, (2) two new models (XGBoost and linear SVM), (3) model stacking, (4) a more straightforward experimental setup with more appropriate performance metrics, and (5) an analysis of per-category attribute importance scores. Finally, the injury severity outcome is well predicted, which was not the case in the original study. This is a significant advancement.

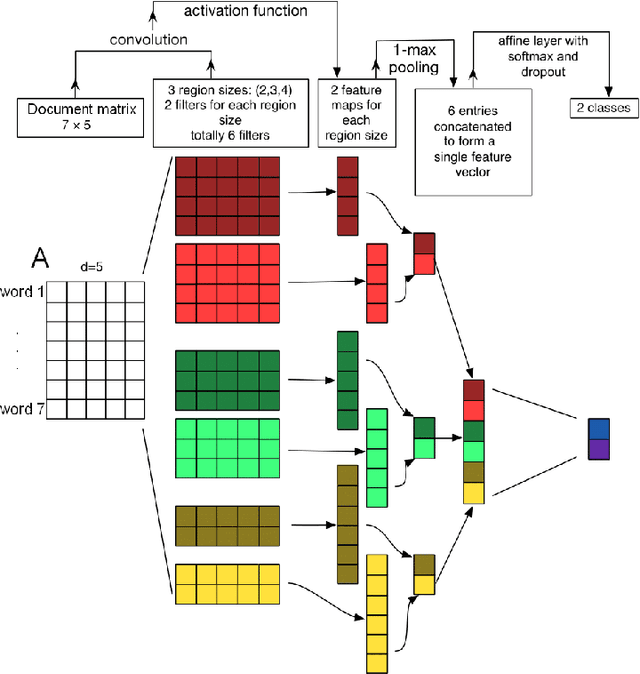

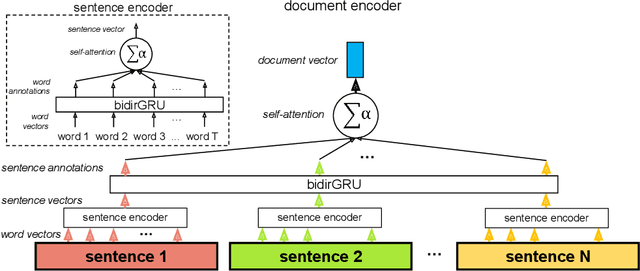

Automatically Learning Construction Injury Precursors from Text

Jul 26, 2019

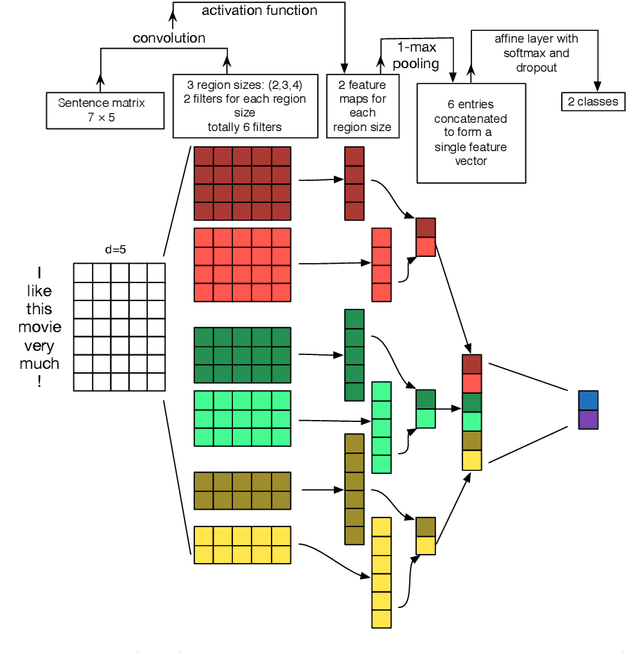

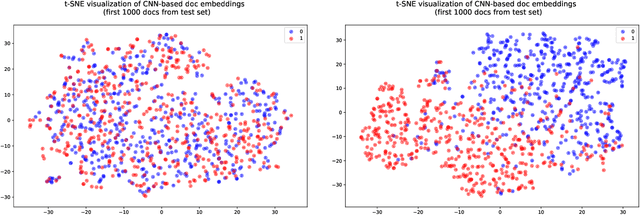

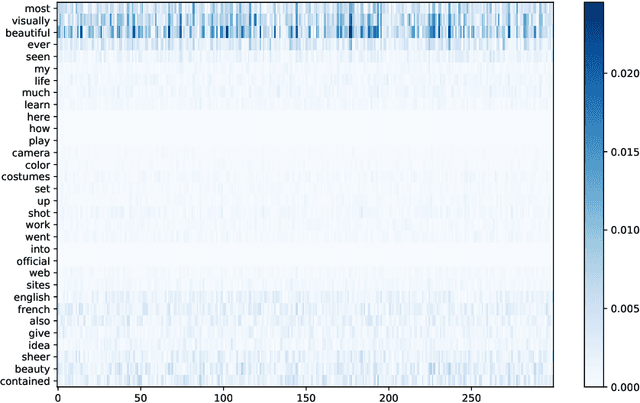

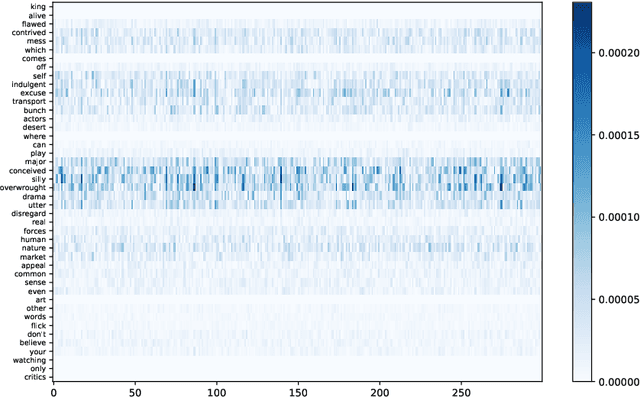

Abstract: In light of the increasing availability of digitally recorded safety reports in the construction industry, it is important to develop methods to exploit these data to improve our understanding of safety incidents and ability to learn from them. In this study, we compare several approaches to automatically learn injury precursors from raw construction accident reports. More precisely, we experiment with two state-of-the-art deep learning architectures for Natural Language Processing (NLP), Convolutional Neural Networks (CNN) and Hierarchical Attention Networks (HAN), and with the established Term Frequency - Inverse Document Frequency representation (TF-IDF) + Support Vector Machine (SVM) approach. For each model, we provide a method to identify (after training) the textual patterns that are, on average, the most predictive of each safety outcome. We show that among those pieces of text, valid injury precursors can be found. The proposed methods can also be used by the user to visualize and understand the models' predictions.
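As a rough illustration of the TF-IDF representation mentioned above, the sketch below computes term weights for a few toy reports using only the standard library. The `tfidf` function, the toy reports, and the particular weighting variant (relative term frequency times unsmoothed log inverse document frequency) are assumptions; the study's exact weighting scheme and preprocessing are not given here. Documents are assumed to be non-empty token lists.

```python
import math
from collections import Counter


def tfidf(documents):
    """Return one {term: weight} dict per tokenized, non-empty document.

    Weight = (term count / document length) * log(N / document frequency).
    (Hypothetical helper; one of several common TF-IDF variants.)
    """
    n_docs = len(documents)
    doc_freq = Counter()
    for doc in documents:
        doc_freq.update(set(doc))  # count each term once per document
    vectors = []
    for doc in documents:
        counts = Counter(doc)
        vectors.append({
            term: (count / len(doc)) * math.log(n_docs / doc_freq[term])
            for term, count in counts.items()
        })
    return vectors


# Toy, made-up reports used purely for illustration.
reports = [
    "worker fell from ladder".split(),
    "worker struck by pipe".split(),
    "ladder slipped on wet floor".split(),
]
vectors = tfidf(reports)
```

Terms that appear in fewer reports receive larger weights, which is what lets a linear classifier such as an SVM focus on distinctive wording. The resulting sparse vectors would be the classifier's input.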

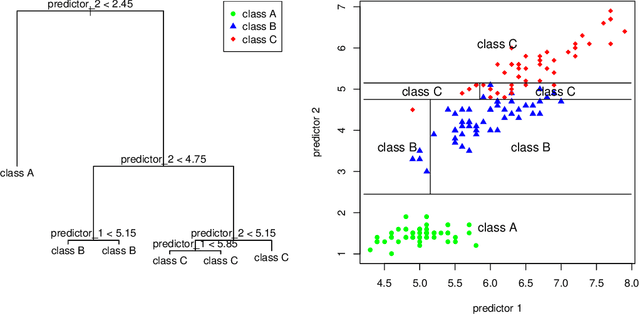

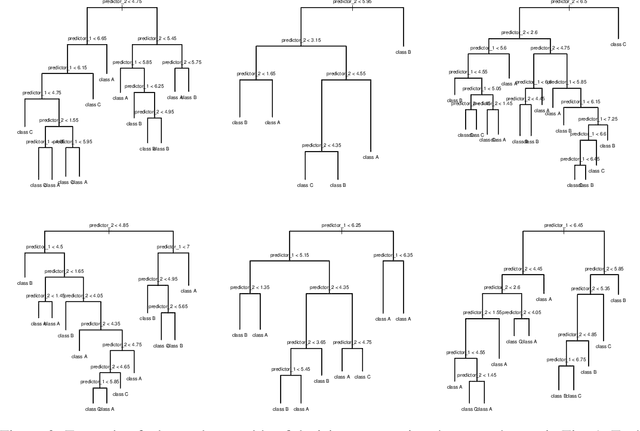

Perturb and Combine to Identify Influential Spreaders in Real-World Networks

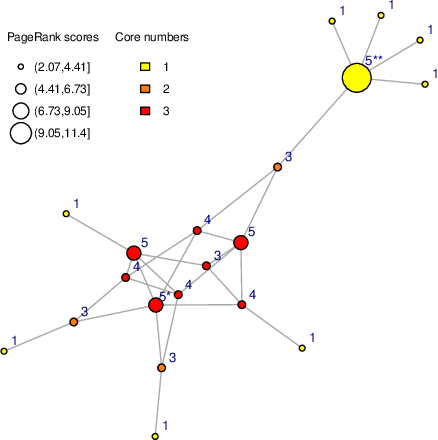

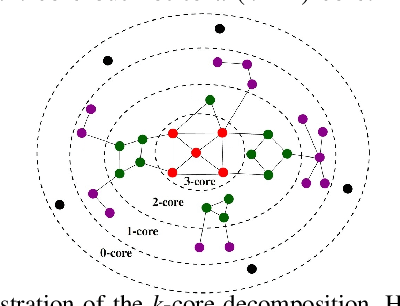

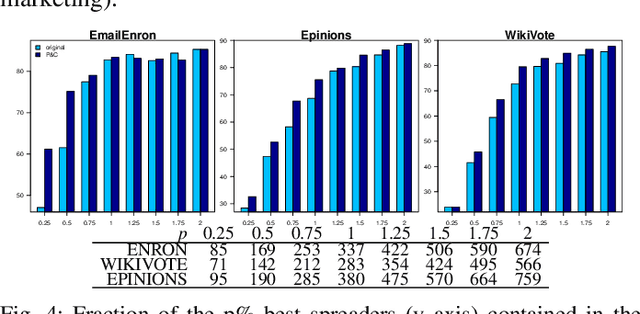

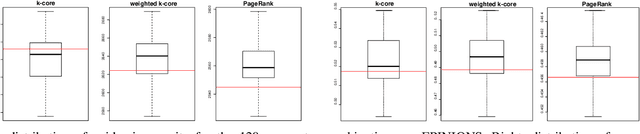

Sep 04, 2018

Abstract: Recent research has shown that graph degeneracy algorithms, which decompose a network into a hierarchy of nested subgraphs of decreasing size and increasing density, are very effective at detecting the good spreaders in a network. However, it is also known that degeneracy-based decompositions of a graph are unstable to small perturbations of the network structure. In Machine Learning, the performance of unstable classification and regression methods, such as fully-grown decision trees, can be greatly improved by using Perturb and Combine (P&C) strategies such as bagging (bootstrap aggregating). Therefore, we propose a P&C procedure for networks that (1) creates many perturbed versions of a given graph, (2) applies a node scoring function separately to each graph (such as a degeneracy-based one), and (3) combines the results. We conduct real-world experiments on the tasks of identifying influential spreaders in large social networks, and influential words (keywords) in small word co-occurrence networks. We use the k-core, generalized k-core, and PageRank algorithms as our vertex scoring functions. In each case, using the aggregated scores brings significant improvements compared to using the scores computed on the original graphs. Finally, a bias-variance analysis suggests that our P&C procedure works mainly by reducing bias, and that therefore, it should be capable of improving the performance of all vertex scoring functions, not only unstable ones.
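The three steps of the procedure (perturb, score, combine) can be sketched as follows. This is a simplified illustration under stated assumptions, not the paper's implementation: the perturbation only deletes edges at random, the combination is a plain average, and the names `core_numbers`, `perturb`, and `perturb_and_combine` are hypothetical. Node labels are assumed to be mutually comparable (for example, integers).

```python
import random
from collections import defaultdict


def core_numbers(adj):
    """k-core decomposition by repeatedly peeling a minimum-degree vertex."""
    degree = {v: len(nbrs) for v, nbrs in adj.items()}
    remaining = set(adj)
    core, k = {}, 0
    while remaining:
        v = min(remaining, key=lambda u: degree[u])
        k = max(k, degree[v])  # core numbers never decrease while peeling
        core[v] = k
        remaining.remove(v)
        for u in adj[v]:
            if u in remaining:
                degree[u] -= 1
    return core


def perturb(adj, p_delete, rng):
    """Copy the graph, dropping each edge with probability p_delete (assumed scheme)."""
    new_adj = {v: set() for v in adj}
    for v in sorted(adj):
        for u in sorted(adj[v]):
            if v < u and rng.random() >= p_delete:  # visit each edge once
                new_adj[v].add(u)
                new_adj[u].add(v)
    return new_adj


def perturb_and_combine(adj, score, n_rounds=50, p_delete=0.1, seed=0):
    """Average a vertex scoring function over many perturbed graphs."""
    rng = random.Random(seed)
    totals = defaultdict(float)
    for _ in range(n_rounds):
        for v, s in score(perturb(adj, p_delete, rng)).items():
            totals[v] += s
    return {v: totals[v] / n_rounds for v in adj}


# Toy graph: a triangle (0, 1, 2) with a pendant vertex 3 attached to 0.
adj = {0: {1, 2, 3}, 1: {0, 2}, 2: {0, 1}, 3: {0}}
scores = perturb_and_combine(adj, core_numbers, n_rounds=100, p_delete=0.2, seed=42)
```

Because `score` is passed in as a function, any other vertex scoring function that returns a `{vertex: score}` dict could be swapped in for `core_numbers`.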

Notes on Deep Learning for NLP

Aug 30, 2018

Abstract: My notes on Deep Learning for NLP.

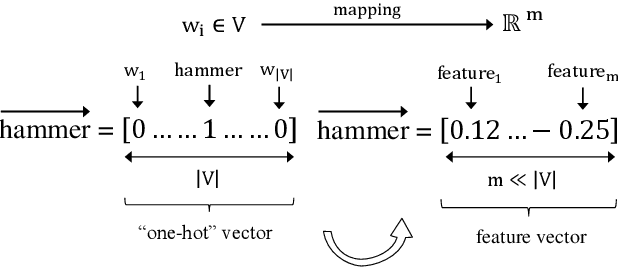

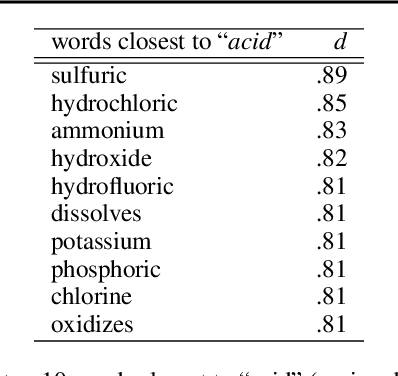

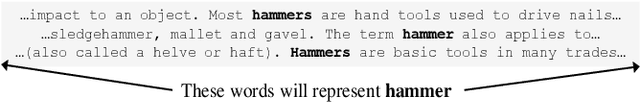

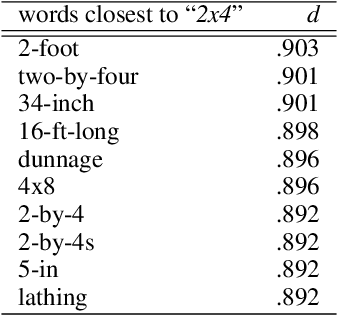

Word Embeddings for the Construction Domain

Oct 28, 2016

Abstract: We introduce word vectors for the construction domain. Our vectors were obtained by running word2vec on an 11M-word corpus that we created from scratch by leveraging freely-accessible online sources of construction-related text. We first explore the embedding space and show that our vectors capture meaningful construction-specific concepts. We then evaluate the performance of our vectors against that of ones trained on a 100B-word corpus (Google News) within the framework of an injury report classification task. Without any parameter tuning, our embeddings give competitive results, and outperform the Google News vectors in many cases. Using a keyword-based compression of the reports also leads to a significant speed-up with only a limited loss in performance. We publicly release our corpus and the data set we created for the classification task, in the hope that they will be used by future studies for benchmarking and building on our work.
