Anthony Scanlan

Descriptor: Distance-Annotated Traffic Perception Question Answering (DTPQA)

Nov 17, 2025

Abstract:The remarkable progress of Vision-Language Models (VLMs) on a variety of tasks has raised interest in their application to automated driving. However, for these models to be trusted in such a safety-critical domain, they must first possess robust perception capabilities, i.e., they must be capable of understanding a traffic scene, which can often be highly complex, with many things happening simultaneously. Moreover, since critical objects and agents in traffic scenes are often at long distances, we require systems with not only strong perception capabilities at close distances (up to 20 meters), but also at long (30+ meters) range. Therefore, it is important to evaluate the perception capabilities of these models in isolation from other skills like reasoning or advanced world knowledge. Distance-Annotated Traffic Perception Question Answering (DTPQA) is a Visual Question Answering (VQA) benchmark designed specifically for this purpose: it can be used to evaluate the perception systems of VLMs in traffic scenarios using trivial yet crucial questions relevant to driving decisions. It consists of two parts: a synthetic benchmark (DTP-Synthetic) created using a simulator, and a real-world benchmark (DTP-Real) built on top of existing images of real traffic scenes. Additionally, DTPQA includes distance annotations, i.e., how far the object in question is from the camera. More specifically, each DTPQA sample consists of (at least): (a) an image, (b) a question, (c) the ground truth answer, and (d) the distance of the object in question, enabling analysis of how VLM performance degrades with increasing object distance. In this article, we provide the dataset itself along with the Python scripts used to create it, which can be used to generate additional data of the same kind.
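The distance annotation described in the abstract makes it possible to report accuracy per distance range. The following is a minimal sketch of such an analysis, not the authors' evaluation script. The field names `answer` and `distance_m`, the 10 m bin width, and the case-insensitive exact-match scoring are all assumptions made for illustration; the actual DTPQA file layout may differ.

```python
from collections import defaultdict


def accuracy_by_distance(samples, predictions, bin_width_m=10):
    """Group answers into fixed-width distance bins and return accuracy per bin.

    `samples` is a list of dicts with hypothetical keys "answer" and
    "distance_m"; `predictions` is a list of model answers in the same order.
    """
    totals = defaultdict(int)
    correct = defaultdict(int)
    for sample, prediction in zip(samples, predictions):
        # Lower edge of the bin that contains this object's distance.
        low = int(sample["distance_m"] // bin_width_m) * bin_width_m
        label = f"{low}-{low + bin_width_m} m"
        totals[label] += 1
        # Assumed scoring rule: case-insensitive exact match.
        if prediction.strip().lower() == sample["answer"].strip().lower():
            correct[label] += 1
    return {label: correct[label] / totals[label] for label in totals}


if __name__ == "__main__":
    demo_samples = [
        {"answer": "Yes", "distance_m": 5.0},
        {"answer": "No", "distance_m": 15.0},
        {"answer": "Yes", "distance_m": 35.0},
        {"answer": "No", "distance_m": 38.0},
    ]
    demo_predictions = ["yes", "No", "no", "No"]
    print(accuracy_by_distance(demo_samples, demo_predictions))
```

Plotting the returned values against the bin labels gives a simple view of how accuracy changes as the queried object moves farther from the camera.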

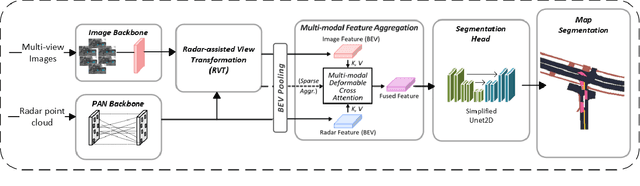

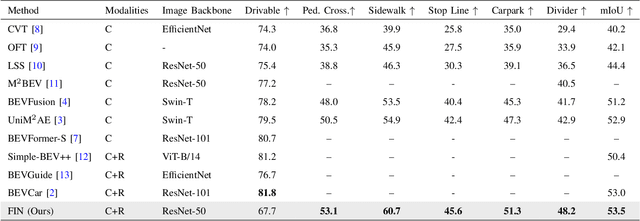

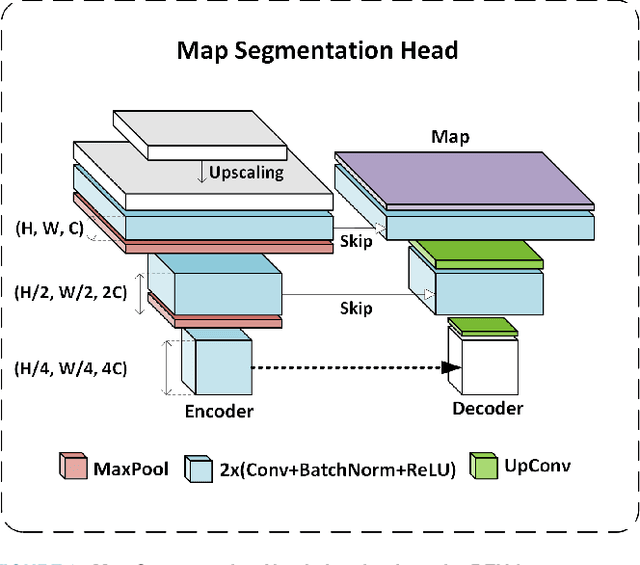

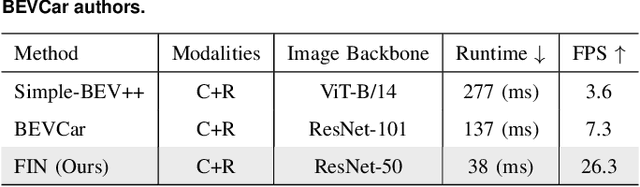

FIN: Fast Inference Network for Map Segmentation

Oct 01, 2025

Abstract:Multi-sensor fusion in autonomous vehicles is becoming more common to offer a more robust alternative for several perception tasks. This need arises from the unique contribution of each sensor in collecting data: camera-radar fusion offers a cost-effective solution by combining rich semantic information from cameras with accurate distance measurements from radar, without incurring excessive financial costs or overwhelming data processing requirements. Map segmentation is a critical task for enabling effective vehicle behaviour in its environment, yet it continues to face significant challenges in achieving high accuracy and meeting real-time performance requirements. Therefore, this work presents a novel and efficient map segmentation architecture, using cameras and radars, in the bird's-eye-view (BEV) space. Our model introduces a real-time map segmentation architecture considering aspects such as high accuracy, per-class balancing, and inference time. To accomplish this, we use an advanced loss set together with a new lightweight head to improve the perception results. Our results show that, with these modifications, our approach achieves results comparable to large models, reaching 53.5 mIoU, while also setting a new benchmark for inference time, improving it by 260% over the strongest baseline models.

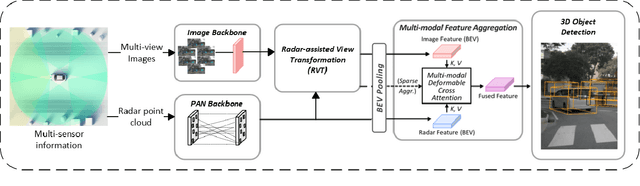

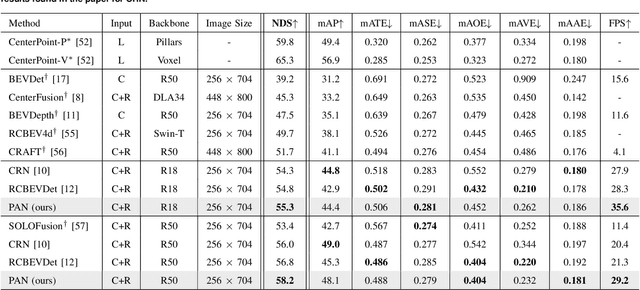

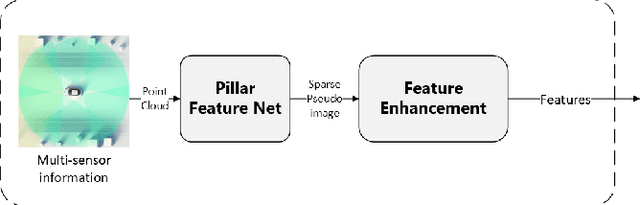

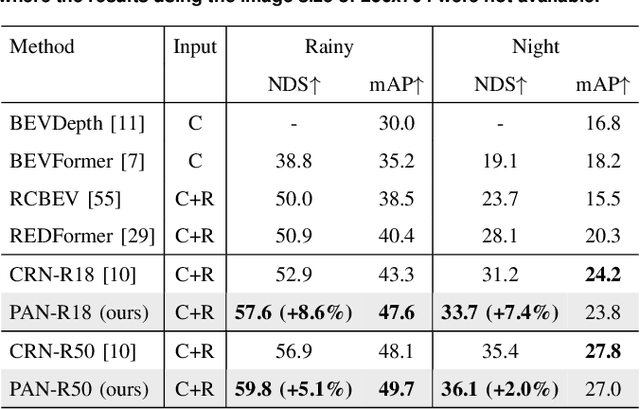

PAN: Pillars-Attention-Based Network for 3D Object Detection

Sep 19, 2025

Abstract:Camera-radar fusion offers a robust and low-cost alternative to Camera-lidar fusion for the 3D object detection task in real-time under adverse weather and lighting conditions. However, currently, in the literature, it is possible to find few works focusing on this modality and, most importantly, developing new architectures to explore the advantages of the radar point cloud, such as accurate distance estimation and speed information. Therefore, this work presents a novel and efficient 3D object detection algorithm using cameras and radars in the bird's-eye-view (BEV). Our algorithm exploits the advantages of radar before fusing the features into a detection head. A new backbone is introduced, which maps the radar pillar features into an embedded dimension. A self-attention mechanism allows the backbone to model the dependencies between the radar points. We are using a simplified convolutional layer to replace the FPN-based convolutional layers used in the PointPillars-based architectures with the main goal of reducing inference time. Our results show that with this modification, our approach achieves the new state-of-the-art in the 3D object detection problem, reaching 58.2 of the NDS metric for the use of ResNet-50, while also setting a new benchmark for inference time on the nuScenes dataset for the same category.

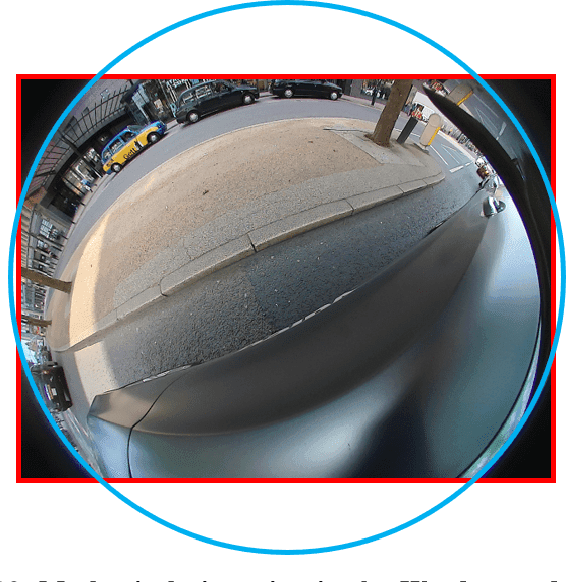

Deformable Convolution Based Road Scene Semantic Segmentation of Fisheye Images in Autonomous Driving

Jul 23, 2024

Abstract:This study investigates the effectiveness of modern Deformable Convolutional Neural Networks (DCNNs) for semantic segmentation tasks, particularly in autonomous driving scenarios with fisheye images. These images, providing a wide field of view, pose unique challenges for extracting spatial and geometric information due to dynamic changes in object attributes. Our experiments focus on segmenting the WoodScape fisheye image dataset into ten distinct classes, assessing the Deformable Networks' ability to capture intricate spatial relationships and improve segmentation accuracy. Additionally, we explore different loss functions to address class imbalance issues and compare the performance of conventional CNN architectures with Deformable Convolution-based CNNs, including Vanilla U-Net and Residual U-Net architectures. The significant improvement in mIoU score resulting from integrating Deformable CNNs demonstrates their effectiveness in handling the geometric distortions present in fisheye imagery, exceeding the performance of traditional CNN architectures. This underscores the significant role of Deformable convolution in enhancing semantic segmentation performance for fisheye imagery.
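For readers unfamiliar with the mIoU score mentioned above, the sketch below shows one common way to compute it: the intersection-over-union of each class, averaged over the classes that occur in either the prediction or the ground truth. It is an illustration only and not the evaluation code used in the paper. The function name `mean_iou`, the flat label-list input, and the choice to skip absent classes are assumptions; benchmark implementations typically accumulate counts over a whole dataset and may treat absent or ignored classes differently.

```python
def mean_iou(predicted, target, num_classes):
    """Mean intersection-over-union for two equally long lists of class ids."""
    ious = []
    for class_id in range(num_classes):
        # Pixels labelled class_id in both prediction and ground truth.
        intersection = sum(
            1 for p, t in zip(predicted, target) if p == class_id and t == class_id
        )
        # Pixels labelled class_id in at least one of the two.
        union = sum(
            1 for p, t in zip(predicted, target) if p == class_id or t == class_id
        )
        if union > 0:  # Assumed convention: skip classes absent from both.
            ious.append(intersection / union)
    return sum(ious) / len(ious) if ious else float("nan")


if __name__ == "__main__":
    # Four pixels, flattened; class 2 never appears and is skipped.
    print(mean_iou([0, 0, 1, 1], [0, 1, 1, 1], num_classes=3))
```

In the small example, class 0 has IoU 1/2 and class 1 has IoU 2/3, so the mean is 7/12.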

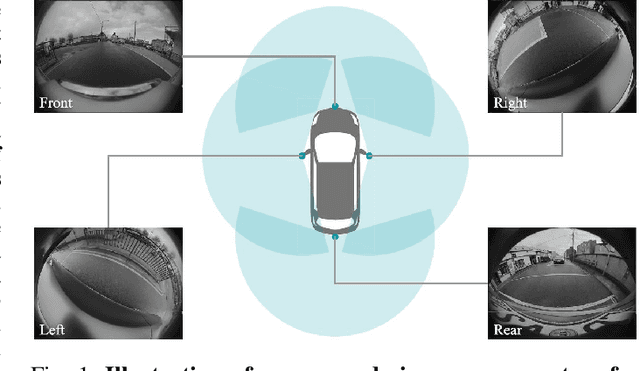

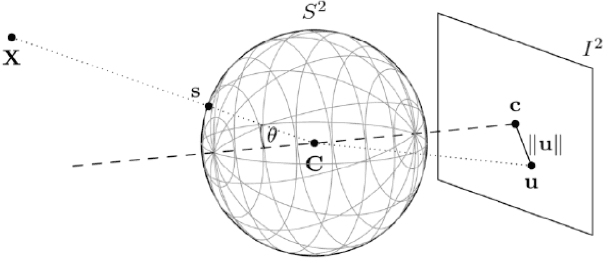

Surround-View Fisheye Optics in Computer Vision and Simulation: Survey and Challenges

Feb 21, 2024

Abstract:In this paper, we provide a survey on automotive surround-view fisheye optics, with an emphasis on the impact of optical artifacts on computer vision tasks in autonomous driving and ADAS. The automotive industry has advanced in applying state-of-the-art computer vision to enhance road safety and provide automated driving functionality. When using camera systems on vehicles, there is a particular need for a wide field of view to capture the entire vehicle's surroundings, in areas such as low-speed maneuvering, automated parking, and cocoon sensing. However, one crucial challenge in surround-view cameras is the strong optical aberrations of the fisheye camera, which is an area that has received little attention in the literature. Additionally, a comprehensive dataset is needed for testing safety-critical scenarios in vehicle automation. The industry has turned to simulation as a cost-effective strategy for creating synthetic datasets with surround-view camera imagery. We examine different simulation methods (such as model-driven and data-driven simulations) and discuss the simulators' ability (or lack thereof) to model real-world optical performance. Overall, this paper highlights the optical aberrations in automotive fisheye datasets, and the limitations of optical reality in simulated fisheye datasets, with a focus on computer vision in surround-view optical systems.

Measuring Natural Scenes SFR of Automotive Fisheye Cameras

Jan 10, 2024

Abstract:The Modulation Transfer Function (MTF) is an important image quality metric typically used in the automotive domain. However, despite the fact that optical quality has an impact on the performance of computer vision in vehicle automation, for many public datasets, this metric is unknown. Additionally, wide field-of-view (FOV) cameras have become increasingly popular, particularly for low-speed vehicle automation applications. To investigate image quality in datasets, this paper proposes an adaptation of the Natural Scenes Spatial Frequency Response (NS-SFR) algorithm to suit cameras with a wide field-of-view.
