Letizia Mariotti

PAN: Pillars-Attention-Based Network for 3D Object Detection

Sep 19, 2025

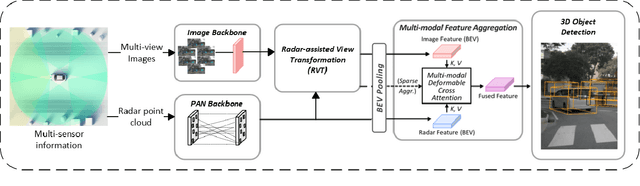

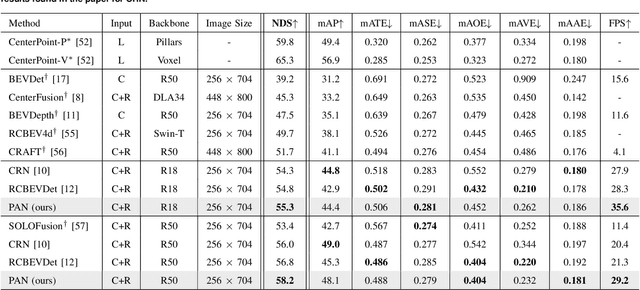

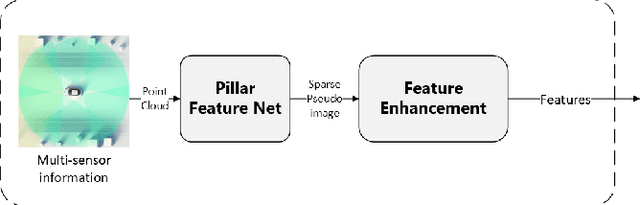

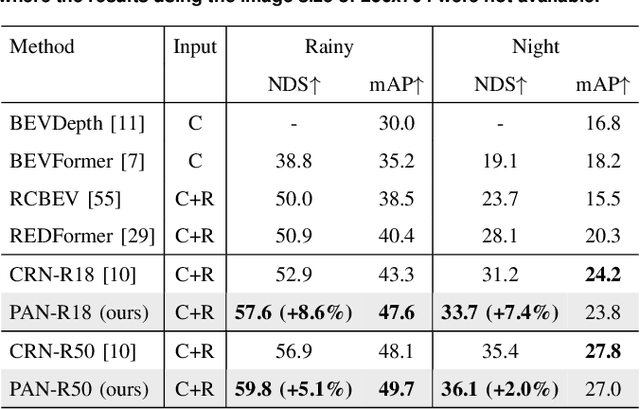

Abstract:Camera-radar fusion offers a robust and low-cost alternative to camera-lidar fusion for real-time 3D object detection under adverse weather and lighting conditions. However, few works in the literature focus on this modality and, most importantly, on developing new architectures that exploit the advantages of the radar point cloud, such as accurate distance estimation and speed information. Therefore, this work presents a novel and efficient 3D object detection algorithm using cameras and radars in the bird's-eye-view (BEV). Our algorithm exploits the advantages of radar before fusing the features into a detection head. A new backbone is introduced, which maps the radar pillar features into an embedded dimension. A self-attention mechanism allows the backbone to model the dependencies between the radar points. We use a simplified convolutional layer to replace the FPN-based convolutional layers used in PointPillars-based architectures, with the main goal of reducing inference time. Our results show that, with this modification, our approach achieves a new state of the art in 3D object detection, reaching an NDS of 58.2 with ResNet-50, while also setting a new benchmark for inference time on the nuScenes dataset for the same category.
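
To make the self-attention step in the abstract concrete, here is a minimal sketch of scaled dot-product self-attention over a handful of pillar feature vectors. It is not the PAN backbone: the identity query/key/value projections, the feature values and the names `self_attention` and `pillars` are all assumptions chosen for illustration.

```python
import math

def softmax(row):
    # Subtract the maximum for numerical stability before exponentiating.
    m = max(row)
    exps = [math.exp(v - m) for v in row]
    total = sum(exps)
    return [e / total for e in exps]

def self_attention(features):
    """Scaled dot-product self-attention with identity Q/K/V projections.

    A learned backbone would apply trained linear maps first; they are
    omitted here (an assumption) so that only the attention step remains.
    """
    d = len(features[0])
    outputs, weights = [], []
    for q in features:
        # Similarity of this pillar to every pillar, scaled by sqrt(d).
        scores = [sum(a * b for a, b in zip(q, k)) / math.sqrt(d) for k in features]
        w = softmax(scores)
        weights.append(w)
        # Each output is the attention-weighted average of all pillar features.
        outputs.append([sum(wj * v[c] for wj, v in zip(w, features)) for c in range(d)])
    return outputs, weights

# Hypothetical 4-dimensional embeddings for three radar pillars.
pillars = [
    [1.0, 0.0, 0.5, 0.2],
    [0.9, 0.1, 0.4, 0.3],
    [0.0, 1.0, 0.0, 0.8],
]
attended, attn = self_attention(pillars)
```

In this toy input the first two pillars have similar features, so the first pillar attends more strongly to the second than to the third, which is the kind of dependency between radar points that the abstract refers to.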

Spherical formulation of geometric motion segmentation constraints in fisheye cameras

Apr 26, 2021

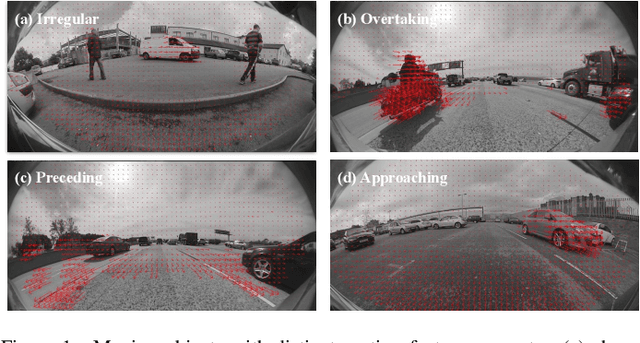

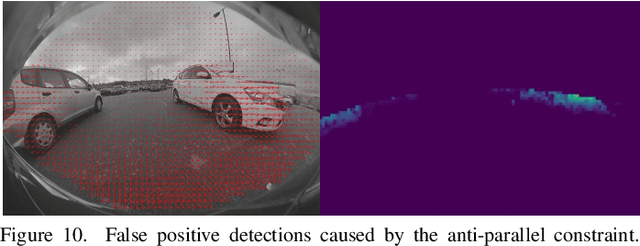

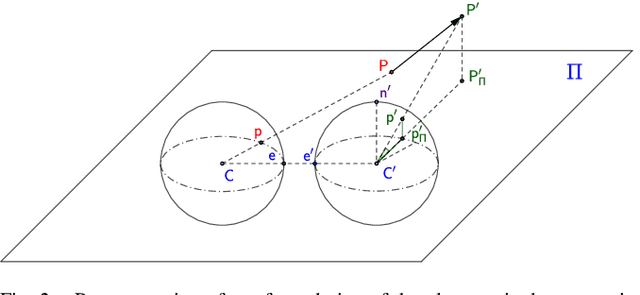

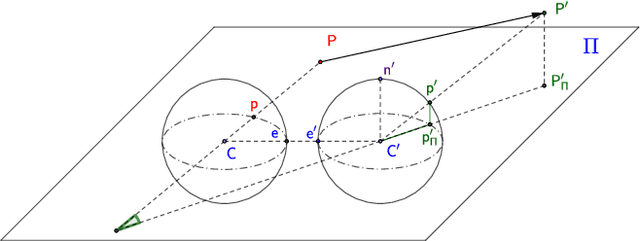

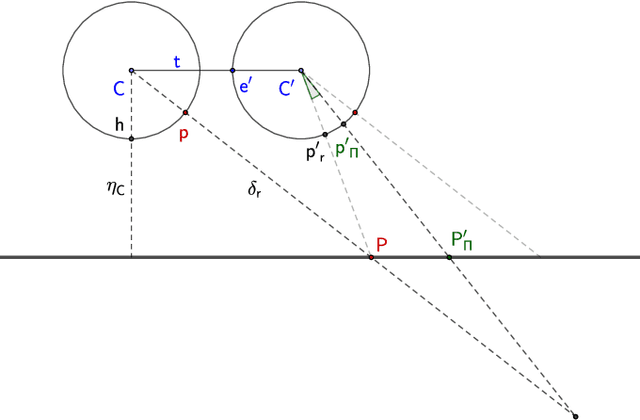

Abstract:We introduce a visual motion segmentation method employing spherical geometry for fisheye cameras and automated driving. Three commonly used geometric constraints in pin-hole imagery (the positive height, positive depth and epipolar constraints) are reformulated to spherical coordinates, making them invariant to specific camera configurations as long as the camera calibration is known. A fourth constraint, known as the anti-parallel constraint, is added to resolve motion-parallax ambiguity and to support the detection of moving objects undergoing parallel or near-parallel motion with respect to the host vehicle. A final constraint, known as the spherical three-view constraint, is described though not employed in our proposed algorithm. Results are presented and analyzed that demonstrate that the proposal is an effective motion segmentation approach for direct employment on fisheye imagery.

* arXiv admin note: text overlap with arXiv:2003.03262
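
The epipolar constraint mentioned in the abstract can be written directly on unit bearing vectors, which is what makes it usable beyond the pin-hole model. The sketch below is a textbook formulation, not the authors' implementation: it assumes the convention X2 = R X1 + t between the two camera frames, and the function names, points and motions are hypothetical.

```python
import math

def normalize(v):
    n = math.sqrt(sum(c * c for c in v))
    return [c / n for c in v]

def cross(a, b):
    return [a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0]]

def dot(a, b):
    return sum(x * y for x, y in zip(a, b))

def matvec(R, v):
    return [dot(row, v) for row in R]

def epipolar_residual(ray1, ray2, R, t):
    # ray2 . (t x R ray1): zero when both rays lie in one epipolar plane.
    return dot(ray2, cross(normalize(t), matvec(R, ray1)))

def observe(point_prev, point_curr, R, t):
    # Bearing vectors on the unit sphere for a point seen at two time steps.
    # Both positions are given in the first camera frame (assumed convention).
    ray1 = normalize(point_prev)
    ray2 = normalize([a + b for a, b in zip(matvec(R, point_curr), t)])
    return ray1, ray2

R = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]  # no rotation
t = [0.0, 0.0, -1.0]  # camera advances 1 m along +z, so points shift by -z

static = epipolar_residual(*observe([2.0, 1.0, 5.0], [2.0, 1.0, 5.0], R, t), R, t)
# A point more than 90 degrees off the optical axis, outside any pin-hole image.
wide = epipolar_residual(*observe([3.0, 0.5, -0.2], [3.0, 0.5, -0.2], R, t), R, t)
# An object crossing laterally violates the constraint.
crossing = epipolar_residual(*observe([2.0, 1.0, 5.0], [2.5, 1.0, 5.0], R, t), R, t)
# An object moving parallel to the ego-motion stays in its epipolar plane.
parallel = epipolar_residual(*observe([2.0, 1.0, 5.0], [2.0, 1.0, 5.8], R, t), R, t)
```

The static points give a residual of zero (up to rounding) and the crossing object does not, but the parallel-moving object also gives zero. That last case is the motion-parallax ambiguity that the epipolar constraint alone cannot resolve, which is why additional constraints are needed.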

Spherical formulation of moving object geometric constraints for monocular fisheye cameras

Mar 06, 2020

Abstract:In this paper, we introduce a moving object detection algorithm for fisheye cameras used in autonomous driving. We reformulate the three commonly used constraints in rectilinear images (epipolar, positive depth and positive height constraints) to spherical coordinates, which makes them invariant to the specific camera configuration once the calibration is known. One of the main challenging use cases in autonomous driving is detecting parallel-moving objects, which suffer from motion-parallax ambiguity. To alleviate this, we formulate an additional fourth constraint, called the anti-parallel constraint, which aids the detection of objects whose motion mirrors that of the ego-vehicle. We analyze the proposed algorithm in different scenarios and demonstrate that it works effectively when operating directly on fisheye images.

* 8 pages, 9 figures, 2 tables Conference ITSC 2019

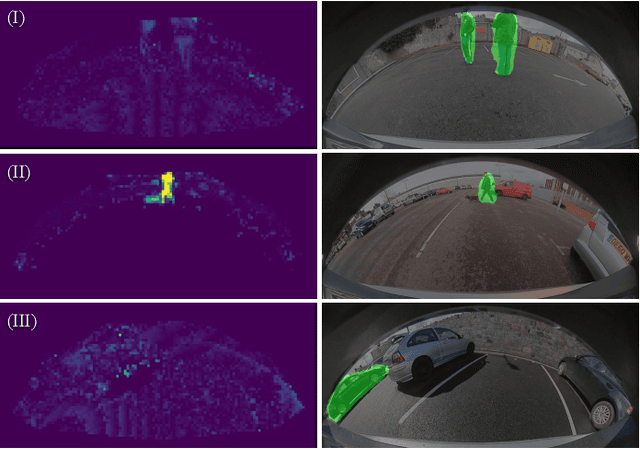

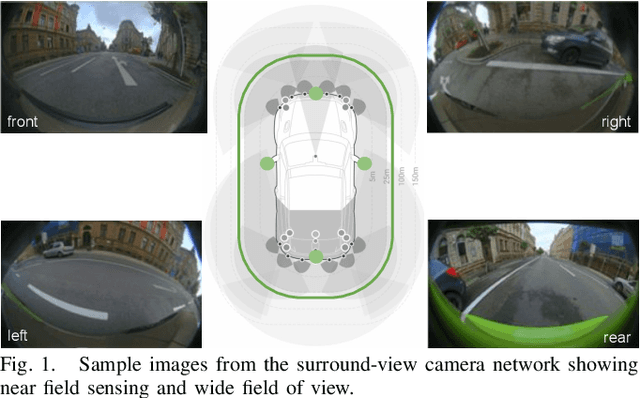

FisheyeMODNet: Moving Object detection on Surround-view Cameras for Autonomous Driving

Aug 30, 2019

Abstract:Moving Object Detection (MOD) is an important task for achieving robust autonomous driving. An autonomous vehicle has to estimate collision risk with other interacting objects in the environment and calculate an optimal trajectory. Collision risk is typically higher for moving objects than static ones due to the need to estimate the future states and poses of the objects for decision making. This is particularly important for near-range objects around the vehicle, which are typically detected by a fisheye surround-view system that captures a 360° view of the scene. In this work, we propose a CNN architecture for moving object detection using fisheye images that were captured in an autonomous driving environment. As motion geometry is highly non-linear and unique for fisheye cameras, we will make an improved version of the current dataset public to encourage further research. To target embedded deployment, we design a lightweight encoder sharing weights across sequential images. The proposed network runs at 15 fps on a 1 teraflops automotive embedded system at an accuracy of 40% IoU and 69.5% mIoU.
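
For readers unfamiliar with the two accuracy figures, the following sketch computes IoU for a "moving" class and a mean IoU over moving and background pixels on a tiny mask. The masks are made up, and treating mIoU as the average over exactly these two classes is an assumption, since the abstract does not define it.

```python
def iou(pred, target, cls):
    """Intersection over union for one class label on two label grids."""
    inter = union = 0
    for p_row, t_row in zip(pred, target):
        for p, t in zip(p_row, t_row):
            p_hit, t_hit = p == cls, t == cls
            inter += p_hit and t_hit   # pixel has the class in both grids
            union += p_hit or t_hit    # pixel has the class in either grid
    return inter / union if union else float("nan")

# Hypothetical 3x4 masks: 1 = moving object, 0 = background.
target = [
    [0, 0, 1, 1],
    [0, 0, 1, 1],
    [0, 0, 0, 0],
]
pred = [
    [0, 1, 1, 1],
    [0, 0, 1, 0],
    [0, 0, 0, 0],
]

moving_iou = iou(pred, target, 1)
background_iou = iou(pred, target, 0)
mean_iou = (moving_iou + background_iou) / 2
```

Because background usually covers most of the image, its IoU tends to be high, so a mean over both classes can sit well above the IoU of the moving class alone.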

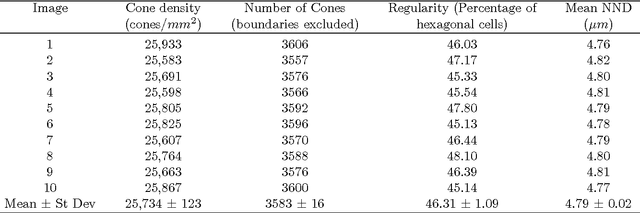

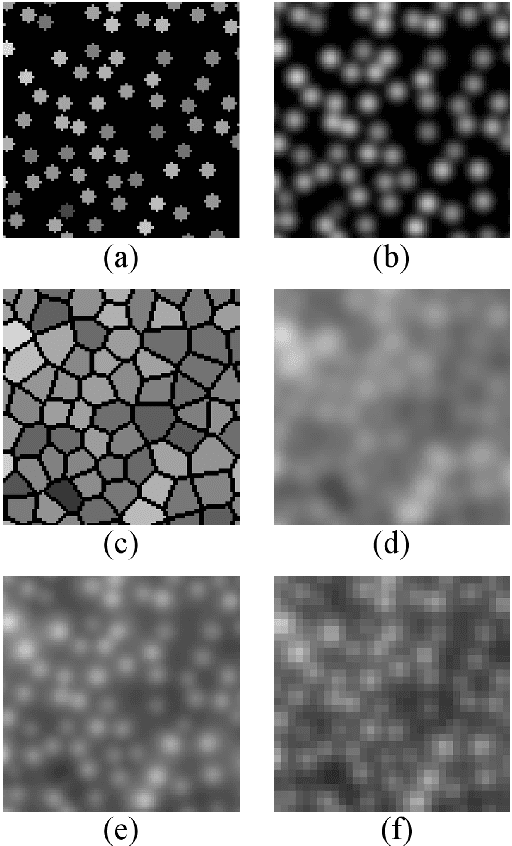

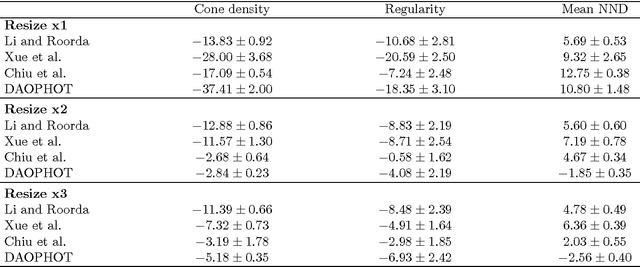

Performance Analysis of Cone Detection Algorithms

Feb 05, 2015

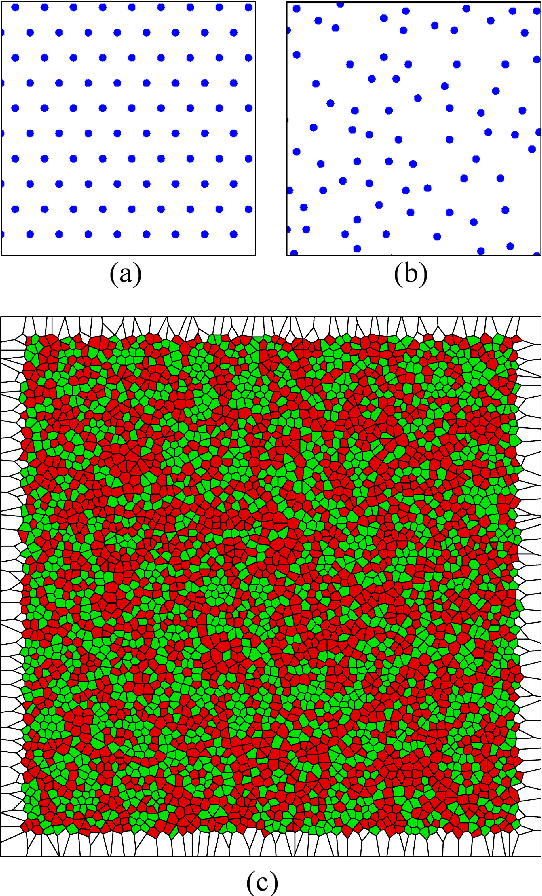

Abstract:Many algorithms have been proposed to help clinicians evaluate cone density and spacing, as these may be related to the onset of retinal diseases. However, there has been no rigorous comparison of the performance of these algorithms. In addition, the performance of such algorithms is typically determined by comparison with human observers. Here we propose a technique to simulate realistic images of the cone mosaic. We use the simulated images to test the performance of two popular cone detection algorithms and we introduce an algorithm which is used by astronomers to detect stars in astronomical images. We use Free Response Operating Characteristic (FROC) curves to evaluate and compare the performance of the three algorithms. This allows us to optimize the performance of each algorithm. We observe that performance is significantly enhanced by up-sampling the images. We investigate the effect of noise and image quality on cone mosaic parameters estimated using the different algorithms, finding that the estimated regularity is the most sensitive parameter. This paper was published in JOSA A and is made available as an electronic reprint with the permission of OSA. The paper can be found at the following URL on the OSA website: http://www.opticsinfobase.org/abstract.cfm?msid=224577. Systematic or multiple reproduction or distribution to multiple locations via electronic or other means is prohibited and is subject to penalties under law.
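
As a rough illustration of how an FROC curve is built, the sketch below sweeps a score threshold over hypothetical cone detections and records the fraction of true cones found against the average number of false positives per image. The greedy nearest-match rule, the distance tolerance `tol` and all coordinates are assumptions; the abstract does not state the matching criterion used in the paper.

```python
import math

def froc_points(detections, truths, thresholds, tol=2.0):
    """Return one (false positives per image, sensitivity) pair per threshold.

    detections: per image, a list of (x, y, score) tuples.
    truths:     per image, a list of (x, y) ground-truth positions.
    """
    n_truth = sum(len(t) for t in truths)
    points = []
    for thr in thresholds:
        tp = fp = 0
        for dets, gts in zip(detections, truths):
            matched = set()
            # Consider the most confident detections first.
            kept = sorted((d for d in dets if d[2] >= thr), key=lambda d: -d[2])
            for x, y, _ in kept:
                best, best_dist = None, tol
                for i, (gx, gy) in enumerate(gts):
                    dist = math.hypot(x - gx, y - gy)
                    if i not in matched and dist <= best_dist:
                        best, best_dist = i, dist
                if best is None:
                    fp += 1          # nothing unmatched within tolerance
                else:
                    matched.add(best)
                    tp += 1
        points.append((fp / len(truths), tp / n_truth))
    return points

# Two hypothetical simulated images with known cone positions.
truths = [[(10, 10), (20, 20)], [(5, 5)]]
detections = [
    [(10.5, 10, 0.9), (19, 21, 0.6), (30, 30, 0.4)],
    [(5, 6, 0.8), (15, 15, 0.3)],
]
points = froc_points(detections, truths, thresholds=[0.7, 0.5, 0.2])
```

Lowering the threshold moves along the curve: more true cones are recovered at first, and then further lowering mostly adds false positives. With simulated images the ground truth is known exactly, so no human observer is needed as the reference.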
