Lucie Yahiaoui

Navigating Uncertainty: The Role of Short-Term Trajectory Prediction in Autonomous Vehicle Safety

Jul 12, 2023

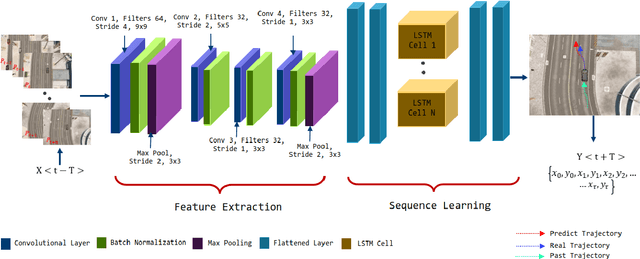

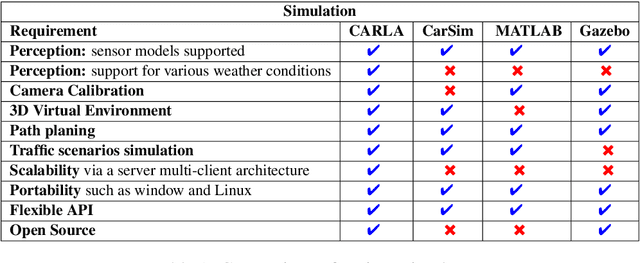

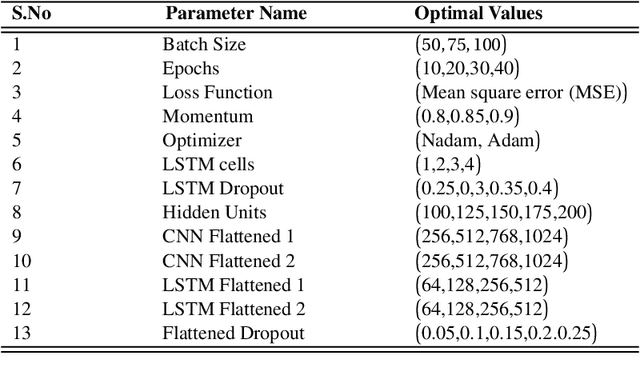

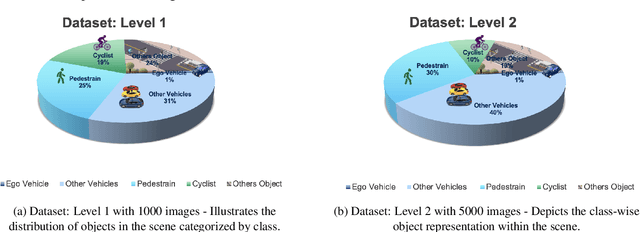

Abstract: Autonomous vehicles require accurate and reliable short-term trajectory predictions for safe and efficient driving. While most commercial automated vehicles currently use state machine-based algorithms for trajectory forecasting, recent efforts have focused on end-to-end data-driven systems. The design of these models is often limited by the availability of datasets, which are typically restricted to generic scenarios. To address this limitation, we have developed a synthetic dataset for short-term trajectory prediction tasks using the CARLA simulator. This dataset is extensive and incorporates scenarios that are considered complex, such as pedestrians crossing the road and vehicles overtaking. It comprises 6000 perspective view images with corresponding IMU and odometry information for each frame. We have also developed an end-to-end short-term trajectory prediction model using convolutional neural networks (CNN) and long short-term memory (LSTM) networks. This model can handle corner cases, such as slowing down near zebra crossings and stopping when pedestrians cross the road, without the need for explicit encoding of the surrounding environment. To accelerate this research and assist others, we are releasing our dataset and model to the research community. The dataset is publicly available at https://github.com/sharmasushil/Navigating-Uncertainty-Trajectory-Prediction .

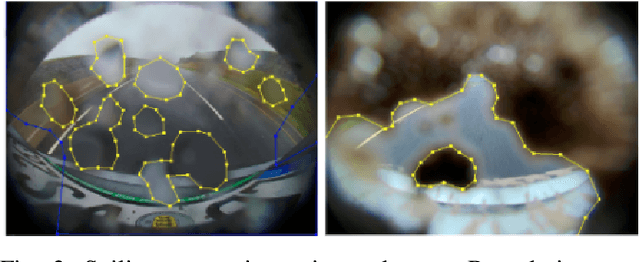

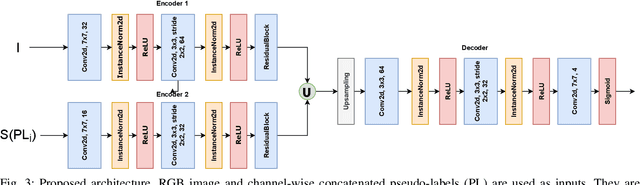

Pseudo-Label Ensemble-based Semi-supervised Learning for Handling Noisy Soiling Segmentation Annotations

May 17, 2021

Abstract: Manual annotation of soiling on surround view cameras is a very challenging and expensive task. The unclear boundaries of soiling categories such as water drops or mud particles usually result in a large variance in annotation quality. As a result, models trained on such poorly annotated data are far from optimal. In this paper, we focus on handling such noisy annotations via a pseudo-label driven ensemble model, which allows us to quickly spot problematic annotations and, in most cases, also sufficiently fix them. We train a soiling segmentation model on both noisy and refined labels and demonstrate significant improvements using the refined annotations. The results also illustrate that it is possible to effectively refine lower-cost coarse annotations.
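The abstract does not spell out how the ensemble is used, so the following is only a generic sketch of the idea, not the authors' method. It assumes several already-trained models each emit a per-pixel class label, takes a majority vote as the pseudo-label, and overrides the manual annotation only where the ensemble agrees strongly. The function names, the class names, and the `min_agreement` threshold are all hypothetical.

```python
from collections import Counter


def ensemble_pseudo_label(predictions):
    """Majority vote per pixel across models.

    predictions: one flat list of class labels per model (hypothetical layout).
    Returns (labels, agreement), where agreement is the winning vote share.
    """
    labels, agreement = [], []
    for votes in zip(*predictions):
        label, count = Counter(votes).most_common(1)[0]
        labels.append(label)
        agreement.append(count / len(votes))
    return labels, agreement


def refine_annotation(annotation, predictions, min_agreement=0.75):
    """Flag pixels where a confident ensemble contradicts the manual label.

    Flagged pixels take the pseudo-label; all others keep the manual label.
    min_agreement is an assumed threshold, not a value from the paper.
    """
    labels, agreement = ensemble_pseudo_label(predictions)
    refined, flagged = [], []
    for i, (manual, pseudo, agree) in enumerate(zip(annotation, labels, agreement)):
        if manual != pseudo and agree >= min_agreement:
            flagged.append(i)
            refined.append(pseudo)
        else:
            refined.append(manual)
    return refined, flagged


# Toy example: four models, four pixels, one questionable manual label.
model_outputs = [
    ["clean", "water", "mud", "mud"],
    ["clean", "water", "mud", "clean"],
    ["clean", "water", "mud", "mud"],
    ["clean", "mud", "mud", "clean"],
]
manual = ["clean", "clean", "mud", "mud"]
refined, flagged = refine_annotation(manual, model_outputs)
```

Here only the second pixel is flagged, because three of four models contradict the manual label; the last pixel is left alone since the ensemble itself is split.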

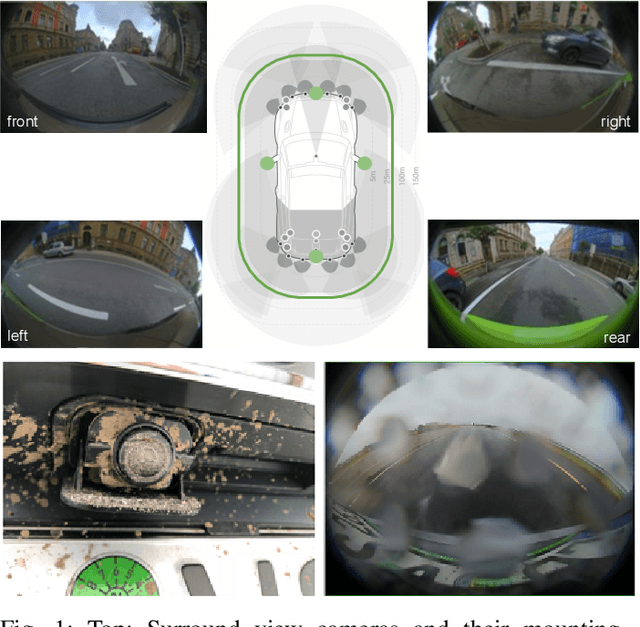

FisheyeMODNet: Moving Object detection on Surround-view Cameras for Autonomous Driving

Aug 30, 2019

Abstract: Moving Object Detection (MOD) is an important task for achieving robust autonomous driving. An autonomous vehicle has to estimate collision risk with other interacting objects in the environment and calculate an optimal trajectory. Collision risk is typically higher for moving objects than static ones due to the need to estimate the future states and poses of the objects for decision making. This is particularly important for near-range objects around the vehicle, which are typically detected by a fisheye surround-view system that captures a 360° view of the scene. In this work, we propose a CNN architecture for moving object detection using fisheye images that were captured in an autonomous driving environment. As motion geometry is highly non-linear and unique for fisheye cameras, we will make an improved version of the current dataset public to encourage further research. To target embedded deployment, we design a lightweight encoder sharing weights across sequential images. The proposed network runs at 15 fps on a 1 teraflops automotive embedded system at an accuracy of 40% IoU and 69.5% mIoU.
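For readers unfamiliar with the IoU and mIoU figures quoted above, here is a minimal sketch of how the two metrics are commonly computed for a segmentation mask. It assumes the usual definitions (intersection over union per class, averaged over classes for mIoU); the abstract does not state which classes are averaged, and the function names and toy masks are illustrative only.

```python
def class_iou(pred, target, cls):
    """Intersection over union for one class over two 2D label masks."""
    inter = union = 0
    for p_row, t_row in zip(pred, target):
        for p, t in zip(p_row, t_row):
            p_hit, t_hit = p == cls, t == cls
            inter += p_hit and t_hit
            union += p_hit or t_hit
    # A class absent from both masks has no defined IoU.
    return inter / union if union else float("nan")


def mean_iou(pred, target, classes):
    """Average the per-class IoU, skipping classes absent from both masks."""
    scores = [class_iou(pred, target, c) for c in classes]
    valid = [s for s in scores if s == s]  # NaN is not equal to itself
    return sum(valid) / len(valid) if valid else float("nan")


# Toy masks: 0 = static background, 1 = moving object (assumed encoding).
pred = [[0, 1, 1],
        [0, 0, 1]]
target = [[0, 1, 0],
          [0, 1, 1]]

moving_iou = class_iou(pred, target, 1)
miou = mean_iou(pred, target, classes=[0, 1])
```

In this toy case each class overlaps on two pixels out of a union of four, so both the moving-object IoU and the mIoU come out to 0.5.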
