Enda Ward

Hyperspectral Sensors and Autonomous Driving: Technologies, Limitations, and Opportunities

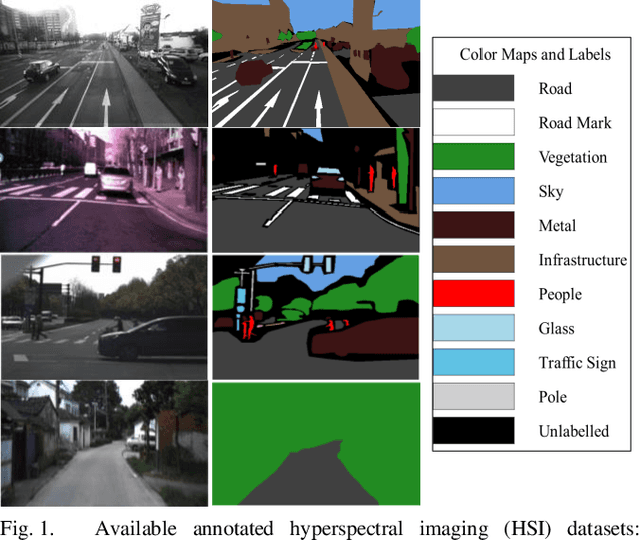

Aug 27, 2025

Abstract: Hyperspectral imaging (HSI) offers a transformative sensing modality for Advanced Driver Assistance Systems (ADAS) and autonomous driving (AD) applications, enabling material-level scene understanding through fine spectral resolution beyond the capabilities of traditional RGB imaging. This paper presents the first comprehensive review of HSI for automotive applications, examining the strengths, limitations, and suitability of current HSI technologies in the context of ADAS/AD. In addition to this qualitative review, we analyze 216 commercially available HSI and multispectral imaging cameras, benchmarking them against key automotive criteria: frame rate, spatial resolution, spectral dimensionality, and compliance with AEC-Q100 temperature standards. Our analysis reveals a significant gap between HSI's demonstrated research potential and its commercial readiness. Only four cameras meet the defined performance thresholds, and none comply with AEC-Q100 requirements. In addition, the paper reviews recent HSI datasets and applications, including semantic segmentation for road surface classification, pedestrian separability, and adverse weather perception. Our review shows that current HSI datasets are limited in terms of scale, spectral consistency, the number of spectral channels, and environmental diversity, posing challenges for the development of perception algorithms and the adequate validation of HSI's true potential in ADAS/AD applications. This review paper establishes the current state of HSI in automotive contexts as of 2025 and outlines key research directions toward practical integration of spectral imaging in ADAS and autonomous systems.

Hyperspectral Imaging-Based Perception in Autonomous Driving Scenarios: Benchmarking Baseline Semantic Segmentation Models

Oct 29, 2024

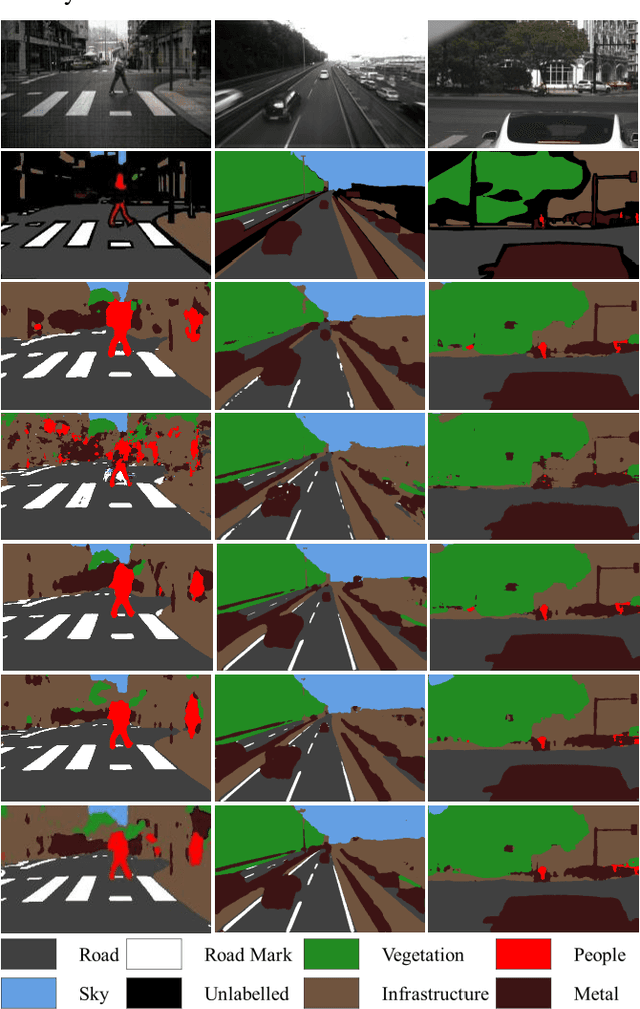

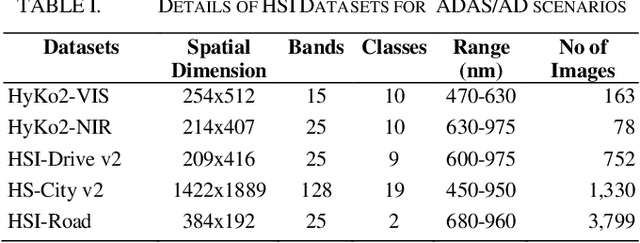

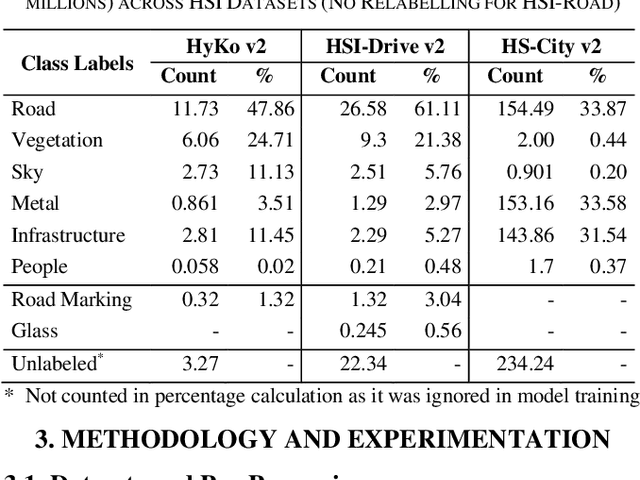

Abstract: Hyperspectral Imaging (HSI) is known for its advantages over traditional RGB imaging in remote sensing, agriculture, and medicine. Recently, it has gained attention for enhancing Advanced Driving Assistance Systems (ADAS) perception. Several HSI datasets such as HyKo, HSI-Drive, HSI-Road, and Hyperspectral City have been made available. However, a comprehensive evaluation of semantic segmentation models (SSM) using these datasets is lacking. To address this gap, we evaluated the available annotated HSI datasets on four deep learning-based baseline SSMs: DeepLab v3+, HRNet, PSPNet, and U-Net, along with its two variants: Coordinate Attention (UNet-CA) and Convolutional Block-Attention Module (UNet-CBAM). The original model architectures were adapted to handle the varying spatial and spectral dimensions of the datasets. These baseline SSMs were trained using a class-weighted loss function for individual HSI datasets and evaluated using mean-based metrics such as intersection over union (IoU), recall, precision, F1 score, specificity, and accuracy. Our results indicate that UNet-CBAM, which extracts channel-wise features, outperforms other SSMs and shows potential to leverage spectral information for enhanced semantic segmentation. This study establishes a baseline SSM benchmark on available annotated datasets for future evaluation of HSI-based ADAS perception. However, limitations of current HSI datasets, such as limited dataset size, high class imbalance, and lack of fine-grained annotations, remain significant constraints for developing robust SSMs for ADAS applications.
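The mean-based metrics named in this abstract can all be derived from a per-class confusion matrix. The following is a minimal sketch of one common way to compute them, not the authors' evaluation code: the function names `per_class_metrics` and `mean_metrics` are hypothetical, and the choice to score an undefined ratio (zero denominator) as 0.0 is an assumption that the abstract does not address.

```python
def _safe_div(num, den):
    # Assumption: an undefined ratio (empty denominator) is scored as 0.0.
    return num / den if den else 0.0


def per_class_metrics(conf):
    """Compute one-vs-rest metrics for every class.

    conf[i][j] is the number of pixels whose true class is i and whose
    predicted class is j.
    """
    n = len(conf)
    total = sum(sum(row) for row in conf)
    results = []
    for c in range(n):
        tp = conf[c][c]
        fn = sum(conf[c]) - tp                        # true c, predicted other
        fp = sum(conf[r][c] for r in range(n)) - tp   # true other, predicted c
        tn = total - tp - fn - fp
        precision = _safe_div(tp, tp + fp)
        recall = _safe_div(tp, tp + fn)
        results.append({
            "iou": _safe_div(tp, tp + fp + fn),
            "recall": recall,
            "precision": precision,
            "f1": _safe_div(2 * precision * recall, precision + recall),
            "specificity": _safe_div(tn, tn + fp),
            "accuracy": _safe_div(tp + tn, total),
        })
    return results


def mean_metrics(conf):
    """Average each per-class metric over all classes (unweighted mean)."""
    per_class = per_class_metrics(conf)
    return {
        key: sum(m[key] for m in per_class) / len(per_class)
        for key in per_class[0]
    }


if __name__ == "__main__":
    # Toy two-class confusion matrix, for illustration only.
    toy = [[3, 1],
           [1, 5]]
    for name, value in mean_metrics(toy).items():
        print(f"{name}: {value:.4f}")
```

Because the mean is unweighted, rare classes count as much as frequent ones, which is why such metrics are often preferred over plain pixel accuracy on datasets with high class imbalance.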

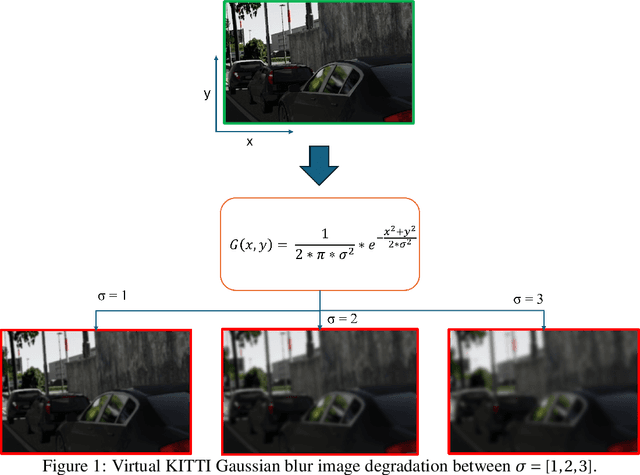

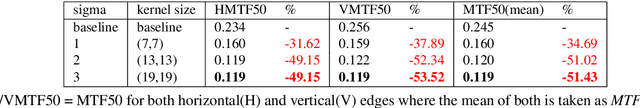

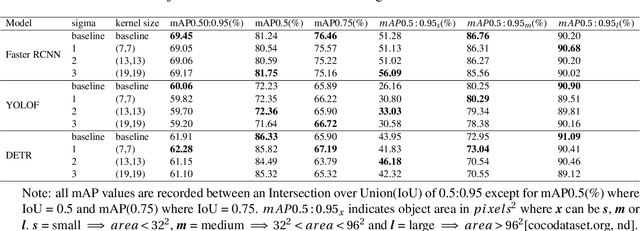

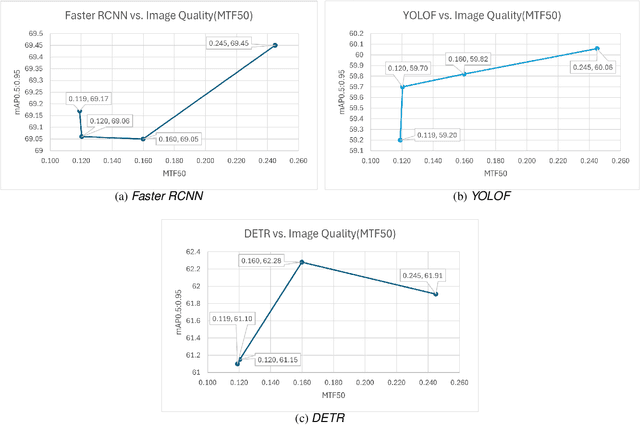

SS-SFR: Synthetic Scenes Spatial Frequency Response on Virtual KITTI and Degraded Automotive Simulations for Object Detection

Jul 22, 2024

Abstract: Automotive simulation can potentially compensate for a lack of training data in computer vision applications. However, there has been little to no image quality evaluation of automotive simulation, and the impact of optical degradations on simulation is little explored. In this work, we investigate Virtual KITTI and the impact of applying variations of Gaussian blur on image sharpness. Furthermore, we evaluate object detection, a common computer vision application, with three different state-of-the-art models, thus allowing us to characterize the relationship between object detection and sharpness. It was found that while image sharpness (MTF50) degrades from an average of 0.245 cy/px to approximately 0.119 cy/px, object detection performance stays largely robust, within 0.58% (Faster RCNN), 1.45% (YOLOF), and 1.93% (DETR) across all respective held-out test sets.

* 8 pages, 2 figures, 2 tables
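As a rough illustration of why Gaussian blur lowers MTF50, the sketch below uses the analytic frequency response of a continuous Gaussian kernel, exp(-2π²σ²f²), and solves for the frequency at which it falls to 0.5. This is an idealized model under stated assumptions, not the measurement procedure of the paper: it ignores pixel sampling, and the helper `combined_mtf50` additionally assumes that the unblurred image chain is itself Gaussian, which the abstract does not claim. All function names are hypothetical.

```python
import math

# MTF50 of a unit-sigma Gaussian kernel: sqrt(ln 2 / 2) / pi, about 0.1874 cy/px.
_K = math.sqrt(math.log(2) / 2) / math.pi


def gaussian_mtf(freq, sigma):
    """MTF of a continuous Gaussian blur (sigma in pixels, freq in cy/px)."""
    return math.exp(-2 * math.pi ** 2 * sigma ** 2 * freq ** 2)


def gaussian_mtf50(sigma):
    """Frequency (cy/px) at which the Gaussian kernel's MTF drops to 0.5."""
    return _K / sigma


def combined_mtf50(base_mtf50, sigma):
    """MTF50 after blurring, ASSUMING the baseline MTF is also Gaussian.

    Cascaded Gaussians add in variance, so the baseline is converted to an
    equivalent sigma, combined with the blur sigma, and converted back.
    """
    base_sigma = _K / base_mtf50
    return _K / math.sqrt(base_sigma ** 2 + sigma ** 2)


if __name__ == "__main__":
    # Illustrative sigma values only; they are not taken from the paper.
    for sigma in (0.5, 1.0, 1.5):
        print(f"sigma={sigma}: kernel MTF50 = {gaussian_mtf50(sigma):.3f} cy/px")
```

Under this model MTF50 is inversely proportional to the effective blur width, so doubling the effective sigma halves the MTF50.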

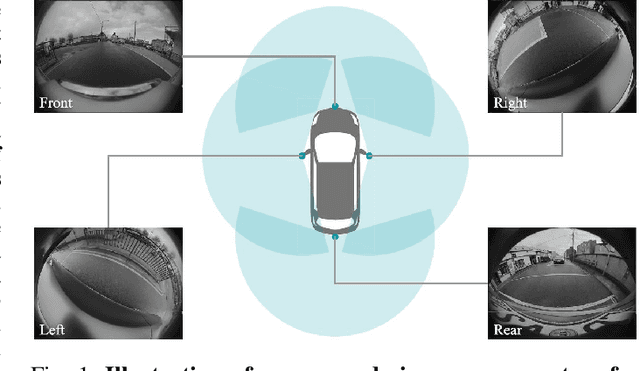

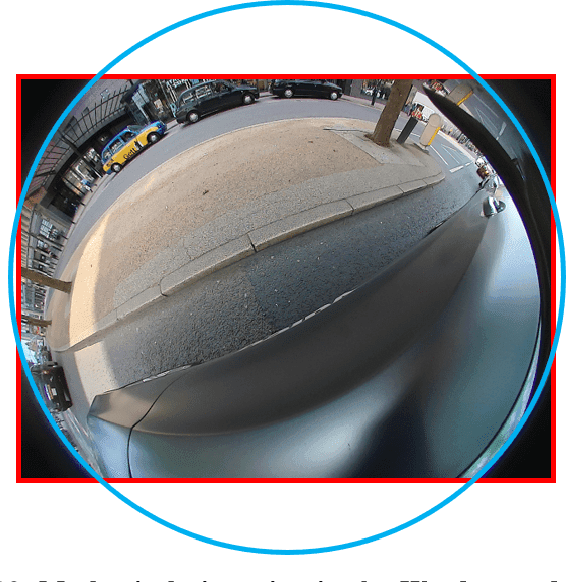

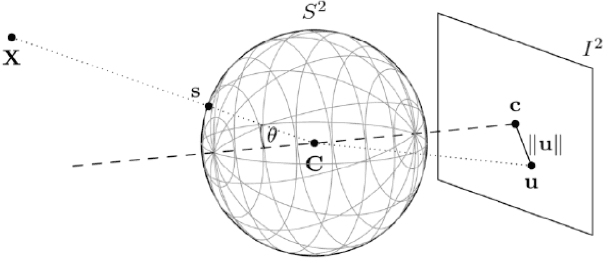

Surround-View Fisheye Optics in Computer Vision and Simulation: Survey and Challenges

Feb 21, 2024

Abstract: In this paper, we provide a survey on automotive surround-view fisheye optics, with an emphasis on the impact of optical artifacts on computer vision tasks in autonomous driving and ADAS. The automotive industry has advanced in applying state-of-the-art computer vision to enhance road safety and provide automated driving functionality. When using camera systems on vehicles, there is a particular need for a wide field of view to capture the entire vehicle's surroundings, in areas such as low-speed maneuvering, automated parking, and cocoon sensing. However, one crucial challenge in surround-view cameras is the strong optical aberrations of the fisheye camera, which is an area that has received little attention in the literature. Additionally, a comprehensive dataset is needed for testing safety-critical scenarios in vehicle automation. The industry has turned to simulation as a cost-effective strategy for creating synthetic datasets with surround-view camera imagery. We examine different simulation methods (such as model-driven and data-driven simulations) and discuss the simulators' ability (or lack thereof) to model real-world optical performance. Overall, this paper highlights the optical aberrations in automotive fisheye datasets, and the limitations of optical reality in simulated fisheye datasets, with a focus on computer vision in surround-view optical systems.
