Anne Mergy

Globally Optimal Event-Based Divergence Estimation for Ventral Landing

Sep 27, 2022

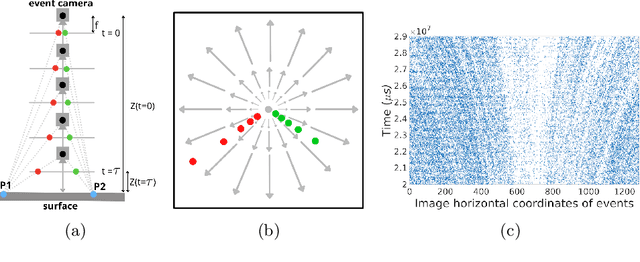

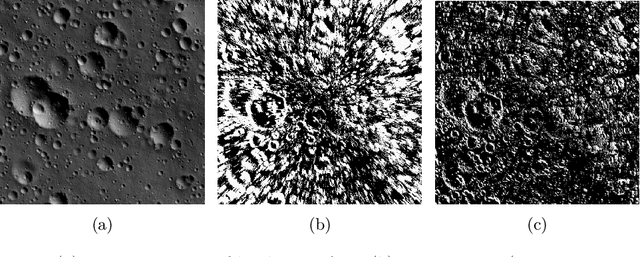

Abstract: Event sensing is a major component in bio-inspired flight guidance and control systems. We explore the usage of event cameras for predicting time-to-contact (TTC) with the surface during ventral landing. This is achieved by estimating divergence (inverse TTC), which is the rate of radial optic flow, from the event stream generated during landing. Our core contributions are a novel contrast maximisation formulation for event-based divergence estimation, and a branch-and-bound algorithm to exactly maximise contrast and find the optimal divergence value. GPU acceleration is conducted to speed up the global algorithm. Another contribution is a new dataset containing real event streams from ventral landing that was employed to test and benchmark our method. Owing to global optimisation, our algorithm is much more capable at recovering the true divergence, compared to other heuristic divergence estimators or event-based optic flow methods. With GPU acceleration, our method also achieves competitive runtimes.
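To make the idea of contrast maximisation concrete, here is a minimal sketch of divergence estimation on synthetic events. It is not the paper's method: the branch-and-bound search is replaced by a plain grid search over candidate values, the radial flow model `x(t) = x0 * exp(D * t)` is an assumption, and all names (`contrast`, `estimate_divergence`, `synthetic_events`) are hypothetical. Contrast is measured as the sum of squared per-pixel event counts, which ranks candidates the same way as image variance when the number of events and pixels is fixed.

```python
import math
import random
from collections import Counter


def contrast(events, divergence):
    """Sum of squared per-pixel counts after warping events back to t = 0."""
    image = Counter()
    for x, y, t in events:
        # Assumed motion model: radial expansion by exp(divergence * t).
        scale = math.exp(-divergence * t)
        image[(math.floor(x * scale), math.floor(y * scale))] += 1
    return sum(count * count for count in image.values())


def estimate_divergence(events, candidates):
    """Grid search (a stand-in for branch-and-bound) over candidate values."""
    return max(candidates, key=lambda d: contrast(events, d))


def synthetic_events(true_divergence, n_points=50, n_events=20, seed=0):
    """Events (x, y, t) from scene points expanding radially from the origin."""
    rng = random.Random(seed)
    events = []
    for _ in range(n_points):
        x0, y0 = rng.uniform(-40, 40), rng.uniform(-40, 40)
        for _ in range(n_events):
            t = rng.uniform(0.0, 1.0)
            s = math.exp(true_divergence * t)
            events.append((x0 * s, y0 * s, t))
    return events


# Candidate divergences in 1/s; zero is excluded so the TTC below is defined.
candidates = [0.05 * i for i in range(1, 21)]
events = synthetic_events(true_divergence=0.5)
estimate = estimate_divergence(events, candidates)
ttc = 1.0 / estimate  # time-to-contact is the inverse of divergence
```

A grid search only finds the best candidate on the grid; the appeal of an exact global method such as branch-and-bound is that it avoids both the grid resolution limit and the local optima that gradient-based contrast maximisation can fall into.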

The Fellowship of the Dyson Ring: ACT&Friends' Results and Methods for GTOC 11

May 23, 2022

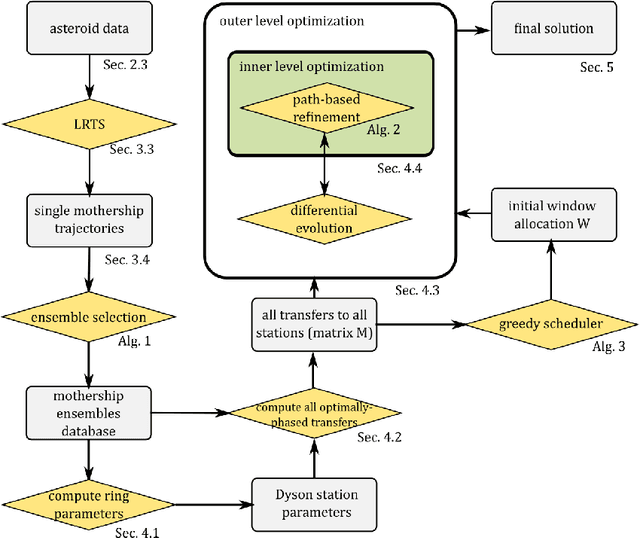

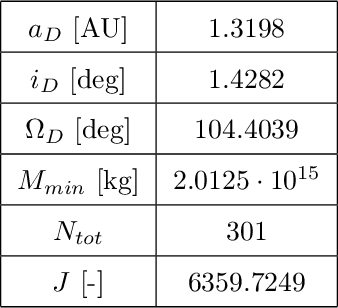

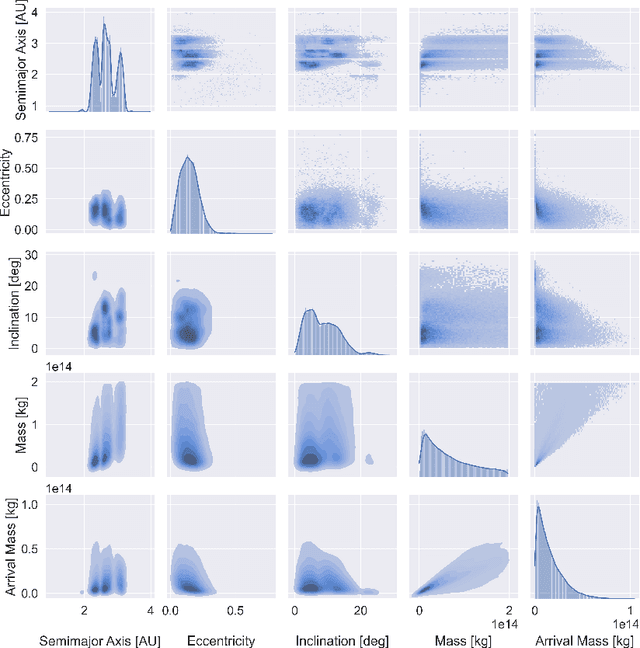

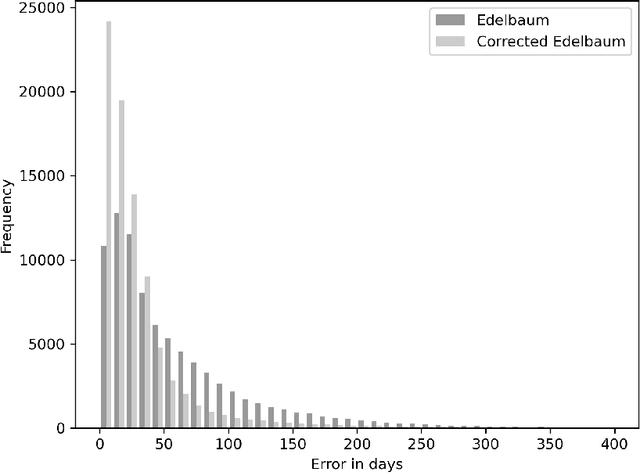

Abstract: Dyson spheres are hypothetical megastructures encircling stars in order to harvest most of their energy output. During the 11th edition of the GTOC challenge, participants were tasked with a complex trajectory planning problem related to the construction of a precursor Dyson structure, a heliocentric ring made of twelve stations. To this purpose, we developed several new approaches that synthesize techniques from machine learning, combinatorial optimization, planning and scheduling, and evolutionary optimization, effectively integrated into a fully automated pipeline. These include a machine-learned transfer time estimator, improving the established Edelbaum approximation and thus better informing a Lazy Race Tree Search to identify and collect asteroids with high arrival mass for the stations; a series of optimally-phased low-thrust transfers to all stations computed by indirect optimization techniques, exploiting the synodic periodicity of the system; and a modified Hungarian scheduling algorithm, which utilizes evolutionary techniques to arrange a mass-balanced arrival schedule out of all transfer possibilities. We describe the steps of our pipeline in detail with a special focus on how our approaches mutually benefit from each other. Lastly, we outline and analyze the final solution of our team, ACT&Friends, which ranked second at the GTOC 11 challenge.

Vision-based Neural Scene Representations for Spacecraft

May 11, 2021

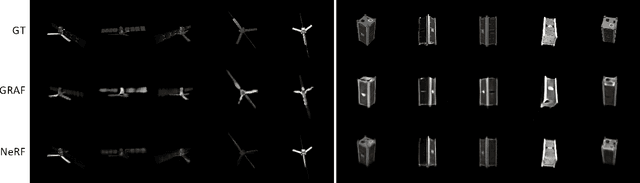

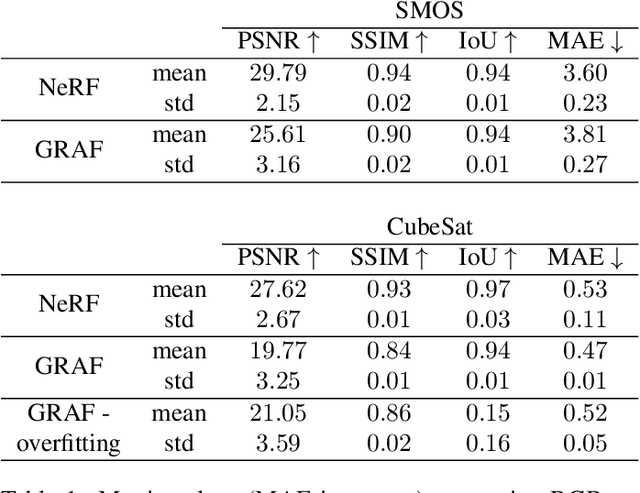

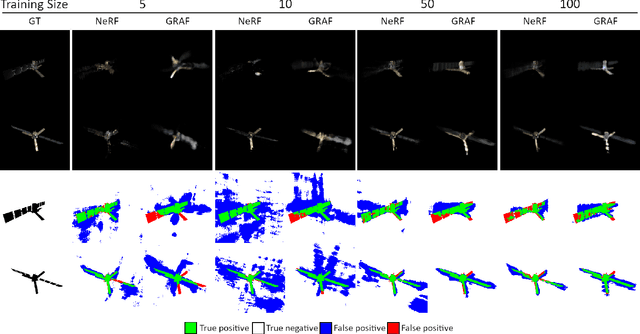

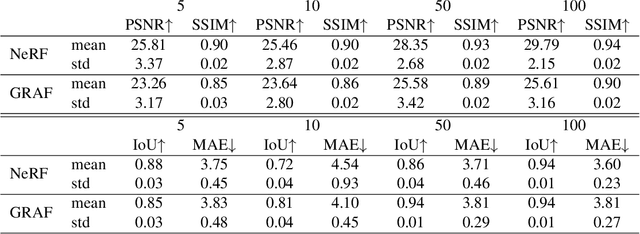

Abstract: In advanced mission concepts with high levels of autonomy, spacecraft need to internally model the pose and shape of nearby orbiting objects. Recent works in neural scene representations show promising results for inferring generic three-dimensional scenes from optical images. Neural Radiance Fields (NeRF) have shown success in rendering highly specular surfaces using a large number of images and their poses. More recently, Generative Radiance Fields (GRAF) achieved full volumetric reconstruction of a scene from unposed images only, thanks to the use of an adversarial framework to train a NeRF. In this paper, we compare and evaluate the potential of NeRF and GRAF to render novel views and extract the 3D shape of two different spacecraft, the Soil Moisture and Ocean Salinity satellite of ESA's Living Planet Programme and a generic CubeSat. Considering the best performances of both models, we observe that NeRF has the ability to render more accurate images regarding the material specularity of the spacecraft and its pose. For its part, GRAF generates precise novel views with accurate details even when parts of the satellites are shadowed, while having the significant advantage of not needing any information about the relative pose.
