Marcus Märtens

Asteroid Mining: ACT&Friends' Results for the GTOC 12 Problem

Oct 28, 2024

Abstract:In 2023, the 12th edition of the Global Trajectory Optimisation Competition (GTOC) was organised around the problem referred to as "Sustainable Asteroid Mining". This paper reports the developments that led to the solution proposed by ESA's Advanced Concepts Team. Although the proposed approach did not rank higher than fourth on the final competition leaderboard, several innovative fundamental methodologies with broader application were developed. In particular, new methods based on machine learning as well as on manipulating the fundamental laws of astrodynamics were developed and were able to fill, with remarkable accuracy, the gap between full low-thrust trajectories and their representation as impulsive Lambert transfers. A novel technique was devised to formulate the challenge of optimal subset selection from a repository of pre-existing optimal mining trajectories as an integer linear programming problem. Finally, the fundamental problem of searching for single optimal mining trajectories (mining and collecting all resources), albeit ignoring the possibility of having intra-ship collaboration and thus sub-optimal in the case of the GTOC12 problem, was efficiently solved by means of a novel search based on a look-ahead score, which makes sure to select asteroids that have a chance of being re-visited later on.
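The abstract does not spell out the integer linear program, so the following is only a generic set-packing sketch of how such a subset selection could be written. All symbols are assumptions introduced here for illustration: $s_j$ is the score of candidate trajectory $j$ (for example its mined mass), $a_{ij} = 1$ if trajectory $j$ uses asteroid $i$ and $0$ otherwise, and $K$ is the maximum number of ships.

```latex
\begin{align}
\max_{x} \quad & \sum_{j=1}^{n} s_j \, x_j \\
\text{s.t.} \quad & \sum_{j=1}^{n} a_{ij} \, x_j \le 1, \qquad i = 1, \dots, m \\
& \sum_{j=1}^{n} x_j \le K \\
& x_j \in \{0, 1\}, \qquad j = 1, \dots, n
\end{align}
```

Under these assumptions, the first constraint keeps any asteroid from being claimed by more than one selected trajectory, and the second caps the fleet size. The formulation used in the paper may differ.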

Synthetic Lunar Terrain: A Multimodal Open Dataset for Training and Evaluating Neuromorphic Vision Algorithms

Aug 30, 2024

Abstract:Synthetic Lunar Terrain (SLT) is an open dataset collected from an analogue test site for lunar missions, featuring synthetic craters in a high-contrast lighting setup. It includes several side-by-side captures from event-based and conventional RGB cameras, supplemented with a high-resolution 3D laser scan for depth estimation. The event-stream recorded from the neuromorphic vision sensor of the event-based camera is of particular interest as this emerging technology provides several unique advantages, such as high data rates, low energy consumption and resilience towards scenes of high dynamic range. SLT provides a solid foundation to analyse the limits of RGB cameras and the potential advantages or synergies of neuromorphic vision, with the goal of enabling and improving lunar-specific applications such as rover navigation or landing in cratered environments.

Symbolic Regression for Space Applications: Differentiable Cartesian Genetic Programming Powered by Multi-objective Memetic Algorithms

Jun 13, 2022

Abstract:Interpretable regression models are important for many application domains, as they allow experts to understand relations between variables from sparse data. Symbolic regression addresses this issue by searching the space of all possible free-form equations that can be constructed from elementary algebraic functions. While explicit mathematical functions can be rediscovered this way, the determination of unknown numerical constants during search has been an often neglected issue. We propose a new multi-objective memetic algorithm that exploits a differentiable Cartesian Genetic Programming encoding to learn constants during evolutionary loops. We show that this approach is competitive with or outperforms machine learned black box regression models or hand-engineered fits for two applications from space: the Mars Express thermal power estimation and the determination of the age of stars by gyrochronology.

The Fellowship of the Dyson Ring: ACT&Friends' Results and Methods for GTOC 11

May 23, 2022

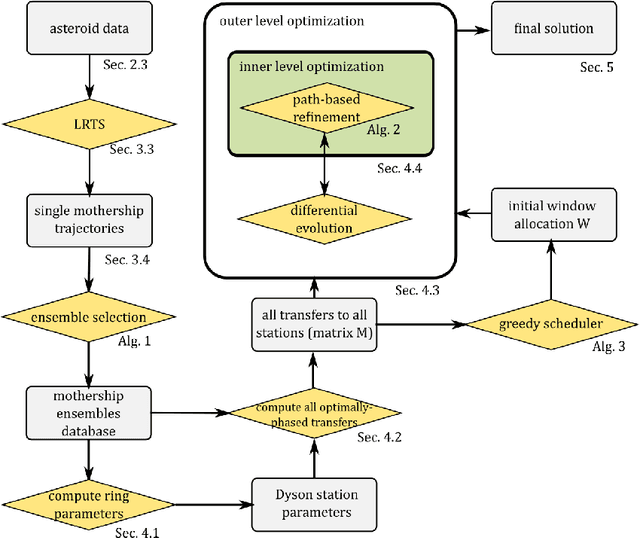

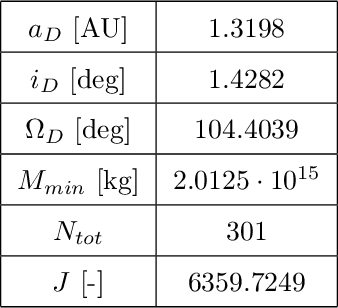

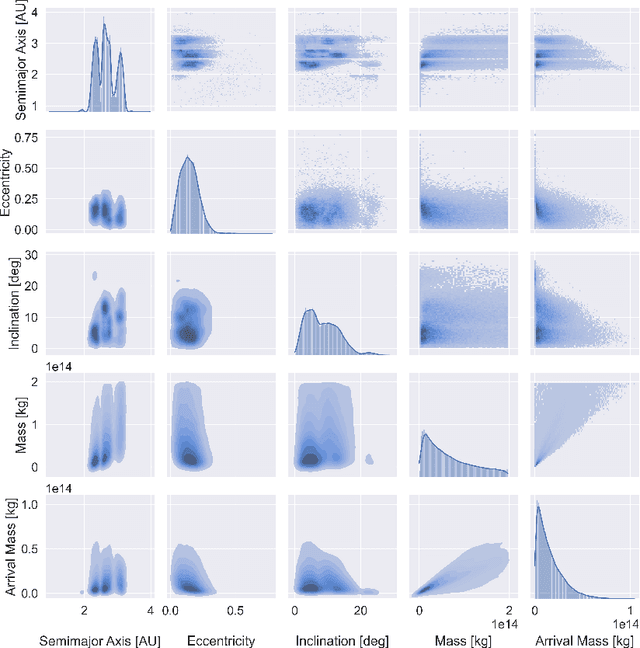

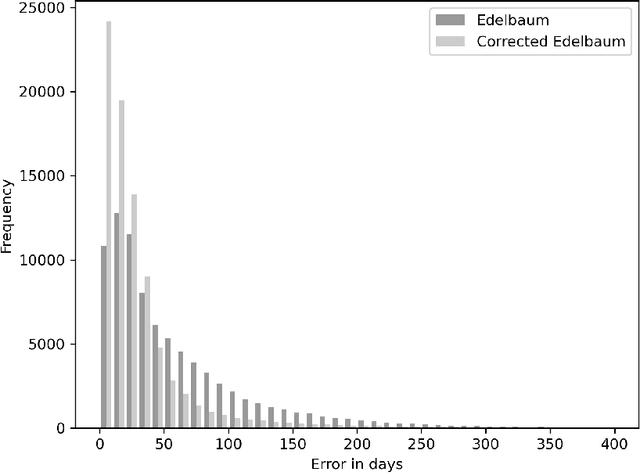

Abstract:Dyson spheres are hypothetical megastructures encircling stars in order to harvest most of their energy output. During the 11th edition of the GTOC challenge, participants were tasked with a complex trajectory planning problem related to the construction of a precursor Dyson structure, a heliocentric ring made of twelve stations. To this purpose, we developed several new approaches that synthesize techniques from machine learning, combinatorial optimization, planning and scheduling, and evolutionary optimization, effectively integrated into a fully automated pipeline. These include a machine learned transfer time estimator, improving the established Edelbaum approximation and thus better informing a Lazy Race Tree Search to identify and collect asteroids with high arrival mass for the stations; a series of optimally-phased low-thrust transfers to all stations computed by indirect optimization techniques, exploiting the synodic periodicity of the system; and a modified Hungarian scheduling algorithm, which utilizes evolutionary techniques to arrange a mass-balanced arrival schedule out of all transfer possibilities. We describe the steps of our pipeline in detail with a special focus on how our approaches mutually benefit from each other. Lastly, we outline and analyze the final solution of our team, ACT&Friends, which ranked second at the GTOC 11 challenge.
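For readers unfamiliar with the Edelbaum approximation mentioned in the abstract, here is a minimal sketch of the classical formula for a low-thrust transfer between two circular orbits with an inclination change. It is not the machine learned estimator from the paper. The function names, the constant-acceleration assumption, and the SI units are choices made here for illustration.

```python
import math

MU_SUN = 1.32712440018e20  # Sun's gravitational parameter, m^3/s^2


def edelbaum_dv(r0, r1, delta_i, mu=MU_SUN):
    """Edelbaum delta-v (m/s) between circular orbits of radii r0 and r1 (m).

    delta_i is the inclination change in radians; the formula is meant
    for modest inclination changes (below 2 rad).
    """
    v0 = math.sqrt(mu / r0)  # circular speed on the initial orbit
    v1 = math.sqrt(mu / r1)  # circular speed on the final orbit
    dv_squared = v0 * v0 + v1 * v1 - 2.0 * v0 * v1 * math.cos(0.5 * math.pi * delta_i)
    return math.sqrt(max(0.0, dv_squared))  # clamp tiny negative rounding errors


def edelbaum_time(r0, r1, delta_i, accel, mu=MU_SUN):
    """Transfer time (s), assuming a constant thrust acceleration accel (m/s^2)."""
    return edelbaum_dv(r0, r1, delta_i, mu) / accel
```

In the coplanar case (`delta_i = 0`) the expression reduces to the difference of the two circular speeds, which is a quick sanity check on any implementation.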

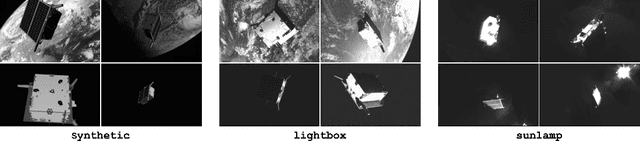

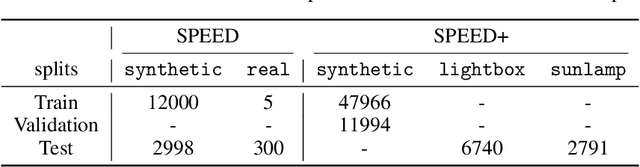

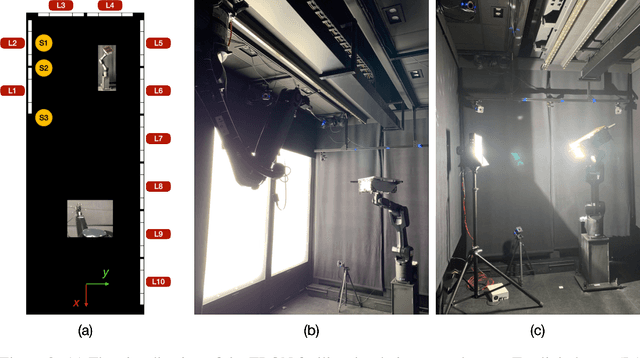

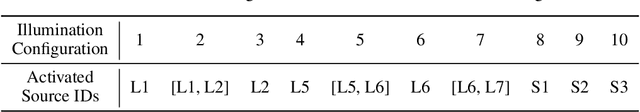

SPEED+: Next Generation Dataset for Spacecraft Pose Estimation across Domain Gap

Oct 06, 2021

Abstract:Autonomous vision-based spaceborne navigation is an enabling technology for future on-orbit servicing and space logistics missions. While computer vision in general has benefited from Machine Learning (ML), training and validating spaceborne ML models are extremely challenging due to the impracticality of acquiring a large-scale labeled dataset of images of the intended target in the space environment. Existing datasets, such as Spacecraft PosE Estimation Dataset (SPEED), have so far mostly relied on synthetic images for both training and validation, which are easy to mass-produce but fail to resemble the visual features and illumination variability inherent to the target spaceborne images. In order to bridge the gap between the current practices and the intended applications in future space missions, this paper introduces SPEED+: the next generation spacecraft pose estimation dataset with specific emphasis on domain gap. In addition to 60,000 synthetic images for training, SPEED+ includes 9,531 simulated images of a spacecraft mockup model captured from the Testbed for Rendezvous and Optical Navigation (TRON) facility. TRON is a first-of-a-kind robotic testbed capable of capturing an arbitrary number of target images with accurate and maximally diverse pose labels and high-fidelity spaceborne illumination conditions. SPEED+ will be used in the upcoming international Satellite Pose Estimation Challenge co-hosted with the Advanced Concepts Team of the European Space Agency to evaluate and compare the robustness of spaceborne ML models trained on synthetic images.

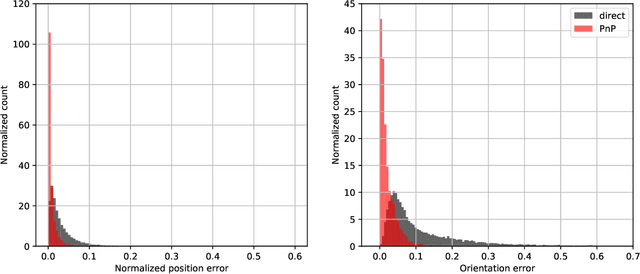

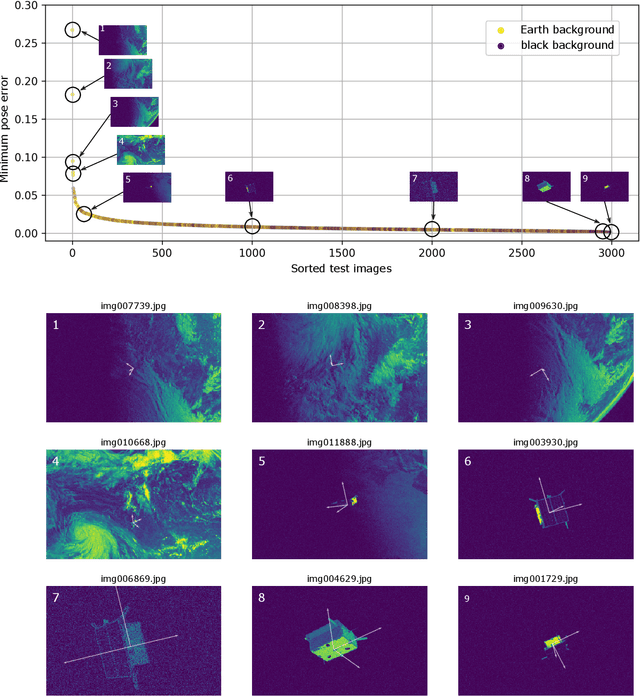

Satellite Pose Estimation Challenge: Dataset, Competition Design and Results

Nov 05, 2019

Abstract:Reliable pose estimation of uncooperative satellites is a key technology for enabling future on-orbit servicing and debris removal missions. The Kelvins Satellite Pose Estimation Challenge aims at evaluating and comparing monocular vision-based approaches and pushing the state-of-the-art on this problem. This work is based on the Satellite Pose Estimation Dataset, the first publicly available machine learning set of synthetic and real spacecraft imagery. The choice of dataset reflects one of the unique challenges associated with spaceborne computer vision tasks, namely the lack of spaceborne images to train and validate the developed algorithms. This work briefly reviews the basic properties and the collection process of the dataset which was made publicly available. The competition design, including the definition of performance metrics and the adopted testbed, is also discussed. Furthermore, the submissions of the 48 participants are analyzed to compare the performance of their approaches and uncover what factors make the satellite pose estimation problem especially challenging.

Neural Network Architecture Search with Differentiable Cartesian Genetic Programming for Regression

Jul 03, 2019

Abstract:The ability to design complex neural network architectures which enable effective training by stochastic gradient descent has been the key for many achievements in the field of deep learning. However, developing such architectures remains a challenging and resource-intensive process full of trial-and-error iterations. All in all, the relation between the network topology and its ability to model the data remains poorly understood. We propose to encode neural networks with a differentiable variant of Cartesian Genetic Programming (dCGPANN) and present a memetic algorithm for architecture design: local searches with gradient descent learn the network parameters while evolutionary operators act on the dCGPANN genes shaping the network architecture towards faster learning. Studying a particular instance of such a learning scheme, we are able to improve the starting feed forward topology by learning how to rewire and prune links, adapt activation functions and introduce skip connections for chosen regression tasks. The evolved network architectures require less space for network parameters and reach, given the same amount of time, a significantly lower error on average.

Super-Resolution of PROBA-V Images Using Convolutional Neural Networks

Jul 03, 2019

Abstract:ESA's PROBA-V Earth observation satellite enables us to monitor our planet at a large scale, studying the interaction between vegetation and climate, and provides guidance for important decisions on our common global future. However, the interval at which high resolution images are recorded spans several days, in contrast to lower resolution images, which are often available daily. We collect an extensive dataset of both high and low resolution images taken by PROBA-V instruments during monthly periods to investigate Multi Image Super-resolution, a technique to merge several low resolution images into one image of higher quality. We propose a convolutional neural network that is able to cope with changes in illumination, cloud coverage and landscape features, which are challenges introduced by the fact that the different images are taken over successive satellite passages over the same region. Compared with a bicubic upscaling of low resolution images taken under optimal conditions, we find the Peak Signal to Noise Ratio of the image reconstructed by the network to be higher for a large majority of different scenes. This shows that applied machine learning has the potential to enhance large amounts of Earth observation data previously collected during multiple satellite passes.
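As a reminder of the metric named in the abstract, the sketch below computes the plain Peak Signal to Noise Ratio between two images given as flat sequences of pixel values. It is an illustration only: the function name and the flat-list image representation are assumptions made here, and the paper may use a variant of the metric (for instance with masking or brightness corrections) that is not reproduced.

```python
import math


def psnr(reference, estimate, max_value=1.0):
    """Peak Signal to Noise Ratio in dB between two equally sized images.

    Both images are flat sequences of pixel values in [0, max_value].
    """
    if len(reference) != len(estimate) or len(reference) == 0:
        raise ValueError("images must be non-empty and of equal size")
    # Mean squared error over all pixels.
    mse = sum((r - e) ** 2 for r, e in zip(reference, estimate)) / len(reference)
    if mse == 0.0:
        return math.inf  # identical images: PSNR is unbounded
    return 10.0 * math.log10(max_value ** 2 / mse)
```

A reconstruction is better than a baseline under this metric when its PSNR against the same high resolution reference is higher.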

Interplanetary Transfers via Deep Representations of the Optimal Policy and/or of the Value Function

Apr 18, 2019

Abstract:A number of applications to interplanetary trajectories have been recently proposed based on deep networks. These approaches often rely on the availability of a large number of optimal trajectories to learn from. In this paper we introduce a new method to quickly create millions of optimal spacecraft trajectories from a single nominal trajectory. Apart from the generation of the nominal trajectory, no additional optimal control problems need to be solved as all the trajectories, by construction, satisfy Pontryagin's minimum principle and the relevant transversality conditions. We then consider deep feed forward neural networks and benchmark three learning methods on the created dataset: policy imitation, value function learning and value function gradient learning. Our results are shown for the case of the interplanetary trajectory optimization problem of reaching Venus orbit, with the nominal trajectory starting from the Earth. We find that both policy imitation and value function gradient learning are able to learn the optimal state feedback, while in the case of value function learning the optimal policy is not captured, only the final value of the optimal propellant mass is.

A Survey on Artificial Intelligence Trends in Spacecraft Guidance Dynamics and Control

Dec 07, 2018

Abstract:The rapid developments of Artificial Intelligence in the last decade are influencing Aerospace Engineering to a great extent and research in this context is proliferating. We share our observations on the recent developments in the area of Spacecraft Guidance Dynamics and Control, giving selected examples on success stories that have been motivated by mission designs. Our focus is on evolutionary optimisation, tree searches and machine learning, including deep learning and reinforcement learning as the key technologies and drivers for current and future research in the field. From a high-level perspective, we survey various scenarios for which these approaches have been successfully applied or are under strong scientific investigation. Whenever possible, we highlight the relations and synergies that can be obtained by combining different techniques and projects towards future domains for which newly emerging artificial intelligence techniques are expected to become game changers.
