Giacomo Acciarini

IonCast: A Deep Learning Framework for Forecasting Ionospheric Dynamics

Nov 19, 2025

Abstract:The ionosphere is a critical component of near-Earth space, shaping GNSS accuracy, high-frequency communications, and aviation operations. For these reasons, accurate forecasting and modeling of ionospheric variability has become increasingly relevant. To address this need, we present IonCast, a suite of deep learning models that includes a GraphCast-inspired model tailored for ionospheric dynamics. IonCast leverages spatiotemporal learning to forecast global Total Electron Content (TEC), integrating diverse physical drivers and observational datasets. Validation on held-out storm-time and quiet conditions shows improved skill compared to persistence. By unifying heterogeneous data with scalable graph-based spatiotemporal learning, IonCast demonstrates how machine learning can augment physical understanding of ionospheric variability and advance operational space weather resilience.

EclipseNETs: Learning Irregular Small Celestial Body Silhouettes

Apr 06, 2025

Abstract:Accurately predicting eclipse events around irregular small bodies is crucial for spacecraft navigation, orbit determination, and spacecraft systems management. This paper introduces a novel approach leveraging neural implicit representations to model eclipse conditions efficiently and reliably. We propose neural network architectures that capture the complex silhouettes of asteroids and comets with high precision. Tested on four well-characterized bodies - Bennu, Itokawa, 67P/Churyumov-Gerasimenko, and Eros - our method achieves accuracy comparable to traditional ray-tracing techniques while offering orders of magnitude faster performance. Additionally, we develop an indirect learning framework that trains these models directly from sparse trajectory data using Neural Ordinary Differential Equations, removing the requirement to have prior knowledge of an accurate shape model. This approach allows for the continuous refinement of eclipse predictions, progressively reducing errors and improving accuracy as new trajectory data is incorporated.

Asteroid Mining: ACT&Friends' Results for the GTOC 12 Problem

Oct 28, 2024

Abstract:In 2023, the 12th edition of the Global Trajectory Optimisation Competition (GTOC) was organised around the problem referred to as "Sustainable Asteroid Mining". This paper reports the developments that led to the solution proposed by ESA's Advanced Concepts Team. Although the proposed approach ranked no higher than fourth in the final competition leaderboard, several innovative fundamental methodologies with broader application were developed. In particular, new methods based on machine learning, as well as on manipulating the fundamental laws of astrodynamics, were developed that fill with remarkable accuracy the gap between full low-thrust trajectories and their representation as impulsive Lambert transfers. A novel technique was devised to formulate the challenge of optimal subset selection from a repository of pre-existing optimal mining trajectories as an integer linear programming problem. Finally, the fundamental problem of searching for single optimal mining trajectories (mining and collecting all resources) was efficiently solved by means of a novel search based on a look-ahead score, which ensures that the selected asteroids have a chance of being re-visited later on. Because this search ignores the possibility of intra-ship collaboration, it is sub-optimal in the case of the GTOC12 problem.

EclipseNETs: a differentiable description of irregular eclipse conditions

Aug 09, 2024

Abstract:In the field of spaceflight mechanics and astrodynamics, determining eclipse regions is a frequent and critical challenge. This determination impacts various factors, including the acceleration induced by solar radiation pressure, the spacecraft power input, and its thermal state, all of which must be accounted for in various phases of the mission design. This study leverages recent advances in neural image processing to develop fully differentiable models of eclipse regions for highly irregular celestial bodies. By utilizing test cases involving Solar System bodies previously visited by spacecraft, such as 433 Eros, 25143 Itokawa, 67P/Churyumov-Gerasimenko, and 101955 Bennu, we propose and study an implicit neural architecture defining the shape of the eclipse cone based on the Sun's direction. Employing periodic activation functions, we achieve high precision in modeling eclipse conditions. Furthermore, we discuss the potential applications of these differentiable models in spaceflight mechanics computations.
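To make the idea of an implicit network with periodic activations concrete, the following is a minimal, untrained sketch in plain Python. It is not the architecture from the paper: the input layout (a 2D point plus a Sun direction vector), the layer sizes, the frequency scale `OMEGA`, and the function names are all assumptions chosen for illustration.

```python
import math
import random

OMEGA = 30.0  # frequency scale of the sine activation (assumed value)


def init_layer(n_in, n_out, rng):
    """Draw small uniform weights and biases for one dense layer."""
    bound = 1.0 / n_in
    weights = [[rng.uniform(-bound, bound) for _ in range(n_in)]
               for _ in range(n_out)]
    biases = [rng.uniform(-bound, bound) for _ in range(n_out)]
    return weights, biases


def dense(x, weights, biases):
    """Affine map W x + b, written with plain lists."""
    return [sum(w * xi for w, xi in zip(row, x)) + b
            for row, b in zip(weights, biases)]


def sine_layer(x, weights, biases):
    """Dense layer followed by the periodic activation sin(OMEGA * z)."""
    return [math.sin(OMEGA * z) for z in dense(x, weights, biases)]


def eclipse_field(point_2d, sun_dir, hidden_layers, head):
    """Map a (hypothetical) 2D query point and Sun direction to one scalar.

    After training, the sign of such a scalar could separate lit from
    shadowed points; here the weights are random, so the value is arbitrary.
    """
    h = list(point_2d) + list(sun_dir)
    for weights, biases in hidden_layers:
        h = sine_layer(h, weights, biases)
    return dense(h, *head)[0]


rng = random.Random(0)
hidden_layers = [init_layer(5, 16, rng), init_layer(16, 16, rng)]
head = init_layer(16, 1, rng)
value = eclipse_field((0.1, -0.2), (1.0, 0.0, 0.0), hidden_layers, head)
```

Because every operation is a smooth function of its inputs, a network of this form can be differentiated with respect to both the query point and the Sun direction, which is the property the abstract emphasizes.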

Computing low-thrust transfers in the asteroid belt, a comparison between astrodynamical manipulations and a machine learning approach

May 29, 2024

Abstract:Low-thrust trajectories play a crucial role in optimizing scientific output and cost efficiency in asteroid belt missions. Unlike high-thrust transfers, low-thrust trajectories require solving complex optimal control problems. This complexity grows exponentially with the number of asteroids visited due to orbital mechanics intricacies. In the literature, methods for approximating low-thrust transfers without full optimization have been proposed, including analytical and machine learning techniques. In this work, we propose new analytical approximations and compare their accuracy and performance to machine learning methods. While analytical approximations leverage orbit theory to estimate trajectory costs, machine learning employs a more black-box approach, utilizing neural networks to predict optimal transfers based on various attributes. We build a dataset of about 3 million transfers, found by solving the time- and fuel-optimal control problems for different times of flight, which we also release open-source. Comparison between the two methods on this database reveals the superiority of machine learning, especially for longer transfers. Despite challenges such as multi-revolution transfers, both approaches keep the final mass error within a few percent on a database of trajectories involving numerous asteroids. This work contributes to the efficient exploration of mission opportunities in the asteroid belt, providing insights into the strengths and limitations of different approximation strategies.

NeuralODEs for VLEO simulations: Introducing thermoNET for Thermosphere Modeling

May 29, 2024

Abstract:We introduce a novel neural architecture termed thermoNET, designed to represent thermospheric density in satellite orbital propagation using a reduced number of differentiable computations. Because a neural network appears on the right-hand side of the equations of motion, the resulting satellite dynamics is governed by a NeuralODE, a neural Ordinary Differential Equation. Its fully differentiable nature allows the derivation of variational equations (hence of the state transition matrix) and facilitates its use in connection with advanced numerical techniques such as Taylor-based numerical propagation and differential algebraic techniques. The network parameters are trained efficiently through two distinct approaches. In the first approach, the network is trained independently of spacecraft dynamics, as a pure regression task against ground truth models, including JB-08 and NRLMSISE-00. In the second approach, network parameters are learned from observed dynamics, adapting through ODE sensitivities. In both cases, the outcome is a flexible, compact model of the thermosphere density that greatly enhances numerical propagation efficiency while maintaining accuracy in the orbital predictions.

Closing the Gap Between SGP4 and High-Precision Propagation via Differentiable Programming

Feb 26, 2024

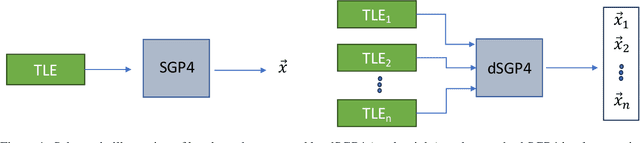

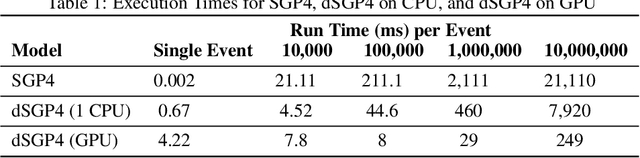

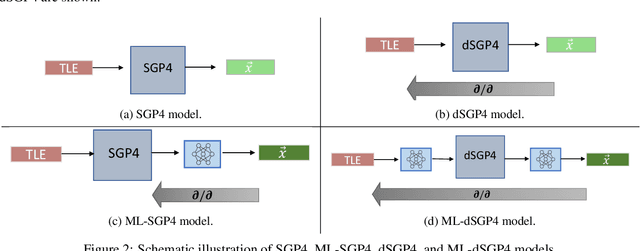

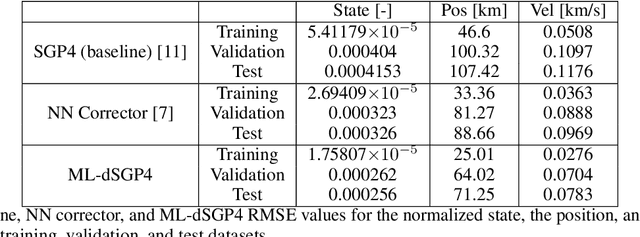

Abstract:The Simplified General Perturbations 4 (SGP4) orbital propagation method is widely used for predicting the positions and velocities of Earth-orbiting objects rapidly and reliably. Despite continuous refinement, SGP models still lack the precision of numerical propagators, which offer significantly smaller errors. This study presents dSGP4, a novel differentiable version of SGP4 implemented using PyTorch. By making SGP4 differentiable, dSGP4 facilitates various space-related applications, including spacecraft orbit determination, state conversion, covariance transformation, state transition matrix computation, and covariance propagation. Additionally, dSGP4's PyTorch implementation allows for embarrassingly parallel orbital propagation across batches of Two-Line Element Sets (TLEs), leveraging the computational power of CPUs, GPUs, and advanced hardware for distributed prediction of satellite positions at future times. Furthermore, dSGP4's differentiability enables integration with modern machine learning techniques. Thus, we propose a novel orbital propagation paradigm, ML-dSGP4, where neural networks are integrated into the orbital propagator. Through stochastic gradient descent, this combined model's inputs, outputs, and parameters can be iteratively refined, surpassing SGP4's precision. Neural networks act as identity operators by default, adhering to SGP4's behavior. However, dSGP4's differentiability allows fine-tuning with ephemeris data, enhancing precision while maintaining computational speed. This empowers satellite operators and researchers to train the model using specific ephemeris or high-precision numerical propagation data, significantly advancing orbital prediction capabilities.
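The statement that the neural networks "act as identity operators by default" can be illustrated with a residual correction whose output layer starts at zero. The sketch below is one common way to obtain that behaviour and is an assumption, not the ML-dSGP4 implementation; the class name `ResidualCorrection` and its layout are hypothetical, and plain Python lists stand in for PyTorch tensors.

```python
import math


class ResidualCorrection:
    """Toy correction x -> x + W2 * tanh(W1 * x) with W2 initialised to zero.

    With W2 = 0 the correction term vanishes, so the wrapped propagator's
    inputs or outputs pass through unchanged until training updates W2.
    """

    def __init__(self, n_features, n_hidden=8):
        # Small deterministic hidden weights (illustrative values only).
        self.w1 = [[0.1 * math.sin(i + 2 * j + 1) for j in range(n_features)]
                   for i in range(n_hidden)]
        # Zero output weights: this is what makes the module an identity.
        self.w2 = [[0.0] * n_hidden for _ in range(n_features)]

    def __call__(self, x):
        hidden = [math.tanh(sum(w * xi for w, xi in zip(row, x)))
                  for row in self.w1]
        delta = [sum(w * h for w, h in zip(row, hidden)) for row in self.w2]
        return [xi + di for xi, di in zip(x, delta)]


correction = ResidualCorrection(n_features=3)
state = [1.0, 2.0, 3.0]
unchanged = correction(state)  # equals `state` while W2 is zero
```

Starting from an exact identity means the combined model reproduces the baseline propagator before any fine-tuning, so training on ephemeris data can only move it away from that baseline where the data supports it.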

The Fellowship of the Dyson Ring: ACT&Friends' Results and Methods for GTOC 11

May 23, 2022

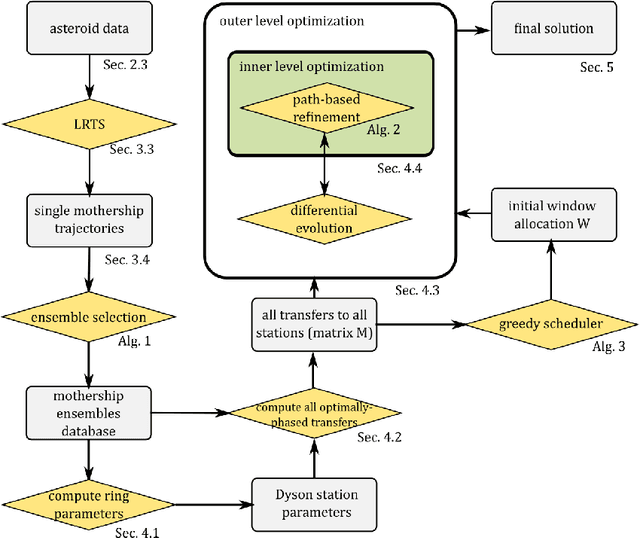

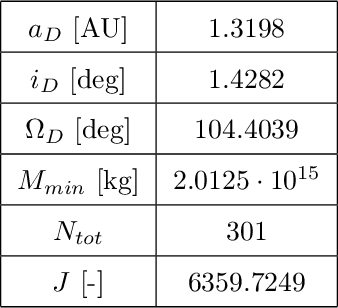

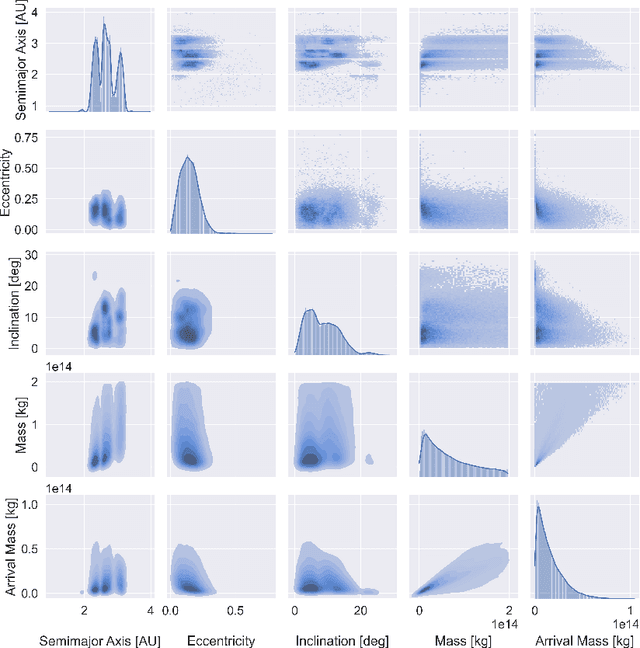

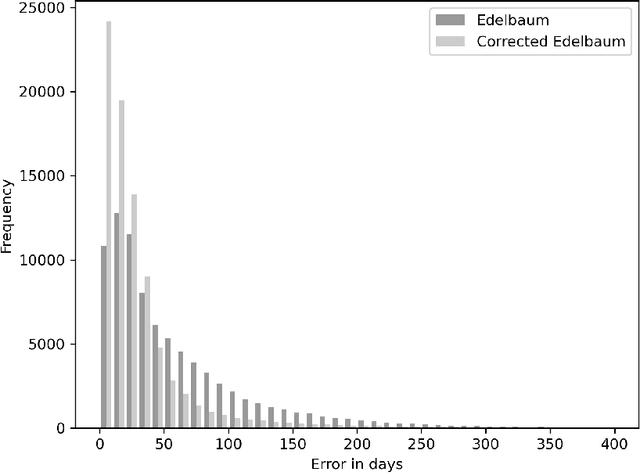

Abstract:Dyson spheres are hypothetical megastructures encircling stars in order to harvest most of their energy output. During the 11th edition of the GTOC challenge, participants were tasked with a complex trajectory planning problem related to the construction of a precursor Dyson structure, a heliocentric ring made of twelve stations. To this purpose, we developed several new approaches that synthesize techniques from machine learning, combinatorial optimization, planning and scheduling, and evolutionary optimization, effectively integrated into a fully automated pipeline. These include a machine learned transfer time estimator, improving the established Edelbaum approximation and thus better informing a Lazy Race Tree Search to identify and collect asteroids with high arrival mass for the stations; a series of optimally-phased low-thrust transfers to all stations computed by indirect optimization techniques, exploiting the synodic periodicity of the system; and a modified Hungarian scheduling algorithm, which utilizes evolutionary techniques to arrange a mass-balanced arrival schedule out of all transfer possibilities. We describe the steps of our pipeline in detail with a special focus on how our approaches mutually benefit from each other. Lastly, we outline and analyze the final solution of our team, ACT&Friends, which ranked second at the GTOC 11 challenge.
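For context on the Edelbaum approximation mentioned above, its classical form estimates the velocity change for a low-thrust transfer between two circular orbits with an inclination change. The sketch below implements that textbook form only; the abstract does not say which variant the team used, and the transfer-time helper assumes a constant thrust acceleration with negligible mass change. Function names are illustrative.

```python
import math


def edelbaum_delta_v(v0, v1, delta_i_rad):
    """Classical Edelbaum delta-v between circular orbits.

    v0, v1: circular orbital speeds of the initial and final orbits.
    delta_i_rad: inclination change in radians.
    """
    angle = 0.5 * math.pi * delta_i_rad
    return math.sqrt(v0 * v0 - 2.0 * v0 * v1 * math.cos(angle) + v1 * v1)


def constant_accel_transfer_time(delta_v, accel):
    """Transfer time if the thrust acceleration is constant (an assumption)."""
    return delta_v / accel


# Coplanar case: the estimate reduces to the difference of circular speeds.
dv_coplanar = edelbaum_delta_v(7.0, 3.0, 0.0)
time_coplanar = constant_accel_transfer_time(dv_coplanar, 2.0)
```

A learned estimator, as described in the abstract, would replace or correct such a closed-form estimate where its circular-orbit assumptions break down.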

Simultaneous Multivariate Forecast of Space Weather Indices using Deep Neural Network Ensembles

Dec 16, 2021

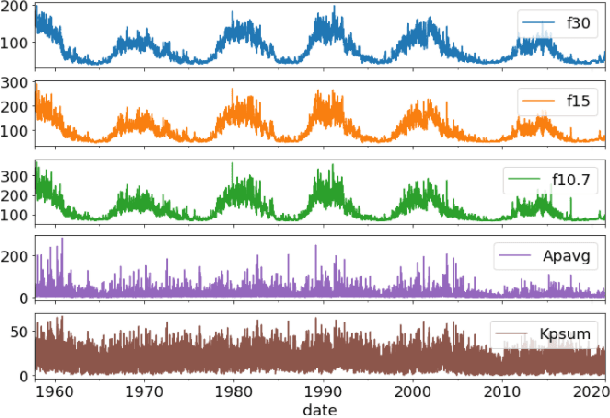

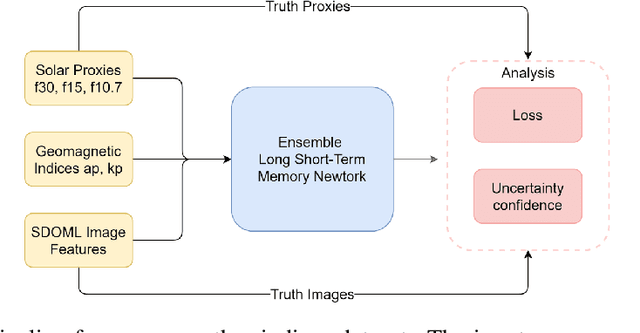

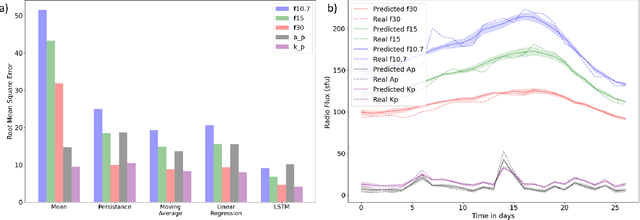

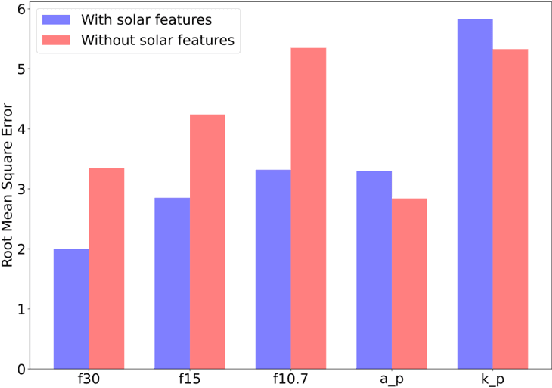

Abstract:Solar radio flux along with geomagnetic indices are important indicators of solar activity and its effects. Extreme solar events such as flares and geomagnetic storms can negatively affect the space environment including satellites in low-Earth orbit. Therefore, forecasting these space weather indices is of great importance in space operations and science. In this study, we propose a model based on long short-term memory neural networks to learn the distribution of time series data with the capability to provide a simultaneous multivariate 27-day forecast of the space weather indices using time series as well as solar image data. We show a 30-40% improvement of the root mean-square error when including solar image data with time series data compared to using time series data alone. Simple baselines such as persistence and running-average forecasts are also compared with the trained deep neural network models. We also quantify the uncertainty in our prediction using a model ensemble.
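The two simple baselines named in the abstract, and the root mean-square error used to score them, can be sketched in a few lines. The function names, the window length, and the sample values below are illustrative assumptions, not data or code from the paper.

```python
import math


def persistence_forecast(history, horizon):
    """Repeat the last observed value for every future step."""
    return [history[-1]] * horizon


def running_average_forecast(history, horizon, window):
    """Repeat the mean of the last `window` observations."""
    recent = history[-window:]
    mean = sum(recent) / len(recent)
    return [mean] * horizon


def rmse(predicted, observed):
    """Root mean-square error between two equally long sequences."""
    squared = [(p - o) ** 2 for p, o in zip(predicted, observed)]
    return math.sqrt(sum(squared) / len(squared))


# Made-up index values, used only to exercise the functions.
history = [70.0, 72.0, 74.0, 76.0]
observed = [76.0, 78.0]
persistence = persistence_forecast(history, horizon=2)
running_avg = running_average_forecast(history, horizon=2, window=2)
persistence_rmse = rmse(persistence, observed)
running_avg_rmse = rmse(running_avg, observed)
```

A learned model is worth its cost only if its error is lower than both of these baselines over the same forecast horizon.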

Towards Automated Satellite Conjunction Management with Bayesian Deep Learning

Dec 23, 2020

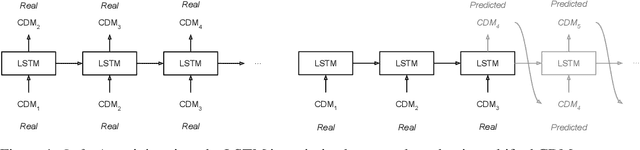

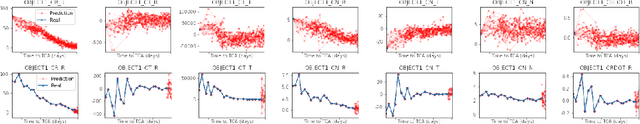

Abstract:After decades of space travel, low Earth orbit is a junkyard of discarded rocket bodies, dead satellites, and millions of pieces of debris from collisions and explosions. Objects at high enough altitudes do not re-enter and burn up in the atmosphere, but stay in orbit around Earth for a long time. At speeds of 28,000 km/h, collisions in these orbits can generate fragments and potentially trigger a cascade of more collisions known as the Kessler syndrome. This could pose a planetary challenge, because the phenomenon could escalate to the point of hindering future space operations and damaging satellite infrastructure critical for space and Earth science applications. As commercial entities place mega-constellations of satellites in orbit, the burden on operators conducting collision avoidance manoeuvres will increase. For this reason, development of automated tools that predict potential collision events (conjunctions) is critical. We introduce a Bayesian deep learning approach to this problem, and develop recurrent neural network architectures (LSTMs) that work with time series of conjunction data messages (CDMs), a standard data format used by the space community. We show that our method can be used to model all CDM features simultaneously, including the time of arrival of future CDMs, providing predictions of conjunction event evolution with associated uncertainties.
