Andy Regensky

Improved Motion Plane Adaptive 360-Degree Video Compression Using Affine Motion Models

Mar 29, 2025

Abstract:Efficient compression of 360-degree video content requires the application of advanced motion models for interframe prediction. The Motion Plane Adaptive (MPA) motion model projects the frames on multiple perspective planes in the 3D space. It improves the motion compensation by estimating the motion on those planes with a translational diamond search. In this work, we enhance this motion model with an affine parameterization and motion estimation method. Thereby, we find a feasible trade-off between the quality of the reconstructed frames and the computational cost. The affine motion estimation is hereby done with the inverse compositional Lucas-Kanade algorithm. With the proposed method, it is possible to improve the motion compensation significantly, so that the motion compensated frame has a Weighted-to-Spherically-uniform Peak Signal-to-Noise Ratio (WS-PSNR) which is about 1.6 dB higher than with the conventional MPA. In a basic video codec, the improved inter prediction can lead to Bj{\o}ntegaard Delta (BD) rate savings between 9 % and 35 % depending on the block size (BS) and number of motion parameters.

Analysis of Neural Video Compression Networks for 360-Degree Video Coding

Feb 15, 2024

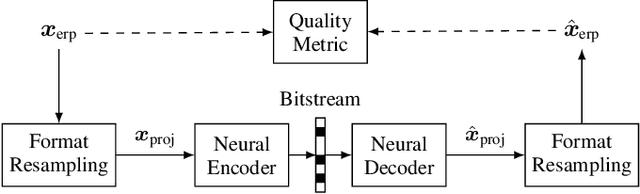

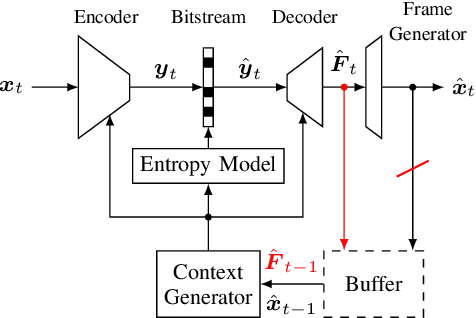

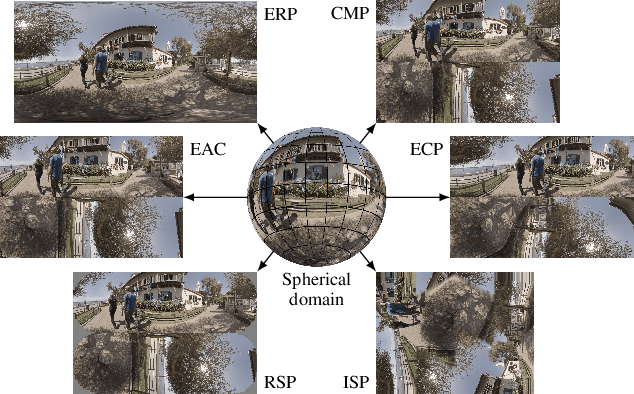

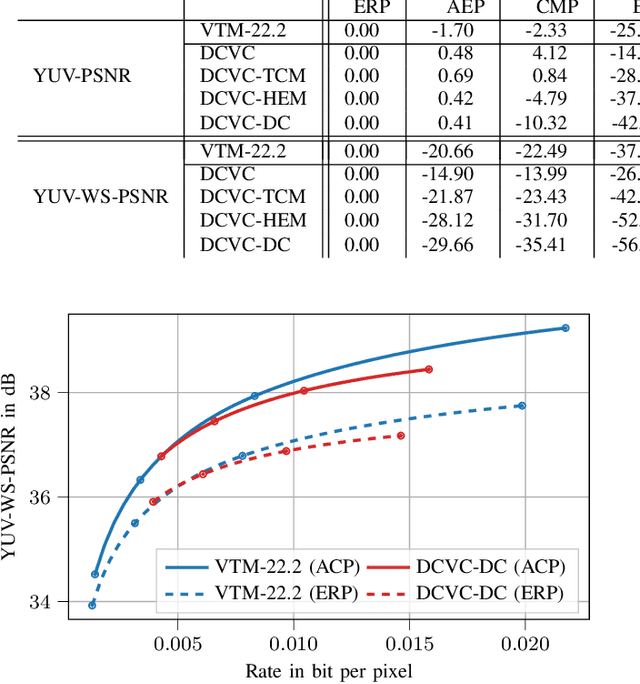

Abstract:With the increasing efforts of bringing high-quality virtual reality technologies into the market, efficient 360-degree video compression gains in importance. As such, the state-of-the-art H.266/VVC video coding standard integrates dedicated tools for 360-degree video, and considerable efforts have been put into designing 360-degree projection formats with improved compression efficiency. For the fast-evolving field of neural video compression networks (NVCs), the effects of different 360-degree projection formats on the overall compression performance have not yet been investigated. It is thus unclear, whether a resampling from the conventional equirectangular projection (ERP) to other projection formats yields similar gains for NVCs as for hybrid video codecs, and which formats perform best. In this paper, we analyze several generations of NVCs and an extensive set of 360-degree projection formats with respect to their compression performance for 360-degree video. Based on our analysis, we find that projection format resampling yields significant improvements in compression performance also for NVCs. The adjusted cubemap projection (ACP) and equatorial cylindrical projection (ECP) show to perform best and achieve rate savings of more than 55% compared to ERP based on WS-PSNR for the most recent NVC. Remarkably, the observed rate savings are higher than for H.266/VVC, emphasizing the importance of projection format resampling for NVCs.

Geometry-Corrected Geodesic Motion Modeling with Per-Frame Camera Motion for 360-Degree Video Compression

Dec 14, 2023Abstract:The large amounts of data associated with 360-degree video require highly effective compression techniques for efficient storage and distribution. The development of improved motion models for 360-degree motion compensation has shown significant improvements in compression efficiency. A geodesic motion model representing translational camera motion proved to be one of the most effective models. In this paper, we propose an improved geometry-corrected geodesic motion model that outperforms the state of the art at reduced complexity. We additionally propose the transmission of per-frame camera motion information, where prior work assumed the same camera motion for all frames of a sequence. Our approach yields average Bj{\o}ntegaard Delta rate savings of 2.27% over H.266/VVC, outperforming the original geodesic motion model by 0.32 percentage points at reduced computational complexity.

Processing Energy Modeling for Neural Network Based Image Compression

Jun 29, 2023

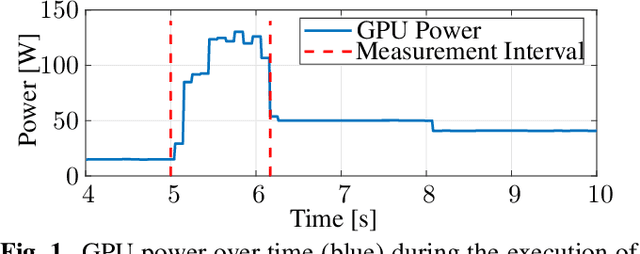

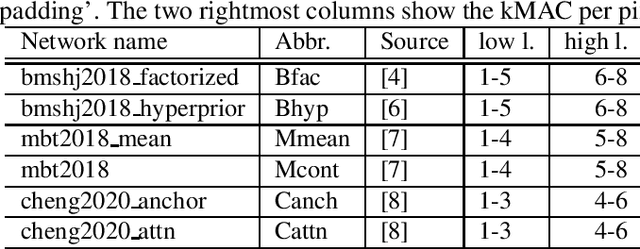

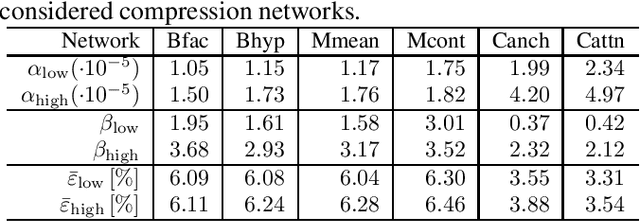

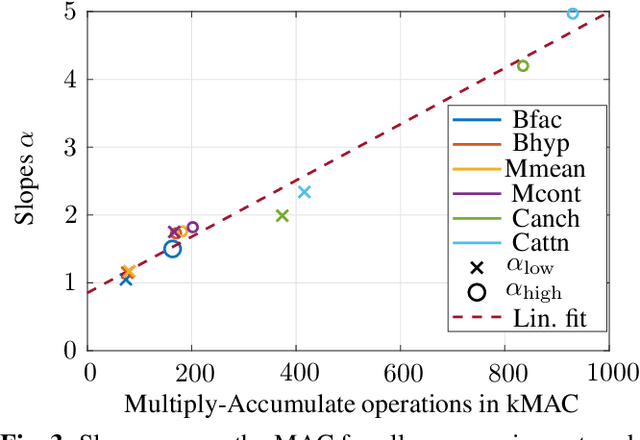

Abstract:Nowadays, the compression performance of neural-networkbased image compression algorithms outperforms state-of-the-art compression approaches such as JPEG or HEIC-based image compression. Unfortunately, most neural-network based compression methods are executed on GPUs and consume a high amount of energy during execution. Therefore, this paper performs an in-depth analysis on the energy consumption of state-of-the-art neural-network based compression methods on a GPU and show that the energy consumption of compression networks can be estimated using the image size with mean estimation errors of less than 7%. Finally, using a correlation analysis, we find that the number of operations per pixel is the main driving force for energy consumption and deduce that the network layers up to the second downsampling step are consuming most energy.

Improving Spherical Image Resampling through Viewport-Adaptivity

Jun 23, 2023

Abstract:The conversion between different spherical image and video projection formats requires highly accurate resampling techniques in order to minimize the inevitable loss of information. Suitable resampling algorithms such as nearest neighbor, linear or cubic resampling are readily available. However, no generally applicable resampling technique exploits the special properties of spherical images so far. Thus, we propose a novel viewport-adaptive resampling (VAR) technique that takes the spherical characteristics of the underlying resampling problem into account. VAR can be applied to any mesh-to-mesh capable resampling algorithm and shows significant gains across all tested techniques. In combination with frequency-selective resampling, VAR outperforms conventional cubic resampling by more than 2 dB in terms of WS-PSNR. A visual inspection and the evaluation of further metrics such as PSNR and SSIM support the positive results.

Motion Plane Adaptive Motion Modeling for Spherical Video Coding in H.266/VVC

Jun 23, 2023Abstract:Motion compensation is one of the key technologies enabling the high compression efficiency of modern video coding standards. To allow compression of spherical video content, special mapping functions are required to project the video to the 2D image plane. Distortions inevitably occurring in these mappings impair the performance of classical motion models. In this paper, we propose a novel motion plane adaptive motion modeling technique (MPA) for spherical video that allows to perform motion compensation on different motion planes in 3D space instead of having to work on the - in theory arbitrarily mapped - 2D image representation directly. The integration of MPA into the state-of-the-art H.266/VVC video coding standard shows average Bj{\o}ntegaard Delta rate savings of 1.72\% with a peak of 3.37\% based on PSNR and 1.55\% with a peak of 2.92\% based on WS-PSNR compared to VTM-14.2.

The Bjøntegaard Bible -- Why your Way of Comparing Video Codecs May Be Wrong

Apr 25, 2023

Abstract:In this paper, we provide an in-depth assessment on the Bj{\o}ntegaard Delta. We construct a large data set of video compression performance comparisons using a diverse set of metrics including PSNR, VMAF, bitrate, and processing energies. These metrics are evaluated for visual data types such as classic perspective video, 360{\deg} video, point clouds, and screen content. As compression technology, we consider multiple hybrid video codecs as well as state-of-the-art neural network based compression methods. Using additional performance points inbetween standard points defined by parameters such as the quantization parameter, we assess the interpolation error of the Bj{\o}ntegaard-Delta (BD) calculus and its impact on the final BD value. Performing an in-depth analysis, we find that the BD calculus is most accurate in the standard application of rate-distortion comparisons with mean errors below 0.5 percentage points. For other applications, the errors are higher (up to 10 percentage points), but can be reduced by a higher number of performance points. We finally come up with recommendations on how to use the BD calculus such that the validity of the resulting BD-values is maximized. Main recommendations include the use of Akima interpolation, the interpretation of relative difference curves, and the use of the logarithmic domain for saturating metrics such as SSIM and VMAF.

Fast Reconstruction of Three-Quarter Sampling Measurements Using Recurrent Local Joint Sparse Deconvolution and Extrapolation

May 05, 2022

Abstract:Recently, non-regular three-quarter sampling has shown to deliver an increased image quality of image sensors by using differently oriented L-shaped pixels compared to the same number of square pixels. A three-quarter sampling sensor can be understood as a conventional low-resolution sensor where one quadrant of each square pixel is opaque. Subsequent to the measurement, the data can be reconstructed on a regular grid with twice the resolution in both spatial dimensions using an appropriate reconstruction algorithm. For this reconstruction, local joint sparse deconvolution and extrapolation (L-JSDE) has shown to perform very well. As a disadvantage, L-JSDE requires long computation times of several dozen minutes per megapixel. In this paper, we propose a faster version of L-JSDE called recurrent L-JSDE (RL-JSDE) which is a reformulation of L-JSDE. For reasonable recurrent measurement patterns, RL-JSDE provides significant speedups on both CPU and GPU without sacrificing image quality. Compared to L-JSDE, 20-fold and 733-fold speedups are achieved on CPU and GPU, respectively.

Real-Time Frequency Selective Reconstruction through Register-Based Argmax Calculation

Feb 28, 2022

Abstract:Frequency Selective Reconstruction (FSR) is a state-of-the-art algorithm for solving diverse image reconstruction tasks, where a subset of pixel values in the image is missing. However, it entails a high computational complexity due to its iterative, blockwise procedure to reconstruct the missing pixel values. Although the complexity of FSR can be considerably decreased by performing its computations in the frequency domain, the reconstruction procedure still takes multiple seconds up to multiple minutes depending on the parameterization. However, FSR has the potential for a massive parallelization greatly improving its reconstruction time. In this paper, we introduce a novel highly parallelized formulation of FSR adapted to the capabilities of modern GPUs and propose a considerably accelerated calculation of the inherent argmax calculation. Altogether, we achieve a 100-fold speed-up, which enables the usage of FSR for real-time applications.

* 6 pages, 5 figures, Source code available at https://gitlab.lms.tf.fau.de/LMS/gpu-fsr

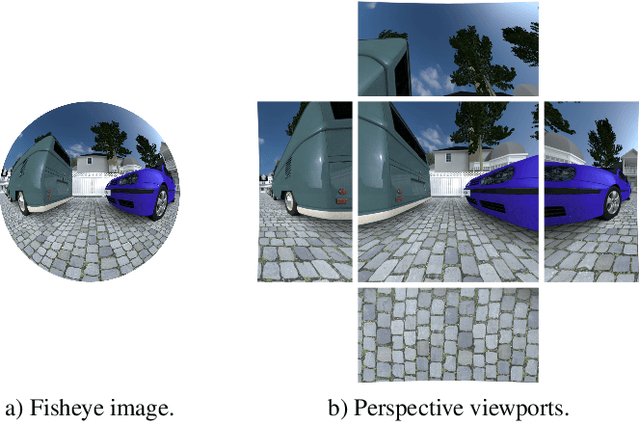

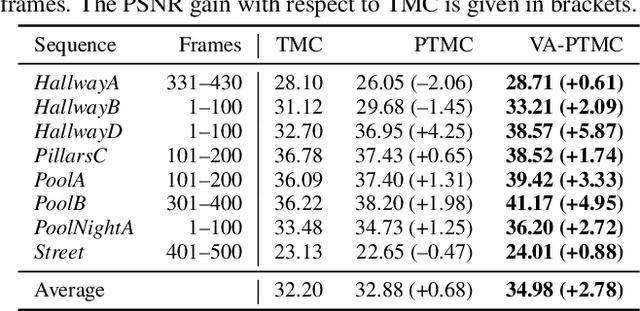

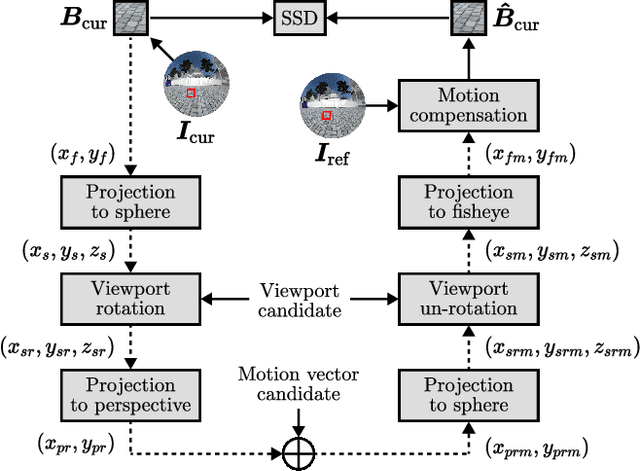

A Novel Viewport-Adaptive Motion Compensation Technique for Fisheye Video

Feb 28, 2022

Abstract:Although fisheye cameras are in high demand in many application areas due to their large field of view, many image and video signal processing tasks such as motion compensation suffer from the introduced strong radial distortions. A recently proposed projection-based approach takes the fisheye projection into account to improve fisheye motion compensation. However, the approach does not consider the large field of view of fisheye lenses that requires the consideration of different motion planes in 3D space. We propose a novel viewport-adaptive motion compensation technique that applies the motion vectors in different perspective viewports in order to realize these motion planes. Thereby, some pixels are mapped to so-called virtual image planes and require special treatment to obtain reliable mappings between the perspective viewports and the original fisheye image. While the state-of-the-art ultra wide-angle compensation is sufficiently accurate, we propose a virtual image plane compensation that leads to perfect mappings. All in all, we achieve average gains of +2.40 dB in terms of PSNR compared to the state of the art in fisheye motion compensation.

* 5 pages, 5 figures, 2 tables

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge