Christian Herglotz

Adaptive Resolution and Chroma Subsampling for Energy-Efficient Video Coding

Feb 05, 2026

Abstract:Conventional video encoders typically employ a fixed chroma subsampling format, such as YUV420, which may not optimally reflect variations in chroma detail across different types of content. This can lead to suboptimal chroma quality and inefficiencies in bitrate allocation. We propose an Adaptive Resolution-Chroma Subsampling (ARCS) framework that jointly optimizes spatial resolution and chroma subsampling to balance perceptual quality and decoding efficiency. ARCS selects an optimal (resolution, chroma format) pair for each bitrate by maximizing a composite quality-complexity objective, while enforcing monotonicity constraints to ensure smooth transitions between representations. Experimental results using x265 show that, compared to a fixed-format encoding (YUV444), ARCS achieves on average a bitrate saving of 13.48% and a 62.18% reduction in decoding time, which we use as a proxy for the decoding energy, at the same colorVideoVDP score. The proposed framework introduces chroma adaptivity as a new control dimension for energy-efficient video streaming.
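The selection step described in this abstract can be illustrated with a minimal sketch. Everything below is an assumption made for illustration and not the authors' implementation: the `Candidate` fields, the linear objective `quality - weight * decode_time_s`, and the reading of the monotonicity constraint as "neither resolution nor chroma fidelity may decrease as bitrate increases" are all hypothetical.

```python
from dataclasses import dataclass

# Hypothetical ordering of chroma formats from least to most chroma detail.
CHROMA_RANK = {"420": 0, "422": 1, "444": 2}


@dataclass(frozen=True)
class Candidate:
    bitrate_kbps: int      # target bitrate of this encode
    height: int            # spatial resolution (frame height in pixels)
    chroma: str            # chroma format: "420", "422", or "444"
    quality: float         # perceptual quality score (higher is better)
    decode_time_s: float   # decoding time, used as a proxy for energy


def score(c: Candidate, weight: float) -> float:
    # Assumed composite objective: reward quality, penalize decoding time.
    return c.quality - weight * c.decode_time_s


def select_ladder(candidates, weight=1.0):
    """Greedily pick one (height, chroma) pair per bitrate, lowest bitrate first.

    Assumed monotonicity rule: height and chroma rank never decrease
    as the bitrate increases.
    """
    ladder = {}
    min_height, min_chroma = 0, 0
    for bitrate in sorted({c.bitrate_kbps for c in candidates}):
        allowed = [
            c for c in candidates
            if c.bitrate_kbps == bitrate
            and c.height >= min_height
            and CHROMA_RANK[c.chroma] >= min_chroma
        ]
        if not allowed:
            continue  # no candidate satisfies the constraint at this bitrate
        best = max(allowed, key=lambda c: score(c, weight))
        ladder[bitrate] = (best.height, best.chroma)
        min_height, min_chroma = best.height, CHROMA_RANK[best.chroma]
    return ladder
```

A greedy pass is the simplest way to enforce such a constraint; a joint search over the whole ladder could find better overall solutions at higher cost.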

Content-Driven Frame-Level Bit Prediction for Rate Control in Versatile Video Coding

Feb 05, 2026

Abstract:Rate control allocates bits efficiently across frames to meet a target bitrate while maintaining quality. Conventional two-pass rate control (2pRC) in Versatile Video Coding (VVC) relies on analytical rate-QP models, which often fail to capture nonlinear spatial-temporal variations, causing quality instability and high complexity due to multiple trial encodes. This paper proposes a content-adaptive framework that predicts frame-level bit consumption using lightweight features from the Video Complexity Analyzer (VCA) and quantization parameters within a Random Forest regression. On ultra-high-definition sequences encoded with VVenC, the model achieves strong correlation with ground truth, yielding R² values of 0.93, 0.88, and 0.77 for I-, P-, and B-frames, respectively. Integrated into a rate-control loop, it achieves comparable coding efficiency to 2pRC while reducing total encoding time by 33.3%. The results show that VCA-driven bit prediction provides a computationally efficient and accurate alternative to conventional rate-QP models.

Fast Multirate Encoding for 360° Video in OMAF Streaming Workflows

Jan 24, 2026

Abstract:Preparing high-quality 360-degree video for HTTP Adaptive Streaming requires encoding each sequence into multiple representations spanning different resolutions and quantization parameters (QPs). For ultra-high-resolution immersive content such as 8K 360-degree video, this process is computationally intensive due to the large number of representations and the high complexity of modern codecs. This paper investigates fast multirate encoding strategies that reduce encoding time by reusing encoder analysis information across QPs and resolutions. We evaluate two cross-resolution information-reuse pipelines that differ in how reference encodes propagate across resolutions: (i) a strict HD -> 4K -> 8K cascade with scaled analysis reuse, and (ii) a resolution-anchored scheme that initializes each resolution with its own highest-bitrate reference before guiding dependent encodes. In addition to evaluating these pipelines on standard equirectangular projection (ERP) content, we also apply the same two pipelines to cubemap-projection (CMP) tiling, where each 360-degree frame is partitioned into independently encoded tiles. CMP introduces substantial parallelism, while still benefiting from the proposed multirate analysis-reuse strategies. Experimental results using the SJTU 8K 360-degree dataset show that hierarchical analysis reuse significantly accelerates HEVC encoding with minimal rate-distortion impact across both equirectangular and CMP-tiled content, yielding encoding-time reductions of roughly 33%-59% for ERP and about 51% on average for CMP, with Bjøntegaard Delta Encoding Time (BDET) gains approaching -50% and wall-clock speedups of up to 4.2x.

A High-Level Feature Model to Predict the Encoding Energy of a Hardware Video Encoder

Oct 14, 2025

Abstract:In today's society, live video streaming and user-generated content streamed from battery-powered devices are ubiquitous. Live streaming requires real-time video encoding, and hardware video encoders are well suited for such an encoding task. In this paper, we introduce a high-level feature model using Gaussian process regression that can predict the encoding energy of a hardware video encoder. In an evaluation setup restricted to only P-frames and a single keyframe, the model can predict the encoding energy with a mean absolute percentage error of approximately 9%. Further, we demonstrate with an ablation study that spatial resolution is a key high-level feature for encoding energy prediction of a hardware encoder. A practical application of our model is the prior estimation of the energy required to encode a video at various spatial resolutions, with different coding standards and codec presets.
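For readers unfamiliar with the error measure quoted in this abstract, the following is a minimal sketch of the standard mean absolute percentage error (MAPE). The function name and the example energy values are hypothetical and do not come from the paper.

```python
def mape(actual, predicted):
    """Mean absolute percentage error, in percent.

    `actual` holds measured values (for example, encoding energy in joules)
    and `predicted` holds the corresponding model estimates.
    Measured values must be non-zero.
    """
    if len(actual) != len(predicted) or not actual:
        raise ValueError("inputs must be non-empty and of equal length")
    relative_errors = (abs(p - a) / abs(a) for a, p in zip(actual, predicted))
    return 100.0 * sum(relative_errors) / len(actual)


# Hypothetical measured and predicted energies for two encodes.
measured = [100.0, 200.0]
estimated = [110.0, 180.0]
error_percent = mape(measured, estimated)
```

Because each error is normalized by the measured value, MAPE weights a fixed absolute error more heavily for low-energy encodes than for high-energy ones.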

Design Space Exploration at Frame-Level for Joint Decoding Energy and Quality Optimization in VVC

Oct 01, 2024

Abstract:In the pursuit of a reduced energy demand of VVC decoders, it was found that the coding tool configuration has a substantial influence on the bit rate efficiency and the decoding energy demand. The Advanced Design Space Exploration algorithm, as proposed in the literature, can derive coding tool configurations that provide optimal trade-offs between rate and energy efficiency. Yet, some trade-off points in the design space cannot be reached with the state-of-the-art methodology, which defines coding tools for an entire bitstream. This work proposes a novel, granular adjustment of the coding tool usage in VVC. Consequently, the optimization algorithm is adjusted to explore coding tool configurations that operate at frame level. Moreover, new optimization criteria are introduced to focus the search on specific bit rates. As a result, coding tool configurations are obtained that yield previously inaccessible trade-offs between bit rate efficiency and decoding energy demand for VVC-coded sequences. The proposed methodology extends the design space and enhances the continuity of the Pareto front.

* submitted, accepted and published at EUSIPCO 2024, Special Session on Frugality for Video Streaming
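The notion of a Pareto front used in this abstract can be sketched as follows. This is a generic two-objective filter, not the Advanced Design Space Exploration algorithm itself; the function name and the assumption that each configuration is summarized as a `(bitrate, energy)` pair with lower values being better for both are illustrative only.

```python
def pareto_front(points):
    """Return the non-dominated (bitrate, energy) points, sorted by bitrate.

    A point is dominated if another point is no worse in both objectives
    and strictly better in at least one. Lower is better for both.
    Exact duplicates and ties on energy are reported only once.
    """
    front = []
    best_energy = float("inf")
    # After sorting by bitrate, a point is on the front only if its energy
    # is strictly lower than that of every point with a smaller-or-equal bitrate.
    for rate, energy in sorted(points):
        if energy < best_energy:
            front.append((rate, energy))
            best_energy = energy
    return front
```

Adding configurations that fall between existing front points, as the abstract describes, fills gaps along this front rather than moving it.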

Exploiting Change Blindness for Video Coding: Perspectives from a Less Promising User Study

Jul 31, 2024

Abstract:What the human visual system can perceive is strongly limited by the capacity of our working memory and attention. Such limitations result in the human observer's inability to perceive large-scale changes in a stimulus, a phenomenon known as change blindness. In this paper, we started with the premise that this phenomenon can be exploited in video coding, especially HDR-video compression where the bitrate is high. We designed an HDR-video encoding approach that relies on spatially and temporally varying quantization parameters within the framework of HEVC video encoding. In the absence of a reliable change blindness prediction model, to extract compression candidate regions (CCR) we used an existing saliency prediction algorithm. We explored different configurations and carried out a subjective study to test our hypothesis. While our methodology did not lead to significantly superior performance in terms of the ratio between perceived quality and bitrate, we were able to determine potential flaws in our methodology, such as the employed saliency model for CCR prediction (chosen for computational efficiency, but eventually not sufficiently accurate), as well as a very strong subjective bias due to observers priming themselves early on in the experiment about the type of artifacts they should look for, thus creating a scenario with little ecological validity.

SVT-AV1 Encoding Bitrate Estimation Using Motion Search Information

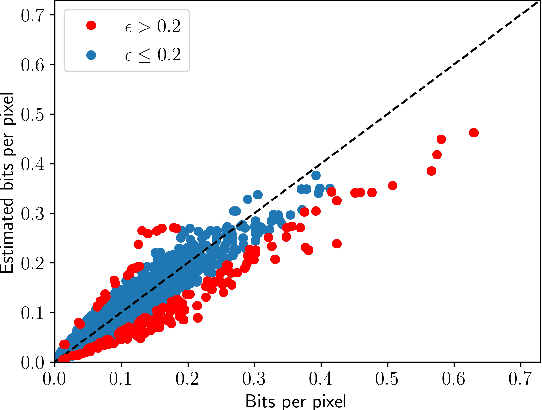

Jul 08, 2024

Abstract:Achieving high compression efficiency while keeping encoding energy consumption low requires prioritizing which videos need more sophisticated encoding techniques. However, the effects vary strongly with the content, so information on how well a video can be compressed is required. This can be obtained by estimating the encoded bitstream size prior to encoding. We found that the errors between estimated motion vectors from Motion Search, an algorithm that predicts temporal changes in videos, correlate well with the encoded bitstream size. Combining Motion Search with Random Forests, the encoding bitrate can be estimated with a Pearson correlation above 0.96.
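As a reminder of what the reported Pearson correlation measures, here is a minimal sketch of the standard formula. The function name and the idea of pairing a per-video motion-search error with a bitstream size are illustrative assumptions, not details from the paper.

```python
import math


def pearson(xs, ys):
    """Pearson correlation coefficient between two equal-length sequences.

    Here `xs` could hold a per-video motion-search error and `ys` the
    corresponding encoded bitstream size (both hypothetical inputs).
    """
    if len(xs) != len(ys) or len(xs) < 2:
        raise ValueError("need two sequences of equal length >= 2")
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    # Raises ZeroDivisionError if either sequence is constant.
    return cov / math.sqrt(var_x * var_y)
```

The coefficient only captures linear association, so a value above 0.96 indicates that estimated and actual bitrates lie close to a straight line.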

Energy Reduction Opportunities in HDR Video Encoding

Jun 17, 2024

Abstract:This paper investigates the energy consumption of video encoding for high dynamic range videos. Specifically, we compare the energy consumption of the compression process for three cases: a 10-bit input sequence, a tone-mapped 8-bit input sequence encoded at an internal bit depth of 10 bit, and an 8-bit input sequence encoded at an internal bit depth of 8 bit. We find that linear scaling of the luminance and chrominance values leads to degradations of the visual quality, but that significant encoding complexity and thus encoding energy can be saved. An important reason for this is that the 8-bit encoder can use vector instructions that are not available for the 10-bit encoder. Furthermore, we find that at sufficiently low target bitrates, the compression efficiency at an internal bit depth of 8 bit exceeds the compression efficiency of regular 10-bit encoding.

Towards Video Codec Performance Evaluation: A Rate-Energy-Distortion Perspective

May 28, 2024

Abstract:The Bjøntegaard Delta rate (BD-rate) objectively assesses the coding efficiency of video codecs using the rate-distortion (R-D) performance but overlooks encoding energy, which is crucial in practical applications, especially for those on handheld devices. Although R-D analysis can be extended to incorporate encoding energy as energy-distortion (E-D), it fails to integrate all three parameters seamlessly. This work proposes a novel approach to address this limitation by introducing a 3D representation of rate, encoding energy, and distortion through surface fitting. In addition, we evaluate various surface fitting techniques based on their accuracy and investigate the proposed 3D representation and its projections. The overlapping areas in projections help in encoder selection and recommend avoiding the slow presets of the older encoders (x264, x265), as the recent encoders (x265, VVenC) offer higher quality for the same bitrate-energy performance and provide a lower rate for the same energy-distortion performance.
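As background for the BD-rate mentioned in this abstract, the sketch below implements the classic cubic-fit variant for exactly four rate-quality points per codec. It is not the 3D surface-fitting method the paper proposes. The function names are hypothetical, and restricting the input to four points (so that the cubic fit becomes an exact interpolation) is a simplifying assumption; practical tools often use piecewise-cubic interpolation instead.

```python
import math


def lagrange(xs, ys, x):
    """Evaluate the polynomial through the points (xs[i], ys[i]) at x."""
    total = 0.0
    for i, (xi, yi) in enumerate(zip(xs, ys)):
        term = yi
        for j, xj in enumerate(xs):
            if j != i:
                term *= (x - xj) / (xi - xj)
        total += term
    return total


def simpson(f, a, b):
    """Simpson's rule on [a, b]; exact for polynomials up to degree three."""
    return (b - a) / 6.0 * (f(a) + 4.0 * f((a + b) / 2.0) + f(b))


def bd_rate(anchor, test):
    """Average bitrate difference of `test` versus `anchor`, in percent.

    Each argument is a list of four (bitrate, quality) pairs with distinct
    quality values. Negative results mean `test` needs less bitrate for
    the same quality.
    """
    q_a = [q for _, q in anchor]
    log_r_a = [math.log(r) for r, _ in anchor]
    q_t = [q for _, q in test]
    log_r_t = [math.log(r) for r, _ in test]

    # Integrate only over the quality range covered by both curves.
    lo = max(min(q_a), min(q_t))
    hi = min(max(q_a), max(q_t))
    if hi <= lo:
        raise ValueError("quality ranges do not overlap")

    def diff(q):
        return lagrange(q_t, log_r_t, q) - lagrange(q_a, log_r_a, q)

    avg_log_diff = simpson(diff, lo, hi) / (hi - lo)
    return (math.exp(avg_log_diff) - 1.0) * 100.0
```

Working in the log-rate domain makes the result a relative (percentage) difference, which is why a codec that uses 10% less bitrate at every quality level yields a BD-rate of -10%.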

Predicting the Energy Demand of a Hardware Video Decoder with Unknown Design Using Software Profiling

Feb 15, 2024

Abstract:Energy efficiency for video communications and video-on-demand streaming is essential for mobile devices with a limited battery capacity. Therefore, hardware (HW) decoder implementations are commonly used to significantly reduce the energy demand of video playback. The energy consumption of such a HW implementation largely depends on the preceding standardization of the video codec, which specifies the coding tools and methods that the codec includes. However, during standardization, the true complexity of a HW implementation is unknown, and the adoption of coding tools relies solely on the judgment of industry experts. By using software (SW) decoder profiling, we are able to estimate the SW decoding energy demand with an average error of 1.25%. We propose a method that accurately models the energy demand of existing HW decoders with an average error of 1.79% by exploiting information from SW decoder profiling. Motivated by the low estimation error, we propose a HW decoding energy metric that can predict the complexity of an unknown HW implementation using information from existing HW decoder implementations and available SW implementations of the future video decoder. By using multiple video codecs for model training, we can predict the complexity of a HW decoder with an error of less than 8% and a minimum error of 4.54% without using the corresponding HW decoder for training.
