Andreas Niekler

Using Language Models on Low-end Hardware

May 08, 2023

Abstract: This paper evaluates the viability of using fixed language models for training text classification networks on low-end hardware. We combine language models with a CNN architecture and put together a comprehensive benchmark with 8 datasets covering single-label and multi-label classification of topic, sentiment, and genre. Our observations are distilled into a list of trade-offs, concluding that there are scenarios where not fine-tuning a language model yields competitive effectiveness at faster training, requiring only a quarter of the memory compared to fine-tuning.

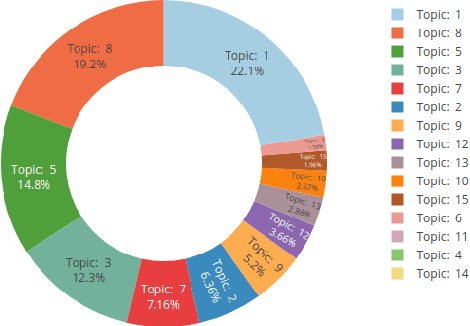

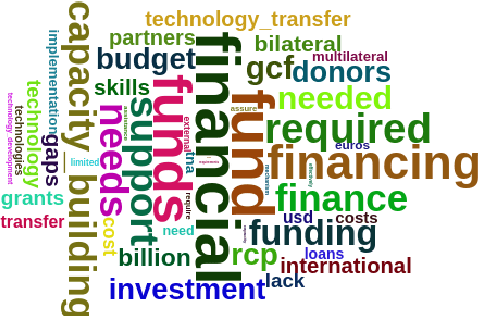

Using Text Classification with a Bayesian Correction for Estimating Overreporting in the Creditor Reporting System on Climate Adaptation Finance

Nov 30, 2022

Abstract: Development funds are essential to finance climate change adaptation and are thus an important part of international climate policy. However, the absence of a common reporting practice makes it difficult to assess the amount and distribution of such funds. Research has questioned the credibility of reported figures, indicating that adaptation financing is in fact lower than published figures suggest. Projects claiming a greater relevance to climate change adaptation than they target are referred to as "overreported". To estimate realistic rates of overreporting in large data sets over time, we propose an approach based on state-of-the-art text classification. To date, assessments of credibility have relied on small, manually evaluated samples. We use such a sample data set to train a classifier with an accuracy of $89.81\% \pm 0.83\%$ (tenfold cross-validation) and extrapolate to larger data sets to identify overreporting. Additionally, we propose a method that incorporates evidence from smaller, higher-quality data to correct predicted rates using Bayes' theorem. This enables a comparison of different annotation schemes to estimate the degree of overreporting in climate change adaptation. Our results support findings that indicate extensive overreporting of $32.03\%$ with a credible interval of $[19.81\%;48.34\%]$.
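
The abstract does not spell out how the Bayesian correction is computed. As a rough illustration of the general idea only (not the authors' method), the sketch below treats a classifier-predicted rate as a Beta prior and updates it with a small, manually checked sample through a conjugate Beta-binomial update. All numbers, the `prior_strength` parameter, and the function names are hypothetical.

```python
import random

def beta_posterior(prior_a, prior_b, positives, n):
    """Conjugate update: Beta(a, b) prior plus binomial evidence gives a Beta posterior."""
    return prior_a + positives, prior_b + (n - positives)

def credible_interval(a, b, level=0.95, draws=20000, seed=0):
    """Approximate an equal-tailed credible interval by sampling from Beta(a, b)."""
    rng = random.Random(seed)
    samples = sorted(rng.betavariate(a, b) for _ in range(draws))
    lower = samples[int((1 - level) / 2 * draws)]
    upper = samples[int((1 + level) / 2 * draws) - 1]
    return lower, upper

# Hypothetical inputs, chosen only for illustration.
predicted_rate = 0.40   # overreporting rate predicted by a classifier on the large data set
prior_strength = 20     # how many pseudo-observations the prediction is worth (assumption)
prior_a = predicted_rate * prior_strength
prior_b = (1 - predicted_rate) * prior_strength

# Hypothetical high-quality sample: 12 of 50 projects judged overreported.
a, b = beta_posterior(prior_a, prior_b, positives=12, n=50)
posterior_mean = a / (a + b)
lower, upper = credible_interval(a, b)

print(f"corrected rate: {posterior_mean:.3f}, 95% interval: [{lower:.3f}, {upper:.3f}]")
```

In this toy setup the corrected rate lies between the classifier's prediction and the rate observed in the small sample; a larger `prior_strength` keeps it closer to the prediction.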

Application of the interactive Leipzig Corpus Miner as a generic research platform for the use in the social sciences

Oct 06, 2021

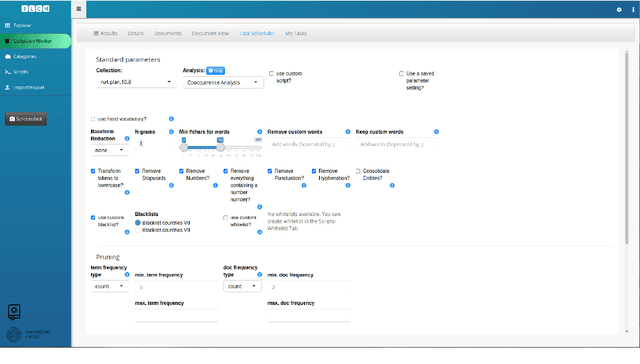

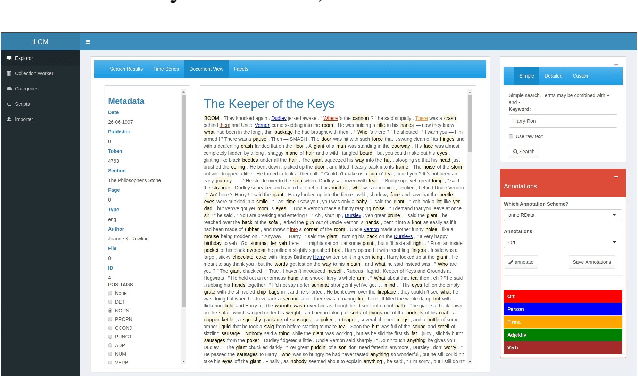

Abstract: This article introduces the interactive Leipzig Corpus Miner (iLCM), a newly released, open-source software to perform automatic content analysis. Since the iLCM is based on the R programming language, its generic text mining procedures provided via a user-friendly graphical user interface (GUI) can easily be extended using the integrated IDE RStudio-Server or numerous other interfaces in the tool. Furthermore, the iLCM offers various possibilities to use quantitative and qualitative research approaches in combination. Some of these possibilities will be presented in more detail in the following.

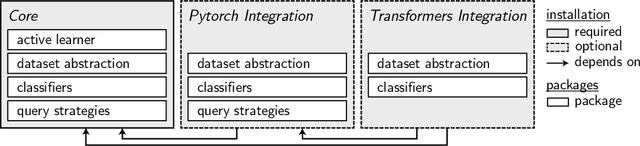

Small-text: Active Learning for Text Classification in Python

Jul 21, 2021

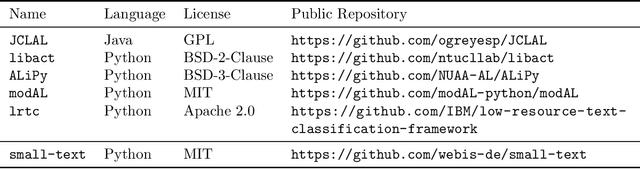

Abstract: We present small-text, a simple modular active learning library, which offers pool-based active learning for text classification in Python. It comes with various pre-implemented state-of-the-art query strategies, including some which can leverage the GPU. Clearly defined interfaces allow combining a multitude of such query strategies with different classifiers, thereby facilitating a quick mix and match, and enabling rapid development of both active learning experiments and applications. To make various classifiers accessible in a consistent way, it integrates several well-known machine learning libraries, namely, scikit-learn, PyTorch, and huggingface transformers -- the latter two integrations are available as optionally installable extensions. The library is available under the MIT License at https://github.com/webis-de/small-text.

Uncertainty-based Query Strategies for Active Learning with Transformers

Jul 12, 2021

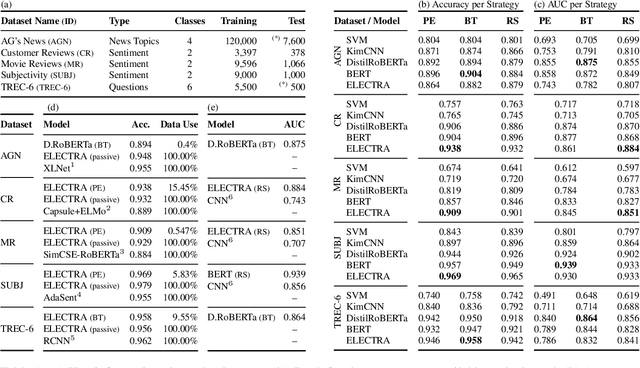

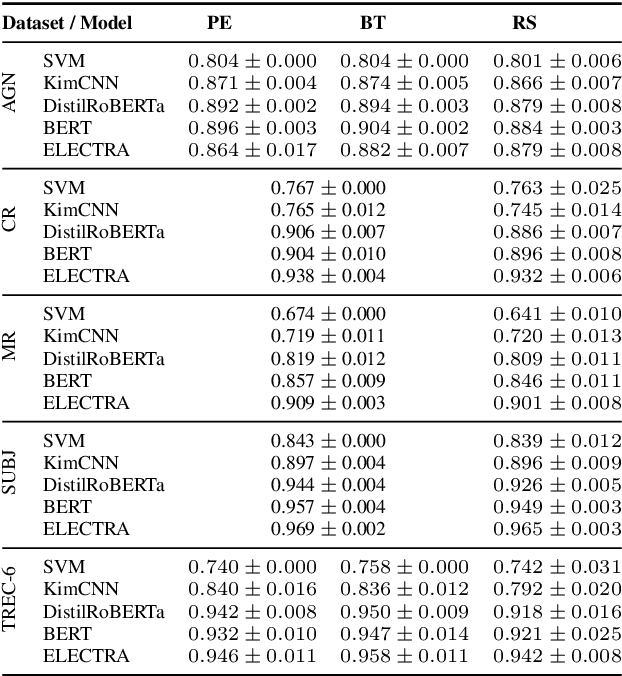

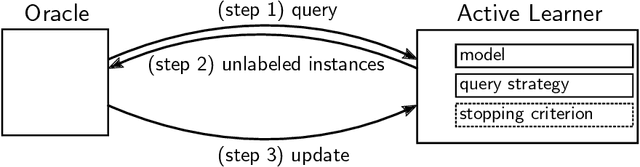

Abstract: Active learning is the iterative construction of a classification model through targeted labeling, enabling significant labeling cost savings. As most research on active learning was carried out before transformer-based language models ("transformers") became popular, comparably few papers have investigated how transformers can be combined with active learning to date, despite the practical importance of this combination. This can be attributed to the fact that using state-of-the-art query strategies for transformers induces a prohibitive runtime overhead, which effectively cancels out, or even outweighs, the aforementioned cost savings. In this paper, we revisit uncertainty-based query strategies, which had been largely outperformed before, but are particularly suited in the context of fine-tuning transformers. In an extensive evaluation on five widely used text classification benchmarks, we show that considerable improvements of up to 14.4 percentage points in area under the learning curve are achieved, as well as a final accuracy close to the state of the art for all but one benchmark, using only between 0.4% and 15% of the training data.
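
To make the notion of an uncertainty-based query strategy concrete, here is a minimal sketch of three textbook scores (least confidence, margin, and prediction entropy) applied to class probabilities. It is not taken from the paper: the function names, the `query` helper, and the probability values are illustrative assumptions, and in practice the probabilities would come from a fine-tuned model.

```python
import math

def least_confidence(probs):
    """Higher score means more uncertain: one minus the top class probability."""
    return 1.0 - max(probs)

def margin(probs):
    """Lower score means more uncertain: gap between the two most likely classes."""
    top, second = sorted(probs, reverse=True)[:2]
    return top - second

def prediction_entropy(probs):
    """Higher score means more uncertain: Shannon entropy of the predicted distribution."""
    return -sum(p * math.log(p) for p in probs if p > 0)

def query(pool_probs, score, n, highest_first=True):
    """Return the indices of the n pool instances that the score ranks as most uncertain."""
    ranked = sorted(range(len(pool_probs)),
                    key=lambda i: score(pool_probs[i]),
                    reverse=highest_first)
    return ranked[:n]

# Hypothetical predicted class probabilities for four unlabeled documents.
pool_probs = [
    [0.98, 0.01, 0.01],
    [0.40, 0.35, 0.25],
    [0.70, 0.20, 0.10],
    [0.50, 0.49, 0.01],
]

by_confidence = query(pool_probs, least_confidence, n=2)
by_entropy = query(pool_probs, prediction_entropy, n=2)
by_margin = query(pool_probs, margin, n=2, highest_first=False)
print(by_confidence, by_entropy, by_margin)
```

Each score costs only one pass over the predicted probabilities, which is why such strategies add little runtime overhead. The three scores can still select different instances from the same pool.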

Mining Legacy Issues in Open Pit Mining Sites: Innovation & Support of Renaturalization and Land Utilization

May 13, 2021

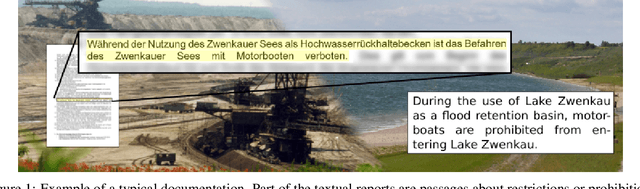

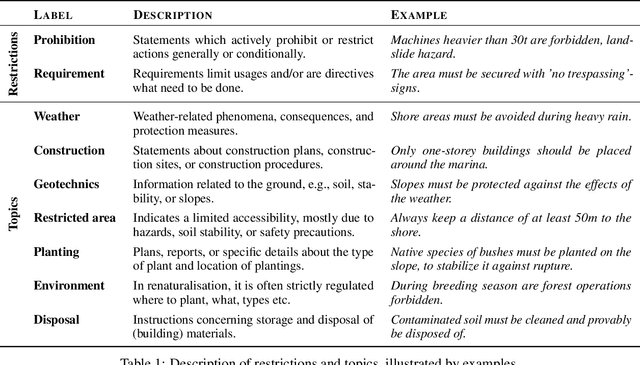

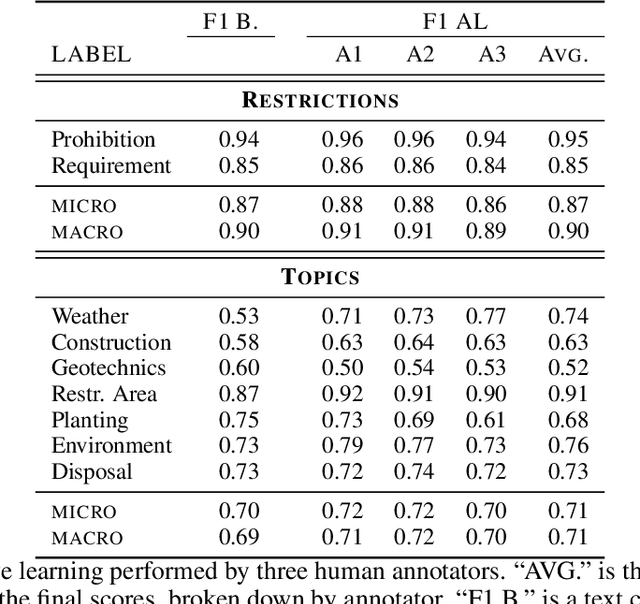

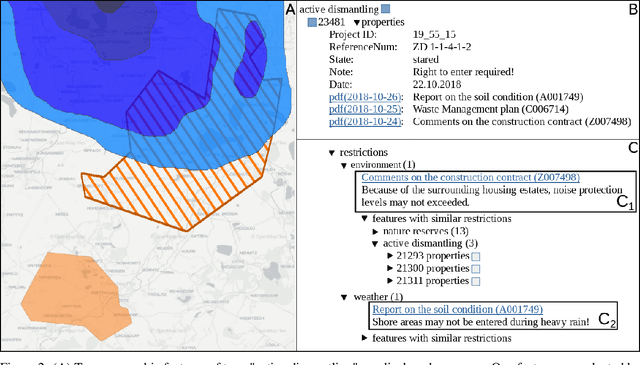

Abstract: Open pit mines have left many regions worldwide inhospitable or uninhabitable. To put these regions back into use, entire stretches of land must be renaturalized. For the sustainable subsequent use or transfer to a new primary use, many contaminated sites and soil information have to be permanently managed. In most cases, this information is available in the form of expert reports in unstructured data collections or file folders, which in the best case are digitized. Due to the size and complexity of the data, it is difficult for a single person to have an overview of this data in order to be able to make reliable statements. This is one of the most important obstacles to the rapid transfer of these areas to after-use. An information-based approach to this issue supports fulfilling several Sustainable Development Goals regarding environmental issues, health and climate action. We use a stack of Optical Character Recognition, Text Classification, Active Learning and Geographic Information System Visualization to effectively mine and visualize this information. Subsequently, we link the extracted information to geographic coordinates and visualize it using a Geographic Information System. Active Learning plays a vital role because our dataset provides no training data. In total, we process nine categories and actively learn their representation in our dataset. We evaluate the OCR, Active Learning and Text Classification separately to report the performance of the system. Active Learning and text classification results are twofold: whereas our categories about restrictions work sufficiently well ($> 0.85$ F1), the seven topic-oriented categories were complicated for human coders and hence achieved mediocre evaluation scores ($< 0.70$ F1).

A Survey of Active Learning for Text Classification using Deep Neural Networks

Aug 17, 2020

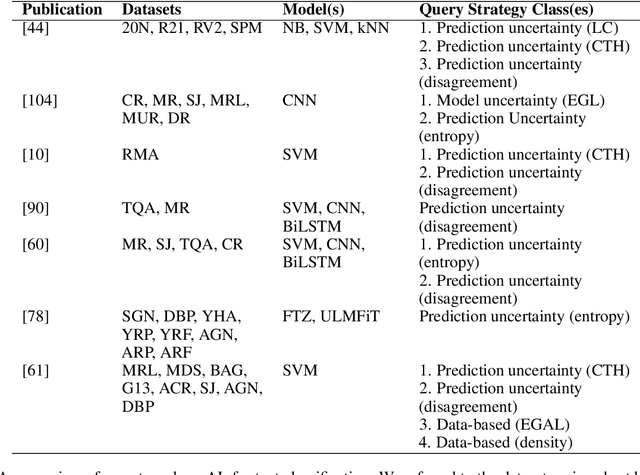

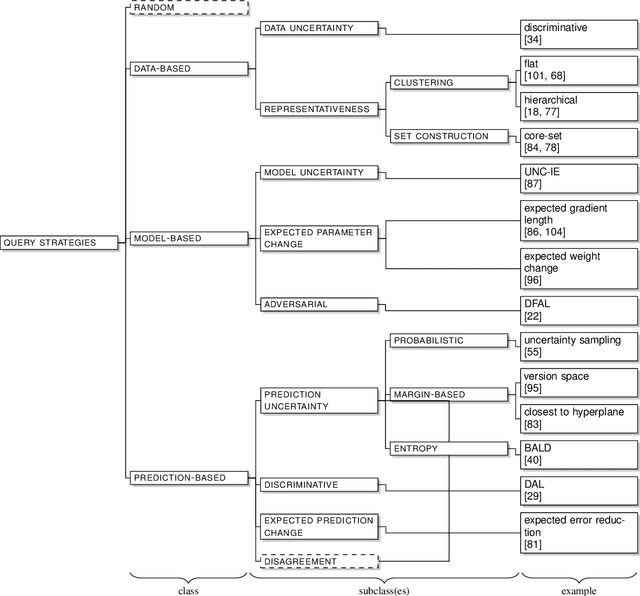

Abstract: Natural language processing (NLP) and neural networks (NNs) have both undergone significant changes in recent years. For active learning (AL) purposes, NNs are, however, less commonly used -- despite their current popularity. By using the superior text classification performance of NNs for AL, we can either increase a model's performance using the same amount of data or reduce the data and therefore the required annotation efforts while keeping the same performance. We review AL for text classification using deep neural networks (DNNs) and elaborate on two main causes which used to hinder the adoption: (a) the inability of NNs to provide reliable uncertainty estimates, on which the most commonly used query strategies rely, and (b) the challenge of training DNNs on small data. To investigate the former, we construct a taxonomy of query strategies, which distinguishes between data-based, model-based, and prediction-based instance selection, and investigate the prevalence of these classes in recent research. Moreover, we review recent NN-based advances in NLP like word embeddings or language models in the context of (D)NNs, survey the current state of the art at the intersection of AL, text classification, and DNNs and relate recent advances in NLP to AL. Finally, we analyze recent work in AL for text classification, connect the respective query strategies to the taxonomy, and outline commonalities and shortcomings. As a result, we highlight gaps in current research and present open research questions.

iLCM - A Virtual Research Infrastructure for Large-Scale Qualitative Data

May 11, 2018

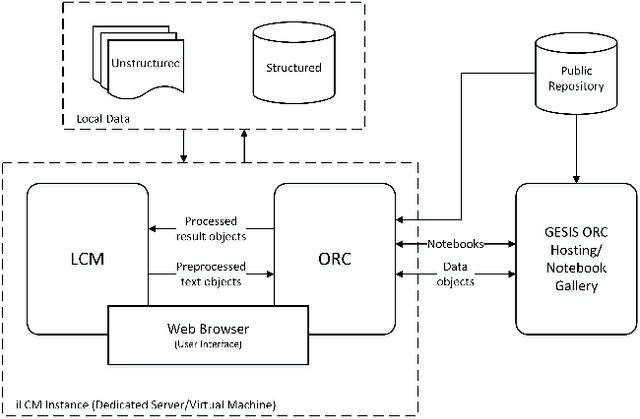

Abstract: The iLCM project pursues the development of an integrated research environment for the analysis of structured and unstructured data in a "Software as a Service" (SaaS) architecture. The research environment addresses requirements for the quantitative evaluation of large amounts of qualitative data with text mining methods as well as requirements for the reproducibility of data-driven research designs in the social sciences. For this, the iLCM research environment comprises two central components. First, the Leipzig Corpus Miner (LCM), a decentralized SaaS application for the analysis of large amounts of news texts developed in a previous Digital Humanities project. Second, the text mining tools implemented in the LCM are extended by an "Open Research Computing" (ORC) environment for executable script documents, so-called "notebooks". This novel integration makes it possible to combine generic, high-performance methods for processing large amounts of unstructured text data with individual program scripts that address specific research requirements in computational social science and digital humanities.

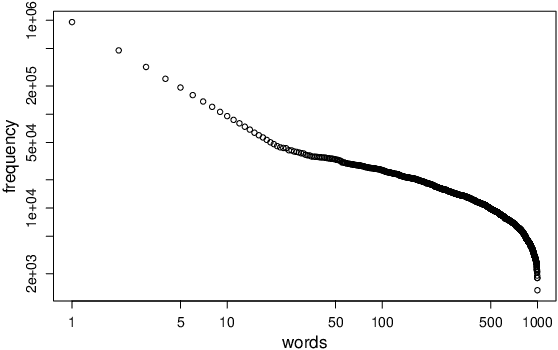

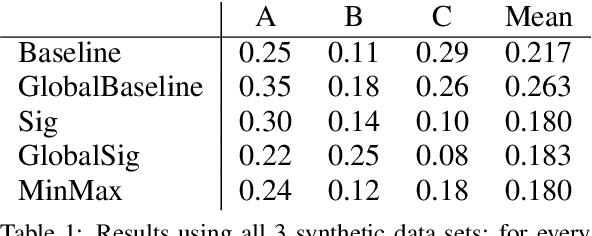

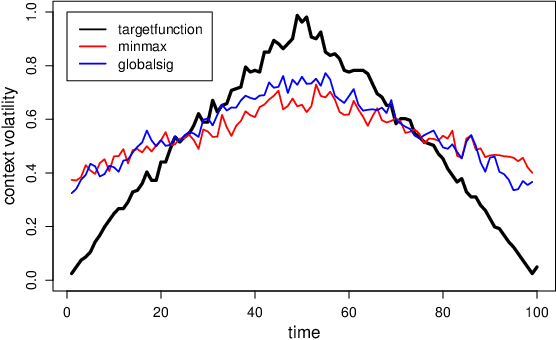

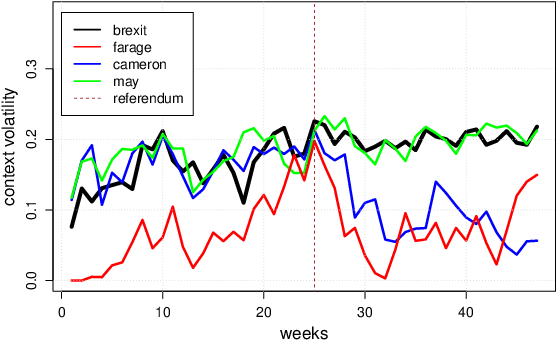

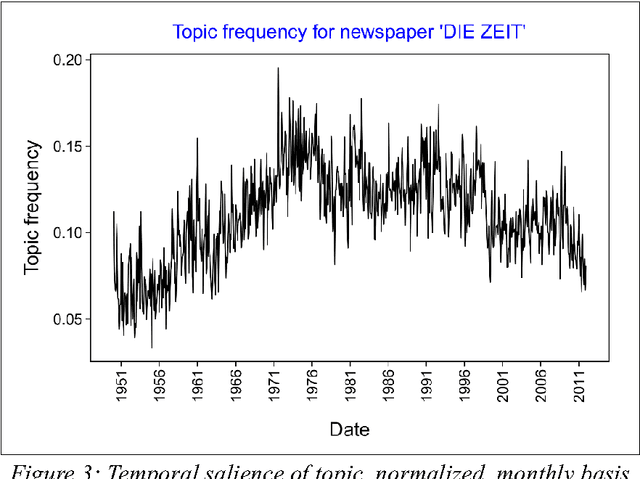

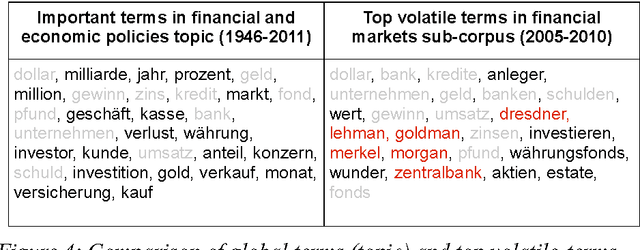

Detecting and assessing contextual change in diachronic text documents using context volatility

Nov 15, 2017

Abstract: Terms in diachronic text corpora may exhibit a high degree of semantic dynamics that is only partially captured by the common notion of semantic change. The new measure of context volatility that we propose models the degree to which terms change context in a text collection over time. The computation of context volatility for a word relies on the significance values of its co-occurrent terms and the corresponding co-occurrence ranks in sequential time spans. We define a baseline and present an efficient computational approach in order to overcome computational issues related to the data structure. Results are evaluated both on synthetic documents that are used to simulate contextual changes and on a real example based on British newspaper texts.
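
The abstract names the ingredients of context volatility (significance values of co-occurring terms and their ranks in sequential time spans) but not the exact formula. The sketch below is therefore only one plausible reading, not the authors' definition: it ranks a target term's co-occurrences per time slice and averages the normalized rank change between consecutive slices. The function names, the handling of absent terms, and the toy data are assumptions.

```python
def cooccurrence_ranks(significance, vocab):
    """Rank co-occurring terms by significance (1 = strongest); absent terms get the worst rank."""
    ordered = sorted(significance, key=significance.get, reverse=True)
    rank = {term: i + 1 for i, term in enumerate(ordered)}
    return {term: rank.get(term, len(vocab)) for term in vocab}

def context_volatility(slices):
    """Mean absolute rank change between consecutive time slices, scaled by vocabulary size.

    `slices` holds one dict per time span, mapping each co-occurring term of the
    target word to a precomputed significance value (at least two slices expected).
    """
    vocab = sorted(set().union(*slices))
    per_slice = [cooccurrence_ranks(s, vocab) for s in slices]
    total = 0.0
    for term in vocab:
        changes = [abs(later[term] - earlier[term])
                   for earlier, later in zip(per_slice, per_slice[1:])]
        total += sum(changes) / len(changes)
    return total / (len(vocab) * len(vocab))

# Toy significance values for the co-occurrences of one target term in three time spans.
stable = [{"a": 3.0, "b": 2.0, "c": 1.0}] * 3
shifting = [
    {"a": 3.0, "b": 2.0, "c": 1.0},
    {"c": 3.0, "b": 2.0, "a": 1.0},
    {"a": 3.0, "b": 2.0, "c": 1.0},
]

stable_score = context_volatility(stable)
shifting_score = context_volatility(shifting)
print(stable_score, shifting_score)
```

A term whose co-occurrence ranking never changes scores zero under this reading, while a term whose context is reshuffled between time spans scores higher.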

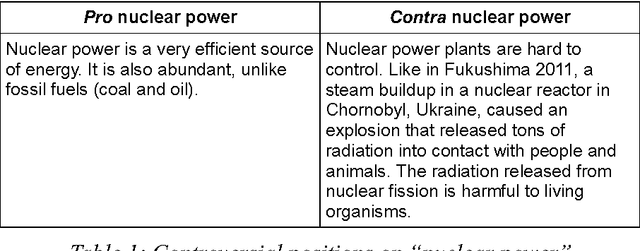

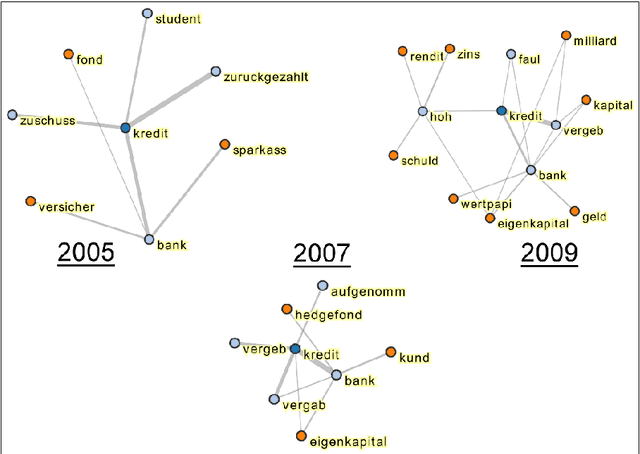

Modeling the dynamics of domain specific terminology in diachronic corpora

Jul 11, 2017

Abstract: In terminology work, natural language processing, and digital humanities, several studies address the analysis of variations in context and meaning of terms in order to detect semantic change and the evolution of terms. We distinguish three different approaches to describe contextual variations: methods based on the analysis of patterns and linguistic clues, methods exploring the latent semantic space of single words, and methods for the analysis of topic membership. The paper presents the notion of context volatility as a new measure for detecting semantic change and applies it to key term extraction in a political science case study. The measure quantifies the dynamics of a term's contextual variation within a diachronic corpus to identify periods of time that are characterised by intense controversial debates or substantial semantic transformations.
