Amir Ghalamzan E.

Deep Functional Predictive Control for Strawberry Cluster Manipulation using Tactile Prediction

Mar 09, 2023

Abstract: This paper introduces a novel approach to the problem of Physical Robot Interaction (PRI) during robot pushing tasks. The approach uses a data-driven forward model based on tactile predictions to tell the controller how the object being pushed by a robot tactile finger, such as a strawberry stem, may move in the future. The model is integrated into a Deep Functional Predictive Control (d-FPC) system that controls the displacement of the stem on the tactile finger during pushes. Pushing an object with a robot finger along a desired trajectory in 3D is a highly nonlinear and complex physical robot interaction, especially when the object is not stably grasped. The proposed approach controls the stem's movements on the tactile finger over a prediction horizon. The effectiveness of the proposed d-FPC is demonstrated in a series of tests in which a real robot pushes a strawberry in a cluster. The results indicate that the d-FPC controller can successfully control PRI in robotic manipulation tasks beyond the handling of strawberries. The proposed approach offers a promising direction for addressing the challenging PRI problem in robotic manipulation tasks. Future work will explore the generalisation of the approach to other objects and tasks.
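The abstract describes a controller that uses a learned forward model to predict how the stem will move on the tactile finger over a prediction horizon, and then picks pushes based on those predictions. The sketch below illustrates only that generic receding-horizon idea and is not the authors' d-FPC. `forward_model` is a hypothetical toy linear stand-in for the deep tactile-prediction network. Actions are chosen by random shooting, which is a deliberately simple substitute for whatever optimiser the paper uses. All function names and constants are assumptions.

```python
import numpy as np

def forward_model(state, action):
    """HYPOTHETICAL stand-in for the learned tactile forward model: predicts the
    next stem displacement on the finger from the current displacement and the
    commanded push (toy linear dynamics, not the paper's network)."""
    return 0.9 * state + 0.5 * action

def rollout_cost(state, actions, target):
    """Sum of squared deviations of the predicted displacement from the target
    over the prediction horizon (one step per action in `actions`)."""
    cost = 0.0
    for a in actions:
        state = forward_model(state, a)
        cost += (state - target) ** 2
    return cost

def predictive_control_step(state, target, horizon=5, n_candidates=200,
                            max_action=1.0, seed=0):
    """Random-shooting receding-horizon control: sample candidate action
    sequences, score each with the forward model, apply only the first action
    of the best sequence, then re-plan at the next step."""
    rng = np.random.default_rng(seed)
    candidates = rng.uniform(-max_action, max_action, size=(n_candidates, horizon))
    costs = [rollout_cost(state, seq, target) for seq in candidates]
    best = candidates[int(np.argmin(costs))]
    return best[0]

# Closed-loop usage: drive a stem displacement of 2.0 towards 0.0.
final_state, target = 2.0, 0.0
for t in range(20):
    action = predictive_control_step(final_state, target, seed=t)
    # On a real robot this would be "apply the push, then read the tactile sensor".
    final_state = forward_model(final_state, action)
```

Re-planning at every step means errors in the model's longer-range predictions are corrected by fresh measurements. This is the main reason predictive controllers tolerate imperfect learned models.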

Autonomous Strawberry Picking Robotic System (Robofruit)

Jan 10, 2023

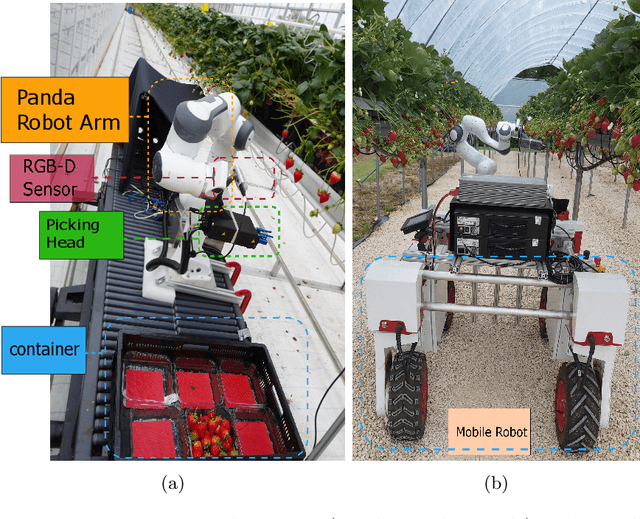

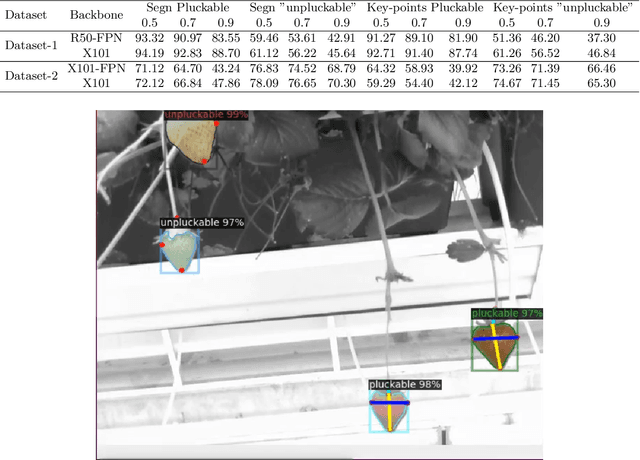

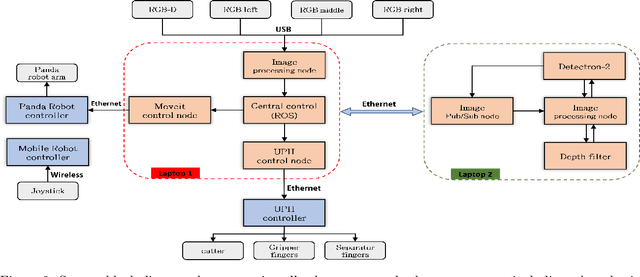

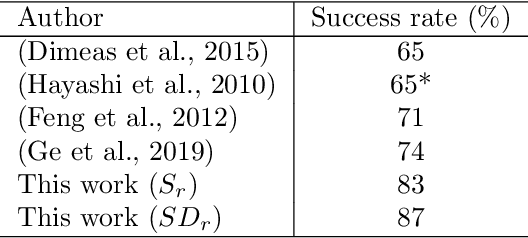

Abstract: Challenges in strawberry picking have increased the demand for selective-harvesting robotic technology. However, selective harvesting of strawberries is complicated and raises several scientific research questions. Most available solutions only deal with a specific picking scenario, e.g., picking only a single variety of fruit in isolation. Nonetheless, most economically viable (e.g., high-yielding and/or disease-resistant) strawberry varieties are grown in dense clusters, and current perception technology is inefficient in such use cases. In this work, we developed a novel strawberry-harvesting system with several unique features that allow it to deal with very complex picking scenarios, e.g., dense clusters. Our modular concept makes the system reconfigurable for different picking scenarios. We designed, manufactured, and tested a picking head with 2.5 DOF (2 independent mechanisms and 1 dependent cutting system). The head can remove possible occlusions and harvest targeted strawberries without contacting the fruit flesh, which avoids damage and bruising. In addition, we developed a novel perception system to localise strawberries, detect their key points and picking points, and determine their ripeness. For this purpose, we introduced two new datasets. Finally, we tested the system in a commercial strawberry-growing field and on our research farm with three different strawberry varieties. The results show the effectiveness and reliability of the proposed system. The designed picking head removed occlusions and harvested strawberries effectively. The perception system detected strawberries and determined their ripeness with 95% accuracy. In total, the system harvested 87% of all detected strawberries, with a success rate of 83% for all pluckable fruits. We also discuss a series of open research questions in the discussion section.

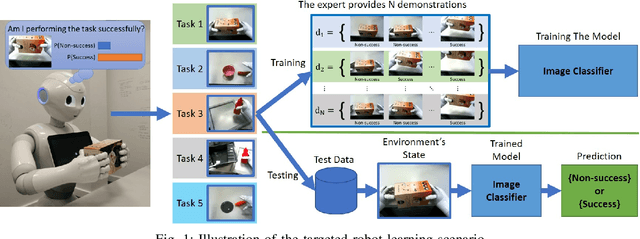

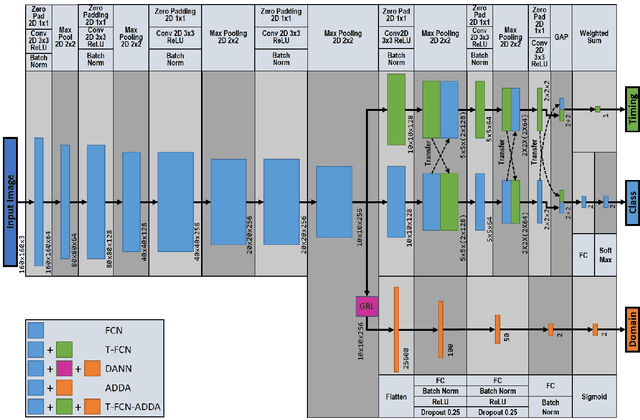

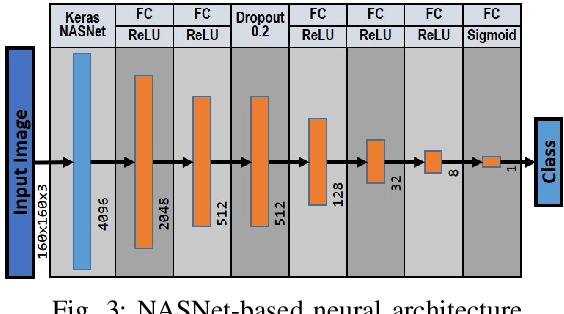

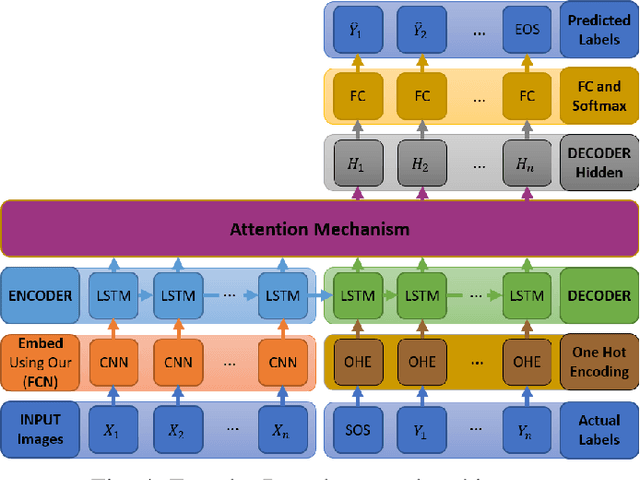

Neural Task Success Classifiers for Robotic Manipulation from Few Real Demonstrations

Jul 01, 2021

Abstract: Robots that can learn a new manipulation task from a small number of demonstrations are increasingly in demand in different workspaces. A classifier model that assesses the quality of actions can predict the successful completion of a task, and intelligent agents can use this prediction for action selection. This paper presents a novel classifier that learns to classify task completion from only a few demonstrations. We carry out a comprehensive comparison of different neural classifiers, e.g., fully connected-based, fully convolutional-based, sequence2sequence-based, and domain adaptation-based classification. We also present a new, publicly available dataset of five robot manipulation tasks. We compared the performance of our novel classifier and the existing models on our dataset and on the MIME dataset. The results suggest that domain adaptation and timing-based features improve success prediction. Our novel model, i.e., a fully convolutional neural network with domain adaptation and timing features, achieves an average classification accuracy of 97.3% and 95.5% across tasks on the two datasets, respectively. In comparison, state-of-the-art classifiers without domain adaptation and timing features achieve only 82.4% and 90.3%, respectively.

A data-set of piercing needle through deformable objects for Deep Learning from Demonstrations

Dec 04, 2020

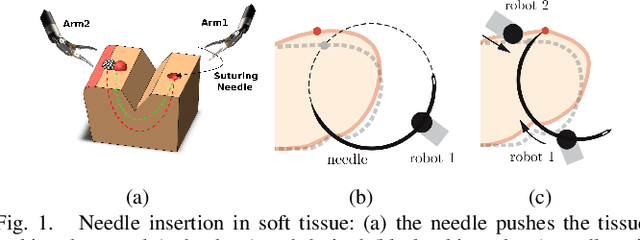

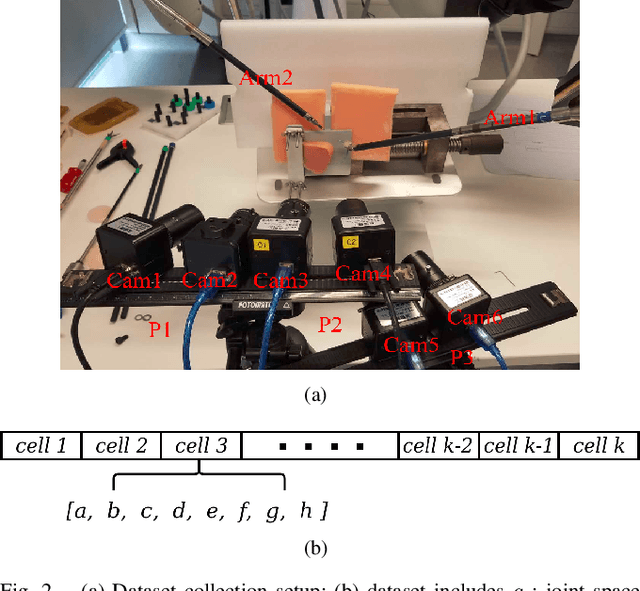

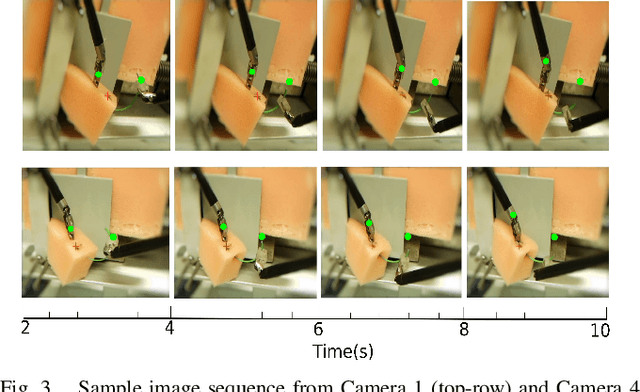

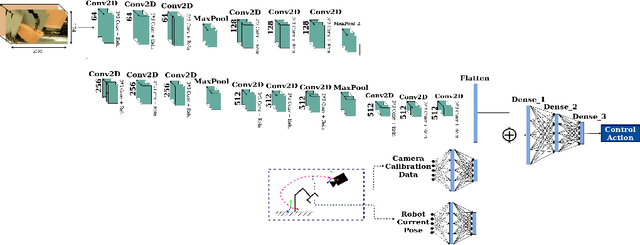

Abstract: Many robotic tasks are still teleoperated because automating them is very time-consuming and expensive. Robot Learning from Demonstrations (RLfD) can reduce programming time and cost. However, conventional RLfD approaches are not directly applicable to many robotic tasks, e.g., robotic suturing with minimally invasive robots, because they require a time-consuming process of designing features from visual information. Deep Neural Networks (DNN) have emerged as useful tools for creating complex models that capture the relationship between a high-dimensional observation space and a low-level action/state space. Nonetheless, such approaches require a dataset suitable for training appropriate DNN models. This paper presents a dataset of inserting/piercing a needle in/through soft tissues with two arms of the da Vinci Research Kit. The dataset consists of (1) 60 successful needle insertion trials with randomised desired exit points, recorded by 6 high-resolution calibrated cameras, (2) the corresponding robot data and calibration parameters, and (3) the commanded robot control input, with all collected data synchronised. The dataset is designed for Deep-RLfD approaches. We also implemented several deep RLfD architectures, including simple feed-forward CNNs and different Recurrent Convolutional Networks (RCNs). Our study indicates that RCNs improve the model's prediction accuracy, although the baseline feed-forward CNNs already successfully learn the relationship between the visual information and the robot's next-step control actions. The dataset, as well as our baseline RLfD implementations, is publicly available for benchmarking at https://github.com/imanlab/d-lfd.
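Because the abstract frames the learning problem as mapping synchronised visual information to the robot's next-step control action, with feed-forward CNNs and recurrent models as baselines, the sketch below shows one way training pairs could be assembled from a single synchronised trial. The `Trial` layout, the field names, the array shapes, and the "window ending at step t is paired with the command at step t + 1" alignment are all hypothetical assumptions. They do not describe the released dataset's actual file format.

```python
from dataclasses import dataclass
import numpy as np

@dataclass
class Trial:
    """HYPOTHETICAL in-memory view of one synchronised trial; names and shapes
    are assumptions, not the on-disk format of the published dataset."""
    frames: np.ndarray       # (T, n_cameras, H, W, 3): images from every camera per timestep
    robot_state: np.ndarray  # (T, d_state): recorded robot kinematics
    commands: np.ndarray     # (T, d_action): commanded control inputs

def make_training_pairs(trial: Trial, k: int = 1):
    """Build (observation window, next-step command) pairs.

    k = 1 yields single-timestep inputs, as a feed-forward CNN would use;
    k > 1 yields short frame sequences, as a recurrent model would use.
    The window ending at step t is paired with the command at step t + 1
    (an assumed alignment)."""
    T = trial.frames.shape[0]
    if trial.commands.shape[0] != T:
        raise ValueError("frames and commands must be synchronised (same length)")
    if not 1 <= k < T:
        raise ValueError("window length k must satisfy 1 <= k < T")
    ts = range(k - 1, T - 1)
    X = np.stack([trial.frames[t - k + 1 : t + 1] for t in ts])  # (T - k, k, n_cameras, H, W, 3)
    Y = np.stack([trial.commands[t + 1] for t in ts])           # (T - k, d_action)
    return X, Y

# Usage on a tiny random trial: 10 timesteps, 6 cameras, 4x4 RGB images.
rng = np.random.default_rng(0)
toy = Trial(frames=rng.random((10, 6, 4, 4, 3)),
            robot_state=rng.random((10, 7)),
            commands=rng.random((10, 3)))
X, Y = make_training_pairs(toy, k=3)
```

Keeping the window length as a parameter lets the same synchronised recordings serve both the feed-forward and the recurrent baselines without re-processing the data.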
