Kiyanoush Nazari

Enabling Tactile Feedback for Robotic Strawberry Handling using AST Skin

Jul 01, 2024

Abstract: Acoustic Soft Tactile (AST) skin is a novel sensing technology that derives tactile information from the modulation of acoustic waves travelling through the skin's embedded acoustic channels. A generalisable data-driven calibration model maps the acoustic modulations to the corresponding tactile information: contact forces together with their contact locations and contact geometries. AST skin technology has been highlighted for its easy customisation. As a case study, this paper discusses the possibility of using AST skin on a custom-built robotic end effector finger for strawberry handling. The paper covers the design, prototyping, and calibration method used to sensorise the end effector finger with AST skin. A real-time force-controlled gripping experiment is conducted with the sensorised finger to handle strawberries by their peduncle. The finger successfully gripped the strawberry peduncle while maintaining a preset force of 2 N with a maximum Mean Absolute Error (MAE) of 0.31 N over multiple peduncle diameters and strawberry weight classes. This study builds confidence in the usability of AST skin for generating real-time tactile feedback in robot manipulation tasks.

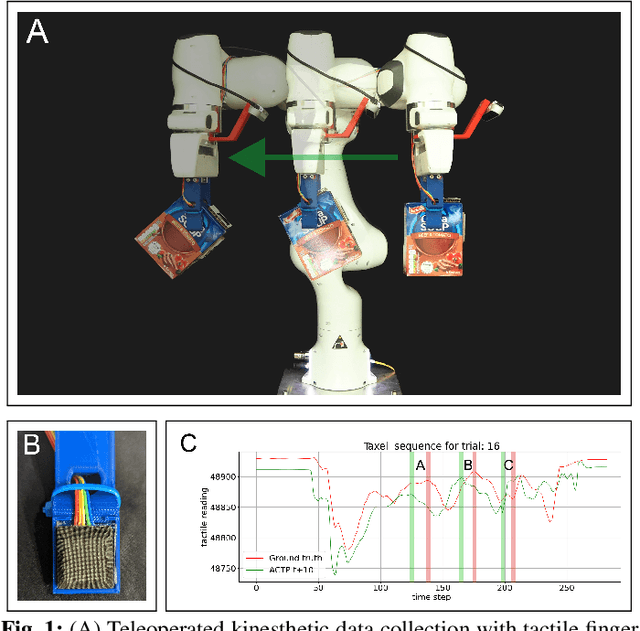

Deep Functional Predictive Control for Strawberry Cluster Manipulation using Tactile Prediction

Mar 09, 2023

Abstract: This paper introduces a novel approach to address the problem of Physical Robot Interaction (PRI) during robot pushing tasks. The approach uses a data-driven forward model based on tactile predictions to inform the controller about potential future movements of the object being pushed, such as a strawberry stem, using a robot tactile finger. The model is integrated into a Deep Functional Predictive Control (d-FPC) system to control the displacement of the stem on the tactile finger during pushes. Pushing an object with a robot finger along a desired trajectory in 3D is a highly nonlinear and complex physical robot interaction, especially when the object is not stably grasped. The proposed approach controls the stem movements on the tactile finger over a prediction horizon. The effectiveness of the proposed d-FPC is demonstrated in a series of tests involving a real robot pushing a strawberry in a cluster. The results indicate that the d-FPC controller can successfully control PRI in robotic manipulation tasks beyond the handling of strawberries. The proposed approach offers a promising direction for addressing the challenging PRI problem in robotic manipulation tasks. Future work will explore the generalisation of the approach to other objects and tasks.

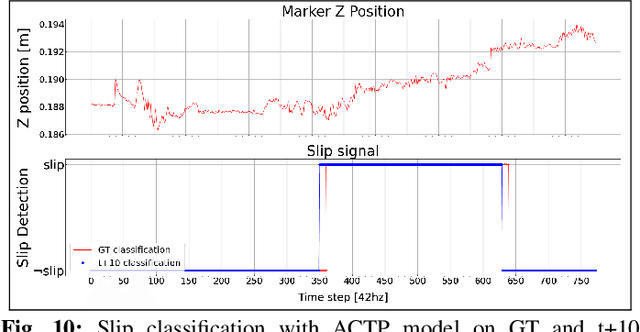

Proactive slip control by learned slip model and trajectory adaptation

Sep 13, 2022

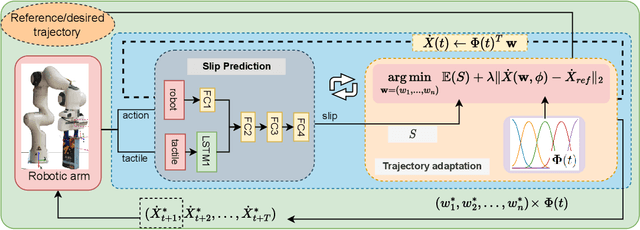

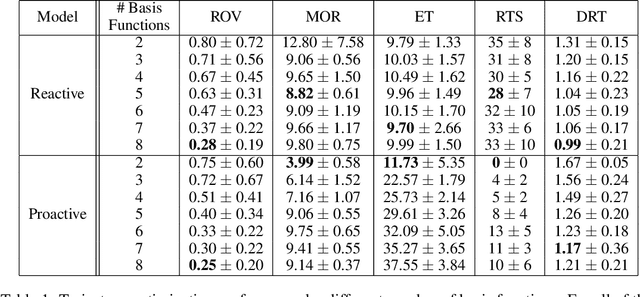

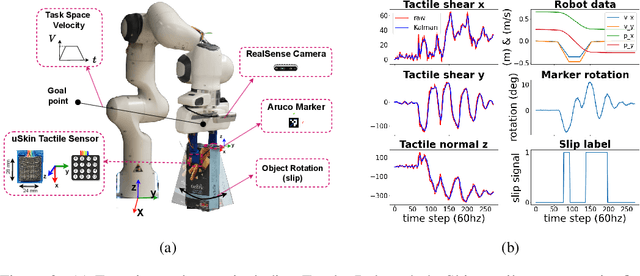

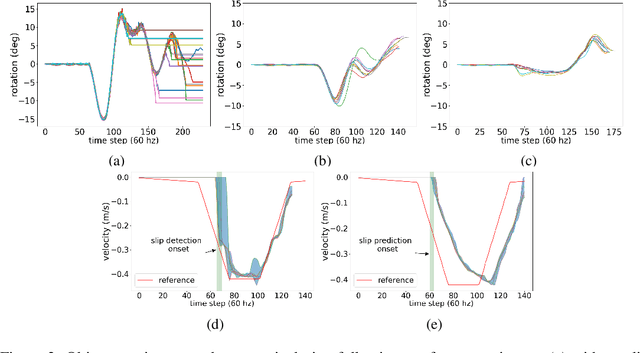

Abstract: This paper presents a novel control approach to dealing with object slip during robotic manipulative movements. Slip is a major cause of failure in many robotic grasping and manipulation tasks. Existing works increase grip force to avoid or control slip. However, this may not be feasible when (i) the robot cannot increase the gripping force because the maximum gripping force is already applied, or (ii) increased force damages the grasped object, such as soft fruit. Moreover, the robot fixes the gripping force when it forms a stable grasp on the surface of an object, and changing the gripping force during real-time manipulation may not be an effective control policy. We propose a novel control approach to slip avoidance comprising a learned action-conditioned slip predictor and a constrained optimiser that avoids a predicted slip given a desired robot action. We show the effectiveness of the proposed trajectory adaptation method with a receding horizon controller in a series of real-robot test cases. Our experimental results show that the proposed data-driven predictive controller can control slip for objects unseen in training.

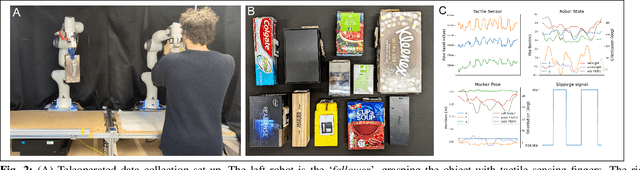

Action Conditioned Tactile Prediction: a case study on slip prediction

May 19, 2022

Abstract: Tactile predictive models can be useful across several robotic manipulation tasks, e.g. robotic pushing, robotic grasping, slip avoidance, and in-hand manipulation. However, available tactile prediction models are mostly studied for image-based tactile sensors, and there is no comparison study indicating the best-performing models. In this paper, we present two novel data-driven action-conditioned models for predicting tactile signals during real-world physical robot interaction tasks: (1) an action-conditioned tactile prediction model and (2) an action-conditioned tactile-video prediction model. We use a magnetic-based tactile sensor that is challenging to analyse, and we test state-of-the-art predictive models and the only existing bespoke tactile prediction model. We compare the performance of these models with that of our proposed models. We perform the comparison study using our novel tactile-enabled dataset containing 51,000 tactile frames of a real-world robotic manipulation task with 11 flat-surfaced household objects. Our experimental results demonstrate the superiority of our proposed tactile prediction models in terms of qualitative, quantitative and slip prediction scores.

Deep Movement Primitives: toward Breast Cancer Examination Robot

Feb 14, 2022

Abstract: Breast cancer is the most common type of cancer worldwide. A robotic system performing autonomous breast palpation can make a significant impact on the related health sector worldwide. However, programming a robot to palpate breasts of different geometries is very complex and remains unsolved. Robot learning from demonstrations (LfD) reduces the programming time and cost. However, available LfD approaches lack a model of the manipulation path/trajectory as an explicit function of the visual sensory information. This paper presents a novel approach to manipulation path/trajectory planning called deep Movement Primitives that successfully generates the movements of a manipulator to reach a breast phantom and perform the palpation. We show the effectiveness of our approach in a series of real-robot experiments of reaching and palpating a breast phantom. The experimental results indicate that our approach outperforms the state-of-the-art method.

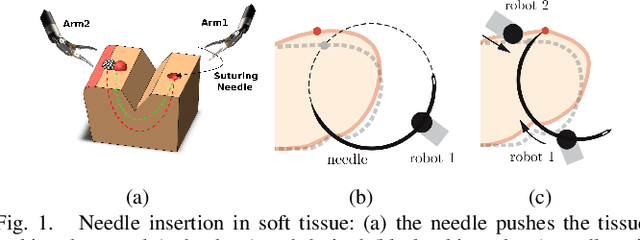

A data-set of piercing needle through deformable objects for Deep Learning from Demonstrations

Dec 04, 2020

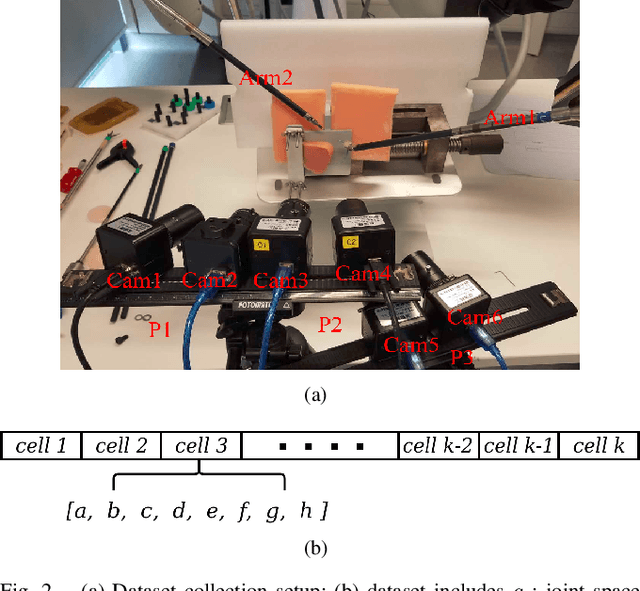

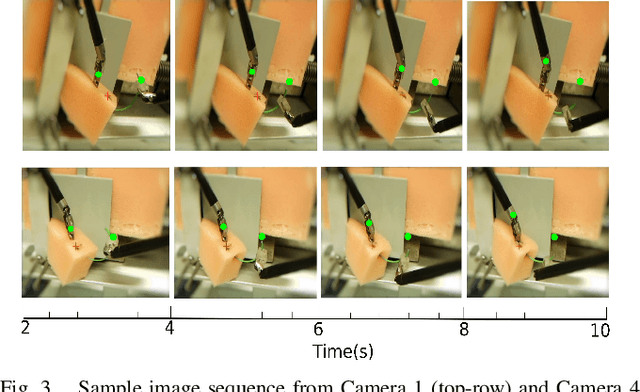

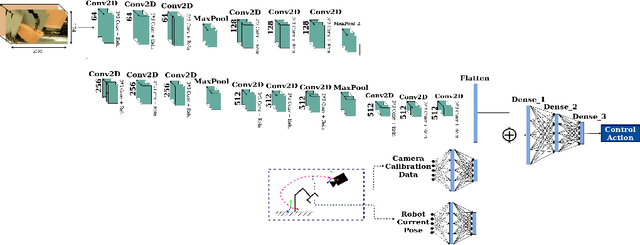

Abstract: Many robotic tasks are still teleoperated since automating them is very time-consuming and expensive. Robot Learning from Demonstrations (RLfD) can reduce programming time and cost. However, conventional RLfD approaches are not directly applicable to many robotic tasks, e.g. robotic suturing with minimally invasive robots, as they require a time-consuming process of designing features from visual information. Deep Neural Networks (DNNs) have emerged as useful tools for creating complex models capturing the relationship between high-dimensional observation space and low-level action/state space. Nonetheless, such approaches require a dataset suitable for training appropriate DNN models. This paper presents a dataset of inserting/piercing a needle with two arms of the da Vinci Research Kit in/through soft tissues. The dataset consists of (1) 60 successful needle insertion trials with randomised desired exit points recorded by 6 high-resolution calibrated cameras, (2) the corresponding robot data and calibration parameters, and (3) the commanded robot control input, where all the collected data are synchronised. The dataset is designed for Deep-RLfD approaches. We also implemented several deep RLfD architectures, including simple feed-forward CNNs and different Recurrent Convolutional Networks (RCNs). Our study indicates that RCNs improve the prediction accuracy of the model, although the baseline feed-forward CNNs successfully learn the relationship between the visual information and the next-step control actions of the robot. The dataset, as well as our baseline implementations of RLfD, are publicly available for benchmarking at https://github.com/imanlab/d-lfd.
