Willow Mandil

Combining Vision and Tactile Sensation for Video Prediction

Apr 21, 2023

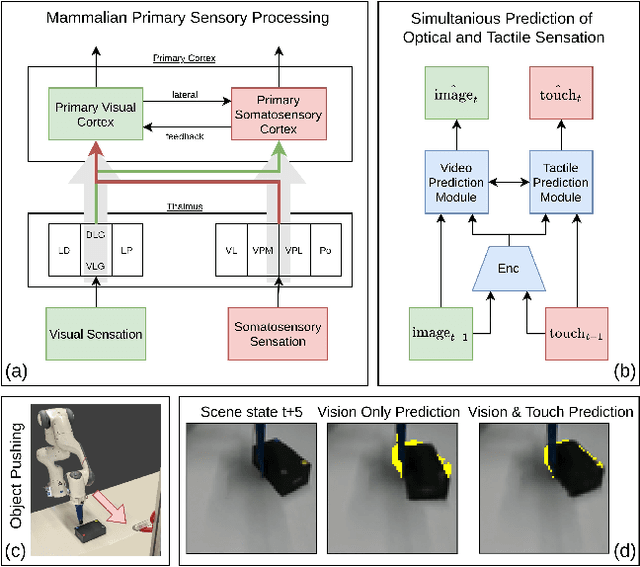

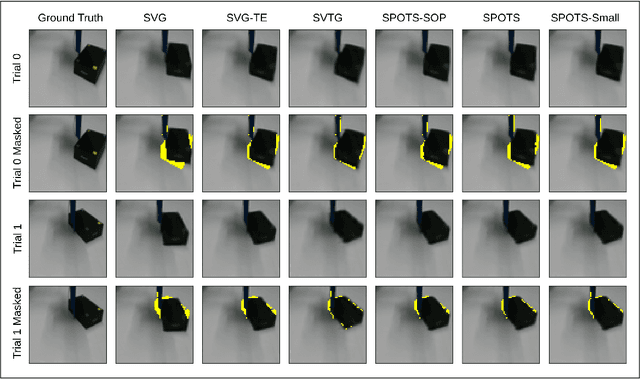

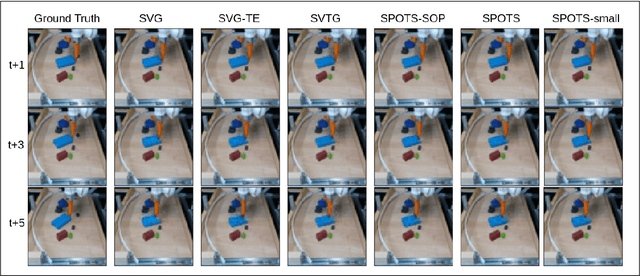

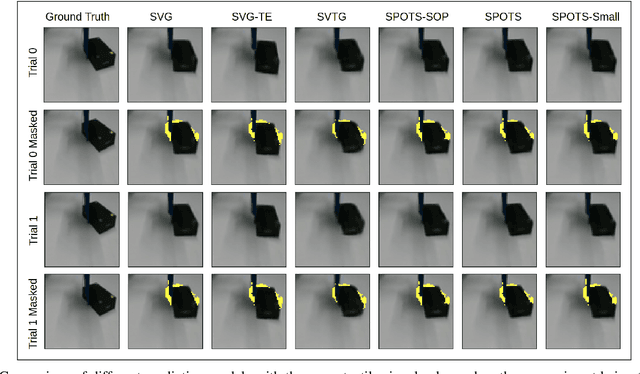

Abstract: In this paper, we explore the impact of adding tactile sensation to video prediction models for physical robot interactions. Predicting the impact of robotic actions on the environment is a fundamental challenge in robotics. Current methods leverage visual and robot action data to generate video predictions over a given time period, which can then be used to adjust robot actions. However, humans rely on both visual and tactile feedback to develop and maintain a mental model of their physical surroundings. In this paper, we investigate the impact of integrating tactile feedback into video prediction models for physical robot interactions. We propose three multi-modal integration approaches and compare the performance of these tactile-enhanced video prediction models. Additionally, we introduce two new datasets of robot pushing that use a magnetic-based tactile sensor for unsupervised learning. The first dataset contains visually identical objects with different physical properties, while the second dataset mimics existing robot-pushing datasets of household object clusters. Our results demonstrate that incorporating tactile feedback into video prediction models improves scene prediction accuracy and enhances the agent's perception of physical interactions and understanding of cause-effect relationships during physical robot interactions.

Towards Autonomous Selective Harvesting: A Review of Robot Perception, Robot Design, Motion Planning and Control

Apr 19, 2023

Abstract: This paper provides an overview of the current state-of-the-art in selective harvesting robots (SHRs) and their potential for addressing the challenges of global food production. SHRs have the potential to increase productivity, reduce labour costs, and minimise food waste by selectively harvesting only ripe fruits and vegetables. The paper discusses the main components of SHRs, including perception, grasping, cutting, motion planning, and control. It also highlights the challenges in developing SHR technologies, particularly in the areas of robot design, motion planning and control. The paper also discusses the potential benefits of integrating AI, soft robots, and data-driven methods to enhance the performance and robustness of SHR systems. Finally, the paper identifies several open research questions in the field and highlights the need for further research and development efforts to advance SHR technologies to meet the challenges of global food production. Overall, this paper provides a starting point for researchers and practitioners interested in developing SHRs and highlights the need for more research in this field.

Acoustic Soft Tactile Skin (AST Skin)

Mar 30, 2023

Abstract: Acoustic Soft Tactile (AST) skin is a novel soft, flexible, low-cost sensor that can measure static normal forces and their contact location. This letter presents the design, fabrication, and experimental evaluation of AST skin. The proposed AST skin has one or more acoustic channels (ACs) arranged in parallel below the sensing surface. A reference acoustic wave from a speaker unit propagates through these ACs. The deformation of the ACs under the contact force modulates the acoustic waves, and the change in modulation recorded by a microphone is used to measure the force magnitude and the location of the action. We used a static force calibration method to validate the performance of the AST skin. Our two best AST configurations are capable of (i) making more than 93% of their force measurements within a $\pm 1.5$ N tolerance over a range of 0-30 N and (ii) predicting contact locations with more than 96% accuracy. Furthermore, we conducted a robotic pushing experiment with the AST skin and an off-the-shelf Xela uSkin sensor, which showed that the AST skin outperformed the Xela sensor in measuring the interaction forces. With further developments, the proposed AST skin has the potential to be used for various robotic tasks such as object grasping and manipulation.
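The tolerance figure quoted in the abstract can be read as the share of force estimates that fall within 1.5 N of a reference value. The following is a minimal sketch of how such a share might be computed; the function name and the example values are illustrative assumptions and are not taken from the paper or its data.

```python
def fraction_within_tolerance(predicted, reference, tolerance=1.5):
    """Return the fraction of predictions within +/- tolerance of the reference.

    Hypothetical helper for illustration; not the authors' evaluation code.
    """
    if len(predicted) != len(reference):
        raise ValueError("predicted and reference must have the same length")
    if not predicted:
        raise ValueError("at least one measurement is required")
    hits = sum(1 for p, r in zip(predicted, reference) if abs(p - r) <= tolerance)
    return hits / len(predicted)


# Made-up example values in newtons, not measurements from the paper.
reference_forces = [5.0, 10.0, 15.0, 20.0]
predicted_forces = [5.4, 9.1, 17.0, 20.2]
share = fraction_within_tolerance(predicted_forces, reference_forces)
```

Here three of the four made-up estimates lie within the tolerance, so `share` is 0.75.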

Proactive slip control by learned slip model and trajectory adaptation

Sep 13, 2022

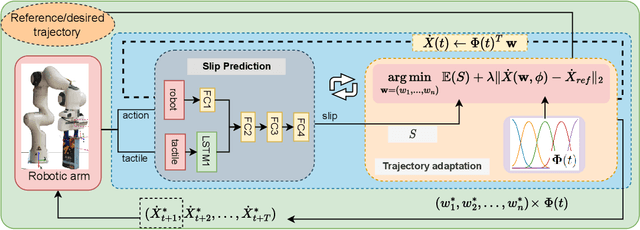

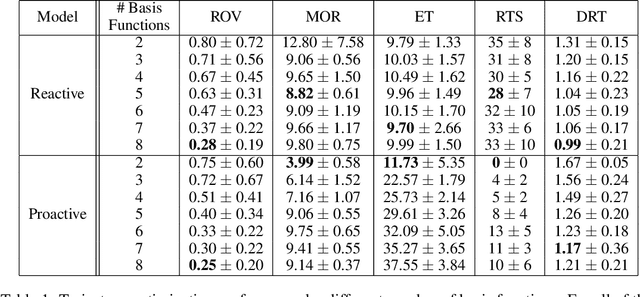

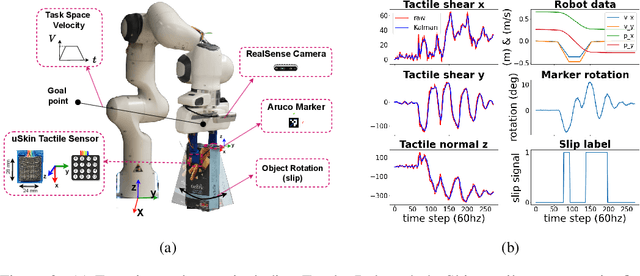

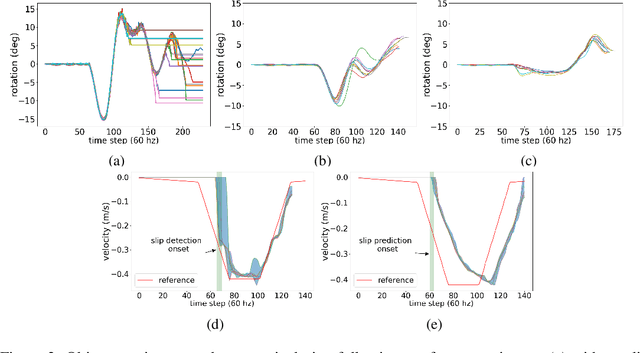

Abstract: This paper presents a novel control approach to dealing with object slip during robotic manipulative movements. Slip is a major cause of failure in many robotic grasping and manipulation tasks. Existing works increase grip force to avoid or control slip. However, this may not be feasible when (i) the robot cannot increase the gripping force because the maximum gripping force is already applied, or (ii) increased force damages the grasped object, such as soft fruit. Moreover, the robot fixes the gripping force when it forms a stable grasp on the surface of an object, and changing the gripping force during real-time manipulation may not be an effective control policy. We propose a novel control approach to slip avoidance that includes a learned action-conditioned slip predictor and a constrained optimiser that avoids a predicted slip given a desired robot action. We show the effectiveness of the proposed trajectory adaptation method with a receding horizon controller in a series of real-robot test cases. Our experimental results show that our proposed data-driven predictive controller can control slip for objects unseen in training.
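The idea of adapting a desired action so that a predicted slip is avoided can be sketched in a few lines. This is a heavily simplified illustration under stated assumptions, not the authors' controller: `predict_slip` is a hypothetical stand-in for the learned slip predictor, a one-dimensional velocity stands in for the robot action, a grid search stands in for the constrained optimiser, and the horizon is a single step.

```python
def predict_slip(velocity, grip_margin=1.0):
    """Hypothetical stand-in for a learned slip predictor.

    Assumes the slip score simply grows with the magnitude of the velocity.
    """
    return abs(velocity) / grip_margin


def adapt_action(desired_velocity, slip_limit=0.5, steps=20):
    """Return the candidate closest to the desired velocity whose
    predicted slip score does not exceed slip_limit.

    Candidates are scaled-down versions of the desired velocity; zero
    velocity is always among them, so a feasible choice always exists.
    """
    candidates = [desired_velocity * k / steps for k in range(steps + 1)]
    feasible = [v for v in candidates if predict_slip(v) <= slip_limit]
    return min(feasible, key=lambda v: abs(v - desired_velocity))


# Replan at every step: adapt each desired velocity before executing it.
desired_trajectory = [0.2, 0.6, 1.0, 0.4]  # made-up values
executed = [adapt_action(v) for v in desired_trajectory]
```

Desired velocities whose predicted slip is already within the limit pass through unchanged, while faster ones are scaled down to the largest candidate the stand-in predictor accepts.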

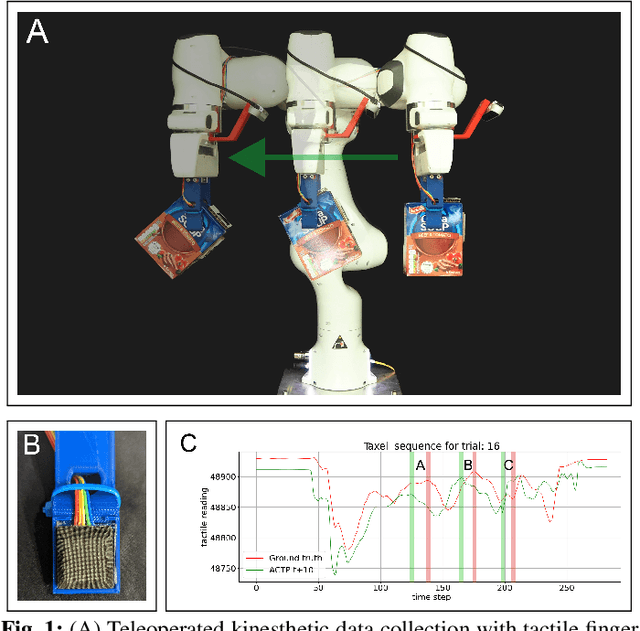

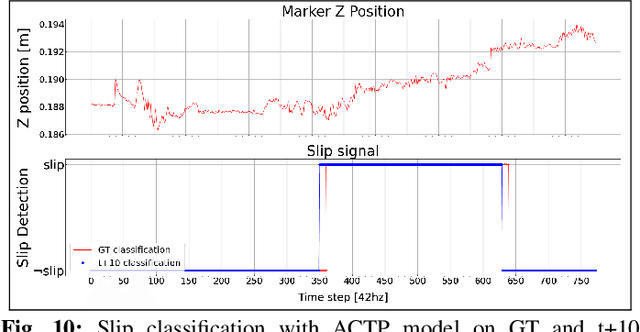

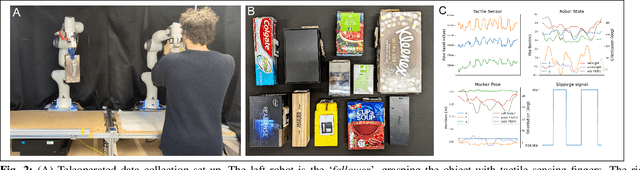

Action Conditioned Tactile Prediction: a case study on slip prediction

May 19, 2022

Abstract: Tactile predictive models can be useful across several robotic manipulation tasks, e.g. robotic pushing, robotic grasping, slip avoidance, and in-hand manipulation. However, available tactile prediction models are mostly studied for image-based tactile sensors, and there is no comparison study indicating the best-performing models. In this paper, we present two novel data-driven action-conditioned models for predicting tactile signals during real-world physical robot interaction tasks: (1) action-conditioned tactile prediction and (2) action-conditioned tactile-video prediction models. We use a magnetic-based tactile sensor that is challenging to analyse, and we test state-of-the-art predictive models and the only existing bespoke tactile prediction model. We compare the performance of these models with that of our proposed models. We perform the comparison study using our novel tactile-enabled dataset containing 51,000 tactile frames of a real-world robotic manipulation task with 11 flat-surfaced household objects. Our experimental results demonstrate the superiority of our proposed tactile prediction models in terms of qualitative, quantitative and slip prediction scores.
