Amir Aminifar

BEFT: Bias-Efficient Fine-Tuning of Language Models

Sep 19, 2025

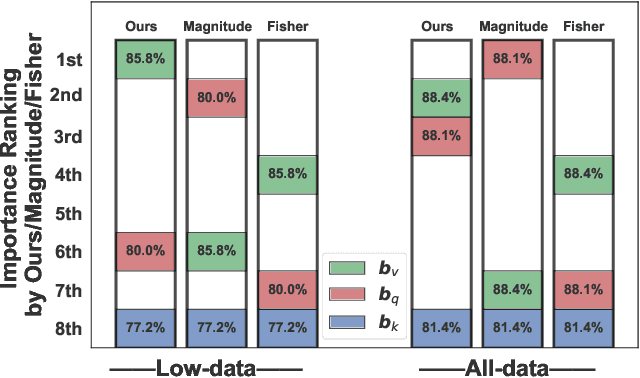

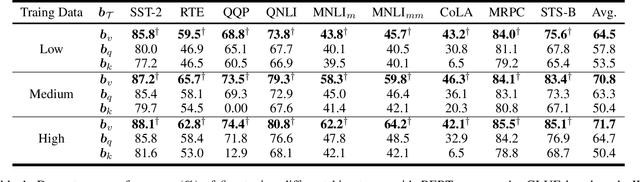

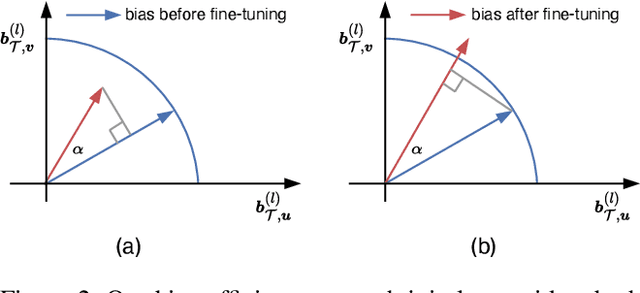

Abstract: Fine-tuning all bias terms stands out among parameter-efficient fine-tuning (PEFT) techniques, owing to its out-of-the-box usability and competitive performance, especially in low-data regimes. Bias-only fine-tuning has the potential for unprecedented parameter efficiency. However, the link between fine-tuning different bias terms (i.e., bias terms in the query, key, or value projections) and downstream performance remains unclear. Existing approaches, e.g., those based on the magnitude of bias change or empirical Fisher information, provide limited guidance for selecting the particular bias term to fine-tune. In this paper, we propose an approach for selecting the bias term to be fine-tuned, forming the foundation of our bias-efficient fine-tuning (BEFT). We extensively evaluate our bias-efficient approach against other bias-selection approaches across a wide range of large language models (LLMs), spanning encoder-only and decoder-only architectures from 110M to 6.7B parameters. Our results demonstrate the effectiveness and superiority of our bias-efficient approach on diverse downstream tasks, including classification, multiple-choice, and generation tasks.
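To make the idea of tuning a single bias term concrete, the following minimal Python sketch shows only the mechanics of marking one projection's bias as trainable while everything else stays frozen. It does not implement the paper's selection criterion, which the abstract does not describe. The function `select_bias_params` and the parameter names are hypothetical, loosely modelled on common encoder naming.

```python
def select_bias_params(param_names, projection="query"):
    """Return the names of bias parameters that belong to one attention projection.

    Assumption: parameter names follow a dotted scheme such as
    'layer.0.attention.query.bias'. Real models may name things differently.
    """
    return [
        name
        for name in param_names
        if name.endswith(".bias") and f".{projection}." in name
    ]


# Hypothetical parameter names for a single transformer layer.
names = [
    "layer.0.attention.query.weight",
    "layer.0.attention.query.bias",
    "layer.0.attention.key.bias",
    "layer.0.attention.value.bias",
    "layer.0.output.dense.bias",
]

# Only the query-projection bias is trained; every other parameter is frozen.
trainable = set(select_bias_params(names, projection="query"))
frozen = [n for n in names if n not in trainable]
```

In a deep-learning framework, the same selection would typically be applied by disabling gradients for every parameter outside `trainable`.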

Verified Relative Safety Margins for Neural Network Twins

Sep 25, 2024

Abstract: Given two Deep Neural Network (DNN) classifiers with the same input and output domains, our goal is to quantify the robustness of the two networks in relation to each other. Towards this, we introduce the notion of Relative Safety Margins (RSMs). Intuitively, given two classes and a common input, the RSM of one classifier with respect to another reflects the relative margins with which decisions are made. The proposed notion is relevant in several application domains, including the comparison of a trained network with its corresponding compact network (e.g., a pruned, quantized, or distilled network). Not only can RSMs establish whether decisions are preserved, but they can also quantify their qualities. We also propose a framework to establish safe bounds on RSM gains or losses given an input and a family of perturbations. We evaluate our approach using MNIST, CIFAR10, and two real-world medical datasets to show the relevance of our results.

MetaWearS: A Shortcut in Wearable Systems Lifecycle with Only a Few Shots

Aug 04, 2024

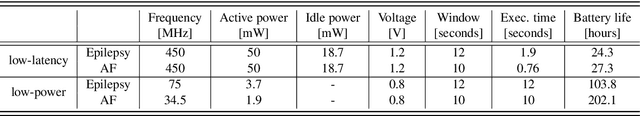

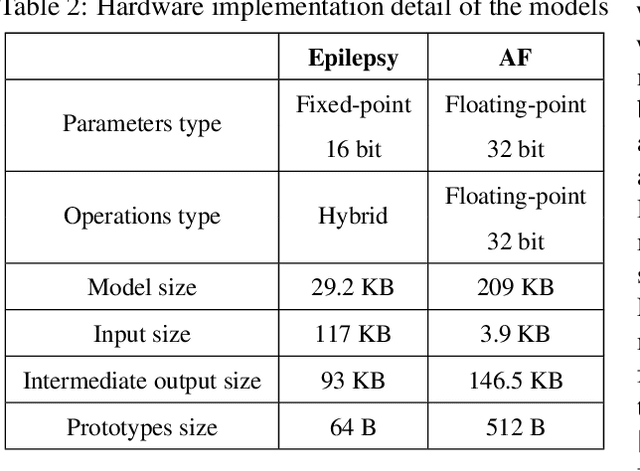

Abstract: Wearable systems provide continuous health monitoring and can lead to early detection of potential health issues. However, the lifecycle of wearable systems faces several challenges. First, effective model training for new wearable devices requires substantial labeled data from various subjects collected directly by the wearable. Second, subsequent model updates require further extensive labeled data for retraining. Finally, frequent model updating on the wearable device can shorten battery life during long-term monitoring. To address these challenges, we propose MetaWearS, a meta-learning method that reduces the amount of initial data collection required. Moreover, our approach incorporates a prototypical updating mechanism, which simplifies the update process by modifying the class prototype rather than retraining the entire model. We explore the performance of MetaWearS in two case studies, namely, the detection of epileptic seizures and the detection of atrial fibrillation (AF). We show that by fine-tuning with just a few samples, we achieve 70% and 82% AUC for the detection of epileptic seizures and AF, respectively. Compared to a conventional approach, our proposed method improves AUC by up to 45%. Furthermore, updating the model with only 16 minutes of additional labeled data increases the AUC by up to 5.3%. Finally, MetaWearS reduces the energy consumption for model updates by 456x and 418x for epileptic seizure and AF detection, respectively.
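The prototypical updating idea can be illustrated with a small sketch. It assumes, as in standard prototypical networks, that a class prototype is the mean of that class's embeddings and that classification picks the nearest prototype. The abstract does not state the exact update rule used in MetaWearS, so the running-mean update, the function names, and the toy two-dimensional embeddings below are all illustrative assumptions.

```python
import math


def update_prototype(prototype, count, new_embeddings):
    """Fold new embeddings into a running-mean class prototype.

    Assumption: the prototype is the mean of all embeddings seen so far,
    so it can be updated incrementally without retraining the encoder.
    """
    proto = list(prototype)
    for emb in new_embeddings:
        count += 1
        proto = [p + (e - p) / count for p, e in zip(proto, emb)]
    return proto, count


def nearest_class(embedding, prototypes):
    """Return the label whose prototype is closest in Euclidean distance."""
    return min(prototypes, key=lambda label: math.dist(embedding, prototypes[label]))


# Toy prototypes, each assumed to be the mean of two earlier embeddings.
prototypes = {"seizure": [1.0, 1.0], "normal": [-1.0, -1.0]}
counts = {"seizure": 2, "normal": 2}

# A model update only touches the affected class prototype.
prototypes["seizure"], counts["seizure"] = update_prototype(
    prototypes["seizure"], counts["seizure"], [[4.0, 1.0]]
)
predicted = nearest_class([1.5, 0.5], prototypes)
```

Because only a small vector per class changes, an update of this kind is far cheaper than retraining the whole model, which is the intuition behind the energy savings the abstract reports.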

Privacy-Preserving Edge Federated Learning for Intelligent Mobile-Health Systems

May 09, 2024

Abstract: Machine Learning (ML) algorithms are generally designed for scenarios in which all data is stored in one data center, where the training is performed. However, in many applications, e.g., in the healthcare domain, the training data is distributed among several entities, e.g., different hospitals or patients' mobile devices/sensors. At the same time, transferring the data to a central location for learning is not an option, due to privacy concerns and legal issues and, in certain cases, because of the communication and computation overheads. Federated Learning (FL) is the state-of-the-art collaborative ML approach for training an ML model across multiple parties holding local data samples, without sharing them. However, enabling learning from distributed data over such edge Internet of Things (IoT) systems (e.g., mobile-health and wearable technologies, involving sensitive personal/medical data) in a privacy-preserving fashion presents a major challenge, mainly due to their stringent resource constraints, i.e., limited computing capacity, communication bandwidth, memory storage, and battery lifetime. In this paper, we propose a privacy-preserving edge FL framework for resource-constrained mobile-health and wearable technologies over the IoT infrastructure. We evaluate our proposed framework extensively and provide an implementation of our technique on the Amazon Web Services (AWS) cloud platform, based on the seizure detection application in epilepsy monitoring using wearable technologies.
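As a rough illustration of how FL trains a shared model without moving raw data, the sketch below performs one aggregation round using plain weighted averaging of client parameters (in the style of federated averaging). This is a generic example, not the paper's framework: the abstract does not specify its aggregation rule or its privacy mechanism, and this sketch offers no privacy protection by itself. The function name and the toy numbers are assumptions.

```python
def federated_average(client_weights, client_sizes):
    """Average client parameter vectors, weighted by local dataset size.

    Only model parameters are exchanged here; raw samples stay on each client.
    """
    total = sum(client_sizes)
    dim = len(client_weights[0])
    return [
        sum(w[i] * n for w, n in zip(client_weights, client_sizes)) / total
        for i in range(dim)
    ]


# Two hypothetical clients (e.g., two wearables) with locally trained parameters.
client_weights = [[1.0, 2.0], [3.0, 6.0]]
client_sizes = [1, 3]  # number of local training samples per client

global_weights = federated_average(client_weights, client_sizes)
```

Weighting by dataset size gives clients with more local data a proportionally larger influence on the global model.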

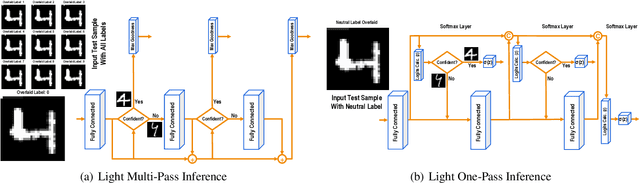

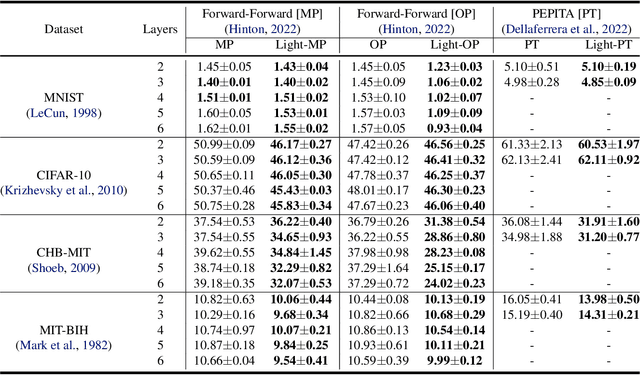

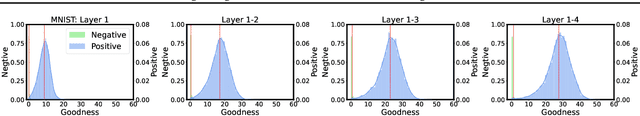

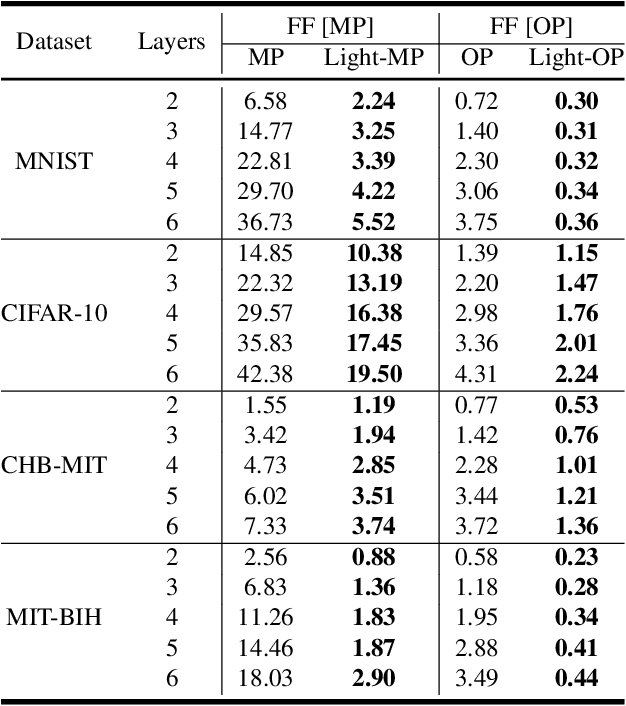

Lightweight Inference for Forward-Forward Algorithm

Apr 09, 2024

Abstract: The human brain performs tasks with outstanding energy efficiency, consuming approximately 20 watts. State-of-the-art Artificial/Deep Neural Networks (ANNs/DNNs), on the other hand, have recently been shown to consume massive amounts of energy. The training of these ANNs/DNNs is done almost exclusively with the back-propagation algorithm, which is known to be biologically implausible. This has led to a new generation of forward-only techniques, including the Forward-Forward algorithm. In this paper, we propose a lightweight inference scheme specifically designed for DNNs trained using the Forward-Forward algorithm. We evaluate our proposed lightweight inference scheme on the MNIST and CIFAR datasets, as well as on two real-world applications, namely, epileptic seizure detection and cardiac arrhythmia classification using wearable technologies, where complexity overhead and energy consumption are major constraints, and demonstrate its relevance.

Verification-Friendly Deep Neural Networks

Dec 15, 2023

Abstract: Machine learning techniques often lack formal correctness guarantees. This is evidenced by the widespread adversarial examples that plague most deep-learning applications. This has resulted in several research efforts that aim at verifying deep neural networks, with a particular focus on safety-critical applications. However, formal verification techniques still face major scalability and precision challenges when dealing with the complexity of such networks. The over-approximation introduced during the formal verification process to tackle the scalability challenge often results in inconclusive analysis. To address this challenge, we propose a novel framework to generate Verification-friendly Neural Networks (VNNs). We present a post-training optimization framework to achieve a balance between preserving prediction performance and robustness in the resulting networks. Our proposed framework results in networks that are comparable to the original ones in terms of prediction performance, while being amenable to verification. This essentially enables us to establish robustness for more VNNs than their deep neural network counterparts, in a more time-efficient manner.

Many-to-One Knowledge Distillation of Real-Time Epileptic Seizure Detection for Low-Power Wearable Internet of Things Systems

Jul 20, 2022

Abstract: Integrating low-power wearable Internet of Things (IoT) systems into routine health monitoring is an ongoing challenge. Recent advances in the computation capabilities of wearables make it possible to target complex scenarios by exploiting multiple biosignals and using high-performance algorithms, such as Deep Neural Networks (DNNs). There is, however, a trade-off between the performance of the algorithms and the low-power requirements of IoT platforms with limited resources. Besides, physically larger, multi-biosignal wearables bring significant discomfort to patients. Consequently, reducing power consumption and discomfort is necessary for patients to use IoT devices continuously during everyday life. To overcome these challenges, in the context of epileptic seizure detection, we propose a many-to-one signals knowledge distillation approach targeting single-biosignal processing in IoT wearable systems. The starting point is to obtain a highly accurate multi-biosignal DNN, and then to apply our approach to develop a single-biosignal DNN solution for IoT systems that achieves an accuracy comparable to the original multi-biosignal DNN. To assess the practicality of our approach in real-life scenarios, we perform a comprehensive simulation-based analysis on several state-of-the-art edge computing platforms, such as Kendryte K210 and Raspberry Pi Zero.
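The general shape of knowledge distillation can be sketched as follows. The example uses the widely known temperature-softened KL-divergence loss between teacher and student outputs. The abstract does not give the paper's exact loss, so this is an assumed, generic formulation. In the many-to-one setting described above, the teacher logits would come from the multi-biosignal DNN and the student logits from the single-biosignal DNN; the function names and logit values here are illustrative.

```python
import math


def softmax(logits, temperature=1.0):
    """Numerically stable softmax over temperature-scaled logits."""
    scaled = [z / temperature for z in logits]
    peak = max(scaled)
    exps = [math.exp(z - peak) for z in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def distillation_loss(teacher_logits, student_logits, temperature=2.0):
    """KL(teacher || student) between temperature-softened class distributions."""
    p = softmax(teacher_logits, temperature)
    q = softmax(student_logits, temperature)
    return sum(pi * math.log(pi / qi) for pi, qi in zip(p, q))


# Hypothetical logits for the classes (non-seizure, seizure) on one input window.
teacher_logits = [0.5, 3.0]  # from the multi-biosignal teacher (assumed)
student_logits = [1.0, 2.0]  # from the single-biosignal student (assumed)

loss = distillation_loss(teacher_logits, student_logits)
```

The loss is zero when the student reproduces the teacher's softened distribution and grows as the two diverge, so minimizing it pushes the student towards the teacher's behaviour.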

Synthetic Epileptic Brain Activities Using Generative Adversarial Networks

Jul 22, 2019

Abstract: Epilepsy is a chronic neurological disorder that affects more than 65 million people worldwide and is manifested by recurrent unprovoked seizures. The unpredictability of seizures not only degrades the quality of life of patients, but can also be life-threatening. Modern systems that monitor electroencephalography (EEG) signals are currently being developed to detect epileptic seizures, in order to alert caregivers and reduce the impact of seizures on patients' quality of life. Such seizure detection systems employ state-of-the-art machine learning algorithms that require a considerably large amount of labeled personal data for training. However, acquiring EEG signals of epileptic seizures is a costly and time-consuming process for medical experts and patients, currently requiring in-hospital recordings in specialized units. In this work, we generate synthetic seizure-like brain electrical activities, i.e., EEG signals, that can be used to train seizure detection algorithms, alleviating the need for recorded data. First, we train a Generative Adversarial Network (GAN) with data from 30 epilepsy patients. Then, we generate synthetic personalized training sets for new, unseen patients, which overall yield higher detection performance than the real-data training sets. We demonstrate our results using the datasets from the EPILEPSIAE Project, one of the world's largest public databases for seizure detection.
