Amal Zouaq

LLMs Can't Play Hangman: On the Necessity of a Private Working Memory for Language Agents

Jan 11, 2026

Abstract:As LLMs move from text completion toward autonomous agents, they remain constrained by the standard chat interface, which lacks private working memory. This raises a fundamental question: can agents reliably perform interactive tasks that depend on hidden state? We define Private State Interactive Tasks (PSITs), which require agents to generate and maintain hidden information while producing consistent public responses. We show theoretically that any agent restricted to the public conversation history cannot simultaneously preserve secrecy and consistency in PSITs, yielding an impossibility theorem. To empirically validate this limitation, we introduce a self-consistency testing protocol that evaluates whether agents can maintain a hidden secret across forked dialogue branches. Standard chat-based LLMs and retrieval-based memory baselines fail this test regardless of scale, demonstrating that semantic retrieval does not enable true state maintenance. To address this, we propose a novel architecture incorporating an explicit private working memory; we demonstrate that this mechanism restores consistency, establishing private state as a necessary component for interactive language agents.

What is the Best Process Model Representation? A Comparative Analysis for Process Modeling with Large Language Models

Jul 15, 2025

Abstract:Large Language Models (LLMs) are increasingly applied for Process Modeling (PMo) tasks such as Process Model Generation (PMG). To support these tasks, researchers have introduced a variety of Process Model Representations (PMRs) that serve as model abstractions or generation targets. However, these PMRs differ widely in structure, complexity, and usability, and have never been systematically compared. Moreover, recent PMG approaches rely on distinct evaluation strategies and generation techniques, making comparison difficult. This paper presents the first empirical study that evaluates multiple PMRs in the context of PMo with LLMs. We introduce the PMo Dataset, a new dataset containing 55 process descriptions paired with models in nine different PMRs. We evaluate PMRs along two dimensions: suitability for LLM-based PMo and performance on PMG. Mermaid achieves the highest overall score across six PMo criteria, whereas BPMN text delivers the best PMG results in terms of process element similarity.

MedHal: An Evaluation Dataset for Medical Hallucination Detection

Apr 11, 2025

Abstract:We present MedHal, a novel large-scale dataset specifically designed to evaluate if models can detect hallucinations in medical texts. Current hallucination detection methods face significant limitations when applied to specialized domains like medicine, where they can have disastrous consequences. Existing medical datasets are either too small, containing only a few hundred samples, or focus on a single task like Question Answering or Natural Language Inference. MedHal addresses these gaps by: (1) incorporating diverse medical text sources and tasks; (2) providing a substantial volume of annotated samples suitable for training medical hallucination detection models; and (3) including explanations for factual inconsistencies to guide model learning. We demonstrate MedHal's utility by training and evaluating a baseline medical hallucination detection model, showing improvements over general-purpose hallucination detection approaches. This resource enables more efficient evaluation of medical text generation systems while reducing reliance on costly expert review, potentially accelerating the development of medical AI research.

FRASE: Structured Representations for Generalizable SPARQL Query Generation

Mar 28, 2025

Abstract:Translating natural language questions into SPARQL queries enables Knowledge Base querying for factual and up-to-date responses. However, existing datasets for this task are predominantly template-based, leading models to learn superficial mappings between question and query templates rather than developing true generalization capabilities. As a result, models struggle when encountering naturally phrased, template-free questions. This paper introduces FRASE (FRAme-based Semantic Enhancement), a novel approach that leverages Frame Semantic Role Labeling (FSRL) to address this limitation. We also present LC-QuAD 3.0, a new dataset derived from LC-QuAD 2.0, in which each question is enriched using FRASE through frame detection and the mapping of frame-elements to their argument. We evaluate the impact of this approach through extensive experiments on recent large language models (LLMs) under different fine-tuning configurations. Our results demonstrate that integrating frame-based structured representations consistently improves SPARQL generation performance, particularly in challenging generalization scenarios when test questions feature unseen templates (unknown template splits) and when they are all naturally phrased (reformulated questions).

Reducing Hallucinations in Language Model-based SPARQL Query Generation Using Post-Generation Memory Retrieval

Feb 19, 2025

Abstract:The ability to generate SPARQL queries from natural language questions is crucial for ensuring efficient and accurate retrieval of structured data from knowledge graphs (KG). While large language models (LLMs) have been widely adopted for SPARQL query generation, they are often susceptible to hallucinations and out-of-distribution errors when producing KG elements like Uniform Resource Identifiers (URIs) based on internal parametric knowledge. This often results in content that appears plausible but is factually incorrect, posing significant challenges for their use in real-world information retrieval (IR) applications. This has led to increased research aimed at detecting and mitigating such errors. In this paper, we introduce PGMR (Post-Generation Memory Retrieval), a modular framework that incorporates a non-parametric memory module to retrieve KG elements and enhance LLM-based SPARQL query generation. Our experimental results indicate that PGMR consistently delivers strong performance across diverse datasets, data distributions, and LLMs. Notably, PGMR significantly mitigates URI hallucinations, nearly eliminating the problem in several scenarios.

Enhancing Frame Detection with Retrieval Augmented Generation

Feb 17, 2025

Abstract:Recent advancements in Natural Language Processing have significantly improved the extraction of structured semantic representations from unstructured text, especially through Frame Semantic Role Labeling (FSRL). Despite this progress, the potential of Retrieval-Augmented Generation (RAG) models for frame detection remains under-explored. In this paper, we present the first RAG-based approach for frame detection called RCIF (Retrieve Candidates and Identify Frames). RCIF is also the first approach to operate without the need for explicit target span and comprises three main stages: (1) generation of frame embeddings from various representations; (2) retrieval of candidate frames given an input text; and (3) identification of the most suitable frames. We conducted extensive experiments across multiple configurations, including zero-shot, few-shot, and fine-tuning settings. Our results show that our retrieval component significantly reduces the complexity of the task by narrowing the search space, thus allowing the frame identifier to refine and complete the set of candidates. Our approach achieves state-of-the-art performance on FrameNet 1.5 and 1.7, demonstrating its robustness in scenarios where only raw text is provided. Furthermore, we leverage the structured representation obtained through this method as a proxy to enhance generalization across lexical variations in the task of translating natural language questions into SPARQL queries.

Ontology-Constrained Generation of Domain-Specific Clinical Summaries

Nov 23, 2024

Abstract:Large Language Models (LLMs) offer promising solutions for text summarization. However, some domains require specific information to be available in the summaries. Generating these domain-adapted summaries is still an open challenge. Similarly, hallucinations in generated content are a major drawback of current approaches, preventing their deployment. This study proposes a novel approach that leverages ontologies to create domain-adapted summaries, both structured and unstructured. We employ an ontology-guided constrained decoding process to reduce hallucinations while improving relevance. When applied to the medical domain, our method shows potential in summarizing Electronic Health Records (EHRs) across different specialties, allowing doctors to focus on the most relevant information to their domain. Evaluation on the MIMIC-III dataset demonstrates improvements in generating domain-adapted summaries of clinical notes and hallucination reduction.

Combining Domain and Alignment Vectors to Achieve Better Knowledge-Safety Trade-offs in LLMs

Nov 11, 2024

Abstract:There is a growing interest in training domain-expert LLMs that excel in specific technical fields compared to their general-purpose instruction-tuned counterparts. However, these expert models often experience a loss in their safety abilities in the process, making them capable of generating harmful content. As a solution, we introduce an efficient and effective merging-based alignment method called MergeAlign that interpolates the domain and alignment vectors, creating safer domain-specific models while preserving their utility. We apply MergeAlign on Llama3 variants that are experts in medicine and finance, obtaining substantial alignment improvements with minimal to no degradation on domain-specific benchmarks. We study the impact of model merging through model similarity metrics and contributions of individual models being merged. We hope our findings open new research avenues and inspire more efficient development of safe expert LLMs.

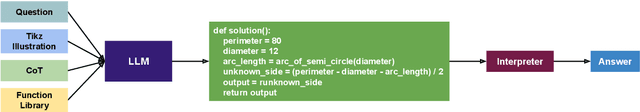

GeoCoder: Solving Geometry Problems by Generating Modular Code through Vision-Language Models

Oct 17, 2024

Abstract:Geometry problem-solving demands advanced reasoning abilities to process multimodal inputs and employ mathematical knowledge effectively. Vision-language models (VLMs) have made significant progress in various multimodal tasks. Yet, they still struggle with geometry problems and are significantly limited by their inability to perform mathematical operations not seen during pre-training, such as calculating the cosine of an arbitrary angle, and by difficulties in correctly applying relevant geometry formulas. To overcome these challenges, we present GeoCoder, which leverages modular code-finetuning to generate and execute code using a predefined geometry function library. By executing the code, we achieve accurate and deterministic calculations, contrasting the stochastic nature of autoregressive token prediction, while the function library minimizes errors in formula usage. We also propose a multimodal retrieval-augmented variant of GeoCoder, named RAG-GeoCoder, which incorporates a non-parametric memory module for retrieving functions from the geometry library, thereby reducing reliance on parametric memory. Our modular code-finetuning approach enhances the geometric reasoning capabilities of VLMs, yielding an average improvement of over 16% across various question complexities on the GeomVerse dataset compared to other finetuning methods.

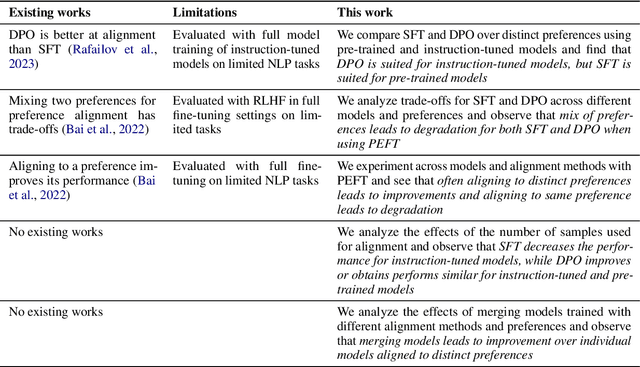

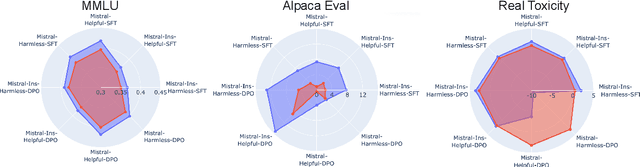

A Deep Dive into the Trade-Offs of Parameter-Efficient Preference Alignment Techniques

Jun 07, 2024

Abstract:Large language models are first pre-trained on trillions of tokens and then instruction-tuned or aligned to specific preferences. While pre-training remains out of reach for most researchers due to the compute required, fine-tuning has become affordable thanks to parameter-efficient methods such as LoRA and QLoRA. Alignment is known to be sensitive to the many factors involved, including the quantity and quality of data, the alignment method, and the adapter rank. However, there has not yet been an extensive study of their effect on downstream performance. To address this gap, we conduct an in-depth investigation of the impact of popular choices for three crucial axes: (i) the alignment dataset (HH-RLHF and BeaverTails), (ii) the alignment technique (SFT and DPO), and (iii) the model (LLaMA-1, Vicuna-v1.3, Mistral-7b, and Mistral-7b-Instruct). Our extensive setup spanning over 300 experiments reveals consistent trends and unexpected findings. We observe how more informative data helps with preference alignment, cases where supervised fine-tuning outperforms preference optimization, and how aligning to a distinct preference boosts performance on downstream tasks. Through our in-depth analyses, we put forward key guidelines to help researchers perform more effective parameter-efficient LLM alignment.
