Alice Wu

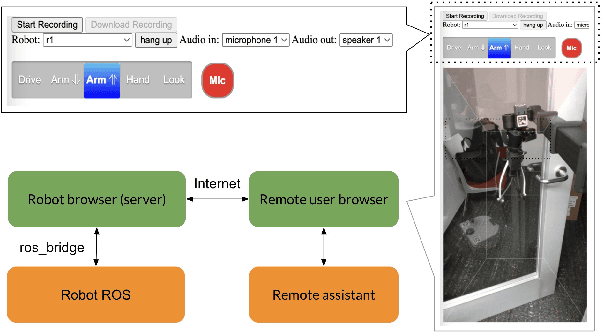

WeHelp: A Shared Autonomy System for Wheelchair Users

Sep 19, 2024

Abstract: There is a large population of wheelchair users, most of whom need help with daily tasks. However, according to recent reports, their needs are not adequately met because of a shortage of caregivers. In this project, we therefore develop WeHelp, a shared autonomy system aimed at wheelchair users. A robot running WeHelp has three modes: following mode, remote control mode, and teleoperation mode. In following mode, the robot follows the wheelchair user automatically via visual tracking; the user can ask it to follow from behind, on the left, or on the right. When the wheelchair user asks for help, the robot recognizes the command via speech recognition and switches to teleoperation mode or remote control mode. In teleoperation mode, the wheelchair user takes over the robot with a joystick and controls it to complete complex tasks such as opening doors, moving obstacles out of the way, or reaching objects on a high shelf or on the ground. In remote control mode, a remote assistant takes over the robot and helps the wheelchair user complete such tasks. Our evaluation shows that the pipeline is useful and practical for wheelchair users. Source code and a demo are available at https://github.com/Walleclipse/WeHelp.
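
The mode switching described in the abstract can be pictured as a small state machine. The following is a minimal sketch only, not the WeHelp implementation: the class names, the spoken phrases, and the rule that a follow position can be changed only while in following mode are all assumptions made for illustration.

```python
from enum import Enum


class Mode(Enum):
    FOLLOWING = "following"
    TELEOPERATION = "teleoperation"
    REMOTE_CONTROL = "remote_control"


class Side(Enum):
    BEHIND = "behind"
    LEFT = "left"
    RIGHT = "right"


# Hypothetical phrases; the abstract does not list the actual voice commands.
MODE_COMMANDS = {
    "follow me": Mode.FOLLOWING,
    "let me drive": Mode.TELEOPERATION,
    "call assistant": Mode.REMOTE_CONTROL,
}
SIDE_COMMANDS = {
    "stay behind": Side.BEHIND,
    "stay left": Side.LEFT,
    "stay right": Side.RIGHT,
}


class ModeController:
    """Tracks the active mode and the follow position of the robot."""

    def __init__(self):
        self.mode = Mode.FOLLOWING
        self.side = Side.BEHIND

    def on_speech(self, transcript):
        """Update state from a recognized utterance and return the active mode."""
        text = transcript.strip().lower()
        if text in MODE_COMMANDS:
            self.mode = MODE_COMMANDS[text]
        elif text in SIDE_COMMANDS and self.mode is Mode.FOLLOWING:
            # Assumption: the follow position only matters in following mode.
            self.side = SIDE_COMMANDS[text]
        # Unrecognized utterances leave the state unchanged.
        return self.mode


controller = ModeController()
controller.on_speech("stay left")       # still following, now on the left
controller.on_speech("Let me drive")    # user takes over with the joystick
```

Keeping the phrase tables separate from the controller means a real speech recognizer could be swapped in without touching the transition logic.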

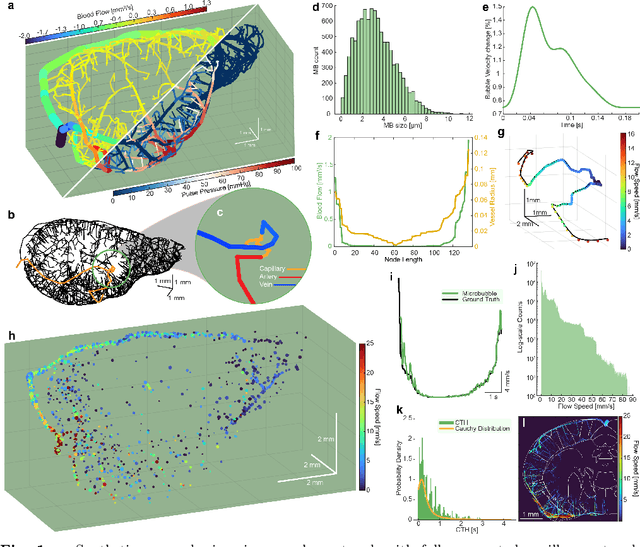

Functional Assessment of Cerebral Capillaries using Single Capillary Reporters in Ultrasound Localization Microscopy

Jul 11, 2024

Abstract: The brain's microvascular cerebral capillary network plays a vital role in maintaining neuronal health, yet capillary dynamics are still not well understood due to limitations in existing imaging techniques. Here, we present Single Capillary Reporters (SCaRe) for transcranial Ultrasound Localization Microscopy (ULM), a novel approach enabling non-invasive, whole-brain mapping of single capillaries and estimates of their transit time as a neurovascular biomarker. We accomplish this first through computational Monte Carlo and ultrasound simulations of microbubbles flowing through a fully connected capillary network. We unveil distinct capillary flow behaviors, which inform methodological changes to ULM acquisitions to better capture capillaries in vivo. Subsequently, applying SCaRe-ULM in vivo, we achieve unprecedented visualization of single capillary tracks across brain regions, analysis of layer-specific capillary heterogeneous transit times (CHT), and characterization of whole microbubble trajectories from arterioles to venules. Lastly, we evaluate capillary biomarkers using injected lipopolysaccharide to induce systemic neuroinflammation and track the increase in SCaRe-ULM CHT, demonstrating the capability to detect subtle capillary functional changes. SCaRe-ULM represents a significant advance in studying microvascular dynamics, offering novel avenues for investigating capillary patterns in neurological disorders and potential diagnostic applications.

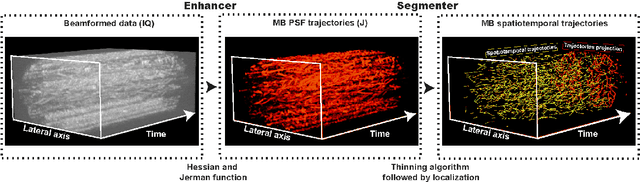

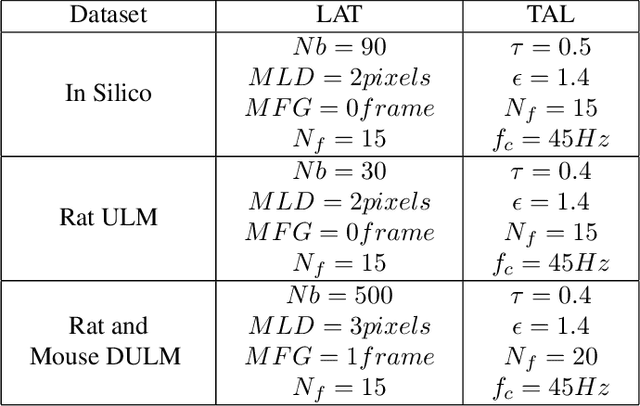

A Tracking prior to Localization workflow for Ultrasound Localization Microscopy

Aug 04, 2023

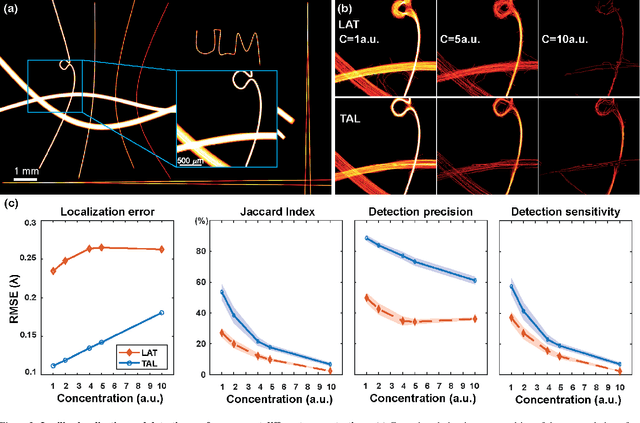

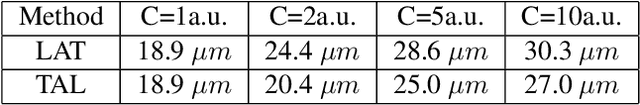

Abstract: Ultrasound Localization Microscopy (ULM) has proven effective in resolving microvascular structures and local mean velocities at sub-diffraction-limited scales, offering high-resolution imaging capabilities. Dynamic ULM (DULM) enables the creation of angiography or velocity movies throughout cardiac cycles. Currently, these techniques rely on a Localization-and-Tracking (LAT) workflow, which consists of detecting microbubbles (MB) in the frames before pairing them to generate tracks. While conventional LAT methods perform well at low concentrations, they suffer from longer acquisition times and degraded localization and tracking accuracy at higher concentrations, leading to biased angiogram reconstruction and velocity estimation. In this study, we propose a novel approach to address these challenges by reversing the current workflow. The proposed method, Tracking-and-Localization (TAL), relies on first tracking the MB and then performing localization. Through comprehensive benchmarking using both in silico and in vivo experiments and employing various metrics to quantify ULM angiography and velocity maps, we demonstrate that the TAL method consistently outperforms the reference LAT workflow. Moreover, when applied to DULM, TAL successfully extracts velocity variations along the cardiac cycle with improved repeatability. The findings of this work highlight the effectiveness of the TAL approach in overcoming the limitations of conventional LAT methods, providing enhanced ULM angiography and velocity imaging.

Practice with Graph-based ANN Algorithms on Sparse Data: Chi-square Two-tower model, HNSW, Sign Cauchy Projections

Jun 13, 2023

Abstract: Sparse data are common. The traditional "handcrafted" features are often sparse. Embedding vectors from trained models can also be very sparse, for example, embeddings trained via the "ReLU" activation function. In this paper, we report our exploration of efficient search in sparse data with graph-based ANN algorithms (e.g., HNSW, or SONG, which is the GPU version of HNSW), which are popular in industrial practice, e.g., search and ads (advertising). We experiment with the proprietary ads targeting application, as well as benchmark public datasets. For ads targeting, we train embeddings with the standard "cosine two-tower" model and we also develop the "chi-square two-tower" model. Both models produce (highly) sparse embeddings when they are integrated with the "ReLU" activation function. In EBR (embedding-based retrieval) applications, after the embeddings are trained, the next crucial task is the approximate near neighbor (ANN) search for serving. While there are many ANN algorithms we can choose from, in this study, we focus on the graph-based ANN algorithm (e.g., HNSW-type). Sparse embeddings should help improve the efficiency of EBR. One benefit is the reduced memory cost for the embeddings. The other obvious benefit is the reduced computational time for evaluating similarities, because, for graph-based ANN algorithms such as HNSW, computing similarities is often the dominating cost. In addition to the effort on leveraging data sparsity for storage and computation, we also integrate "sign Cauchy random projections" (SignCRP) to hash vectors to bits, to further reduce the memory cost and speed up the ANN search. In NIPS'13, SignCRP was proposed to hash the chi-square similarity, which is a well-adopted nonlinear kernel in NLP and computer vision. Therefore, the chi-square two-tower model, SignCRP, and HNSW are now tightly integrated.
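
To make the hashing step concrete, here is a minimal, standard-library-only sketch of sign Cauchy random projections applied to sparse non-negative vectors. It is an illustration under stated assumptions, not the paper's implementation: the function names are hypothetical, the chi-square similarity is taken to be the usual form sum(2·u_i·v_i / (u_i + v_i)) for non-negative vectors that each sum to 1, and the projection matrix is dense for simplicity.

```python
import math
import random


def l1_normalize(x):
    """Scale a non-negative vector so its entries sum to 1 (assumes a non-zero sum)."""
    total = sum(x)
    return [xi / total for xi in x]


def chi_square_similarity(u, v):
    """Chi-square similarity of two non-negative, sum-to-one vectors."""
    return sum(2.0 * a * b / (a + b) for a, b in zip(u, v) if a + b > 0)


def cauchy_matrix(dim, num_bits, seed=0):
    """Draw a num_bits x dim matrix of i.i.d. standard Cauchy entries."""
    rng = random.Random(seed)
    # Inverse-CDF sampling: tan(pi * (U - 0.5)) is standard Cauchy for U ~ Uniform(0, 1).
    return [[math.tan(math.pi * (rng.random() - 0.5)) for _ in range(dim)]
            for _ in range(num_bits)]


def sign_crp(x, proj):
    """Hash x to bits: one sign bit per Cauchy projection, skipping zero entries of x."""
    bits = []
    for row in proj:
        s = sum(r * xi for r, xi in zip(row, x) if xi != 0.0)
        bits.append(1 if s >= 0 else 0)
    return bits


def hamming(a, b):
    """Number of positions where two bit lists differ."""
    return sum(p != q for p, q in zip(a, b))


proj = cauchy_matrix(dim=6, num_bits=64, seed=0)
u = l1_normalize([0.0, 3.0, 0.0, 1.0, 0.0, 0.0])  # sparse, ReLU-like vectors
v = l1_normalize([0.0, 2.0, 0.0, 2.0, 0.0, 0.0])
print(chi_square_similarity(u, v), hamming(sign_crp(u, proj), sign_crp(v, proj)))
```

Each vector is stored as 64 bits regardless of its dimension, and comparing two hashes reduces to a Hamming distance, which is where the memory and speed savings mentioned in the abstract would come from. Skipping zero entries in the inner loop is how sparsity reduces the projection cost in this sketch.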
