Alexander Raake

Fine-Grained HDR Image Quality Assessment From Noticeably Distorted to Very High Fidelity

Jun 14, 2025

Abstract:High dynamic range (HDR) and wide color gamut (WCG) technologies significantly improve color reproduction compared to standard dynamic range (SDR) and standard color gamuts, resulting in more accurate, richer, and more immersive images. However, HDR increases data demands, posing challenges for bandwidth efficiency and compression techniques. Advances in compression and display technologies require more precise image quality assessment, particularly in the high-fidelity range where perceptual differences are subtle. To address this gap, we introduce AIC-HDR2025, the first such HDR dataset, comprising 100 test images generated from five HDR sources, each compressed using four codecs at five compression levels. It covers the high-fidelity range, from visible distortions to compression levels below the visually lossless threshold. A subjective study was conducted using the JPEG AIC-3 test methodology, combining plain and boosted triplet comparisons. In total, 34,560 ratings were collected from 151 participants across four fully controlled labs. The results confirm that AIC-3 enables precise HDR quality estimation, with 95% confidence intervals averaging a width of 0.27 at 1 JND. In addition, several recently proposed objective metrics were evaluated based on their correlation with subjective ratings. The dataset is publicly available.

Appeal prediction for AI up-scaled Images

Feb 19, 2025

Abstract:DNN- or AI-based up-scaling algorithms are gaining in popularity due to the improvements in machine learning. Various up-scaling models using CNNs, GANs or mixed approaches have been published. The majority of models are evaluated using PSNR and SSIM or only a few example images. However, a performance evaluation with a wide range of real-world images and subjective evaluation is missing, which we tackle in this paper. For this reason, we describe our developed dataset, which uses 136 base images and five different up-scaling methods, namely Real-ESRGAN, BSRGAN, waifu2x, KXNet, and Lanczos. Overall the dataset consists of 1496 annotated images. The labeling of our dataset focused on image appeal and has been performed using crowd-sourcing employing our open-source tool AVRate Voyager. We evaluate the appeal of the different methods, and the results indicate that Real-ESRGAN and BSRGAN are the best. Furthermore, we train a DNN to detect which up-scaling method has been used; the trained models have a good overall performance in our evaluation. In addition, we evaluate state-of-the-art image appeal and quality models. None of these models showed a high prediction performance, so we also trained two approaches of our own. The first uses transfer learning and has the best performance, and the second uses signal-based features and a random forest model with good overall performance. We share the data and implementation to allow further research in the context of open science.

Satellite Streaming Video QoE Prediction: A Real-World Subjective Database and Network-Level Prediction Models

Oct 17, 2024

Abstract:Demand for streaming services, including satellite, continues to exhibit unprecedented growth. Internet Service Providers find themselves at the crossroads of technological advancements and rising customer expectations. To stay relevant and competitive, these ISPs must ensure their networks deliver optimal video streaming quality, a key determinant of user satisfaction. Towards this end, it is important to have accurate Quality of Experience prediction models in place. However, achieving robust performance by these models requires extensive data sets labeled by subjective opinion scores on videos impaired by diverse playback disruptions. To bridge this data gap, we introduce the LIVE-Viasat Real-World Satellite QoE Database. This database consists of 179 videos recorded from real-world streaming services affected by various authentic distortion patterns. We also conducted a comprehensive subjective study involving 54 participants, who contributed both continuous-time opinion scores and endpoint (retrospective) QoE scores. Our analysis sheds light on various determinants influencing subjective QoE, such as stall events, spatial resolutions, bitrate, and certain network parameters. We demonstrate the usefulness of this unique new resource by evaluating the efficacy of prevalent QoE-prediction models on it. We also created a new model that maps the network parameters to predicted human perception scores, which can be used by ISPs to optimize the video streaming quality of their networks. Our proposed model, which we call SatQA, is able to accurately predict QoE using only network parameters, without any access to pixel data or video-specific metadata. Its performance, as measured by Spearman's Rank Order Correlation Coefficient (SROCC), Pearson Linear Correlation Coefficient (PLCC), and Root Mean Squared Error (RMSE), indicates high accuracy and reliability.

Power Reduction Opportunities on End-User Devices in Quality-Steady Video Streaming

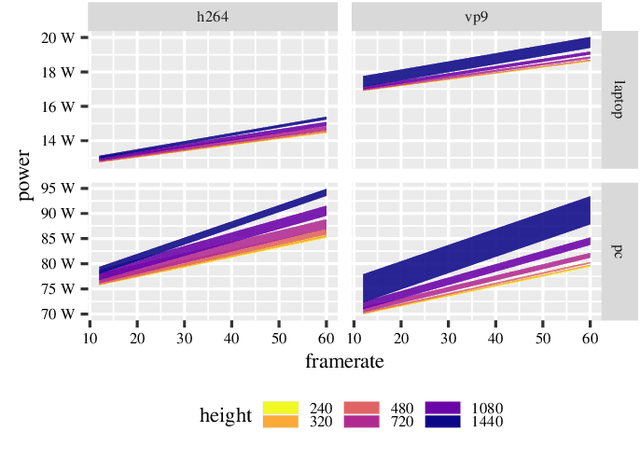

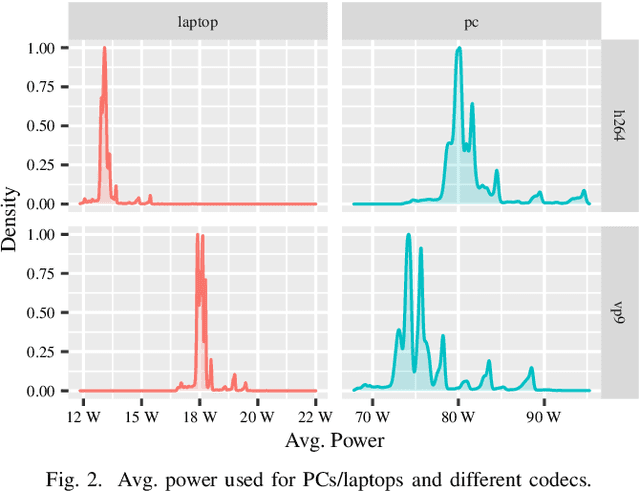

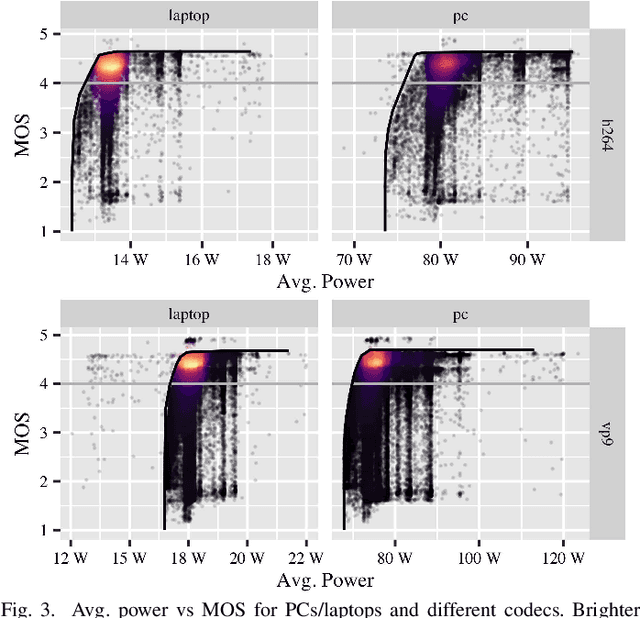

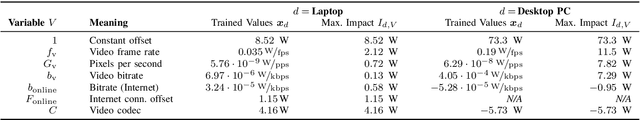

May 24, 2023

Abstract:This paper uses a crowdsourced dataset of online video streaming sessions to investigate opportunities to reduce the power consumption while considering QoE. For this, we base our work on prior studies which model both the end-user's QoE and the end-user device's power consumption with the help of high-level video features such as the bitrate, the frame rate, and the resolution. Building on existing research, which focused on reducing the power consumption at the same QoE by optimizing video parameters, we investigate potential power savings by other means such as using a different playback device, a different codec, or a predefined maximum quality level. We find that, based on the power consumption of the streaming sessions from the crowdsourcing dataset, devices could save more than 55% of power if all participants adhere to low-power settings.

Audiovisual Database with 360 Video and Higher-Order Ambisonics Audio for Perception, Cognition, Behavior, and QoE Evaluation Research

Dec 27, 2022

Abstract:Research into multi-modal perception, human cognition, behavior, and attention can benefit from high-fidelity content that may recreate real-life-like scenes when rendered on head-mounted displays. Moreover, aspects of audiovisual perception, cognitive processes, and behavior may complement questionnaire-based Quality of Experience (QoE) evaluation of interactive virtual environments. Currently, there is a lack of high-quality open-source audiovisual databases that can be used to evaluate such aspects or systems capable of reproducing high-quality content. With this paper, we provide a publicly available audiovisual database consisting of twelve scenes capturing real-life nature and urban environments with a video resolution of 7680x3840 at 60 frames-per-second and with 4th-order Ambisonics audio. These 360 video sequences, with an average duration of 60 seconds, represent real-life settings for systematically evaluating various dimensions of uni-/multi-modal perception, cognition, behavior, and QoE. The paper provides details of the scene requirements, recording approach, and scene descriptions. The database provides high-quality reference material with a balanced focus on auditory and visual sensory information. The database will be continuously updated with additional scenes and further metadata such as human ratings and saliency information.

Modeling of Energy Consumption and Streaming Video QoE using a Crowdsourcing Dataset

Oct 11, 2022

Abstract:In the past decade, we have witnessed an enormous growth in the demand for online video services. Recent studies estimate that nowadays, more than 1% of the global greenhouse gas emissions can be attributed to the production and use of devices performing online video tasks. As such, research on the true power consumption of devices and their energy efficiency during video streaming is highly important for a sustainable use of this technology. At the same time, over-the-top providers strive to offer high-quality streaming experiences to satisfy user expectations. Here, energy consumption and QoE partly depend on the same system parameters. Hence, a joint view is needed for their evaluation. In this paper, we perform a first analysis of both end-user power efficiency and Quality of Experience of a video streaming service. We take a crowdsourced dataset comprising 447,000 streaming events from YouTube and estimate both the power consumption and perceived quality. The power consumption is modeled based on previous work which we extended towards predicting the power usage of different devices and codecs. The user-perceived QoE is estimated using a standardized model. Our results indicate that an intelligent choice of streaming parameters can optimize both the QoE and the power efficiency of the end user device. Further, the paper discusses limitations of the approach and identifies directions for future research.

* 6 pages, 3 figures

Semantic-driven Colorization

Jun 18, 2020

Abstract:Recent deep colorization works predict the semantic information implicitly while learning to colorize black-and-white photographic images. As a consequence, the generated color overflows more easily, and semantic faults remain invisible. When humans color a photo, they first recognize which objects are present and where they are located, imagine which colors are plausible for those objects in real life, and then colorize them. In this study, we simulate that human-like process by first letting our network learn to segment what is in the photo and then colorize it. Therefore, our network can choose a plausible color under semantic constraints for specific objects and give discriminative colors between them. Moreover, the segmentation map becomes understandable and interactive for the user. Our models are trained on PASCAL-Context and evaluated on selected images from the public domain and COCO-Stuff, which has several unseen categories compared to the training data. As seen from the experimental results, our colorization system can provide plausible colors for specific objects and generate harmonious colors competitive with state-of-the-art methods.

INSPIRE: Evaluation of a Smart-Home System for Infotainment Management and Device Control

Oct 25, 2004

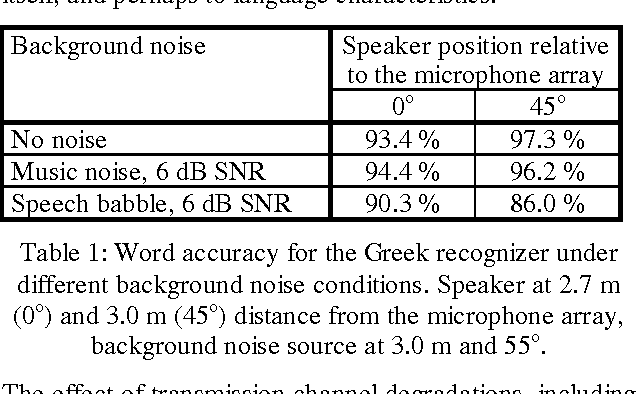

Abstract:This paper gives an overview of the assessment and evaluation methods which have been used to determine the quality of the INSPIRE smart home system. The system allows different home appliances to be controlled via speech, and consists of speech and speaker recognition, speech understanding, dialogue management, and speech output components. The performance of these components is first assessed individually, and then the entire system is evaluated in an interaction experiment with test users. Initial results of the assessment and evaluation are given, in particular with respect to the transmission channel impact on speech and speaker recognition, and the assessment of speech output for different system metaphors.

* 4 pages
