Alessandro Leite

TAU

Partition Trees: Conditional Density Estimation over General Outcome Spaces

Feb 03, 2026

Abstract: We propose Partition Trees, a tree-based framework for conditional density estimation over general outcome spaces, supporting both continuous and categorical variables within a unified formulation. Our approach models conditional distributions as piecewise-constant densities on data-adaptive partitions and learns trees by directly minimizing conditional negative log-likelihood. This yields a scalable, nonparametric alternative to existing probabilistic trees that does not make parametric assumptions about the target distribution. We further introduce Partition Forests, an ensemble extension obtained by averaging conditional densities. Empirically, we demonstrate improved probabilistic prediction over CART-style trees and competitive or superior performance compared to state-of-the-art probabilistic tree methods and Random Forests, along with robustness to redundant features and heteroscedastic noise.
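To make the notion of a piecewise-constant density scored by negative log-likelihood concrete, here is a minimal sketch for a single leaf with a one-dimensional continuous outcome. It is an illustration under stated assumptions, not the paper's algorithm: the function name `piecewise_constant_nll`, the fixed bin `edges`, and the in-sample evaluation are all hypothetical choices made for this example.

```python
import math


def piecewise_constant_nll(y_values, edges):
    """Average in-sample negative log-likelihood of a histogram density.

    Assumption for this sketch: `edges` is a sorted list of bin boundaries
    that covers every value in `y_values`.
    """
    n = len(y_values)
    n_bins = len(edges) - 1
    counts = [0] * n_bins

    # Assign each outcome to its bin; the last bin is closed on the right.
    for y in y_values:
        for k in range(n_bins):
            in_bin = edges[k] <= y < edges[k + 1]
            at_right_end = k == n_bins - 1 and y == edges[-1]
            if in_bin or at_right_end:
                counts[k] += 1
                break
        else:
            raise ValueError(f"value {y} lies outside the bin edges")

    # Density in bin k is (fraction of points in k) / (width of k).
    nll = 0.0
    for k, c in enumerate(counts):
        if c > 0:
            density = c / (n * (edges[k + 1] - edges[k]))
            nll -= c * math.log(density)
    return nll / n
```

Comparing the value for a coarse partition with the value for a refined one gives a simple criterion for whether a split of the outcome space improves the fit, which is the kind of comparison a likelihood-driven tree would make.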

Position: Causal Machine Learning Requires Rigorous Synthetic Experiments for Broader Adoption

Aug 12, 2025

Abstract: Causal machine learning has the potential to revolutionize decision-making by combining the predictive power of machine learning algorithms with the theory of causal inference. However, these methods remain underutilized by the broader machine learning community, in part because current empirical evaluations do not permit assessment of their reliability and robustness, undermining their practical utility. Specifically, one of the principal criticisms made by the community is the extensive use of synthetic experiments. We argue, on the contrary, that synthetic experiments are essential and necessary to precisely assess and understand the capabilities of causal machine learning methods. To substantiate our position, we critically review the current evaluation practices, spotlight their shortcomings, and propose a set of principles for conducting rigorous empirical analyses with synthetic data. Adopting the proposed principles will enable comprehensive evaluations that build trust in causal machine learning methods, driving their broader adoption and impactful real-world use.

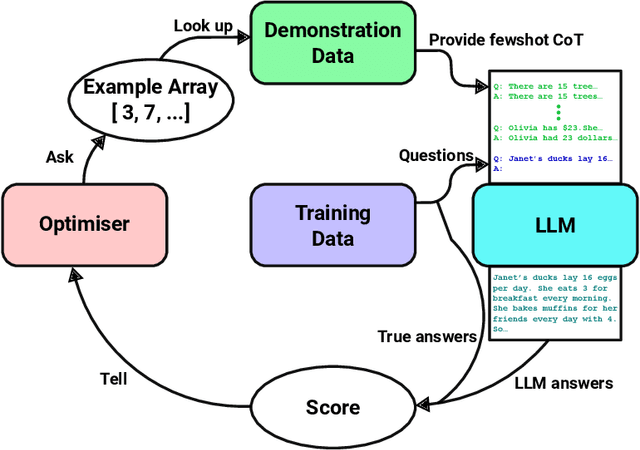

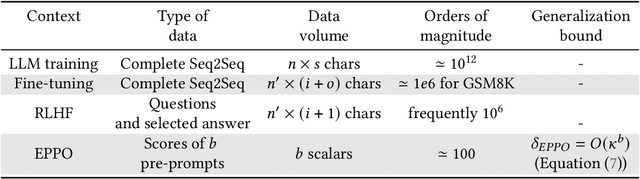

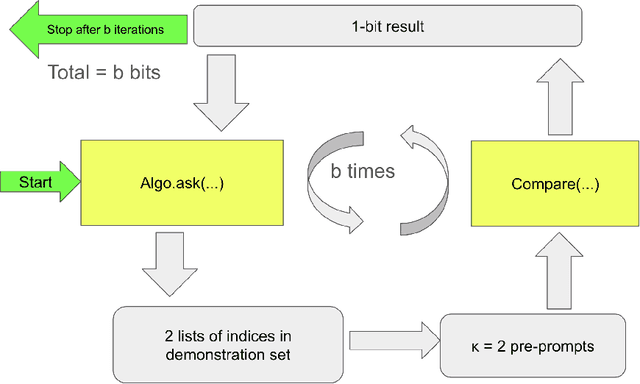

Tuning without Peeking: Provable Privacy and Generalization Bounds for LLM Post-Training

Jul 02, 2025

Abstract: Gradient-based optimization is the workhorse of deep learning, offering efficient and scalable training via backpropagation. However, its reliance on large volumes of labeled data raises privacy and security concerns such as susceptibility to data poisoning attacks and the risk of overfitting. In contrast, black-box optimization methods, which treat the model as an opaque function, relying solely on function evaluations to guide optimization, offer a promising alternative in scenarios where data access is restricted, adversarial risks are high, or overfitting is a concern. However, black-box methods also pose significant challenges, including poor scalability to high-dimensional parameter spaces, as prevalent in large language models (LLMs), and high computational costs due to reliance on numerous model evaluations. This paper introduces BBoxER, an evolutionary black-box method for LLM post-training that induces an information bottleneck via implicit compression of the training data. Leveraging the tractability of information flow, we provide strong theoretical bounds on generalization, differential privacy, susceptibility to data poisoning attacks, and robustness to extraction attacks. BBoxER operates on top of pre-trained LLMs, offering a lightweight and modular enhancement suitable for deployment in restricted or privacy-sensitive environments, in addition to non-vacuous generalization guarantees. In experiments with LLMs, we demonstrate empirically that Retrofitting methods are able to learn, showing how a few iterations of BBoxER improve performance and generalize well on a benchmark of reasoning datasets. This positions BBoxER as an attractive add-on on top of gradient-based optimization.

From Bytes to Ideas: Language Modeling with Autoregressive U-Nets

Jun 17, 2025

Abstract: Tokenization imposes a fixed granularity on the input text, freezing how a language model operates on data and how far in the future it predicts. Byte Pair Encoding (BPE) and similar schemes split text once, build a static vocabulary, and leave the model stuck with that choice. We relax this rigidity by introducing an autoregressive U-Net that learns to embed its own tokens as it trains. The network reads raw bytes, pools them into words, then pairs of words, then up to 4 words, giving it a multi-scale view of the sequence. At deeper stages, the model must predict further into the future -- anticipating the next few words rather than the next byte -- so deeper stages focus on broader semantic patterns while earlier stages handle fine details. When carefully tuning and controlling pretraining compute, shallow hierarchies tie strong BPE baselines, and deeper hierarchies have a promising trend. Because tokenization now lives inside the model, the same system can handle character-level tasks and carry knowledge across low-resource languages.

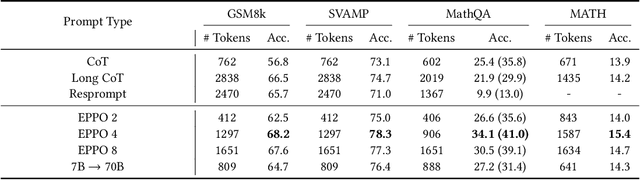

Evolutionary Pre-Prompt Optimization for Mathematical Reasoning

Dec 05, 2024

Abstract: Recent advancements have highlighted that large language models (LLMs), when given a small set of task-specific examples, demonstrate remarkable proficiency, a capability that extends to complex reasoning tasks. In particular, the combination of few-shot learning with the chain-of-thought (CoT) approach has been pivotal in steering models towards more logically consistent conclusions. This paper explores the optimization of example selection for designing effective CoT pre-prompts and shows that the choice of the optimization algorithm, typically in favor of comparison-based methods such as evolutionary computation, significantly enhances efficacy and feasibility. Specifically, thanks to a limited exploitative and overfitted optimization, Evolutionary Pre-Prompt Optimization (EPPO) brings an improvement over the naive few-shot approach exceeding 10 absolute points in exact match scores on benchmark datasets such as GSM8k and MathQA. These gains are consistent across various contexts and are further amplified when integrated with self-consistency (SC).

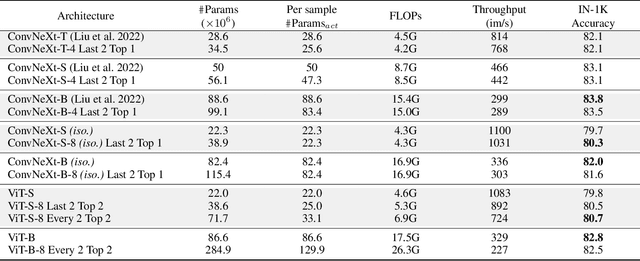

Mixture of Experts in Image Classification: What's the Sweet Spot?

Nov 27, 2024

Abstract: Mixture-of-Experts (MoE) models have shown promising potential for parameter-efficient scaling across various domains. However, the implementation in computer vision remains limited, and often requires large-scale datasets comprising billions of samples. In this study, we investigate the integration of MoE within computer vision models and explore various MoE configurations on open datasets. When introducing MoE layers in image classification, the best results are obtained for models with a moderate number of activated parameters per sample. However, such improvements gradually vanish when the number of parameters per sample increases.

Evolutionary Retrofitting

Oct 15, 2024

Abstract: AfterLearnER (After Learning Evolutionary Retrofitting) consists in applying non-differentiable optimization, including evolutionary methods, to refine fully-trained machine learning models by optimizing a set of carefully chosen parameters or hyperparameters of the model, with respect to some actual, exact, and hence possibly non-differentiable error signal, performed on a subset of the standard validation set. The efficiency of AfterLearnER is demonstrated by tackling non-differentiable signals such as threshold-based criteria in depth sensing, the word error rate in speech re-synthesis, image quality in 3D generative adversarial networks (GANs), image generation via Latent Diffusion Models (LDM), the number of kills per life at Doom, computational accuracy or BLEU in code translation, and human appreciations in image synthesis. In some cases, this retrofitting is performed dynamically at inference time by taking into account user inputs. The advantages of AfterLearnER are its versatility (no gradient is needed), the possibility to use non-differentiable feedback including human evaluations, its limited overfitting (supported by a theoretical study), and its anytime behavior. Last but not least, AfterLearnER requires only a minimal amount of feedback, i.e., a few dozen to a few hundred scalars, rather than the tens of thousands needed in most related published works. Compared to fine-tuning (typically using the same loss, and gradient-based optimization on a smaller but still big dataset at a fine grain), AfterLearnER uses a minimum amount of data on the real objective function without requiring differentiability.
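As a rough illustration of retrofitting a single parameter against a non-differentiable error signal, the sketch below runs a basic (1+1) evolution strategy with a simple multiplicative step-size rule. It is not the AfterLearnER implementation: the function `one_plus_one_es`, the step-size constants, and the toy threshold-tuning problem are assumptions introduced for this example only.

```python
import random


def one_plus_one_es(error_fn, x0, sigma=0.1, budget=200, seed=0):
    """Minimize a scalar, possibly non-differentiable error with a (1+1)-ES.

    Only error values are compared, so no gradient is needed. The best
    point found so far is always kept (anytime behavior).
    """
    rng = random.Random(seed)
    best_x, best_err = x0, error_fn(x0)
    for _ in range(budget):
        candidate = best_x + sigma * rng.gauss(0.0, 1.0)
        err = error_fn(candidate)
        if err <= best_err:
            # Accept ties so the search can drift across plateaus.
            best_x, best_err = candidate, err
            sigma *= 1.5
        else:
            sigma *= 0.9
    return best_x, best_err


# Hypothetical toy task: tune a decision threshold on a few validation points.
scores = [0.1, 0.4, 0.6, 0.9]
labels = [0, 0, 1, 1]


def misclassified(threshold):
    """Count validation points whose thresholded score disagrees with the label."""
    return sum(int(s > threshold) != y for s, y in zip(scores, labels))


threshold, errors = one_plus_one_es(misclassified, x0=0.7)
```

The error count here is piecewise constant in the threshold, so a gradient would be zero almost everywhere; the comparison-based search only needs the handful of scalar evaluations it requests.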

Learning Structural Causal Models through Deep Generative Models: Methods, Guarantees, and Challenges

May 08, 2024

Abstract: This paper provides a comprehensive review of deep structural causal models (DSCMs), particularly focusing on their ability to answer counterfactual queries using observational data within known causal structures. It delves into the characteristics of DSCMs by analyzing the hypotheses, guarantees, and applications inherent to the underlying deep learning components and structural causal models, fostering a finer understanding of their capabilities and limitations in addressing different counterfactual queries. Furthermore, it highlights the challenges and open questions in the field of deep structural causal modeling. It sets the stage for researchers to identify future work directions and for practitioners to gain an overview and identify the most appropriate methods for their needs.

Conformal Approach To Gaussian Process Surrogate Evaluation With Coverage Guarantees

Jan 15, 2024

Abstract: Gaussian processes (GPs) are a Bayesian machine learning approach widely used to construct surrogate models for the uncertainty quantification of computer simulation codes in industrial applications. They provide both a mean predictor and an estimate of the posterior prediction variance, the latter being used to produce Bayesian credibility intervals. Interpreting these intervals relies on the Gaussianity of the simulation model as well as the well-specification of the priors, assumptions that are not always appropriate. We propose to address this issue with the help of conformal prediction. In the present work, a method for building adaptive cross-conformal prediction intervals is proposed by weighting the non-conformity score with the posterior standard deviation of the GP. The resulting conformal prediction intervals exhibit a level of adaptivity akin to Bayesian credibility sets and display a significant correlation with the local approximation error of the surrogate model, while being free from the underlying model assumptions and having frequentist coverage guarantees. These estimators can thus be used for evaluating the quality of a GP surrogate model and can assist a decision-maker in the choice of the best prior for the specific application of the GP. The performance of the method is illustrated through a panel of numerical examples based on various reference databases. Moreover, the potential applicability of the method is demonstrated in the context of surrogate modeling of an expensive-to-evaluate simulator of the clogging phenomenon in steam generators of nuclear reactors.
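The idea of weighting the non-conformity score by the posterior standard deviation can be sketched as follows. Note the substitution: this example uses plain split conformal prediction on a held-out calibration set rather than the cross-conformal scheme of the paper, and the function name and arguments are hypothetical. The means and standard deviations are assumed to come from an already fitted GP.

```python
import math


def normalized_conformal_interval(cal_y, cal_mean, cal_std,
                                  new_mean, new_std, alpha=0.1):
    """Split-conformal interval with scores |y - mean| / std.

    cal_* are observations and GP posterior means / standard deviations on
    a calibration set; new_mean and new_std are the GP posterior at the
    query point. Standard deviations are assumed strictly positive.
    """
    scores = sorted(abs(y - m) / s
                    for y, m, s in zip(cal_y, cal_mean, cal_std))
    n = len(scores)

    # Conformal quantile: the ceil((n + 1)(1 - alpha))-th smallest score.
    rank = math.ceil((n + 1) * (1 - alpha))
    if rank > n:
        # Too few calibration points for this alpha: no finite guarantee.
        return (-math.inf, math.inf)

    q = scores[rank - 1]
    # Rescale by the local posterior std so the width adapts to the input.
    return (new_mean - q * new_std, new_mean + q * new_std)
```

Because the quantile is multiplied by the posterior standard deviation at the query point, the interval widens where the GP is uncertain and narrows where it is confident, while the coverage argument relies only on exchangeability of the calibration scores.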
