Alessandro Albini

Diffusion-based Inverse Model of a Distributed Tactile Sensor for Object Pose Estimation

Jan 19, 2026

Abstract:Tactile sensing provides a promising sensing modality for object pose estimation in manipulation settings where visual information is limited due to occlusion or environmental effects. However, efficiently leveraging tactile data for estimation remains a challenge due to partial observability, with single observations corresponding to multiple possible contact configurations. This limits conventional estimation approaches largely tailored to vision. We propose to address these challenges by learning an inverse tactile sensor model using denoising diffusion. The model is conditioned on tactile observations from a distributed tactile sensor and trained in simulation using a geometric sensor model based on signed distance fields. Contact constraints are enforced during inference through single-step projection using distance and gradient information from the signed distance field. For online pose estimation, we integrate the inverse model with a particle filter through a proposal scheme that combines generated hypotheses with particles from the prior belief. Our approach is validated in simulated and real-world planar pose estimation settings, without access to visual data or tight initial pose priors. We further evaluate robustness to unmodeled contact and sensor dynamics for pose tracking in a box-pushing scenario. Compared to local sampling baselines, the inverse sensor model improves sampling efficiency and estimation accuracy while preserving multimodal beliefs across objects with varying tactile discriminability.
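
The single-step projection mentioned in the abstract can be illustrated with a generic signed distance field (SDF) projection: a point is moved along the SDF gradient by its signed distance, which lands it on the zero level set when the SDF is exact. The sketch below is not the paper's implementation. It assumes a 2D circle as the object, and the names `circle_sdf`, `circle_sdf_gradient`, and `project_to_surface` are hypothetical.

```python
import math


def circle_sdf(p, center=(0.0, 0.0), radius=1.0):
    """Signed distance to a circle: negative inside, positive outside."""
    return math.hypot(p[0] - center[0], p[1] - center[1]) - radius


def circle_sdf_gradient(p, center=(0.0, 0.0)):
    """Unit-length gradient of the circle SDF (points away from the center)."""
    dx, dy = p[0] - center[0], p[1] - center[1]
    norm = math.hypot(dx, dy)
    if norm == 0.0:
        # The gradient is undefined at the center; pick an arbitrary direction.
        return (1.0, 0.0)
    return (dx / norm, dy / norm)


def project_to_surface(p, sdf, grad):
    """One projection step: p' = p - d(p) * grad d(p)."""
    d = sdf(p)
    g = grad(p)
    return (p[0] - d * g[0], p[1] - d * g[1])


# A hypothetical contact point that violates the contact constraint
# (it floats 1.5 units away from the unit circle).
sample = (1.5, 2.0)
projected = project_to_surface(sample, circle_sdf, circle_sdf_gradient)
```

For an exact SDF one step is enough to reach the surface. With an approximate or discretized SDF the same step only reduces the violation.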

Estimating Scene Flow in Robot Surroundings with Distributed Miniaturized Time-of-Flight Sensors

Apr 03, 2025

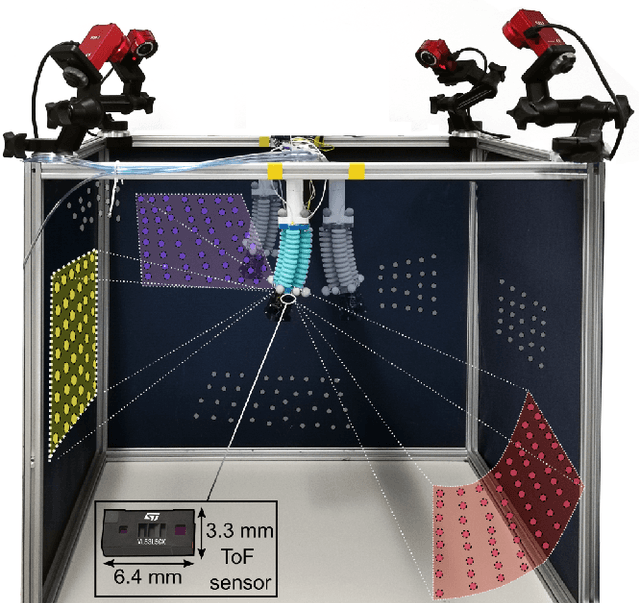

Abstract:Tracking the motion of humans or objects in the robot's surroundings is essential for safer robot motions and reactions. In this work, we present an approach for scene flow estimation from low-density and noisy point clouds acquired from miniaturized Time of Flight (ToF) sensors distributed on the robot body. The proposed method clusters points from consecutive frames and applies Iterative Closest Point (ICP) to estimate a dense motion flow, with additional steps introduced to mitigate the impact of sensor noise and low-density data points. Specifically, we employ a fitness-based classification to distinguish between stationary and moving points and an inlier removal strategy to refine geometric correspondences. The proposed approach is validated in an experimental setup where 24 ToF sensors are used to estimate the velocity of an object moving at different controlled speeds. Experimental results show that the method consistently approximates the direction of the motion and its magnitude with an error in line with sensor noise.
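
One way to read the fitness-based classification is as a check on how well a cluster in the current frame overlaps its counterpart in the previous frame before any motion is applied. The sketch below is an illustrative assumption, not the authors' pipeline. It defines fitness as the fraction of points with a neighbour within `max_dist`, and it replaces the ICP step with a simple centroid difference. The function names, thresholds, and sample data are all hypothetical.

```python
import math


def fitness(source, target, max_dist):
    """Fraction of source points with a target point within max_dist."""
    if not source or not target:
        return 0.0
    matched = sum(
        1 for p in source
        if min(math.dist(p, q) for q in target) <= max_dist
    )
    return matched / len(source)


def centroid(points):
    """Component-wise mean of a list of equally sized tuples."""
    n = len(points)
    return tuple(sum(axis) / n for axis in zip(*points))


def classify_and_estimate(prev_cluster, curr_cluster, dt,
                          max_dist=0.02, fitness_threshold=0.8):
    """Label a cluster as stationary or moving and estimate its velocity.

    The centroid difference stands in for the ICP translation here.
    """
    if fitness(curr_cluster, prev_cluster, max_dist) >= fitness_threshold:
        return "stationary", (0.0, 0.0, 0.0)
    c_prev = centroid(prev_cluster)
    c_curr = centroid(curr_cluster)
    velocity = tuple((b - a) / dt for a, b in zip(c_prev, c_curr))
    return "moving", velocity


# Hypothetical cluster shifted by 5 cm along x between frames 0.1 s apart.
prev_cluster = [(0.0, 0.0, 0.5), (0.1, 0.0, 0.5), (0.0, 0.1, 0.5), (0.1, 0.1, 0.5)]
curr_cluster = [(x + 0.05, y, z) for x, y, z in prev_cluster]
label, velocity = classify_and_estimate(prev_cluster, curr_cluster, dt=0.1)
```

In this example no point has a neighbour within 2 cm, so the cluster is labelled as moving and the velocity estimate is about 0.5 m/s along x.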

Tiny Lidars for Manipulator Self-Awareness: Sensor Characterization and Initial Localization Experiments

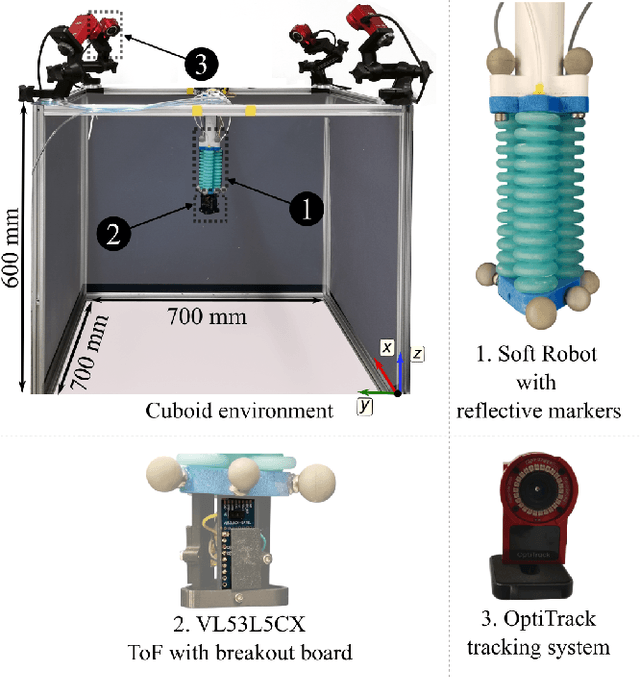

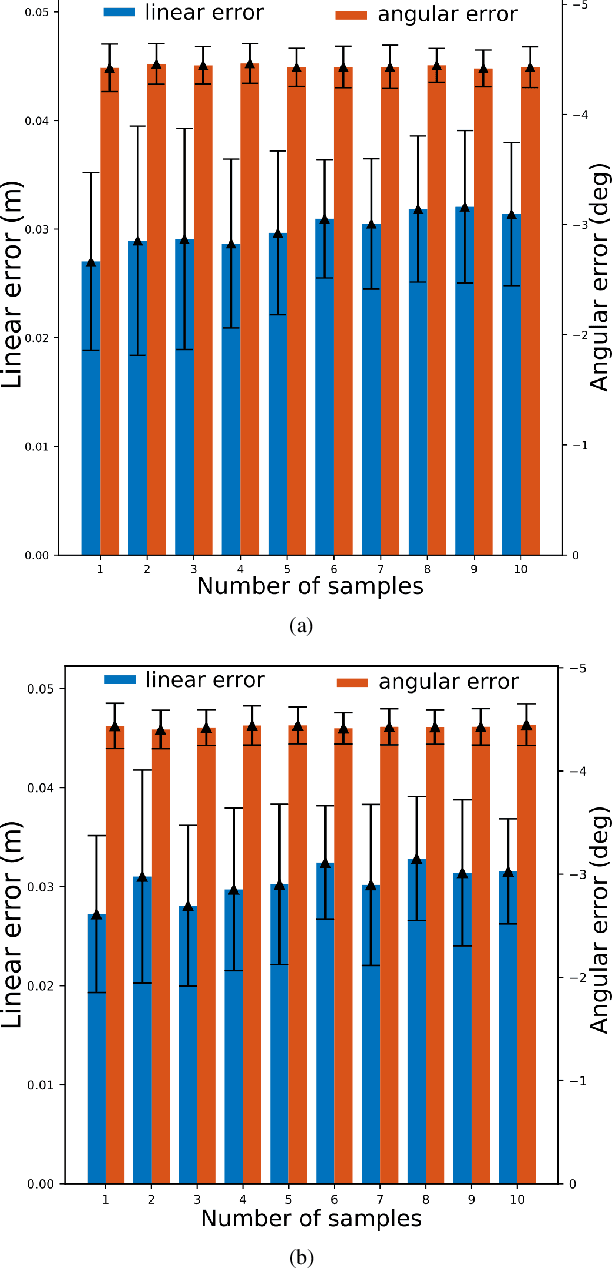

Mar 05, 2025

Abstract:For several tasks, ranging from manipulation to inspection, it is beneficial for robots to localize a target object in their surroundings. In this paper, we propose an approach that utilizes coarse point clouds obtained from miniaturized VL53L5CX Time-of-Flight (ToF) sensors (tiny lidars) to localize a target object in the robot's workspace. We first conduct an experimental campaign to calibrate the dependency of sensor readings on relative range and orientation to targets. We then propose a probabilistic sensor model that is validated in an object pose estimation task using a Particle Filter (PF). The results show that the proposed sensor model improves the performance of the localization of the target object with respect to two baselines: one that assumes measurements are free from uncertainty and one in which the confidence is provided by the sensor datasheet.
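
To show how a probabilistic sensor model enters a particle filter, the sketch below weights 1D particles with a Gaussian likelihood whose standard deviation depends on the expected range. It is a minimal illustration under stated assumptions. The linear noise model in `range_sigma` and its coefficients are invented for the example and are not the calibration reported in the paper, and the target is reduced to a scalar distance from a sensor at the origin.

```python
import math
import random


def range_sigma(expected_range, base=0.005, slope=0.02):
    """Hypothetical noise model: std dev (m) grows linearly with range (m)."""
    return base + slope * expected_range


def gaussian_likelihood(measured, expected, sigma):
    """Probability density of the measurement under a Gaussian model."""
    z = (measured - expected) / sigma
    return math.exp(-0.5 * z * z) / (sigma * math.sqrt(2.0 * math.pi))


def update_weights(particles, weights, measured_range, sensor_position=0.0):
    """Measurement update: reweight each particle, then normalize."""
    new_weights = []
    for x, w in zip(particles, weights):
        expected = abs(x - sensor_position)
        sigma = range_sigma(expected)
        new_weights.append(w * gaussian_likelihood(measured_range, expected, sigma))
    total = sum(new_weights)
    if total == 0.0:
        # All likelihoods underflowed; fall back to a uniform belief.
        return [1.0 / len(particles)] * len(particles)
    return [w / total for w in new_weights]


rng = random.Random(0)
particles = [rng.uniform(0.1, 1.0) for _ in range(500)]  # candidate target ranges
weights = [1.0 / len(particles)] * len(particles)
weights = update_weights(particles, weights, measured_range=0.40)
estimate = sum(x * w for x, w in zip(particles, weights))
```

Setting `slope=0.0` and a fixed `base` would give a constant-confidence model in the spirit of a datasheet baseline, which makes the effect of the range-dependent term easy to compare.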

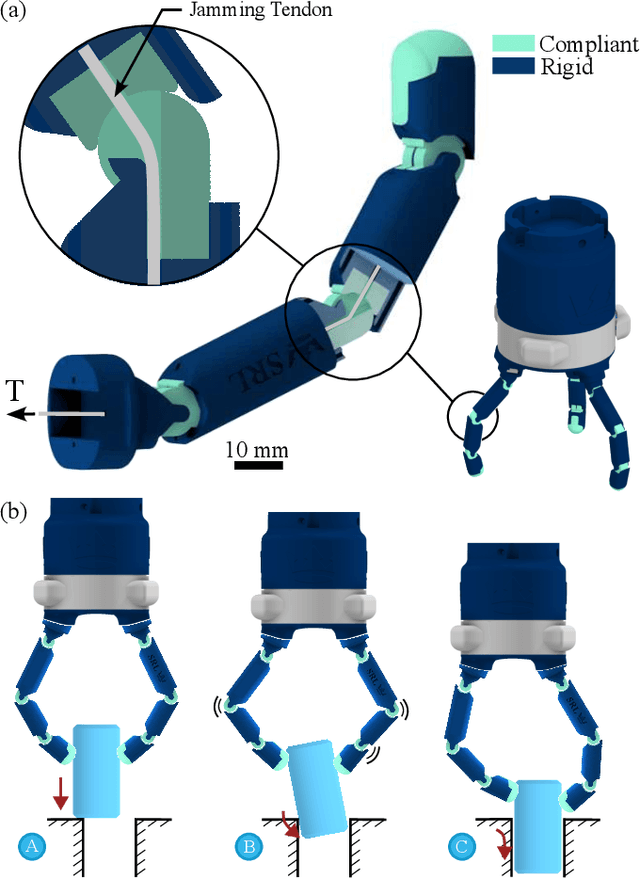

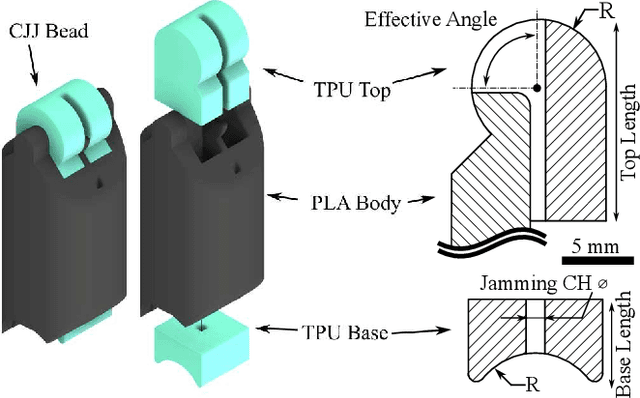

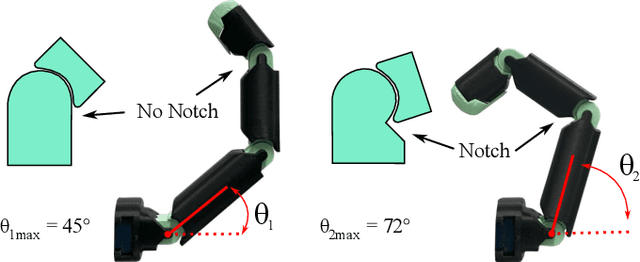

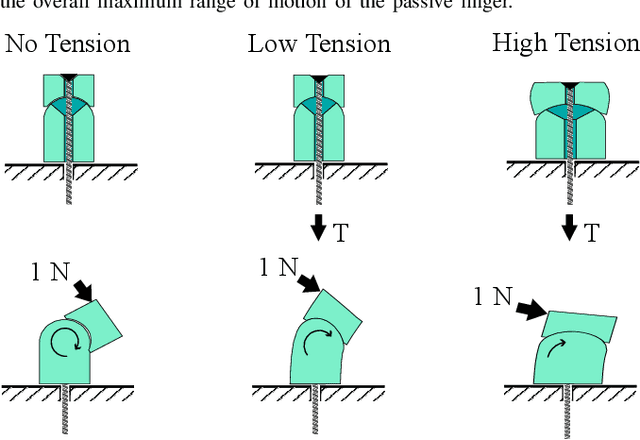

Compliant Beaded-String Jamming For Variable Stiffness Anthropomorphic Fingers

Feb 06, 2025

Abstract:Achieving human-like dexterity in robotic grippers remains an open challenge, particularly in ensuring robust manipulation in uncertain environments. Soft robotic hands try to address this by leveraging passive compliance, a characteristic that is crucial to the adaptability of the human hand, to achieve more robust manipulation while reducing reliance on high-resolution sensing and complex control. Further improvements in terms of precision and postural stability in manipulation tasks are achieved through the integration of variable stiffness mechanisms, but these tend to lack residual compliance, be bulky and have slow response times. To address these limitations, this work introduces a Compliant Joint Jamming mechanism for anthropomorphic fingers that exhibits passive residual compliance and adjustable stiffness, while achieving a range of motion in line with that of human interphalangeal joints. The stiffness range provided by the mechanism is controllable from 0.48 Nm/rad to 1.95 Nm/rad (a 4x increase). Repeatability, hysteresis and stiffness were also characterized as a function of the jamming force. To demonstrate the importance of the passive residual compliance afforded by the proposed system, a peg-in-hole task was conducted, which showed a 60% higher success rate for a gripper integrating our joint design when compared to a rigid one.

JAMMit! Monolithic 3D-Printing of a Bead Jamming Soft Pneumatic Arm

Feb 05, 2025

Abstract:3D-printed bellow soft pneumatic arms are widely adopted for their flexible design, ease of fabrication, and large deformation capabilities. However, their low stiffness limits their real-world applications. Although several methods exist to enhance the stiffness of soft actuators, many involve complex manufacturing processes not in line with modern goals of monolithic and automated additive manufacturing. Bead jamming offers a simple and effective solution to these challenges. This work introduces a method for monolithic printing of a bellow soft pneumatic arm, integrating a tendon-driven central spine of bowl-shaped beads. We experimentally characterized the arm's range of motion in both unjammed and jammed states, as well as its stiffness under various actuation and jamming conditions. As a result, we provide an optimal jamming policy as a trade-off between preserving the range of motion and maximizing stiffness. The proposed design was further demonstrated in a switch-toggling task, showing its potential for practical applications.

Soft Robot Localization Using Distributed Miniaturized Time-of-Flight Sensors

Feb 03, 2025

Abstract:Thanks to their compliance and adaptability, soft robots can be deployed to perform tasks in constrained or complex environments. In these scenarios, spatial awareness of the surroundings and the ability to localize the robot within the environment represent key aspects. While state-of-the-art localization techniques are well-explored in autonomous vehicles and walking robots, they rely on data retrieved with lidar or depth sensors which are bulky and thus difficult to integrate into small soft robots. Recent developments in miniaturized Time of Flight (ToF) sensors show promise as a small and lightweight alternative to bulky sensors. These sensors can potentially be distributed on the soft robot body, providing multi-point depth data of the surroundings. However, the low spatial resolution and the noisy measurements pose a challenge to the success of state-of-the-art localization algorithms, which are generally applied to much denser and more reliable measurements. In this paper, we employ distributed VL53L5CX ToF sensors, mount them on the tip of a soft robot, and investigate their usage for self-localization tasks. Experimental results show that the soft robot can effectively be localized with respect to a known map, with an error comparable to the uncertainty of the measurements provided by the miniaturized ToF sensors.

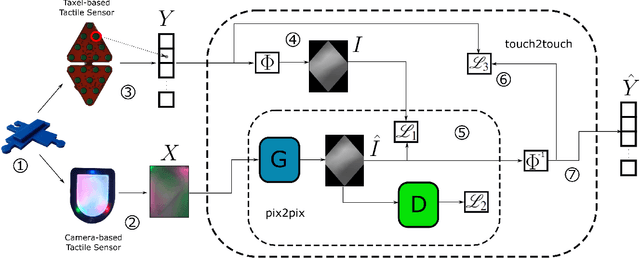

Touch-to-Touch Translation -- Learning the Mapping Between Heterogeneous Tactile Sensing Technologies

Nov 04, 2024

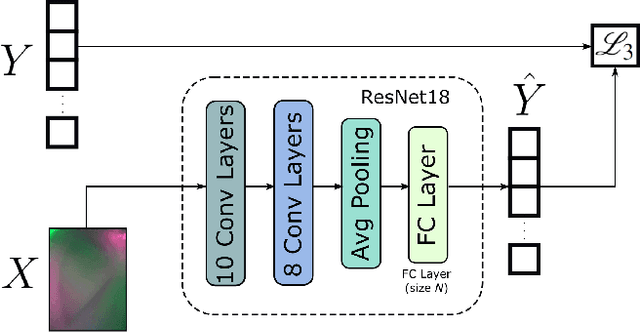

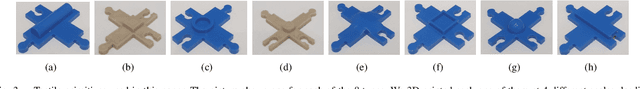

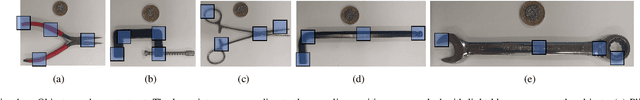

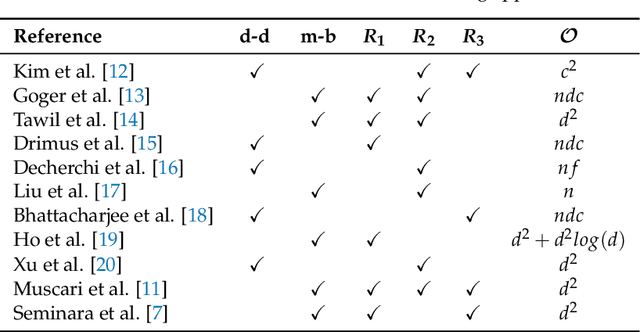

Abstract:The use of data-driven techniques for tactile data processing and classification has recently increased. However, collecting tactile data is a time-consuming and sensor-specific procedure. Indeed, due to the lack of hardware standards in tactile sensing, data must be collected separately for each sensor. This paper considers the problem of learning the mapping between two tactile sensor outputs with respect to the same physical stimulus -- we refer to this problem as touch-to-touch translation. We propose two data-driven approaches to address this task and compare their performance. The first one exploits a generative model developed for image-to-image translation and adapted for this context. The second one uses a ResNet model trained to perform a regression task. We validated both methods using two completely different tactile sensors -- the camera-based Digit and the capacitance-based CySkin. In particular, we used Digit images to generate the corresponding CySkin data. We trained the models on a set of tactile features that can be found in common larger objects and we performed the testing on a previously unseen set of data. Experimental results show the possibility of translating Digit images into the CySkin output by preserving the contact shape and with an error of 15.18% in the magnitude of the sensor responses.

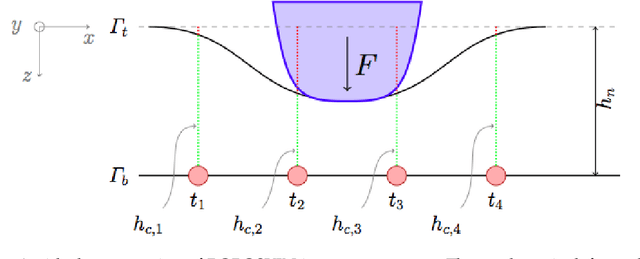

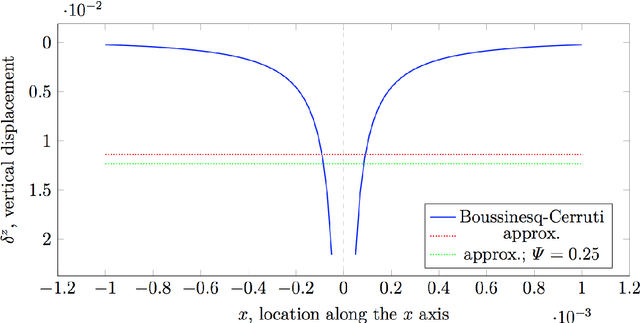

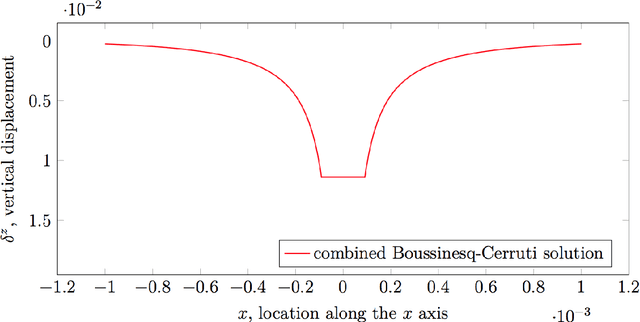

Contact modelling and tactile data processing for robot skin

Sep 21, 2018

Abstract:Tactile sensing is a key enabling technology to develop complex behaviours for robots interacting with humans or the environment. This paper discusses computational aspects playing a significant role when extracting information about contact events. Considering a large-scale, capacitance-based robot skin technology we developed in the past few years, we analyse the classical Boussinesq-Cerruti solution and Love's approach for solving a distributed inverse contact problem, both from a qualitative and a computational perspective. Our contribution is the characterisation of the algorithms' performance using a freely available dataset and data originating from surfaces provided with robot skin.
