Francesco Grella

Generating Whole-Body Avoidance Motion through Localized Proximity Sensing

Dec 05, 2024

Abstract: This paper presents a novel control algorithm for robotic manipulators in unstructured environments using proximity sensors that cover only part of the platform. The proposed approach exploits arrays of multi-zone Time-of-Flight (ToF) sensors to generate a sparse point cloud representation of the robot's surroundings. By employing computational geometry techniques, we fuse the robot's geometric model with ToF sensory feedback to generate whole-body motion tasks, allowing both sensorized and non-sensorized links to move in response to unpredictable events such as human motion. In particular, the proposed algorithm computes the pair of closest points between the environment cloud and the robot links, generating a dynamic avoidance motion that is implemented as the highest-priority task in a two-level hierarchical architecture. This design choice allows the robot to work safely alongside humans even without complete sensorization of its whole surface. Experimental validation demonstrates the algorithm's effectiveness in both static and dynamic scenarios, achieving performance comparable to well-established control techniques that aim to move the sensor mounting positions on the robot body. The presented algorithm exploits any arbitrary point on the robot surface to perform avoidance motion, showing improvements in the distance margin of up to 100 mm, due to the rendering of virtual avoidance tasks on non-sensorized links.
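The two ideas in this abstract, a closest-point search between the environment cloud and the robot links and an avoidance task placed at the top of a two-level hierarchy, can be illustrated with a minimal Python sketch. It is not the authors' implementation. It assumes each link is approximated by a line segment, that the avoidance task is a simple repulsive velocity, and that the hierarchy is resolved with the standard null-space projection. All function names, the safety distance `d_safe`, and the gain are hypothetical.

```python
import numpy as np

def closest_point_on_segment(p, a, b):
    """Return the point on segment ab that is closest to point p."""
    ab = b - a
    denom = float(ab @ ab)
    if denom == 0.0:  # degenerate segment: both endpoints coincide
        return a.copy()
    t = np.clip((p - a) @ ab / denom, 0.0, 1.0)
    return a + t * ab

def closest_pair(cloud, links):
    """Brute-force search for the closest (robot point, cloud point) pair.

    cloud: (N, 3) array of obstacle points (assumed to come from the ToF sensors).
    links: list of (a, b) segment endpoints (assumed simplified link model).
    Returns (distance, link_index, robot_point, obstacle_point).
    """
    best = (np.inf, -1, None, None)
    for i, (a, b) in enumerate(links):
        for p in cloud:
            r = closest_point_on_segment(p, a, b)
            d = float(np.linalg.norm(p - r))
            if d < best[0]:
                best = (d, i, r, p)
    return best

def avoidance_velocity(robot_point, obstacle_point, d_safe=0.3, gain=1.0):
    """Repulsive velocity pushing the robot point away from the obstacle.

    d_safe and gain are illustrative values, not taken from the paper.
    """
    diff = robot_point - obstacle_point
    d = np.linalg.norm(diff)
    if d >= d_safe or d == 0.0:
        return np.zeros(3)
    return gain * (d_safe - d) * diff / d

def prioritized_joint_velocity(J1, v1, J2, v2):
    """Two-level task hierarchy via null-space projection.

    Task 1 (here: avoidance) is satisfied first; task 2 is executed
    only in the null space of task 1.
    """
    J1_pinv = np.linalg.pinv(J1)
    N1 = np.eye(J1.shape[1]) - J1_pinv @ J1  # null-space projector of task 1
    qd1 = J1_pinv @ v1
    return qd1 + N1 @ np.linalg.pinv(J2 @ N1) @ (v2 - J2 @ qd1)
```

Because the closest robot point may lie on any link, the Jacobian of the avoidance task in such a scheme would be evaluated at that point rather than at a sensor mounting position, which matches the abstract's description of rendering avoidance tasks on non-sensorized links.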

Touch-to-Touch Translation -- Learning the Mapping Between Heterogeneous Tactile Sensing Technologies

Nov 04, 2024

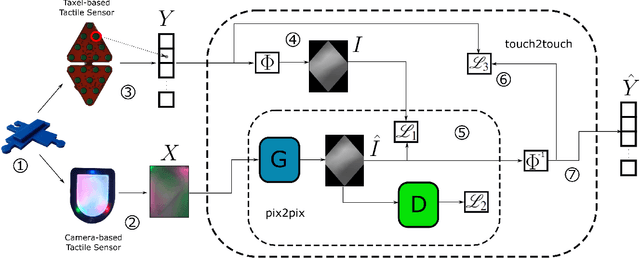

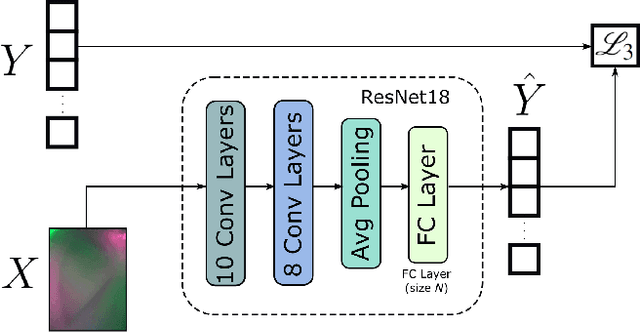

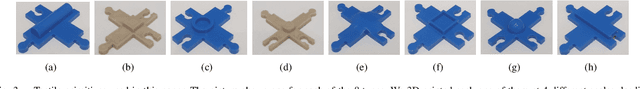

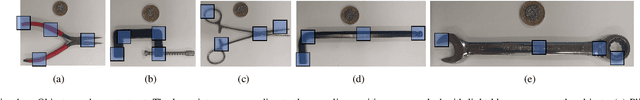

Abstract: The use of data-driven techniques for tactile data processing and classification has recently increased. However, collecting tactile data is a time-consuming and sensor-specific procedure. Indeed, due to the lack of hardware standards in tactile sensing, data must be collected separately for each sensor. This paper considers the problem of learning the mapping between the outputs of two tactile sensors in response to the same physical stimulus; we refer to this problem as touch-to-touch translation. We propose two data-driven approaches to address this task and compare their performance. The first exploits a generative model developed for image-to-image translation and adapted to this context. The second uses a ResNet model trained to perform a regression task. We validated both methods using two completely different tactile sensors: the camera-based Digit and the capacitance-based CySkin. In particular, we used Digit images to generate the corresponding CySkin data. We trained the models on a set of tactile features that can be found in common larger objects, and we tested them on a previously unseen set of data. Experimental results show that Digit images can be translated into the CySkin output while preserving the contact shape, with an error of 15.18% in the magnitude of the sensor responses.
