Alberto Bacchin

DISF: Disentangled Iterative Surface Fitting for Contact-stable Grasp Planning with Grasp Pose Alignment to the Object Center of Mass

Dec 31, 2025

Abstract:In this work, we address a limitation of surface fitting-based grasp planning algorithms, which primarily focus on geometric alignment between the gripper and object surface while overlooking the stability of the contact point distribution, often resulting in unstable grasps due to inadequate contact configurations. To overcome this limitation, we propose a novel surface fitting algorithm that integrates contact stability while preserving geometric compatibility. Inspired by human grasping behavior, our method disentangles the grasp pose optimization into three sequential steps: (1) rotation optimization to align contact normals, (2) translation refinement to improve the alignment between the gripper frame origin and the object Center of Mass (CoM), and (3) gripper aperture adjustment to optimize contact point distribution. We validate our approach in simulation across 15 objects under both Known-shape (with a clean CAD-derived dataset) and Observed-shape (with the YCB object dataset) settings, including cross-platform grasp execution on three robot-gripper platforms. We further validate the method in real-world grasp experiments on a UR3e robot. Overall, DISF reduces CoM misalignment while maintaining geometric compatibility, translating into higher grasp success in both simulation and real-world execution compared to baselines. Additional videos and supplementary results are available on our project page: https://tomoya-yamanokuchi.github.io/disf-ras-project-page/
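As a rough illustration of step (2), the sketch below measures CoM misalignment as the Euclidean distance between the gripper frame origin and the object CoM, then applies one translation step toward the CoM. This is not the DISF implementation: the function names, the `gain` parameter, and the use of a point-cloud centroid as a stand-in for the CoM (which assumes uniform density) are all assumptions made for the example.

```python
import math


def centroid(points):
    """Mean of 3D points. ASSUMPTION: used as a CoM proxy (uniform density)."""
    n = len(points)
    return tuple(sum(p[i] for p in points) / n for i in range(3))


def com_misalignment(gripper_origin, com):
    """Euclidean distance between the gripper frame origin and the CoM."""
    return math.dist(gripper_origin, com)


def translation_step(gripper_origin, com, gain=0.5):
    """Hypothetical refinement step: move the origin a fraction `gain` toward the CoM."""
    return tuple(o + gain * (c - o) for o, c in zip(gripper_origin, com))


# Eight corners of a unit cube as a toy object surface.
cube = [(x, y, z) for x in (0.0, 1.0) for y in (0.0, 1.0) for z in (0.0, 1.0)]
com = centroid(cube)
origin = (0.5, 0.5, 1.5)

before = com_misalignment(origin, com)
after = com_misalignment(translation_step(origin, com), com)
```

In this toy case the misalignment halves after one step with `gain=0.5`; a real planner would also have to keep the gripper surfaces compatible with the object geometry while translating.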

Disentangled Iterative Surface Fitting for Contact-stable Grasp Planning

Feb 17, 2025

Abstract:In this work, we address a limitation of surface fitting-based grasp planning algorithms, which primarily focus on geometric alignment between the gripper and object surface while overlooking the stability of the contact point distribution, often resulting in unstable grasps due to inadequate contact configurations. To overcome this limitation, we propose a novel surface fitting algorithm that integrates contact stability while preserving geometric compatibility. Inspired by human grasping behavior, our method disentangles the grasp pose optimization into three sequential steps: (1) rotation optimization to align contact normals, (2) translation refinement to improve Center of Mass (CoM) alignment, and (3) gripper aperture adjustment to optimize contact point distribution. We validate our approach through simulations on ten YCB dataset objects, demonstrating an 80% improvement in grasp success over conventional surface fitting methods that disregard contact stability. Further details can be found on our project page: https://tomoya-yamanokuchi.github.io/disf-project-page/.

WasteGAN: Data Augmentation for Robotic Waste Sorting through Generative Adversarial Networks

Sep 25, 2024

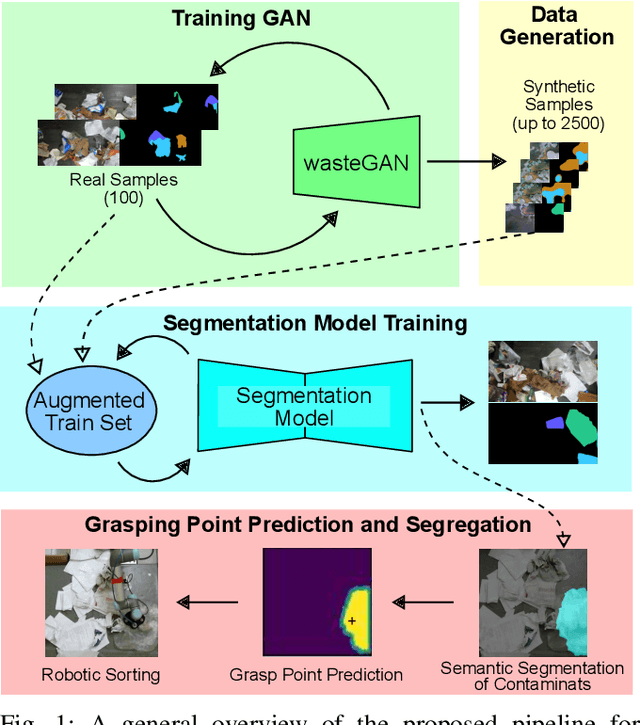

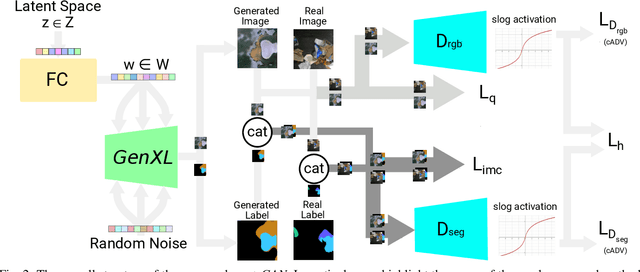

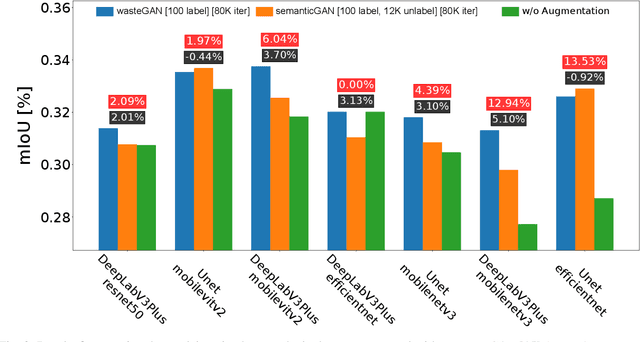

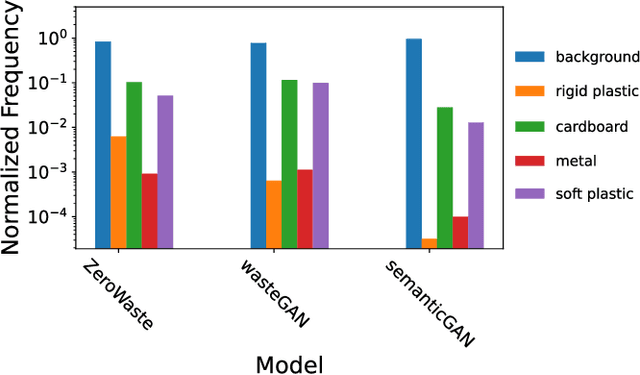

Abstract:Robotic waste sorting poses significant challenges in both perception and manipulation, given the extreme variability of objects that should be recognized on a cluttered conveyor belt. While deep learning has proven effective in solving complex tasks, the necessity for extensive data collection and labeling limits its applicability in real-world scenarios like waste sorting. To tackle this issue, we introduce a data augmentation method based on a novel GAN architecture called wasteGAN. The proposed method improves the performance of semantic segmentation models starting from a very limited set of labeled examples, as few as 100. The key innovations of wasteGAN include a novel loss function, a novel activation function, and a larger generator block. Overall, these innovations help the network learn from a limited number of examples and synthesize data that better mirrors real-world distributions. We then leverage the higher-quality segmentation masks predicted by models trained on the wasteGAN synthetic data to compute semantic-aware grasp poses, enabling a robotic arm to effectively recognize contaminants and separate waste in a real-world scenario. Through comprehensive evaluation encompassing dataset-based assessments and real-world experiments, our methodology demonstrated promising potential for robotic waste sorting, yielding performance gains of up to 5.8% in picking contaminants. The project page is available at https://github.com/bach05/wasteGAN.git

SOOD-ImageNet: a Large-Scale Dataset for Semantic Out-Of-Distribution Image Classification and Semantic Segmentation

Sep 02, 2024

Abstract:Out-of-Distribution (OOD) detection in computer vision is a crucial research area, with related benchmarks playing a vital role in assessing the generalizability of models and their applicability in real-world scenarios. However, existing OOD benchmarks in the literature suffer from two main limitations: (1) they often overlook semantic shift as a potential challenge, and (2) their scale is limited compared to the large datasets used to train modern models. To address these gaps, we introduce SOOD-ImageNet, a novel dataset comprising around 1.6M images across 56 classes, designed for common computer vision tasks such as image classification and semantic segmentation under OOD conditions, with a particular focus on the issue of semantic shift. We ensured the necessary scalability and quality by developing an innovative data engine that leverages the capabilities of modern vision-language models, complemented by accurate human checks. Through extensive training and evaluation of various models on SOOD-ImageNet, we showcase its potential to significantly advance OOD research in computer vision. The project page is available at https://github.com/bach05/SOODImageNet.git.

Show and Grasp: Few-shot Semantic Segmentation for Robot Grasping through Zero-shot Foundation Models

Apr 19, 2024

Abstract:The ability of a robot to pick an object, known as robot grasping, is crucial for several applications, such as assembly or sorting. In such tasks, selecting the right target to pick is as essential as inferring a correct configuration of the gripper. A common solution to this problem relies on semantic segmentation models, which often show poor generalization to unseen objects and require considerable time and massive data to be trained. To reduce the need for large datasets, some grasping pipelines exploit few-shot semantic segmentation models, which are capable of recognizing new classes given a few examples. However, this often comes at the cost of limited performance and fine-tuning is required to be effective in robot grasping scenarios. In this work, we propose to overcome all these limitations by combining the impressive generalization capability reached by foundation models with a high-performing few-shot classifier, working as a score function to select the segmentation that is closer to the support set. The proposed model is designed to be embedded in a grasp synthesis pipeline. The extensive experiments using one or five examples show that our novel approach overcomes existing performance limitations, improving the state of the art both in few-shot semantic segmentation on the Graspnet-1B (+10.5% mIoU) and Ocid-grasp (+1.6% AP) datasets, and real-world few-shot grasp synthesis (+21.7% grasp accuracy). The project page is available at: https://leobarcellona.github.io/showandgrasp.github.io/
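The idea of using a classifier as a score function to pick the segmentation closest to the support set can be sketched as follows. This is only an illustration under stated assumptions: each candidate segmentation and each support example is represented by a hypothetical embedding vector, the support set is summarized by its mean (a prototype), and cosine similarity is the score. The abstract does not specify the actual classifier or features, so none of these choices should be read as the paper's method.

```python
import math


def cosine(a, b):
    """Cosine similarity between two equal-length vectors (0.0 if either is zero)."""
    dot = sum(x * y for x, y in zip(a, b))
    na = math.sqrt(sum(x * x for x in a))
    nb = math.sqrt(sum(y * y for y in b))
    return dot / (na * nb) if na and nb else 0.0


def prototype(support):
    """Mean embedding of the support examples (ASSUMPTION: prototype-style summary)."""
    n = len(support)
    return [sum(v[i] for v in support) / n for i in range(len(support[0]))]


def select_candidate(candidates, support):
    """Return (index, scores): the candidate embedding most similar to the support prototype."""
    proto = prototype(support)
    scores = [cosine(c, proto) for c in candidates]
    best = max(range(len(scores)), key=scores.__getitem__)
    return best, scores


# Toy 2D embeddings: two support examples and three candidate segmentations.
support = [[1.0, 0.0], [0.8, 0.2]]
candidates = [[0.0, 1.0], [1.0, 0.1], [-1.0, 0.0]]
best, scores = select_candidate(candidates, support)
```

With one support example the prototype is that example itself, and with five it is their mean, which loosely mirrors the one-shot and five-shot settings mentioned in the abstract.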

People Tracking in Panoramic Video for Guiding Robots

Jun 06, 2022

Abstract:A guiding robot aims to effectively bring people to and from specific places within environments that are possibly unknown to them. During this operation the robot should be able to detect and track the accompanied person, trying never to lose sight of them. A solution to minimize this event is to use an omnidirectional camera: its 360° Field of View (FoV) guarantees that any framed object cannot leave the FoV if not occluded or very far from the sensor. However, the acquired panoramic videos introduce new challenges in perception tasks such as people detection and tracking, including the large size of the images to be processed, the distortion effects introduced by the cylindrical projection, and the periodic nature of panoramic images. In this paper, we propose a set of targeted methods that effectively adapt a standard people detection and tracking pipeline, originally designed for perspective cameras, to panoramic videos. Our methods have been implemented and tested inside a deep learning-based people detection and tracking framework with a commercial 360° camera. Experiments performed on datasets specifically acquired for guiding robot applications and on a real service robot show the effectiveness of the proposed approach over other state-of-the-art systems. We release with this paper the acquired and annotated datasets and the open-source implementation of our method.
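The periodic nature of panoramic images means that the left and right image borders are adjacent: a person leaving through one side reappears on the other. A tracker that compares positions therefore needs a wrap-around horizontal distance. The sketch below shows one common way to compute it; the function names are hypothetical and this is not taken from the released implementation.

```python
import math


def wrapped_dx(x_from, x_to, width):
    """Signed shortest horizontal displacement on an image that wraps at `width`."""
    half = width / 2.0
    return (x_to - x_from + half) % width - half


def wrapped_distance(p, q, width):
    """Distance between pixel positions p and q, with periodic x and ordinary y."""
    dx = wrapped_dx(p[0], q[0], width)
    dy = q[1] - p[1]
    return math.hypot(dx, dy)


# Two detections near opposite borders of a 1920-pixel-wide panorama.
width = 1920
left = (10, 100)
right = (1910, 100)
naive = abs(right[0] - left[0])                # treats the borders as far apart
periodic = wrapped_distance(left, right, width)  # accounts for the wrap-around
```

Here the naive difference is 1900 pixels while the periodic distance is 20 pixels, which is why association metrics designed for perspective cameras need adapting before they are applied to panoramic frames.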
