Filippo Berno

People Tracking in Panoramic Video for Guiding Robots

Jun 06, 2022

Abstract: A guiding robot aims to effectively bring people to and from specific places within environments that may be unknown to them. During this operation the robot should be able to detect and track the accompanied person, trying never to lose sight of them. One way to minimize such losses is to use an omnidirectional camera: its 360° Field of View (FoV) guarantees that a framed object cannot leave the FoV unless it is occluded or very far from the sensor. However, the acquired panoramic videos introduce new challenges in perception tasks such as people detection and tracking, including the large size of the images to be processed, the distortion effects introduced by the cylindrical projection, and the periodic nature of panoramic images. In this paper, we propose a set of targeted methods that effectively adapt a standard people detection and tracking pipeline, originally designed for perspective cameras, to panoramic videos. Our methods have been implemented and tested inside a deep learning-based people detection and tracking framework with a commercial 360° camera. Experiments performed on datasets specifically acquired for guiding robot applications and on a real service robot show the effectiveness of the proposed approach over other state-of-the-art systems. We release with this paper the acquired and annotated datasets and the open-source implementation of our method.
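To illustrate what the "periodic nature of panoramic images" means for a tracker, here is a minimal sketch in Python. It is not taken from the paper's released implementation: the function names `wrapped_dx` and `wrapped_overlap_1d` are hypothetical, and the sketch only assumes that the left and right image borders are adjacent, so horizontal distances and overlaps must be computed modulo the image width.

```python
def wrapped_dx(x1: float, x2: float, width: float) -> float:
    """Shortest horizontal distance between two columns of a 360° image.

    Hypothetical helper: columns 0 and `width` are treated as the same column.
    """
    d = abs(x1 - x2) % width
    return min(d, width - d)


def wrapped_overlap_1d(a_start: float, a_len: float,
                       b_start: float, b_len: float,
                       width: float) -> float:
    """Horizontal overlap of two box extents that may cross the image seam.

    Assumes both starts lie in [0, width) and both lengths are below width / 2,
    so at most one shifted copy of box b can overlap box a.
    """
    a_end = a_start + a_len
    best = 0.0
    for shift in (-width, 0.0, width):
        lo = max(a_start, b_start + shift)
        hi = min(a_end, b_start + b_len + shift)
        best = max(best, hi - lo)
    return max(best, 0.0)
```

With a 1920-pixel-wide panorama, a person centered at column 10 and another at column 1910 are 20 pixels apart rather than 1900, which is the kind of case a perspective-camera association step would get wrong.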

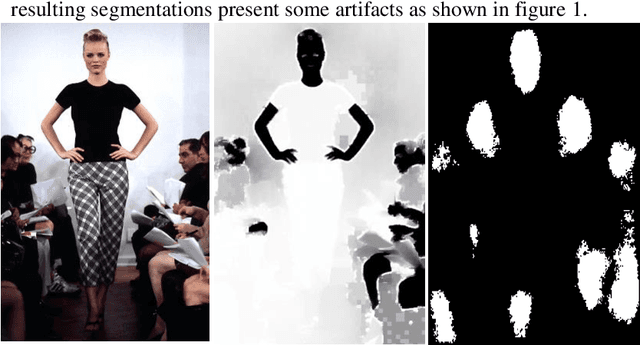

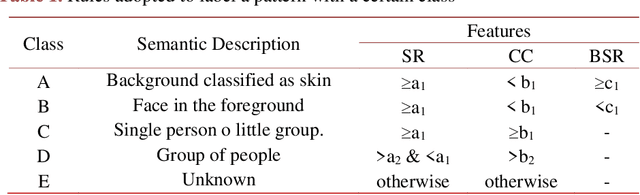

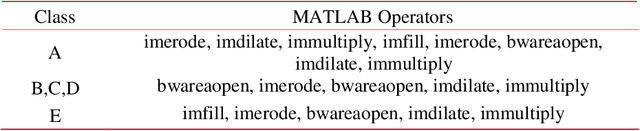

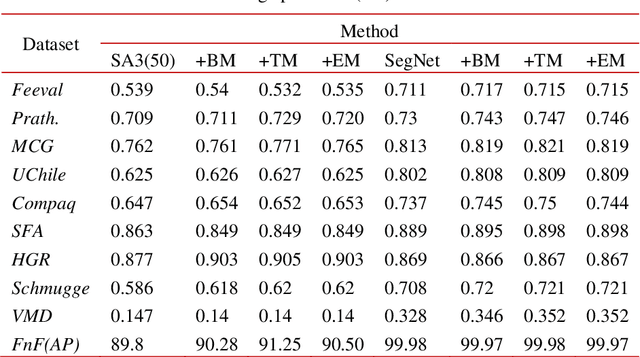

Learning morphological operators for skin detection

Aug 09, 2019

Abstract: In this work we propose a novel post-processing approach for skin detectors based on trained morphological operators. In the first step, skin segmentation is performed with an existing skin detection approach; in the second step, a set of morphological operators is applied to refine the resulting mask. Extensive experimental evaluation with two different detection approaches (one based on deep learning and one handcrafted), carried out on 10 different datasets, confirms the quality of the proposed method.
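As a rough illustration of mask refinement with morphological operators, the sketch below applies a fixed opening and closing with a 3x3 square structuring element to a binary mask. This is an assumption-laden example, not the paper's method: the paper learns which operators to apply, whereas the sequence here is hand-picked, and the helper names (`erode`, `dilate`, `opening`, `closing`) are hypothetical.

```python
from typing import List

Mask = List[List[int]]  # binary mask: 1 = skin, 0 = background


def _morph(mask: Mask, use_min: bool) -> Mask:
    """Apply a 3x3 min (erosion) or max (dilation) filter.

    Windows are clipped at the image border, so out-of-bounds pixels are ignored.
    """
    h, w = len(mask), len(mask[0])
    out = [[0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            vals = [mask[j][i]
                    for j in range(max(0, y - 1), min(h, y + 2))
                    for i in range(max(0, x - 1), min(w, x + 2))]
            out[y][x] = min(vals) if use_min else max(vals)
    return out


def erode(mask: Mask) -> Mask:
    return _morph(mask, use_min=True)


def dilate(mask: Mask) -> Mask:
    return _morph(mask, use_min=False)


def opening(mask: Mask) -> Mask:
    """Erosion then dilation: removes small isolated false positives."""
    return dilate(erode(mask))


def closing(mask: Mask) -> Mask:
    """Dilation then erosion: fills small holes inside skin regions."""
    return erode(dilate(mask))
```

Opening removes a single isolated foreground pixel, while closing fills a single-pixel hole inside a solid region; a trained approach would instead select operators and structuring elements from data.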
