Alan Whone

Skeleton-Snippet Contrastive Learning with Multiscale Feature Fusion for Action Localization

Dec 22, 2025

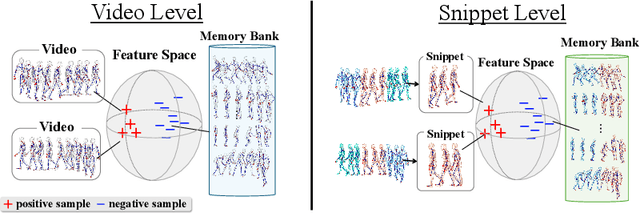

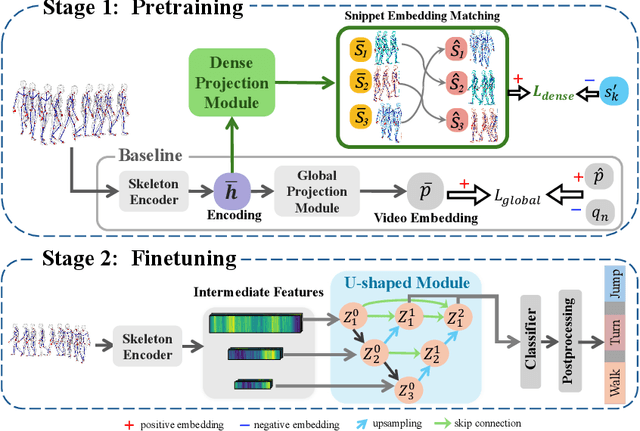

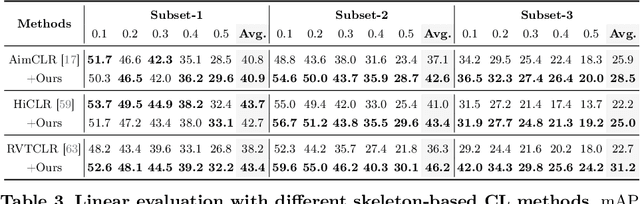

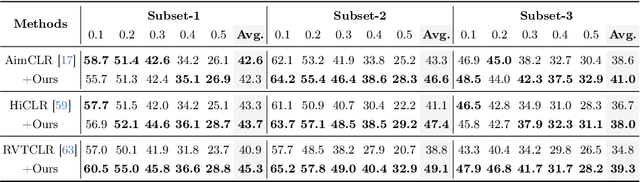

Abstract: The self-supervised pretraining paradigm has achieved great success in learning 3D action representations for skeleton-based action recognition using contrastive learning. However, learning effective representations for skeleton-based temporal action localization remains challenging and underexplored. Unlike video-level action recognition, detecting action boundaries requires temporally sensitive features that capture subtle differences between adjacent frames where labels change. To this end, we formulate a snippet discrimination pretext task for self-supervised pretraining, which densely projects skeleton sequences into non-overlapping segments and promotes features that distinguish them across videos via contrastive learning. Additionally, we build on strong backbones of skeleton-based action recognition models by fusing intermediate features with a U-shaped module to enhance feature resolution for frame-level localization. Our approach consistently improves existing skeleton-based contrastive learning methods for action localization on BABEL across diverse subsets and evaluation protocols. We also achieve state-of-the-art transfer learning performance on PKUMMD with pretraining on NTU RGB+D and BABEL.
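To make the snippet discrimination idea concrete, here is a minimal NumPy sketch, not the paper's implementation. It assumes a skeleton sequence is a `(T, D)` array, uses mean pooling as a stand-in for a learned encoder, and uses the standard InfoNCE loss as the contrastive objective. The names `split_into_snippets`, `encode`, and `info_nce` are hypothetical, and the abstract does not specify the loss, encoder, or augmentations used.

```python
import numpy as np

def split_into_snippets(seq, snippet_len):
    """Split a (T, D) sequence into non-overlapping snippets of shape
    (N, snippet_len, D); trailing frames that do not fill a snippet are dropped."""
    n = seq.shape[0] // snippet_len
    return seq[: n * snippet_len].reshape(n, snippet_len, seq.shape[1])

def encode(snippets):
    """Placeholder encoder (assumption): mean-pool each snippet over time."""
    return snippets.mean(axis=1)

def info_nce(query, key, temperature=0.1):
    """InfoNCE loss where query[i] and key[i] are two views of the same snippet
    and every other key in the batch acts as a negative."""
    q = query / np.linalg.norm(query, axis=1, keepdims=True)
    k = key / np.linalg.norm(key, axis=1, keepdims=True)
    logits = q @ k.T / temperature
    logits = logits - logits.max(axis=1, keepdims=True)  # numerical stability
    log_prob = logits - np.log(np.exp(logits).sum(axis=1, keepdims=True))
    return float(-np.mean(np.diag(log_prob)))

# Usage: two noisy views of the same sequence yield matching snippet pairs.
rng = np.random.default_rng(0)
seq = rng.normal(size=(64, 75))  # e.g. 64 frames, 25 joints x 3 coordinates
view_a = encode(split_into_snippets(seq + 0.01 * rng.normal(size=seq.shape), 8))
view_b = encode(split_into_snippets(seq + 0.01 * rng.normal(size=seq.shape), 8))
loss = info_nce(view_a, view_b)
```

The loss is low when each snippet embedding is closest to its own second view and high when it is confused with other snippets, which is the property that encourages temporally discriminative features.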

CARE-PD: A Multi-Site Anonymized Clinical Dataset for Parkinson's Disease Gait Assessment

Oct 05, 2025

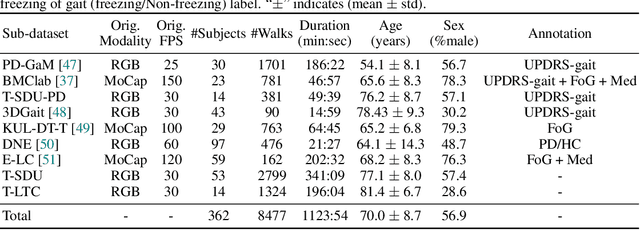

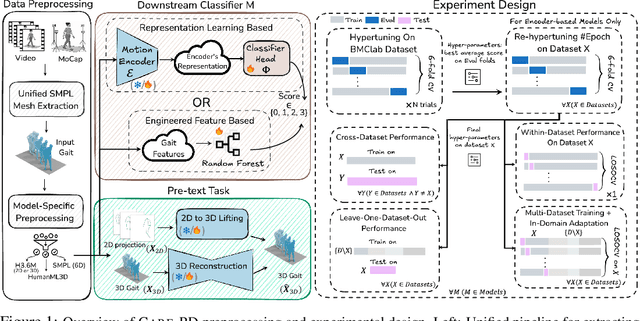

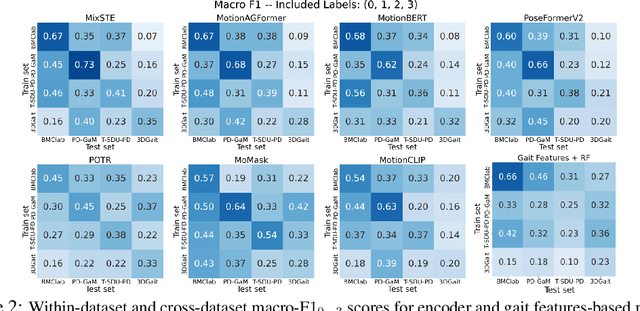

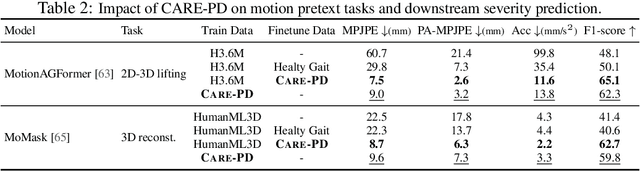

Abstract: Objective gait assessment in Parkinson's Disease (PD) is limited by the absence of large, diverse, and clinically annotated motion datasets. We introduce CARE-PD, the largest publicly available archive of 3D mesh gait data for PD, and the first multi-site collection spanning 9 cohorts from 8 clinical centers. All recordings (RGB video or motion capture) are converted into anonymized SMPL meshes via a harmonized preprocessing pipeline. CARE-PD supports two key benchmarks: supervised clinical score prediction (estimating Unified Parkinson's Disease Rating Scale, UPDRS, gait scores) and unsupervised motion pretext tasks (2D-to-3D keypoint lifting and full-body 3D reconstruction). Clinical prediction is evaluated under four generalization protocols: within-dataset, cross-dataset, leave-one-dataset-out, and multi-dataset in-domain adaptation. To assess clinical relevance, we compare state-of-the-art motion encoders with a traditional gait-feature baseline, finding that encoders consistently outperform handcrafted features. Pretraining on CARE-PD reduces MPJPE (from 60.8 mm to 7.5 mm) and boosts PD severity macro-F1 by 17 percentage points, underscoring the value of clinically curated, diverse training data. CARE-PD and all benchmark code are released for non-commercial research at https://neurips2025.care-pd.ca/.
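For readers unfamiliar with the metric, MPJPE (mean per-joint position error) is the Euclidean distance between predicted and ground-truth 3D joint positions, averaged over all joints and frames. The sketch below assumes both inputs are `(frames, joints, 3)` arrays in millimetres; the function name `mpjpe` is illustrative and not taken from the CARE-PD code.

```python
import numpy as np

def mpjpe(pred, gt):
    """Mean per-joint position error: average Euclidean distance between
    predicted and ground-truth joints, in the units of the inputs."""
    pred = np.asarray(pred, dtype=float)
    gt = np.asarray(gt, dtype=float)
    return float(np.mean(np.linalg.norm(pred - gt, axis=-1)))

# Usage: every joint is displaced by (3, 4, 0) mm, so each error is 5 mm.
gt = np.zeros((2, 17, 3))
pred = gt + np.array([3.0, 4.0, 0.0])
error_mm = mpjpe(pred, gt)
```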

Trajectory-guided Motion Perception for Facial Expression Quality Assessment in Neurological Disorders

Apr 16, 2025

Abstract: Automated facial expression quality assessment (FEQA) in neurological disorders is critical for enhancing diagnostic accuracy and improving patient care, yet effectively capturing the subtle motions and nuances of facial muscle movements remains a challenge. We propose to analyse facial landmark trajectories, a compact yet informative representation that encodes these subtle motions from a high-level structural perspective. Hence, we introduce Trajectory-guided Motion Perception Transformer (TraMP-Former), a novel FEQA framework that fuses landmark trajectory features for fine-grained motion capture with visual semantic cues from RGB frames, ultimately regressing the combined features into a quality score. Extensive experiments demonstrate that TraMP-Former achieves new state-of-the-art performance on benchmark datasets with neurological disorders, including PFED5 (up by 6.51%) and an augmented Toronto NeuroFace (up by 7.62%). Our ablation studies further validate the efficiency and effectiveness of landmark trajectories in FEQA. Our code is available at https://github.com/shuchaoduan/TraMP-Former.

GAITGen: Disentangled Motion-Pathology Impaired Gait Generative Model -- Bringing Motion Generation to the Clinical Domain

Mar 28, 2025

Abstract: Gait analysis is crucial for the diagnosis and monitoring of movement disorders like Parkinson's Disease. While computer vision models have shown potential for objectively evaluating parkinsonian gait, their effectiveness is limited by scarce clinical datasets and the challenge of collecting large and well-labelled data, impacting model accuracy and risk of bias. To address these gaps, we propose GAITGen, a novel framework that generates realistic gait sequences conditioned on specified pathology severity levels. GAITGen employs a Conditional Residual Vector Quantized Variational Autoencoder to learn disentangled representations of motion dynamics and pathology-specific factors, coupled with Mask and Residual Transformers for conditioned sequence generation. GAITGen generates realistic, diverse gait sequences across severity levels, enriching datasets and enabling large-scale model training in parkinsonian gait analysis. Experiments on our new PD-GaM (real) dataset demonstrate that GAITGen outperforms adapted state-of-the-art models in both reconstruction fidelity and generation quality, accurately capturing critical pathology-specific gait features. A clinical user study confirms the realism and clinical relevance of our generated sequences. Moreover, incorporating GAITGen-generated data into downstream tasks improves parkinsonian gait severity estimation, highlighting its potential for advancing clinical gait analysis.

Your Turn: Real-World Turning Angle Estimation for Parkinson's Disease Severity Assessment

Aug 15, 2024

Abstract: People with Parkinson's Disease (PD) often experience progressively worsening gait, including changes in how they turn around, as the disease progresses. Existing clinical rating tools are not capable of capturing hour-by-hour variations of PD symptoms, as they are confined to brief assessments within clinic settings. Measuring real-world gait turning angles continuously and passively is a component step towards using gait characteristics as sensitive indicators of disease progression in PD. This paper presents a deep learning-based approach to automatically quantify turning angles by extracting 3D skeletons from videos and calculating the rotation of hip and knee joints. We utilise state-of-the-art human pose estimation models, Fastpose and Strided Transformer, on a total of 1386 turning video clips from 24 subjects (12 people with PD and 12 healthy control volunteers), trimmed from a PD dataset of unscripted free-living videos in a home-like setting (Turn-REMAP). We also curate a turning video dataset, Turn-H3.6M, from the public Human3.6M human pose benchmark with 3D ground truth, to further validate our method. Previous gait research has primarily taken place in clinics or laboratories evaluating scripted gait outcomes, but this work focuses on real-world settings where complexities exist, such as baggy clothing and poor lighting. Due to difficulties in obtaining accurate ground truth data in a free-living setting, we quantise the angle into the nearest $45^\circ$ bin based on the manual labelling of expert clinicians. Our method achieves a turning calculation accuracy of 41.6%, a Mean Absolute Error (MAE) of 34.7$^\circ$, and a weighted precision WPrec of 68.3% for Turn-REMAP. This is the first work to explore the use of single monocular camera data to quantify turns by PD patients in a home setting.
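As a rough illustration of the pipeline described above, the sketch below estimates a turning angle from the rotation of the hip line in a 3D skeleton and then quantises it to the nearest 45-degree bin. It is a simplified stand-in and not the authors' method: it uses only the hips (the paper also uses the knees), it assumes the x and z axes span the ground plane, and the function names are hypothetical.

```python
import numpy as np

def turning_angle_deg(left_hip, right_hip):
    """Estimate the total turn in degrees from (T, 3) hip trajectories.
    Assumption: x and z span the ground plane, y points up."""
    v = np.asarray(right_hip, dtype=float) - np.asarray(left_hip, dtype=float)
    yaw = np.unwrap(np.arctan2(v[:, 2], v[:, 0]))  # per-frame hip heading
    return float(np.degrees(abs(yaw[-1] - yaw[0])))

def quantise_to_bin(angle_deg, bin_size=45.0):
    """Snap an angle to the nearest multiple of bin_size (np.round sends
    exact half-way cases to the nearest even multiple)."""
    return float(np.round(angle_deg / bin_size) * bin_size)

def mean_absolute_error(pred_deg, true_deg):
    """Mean absolute difference between predicted and labelled angles."""
    return float(np.mean(np.abs(np.asarray(pred_deg) - np.asarray(true_deg))))

# Usage: a synthetic skeleton whose hip line rotates by 100 degrees.
theta = np.linspace(0.0, np.radians(100.0), 50)
right = 0.1 * np.stack([np.cos(theta), np.zeros_like(theta), np.sin(theta)], axis=1)
left = -right
angle = turning_angle_deg(left, right)
binned = quantise_to_bin(angle)
```

Unwrapping the heading before differencing keeps turns larger than 180 degrees from being folded back, provided the frame-to-frame rotation stays small.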

QAFE-Net: Quality Assessment of Facial Expressions with Landmark Heatmaps

Dec 01, 2023

Abstract: Facial expression recognition (FER) methods have made great inroads in categorising moods and feelings in humans. Beyond FER, pain estimation methods assess levels of intensity in pain expressions; however, assessing the quality of all facial expressions is of critical value in health-related applications. In this work, we address the quality of five different facial expressions in patients affected by Parkinson's disease. We propose a novel landmark-guided approach, QAFE-Net, that combines temporal landmark heatmaps with RGB data to capture small facial muscle movements that are encoded and mapped to severity scores. The proposed approach is evaluated on a new Parkinson's Disease Facial Expression dataset (PFED5), as well as on the pain estimation benchmark, the UNBC-McMaster Shoulder Pain Expression Archive Database. Our comparative experiments demonstrate that the proposed method outperforms SOTA action quality assessment works on PFED5 and achieves lower mean absolute error than the SOTA pain estimation methods on UNBC-McMaster. Our code and the new PFED5 dataset are available at https://github.com/shuchaoduan/QAFE-Net.

PECoP: Parameter Efficient Continual Pretraining for Action Quality Assessment

Nov 11, 2023

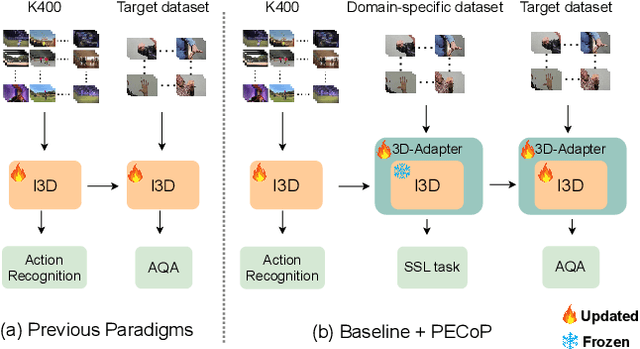

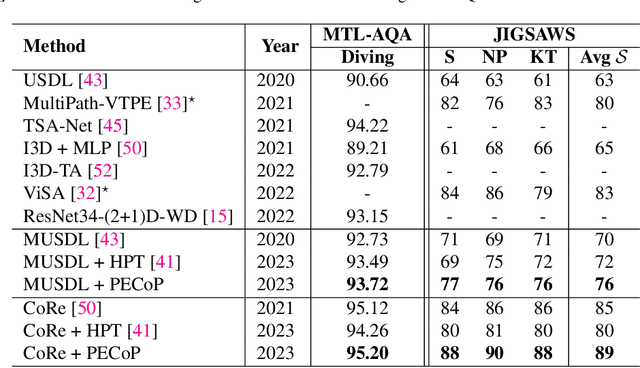

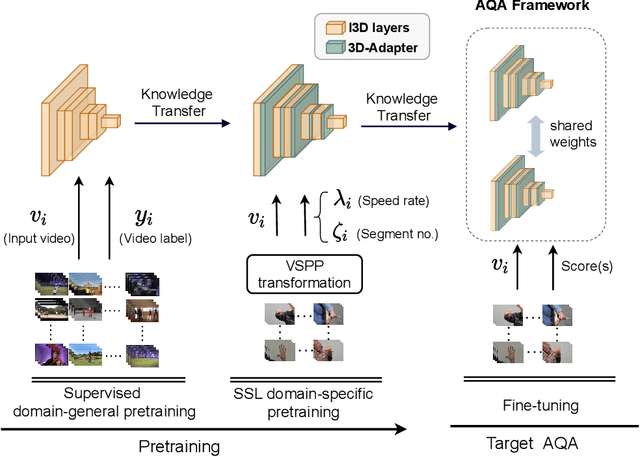

Abstract: The limited availability of labelled data in Action Quality Assessment (AQA) has forced previous works to fine-tune their models pretrained on large-scale domain-general datasets. This common approach results in weak generalisation, particularly when there is a significant domain shift. We propose a novel, parameter efficient, continual pretraining framework, PECoP, to reduce such domain shift via an additional pretraining stage. In PECoP, we introduce 3D-Adapters, inserted into the pretrained model, to learn spatiotemporal, in-domain information via self-supervised learning where only the adapter modules' parameters are updated. We demonstrate PECoP's ability to enhance the performance of recent state-of-the-art methods (MUSDL, CoRe, and TSA) applied to AQA, leading to considerable improvements on benchmark datasets, JIGSAWS ($\uparrow6.0\%$), MTL-AQA ($\uparrow0.99\%$), and FineDiving ($\uparrow2.54\%$). We also present a new Parkinson's Disease dataset, PD4T, of real patients performing four different actions, where we surpass ($\uparrow3.56\%$) the state-of-the-art in comparison. Our code, pretrained models, and the PD4T dataset are available at https://github.com/Plrbear/PECoP.

Multimodal Indoor Localisation in Parkinson's Disease for Detecting Medication Use: Observational Pilot Study in a Free-Living Setting

Aug 03, 2023

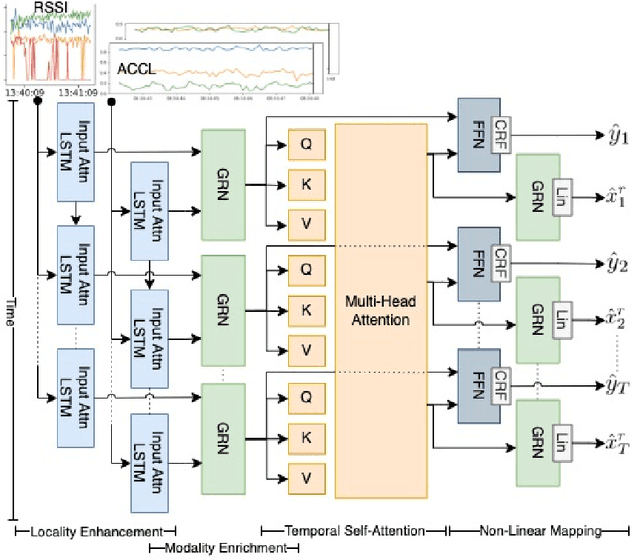

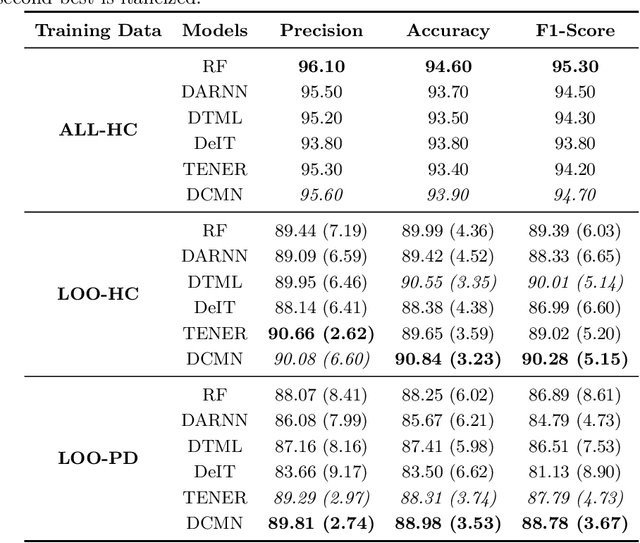

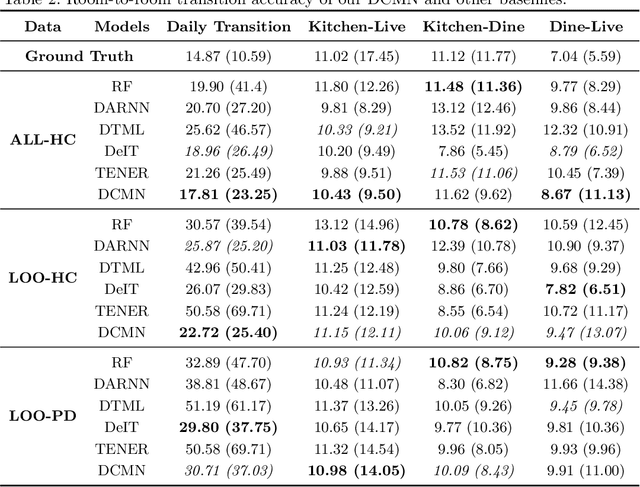

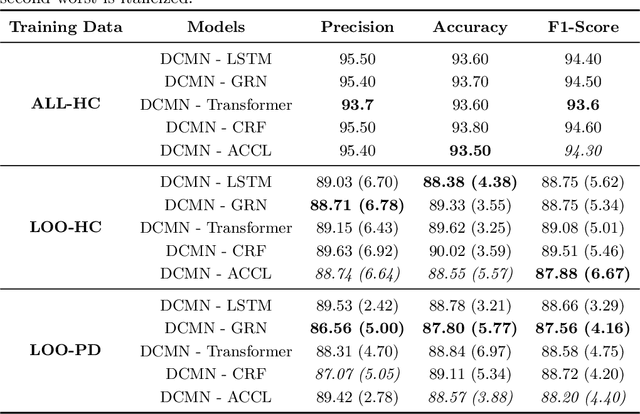

Abstract: Parkinson's disease (PD) is a slowly progressive, debilitating neurodegenerative disease which causes motor symptoms including gait dysfunction. Motor fluctuations are alterations between periods with a positive response to levodopa therapy ("on") and periods marked by re-emergence of PD symptoms ("off") as the response to medication wears off. These fluctuations often affect gait speed and they increase in their disabling impact as PD progresses. To improve the effectiveness of current indoor localisation methods, a transformer-based approach utilising dual modalities which provide complementary views of movement, Received Signal Strength Indicator (RSSI) and accelerometer data from wearable devices, is proposed. A sub-objective aims to evaluate whether indoor localisation, including its in-home gait speed features (i.e. the time taken to walk between rooms), could be used to evaluate motor fluctuations by detecting whether the person with PD is taking levodopa medications or withholding them. To properly evaluate our proposed method, we use a free-living dataset where the movements and mobility are greatly varied and unstructured as expected in real-world conditions. 24 participants lived in pairs (consisting of one person with PD, one control) for five days in a smart home with various sensors. Our evaluation on the resulting dataset demonstrates that our proposed network outperforms other methods for indoor localisation. The sub-objective evaluation shows that precise room-level localisation predictions, transformed into in-home gait speed features, produce accurate predictions on whether the PD participant is taking or withholding their medications.

* 11 pages, 4 figures, 4 tables

A Time Series Approach to Parkinson's Disease Classification from EEG

Jan 20, 2023

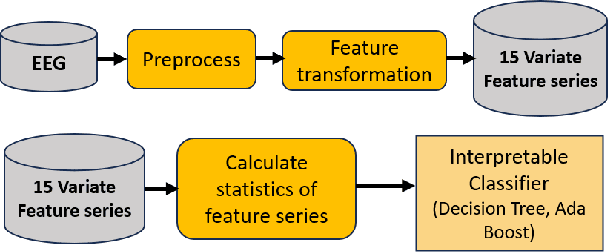

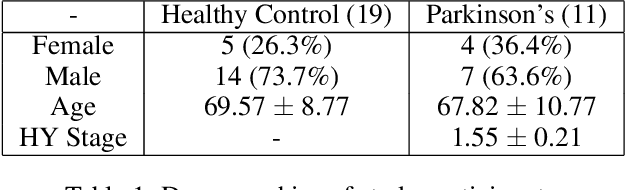

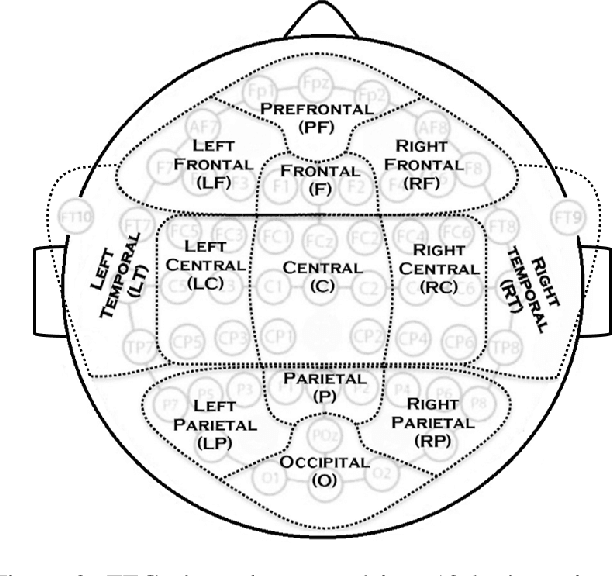

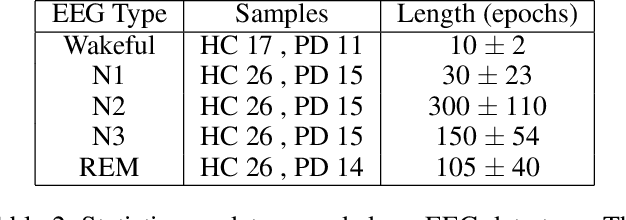

Abstract: Firstly, we present a novel representation for EEG data, a 7-variate series of band power coefficients, which enables the use of (previously inaccessible) time series classification methods. Specifically, we implement the multi-resolution representation-based time series classification method MrSQL. This is deployed on a challenging early-stage Parkinson's dataset that includes wakeful and sleep EEG. Initial results are promising with over 90% accuracy achieved on all EEG data types used. Secondly, we present a framework that enables high-importance data types and brain regions for classification to be identified. Using our framework, we find that, across different EEG data types, it is the Prefrontal brain region that has the most predictive power for the presence of Parkinson's Disease. This outperformance was statistically significant versus ten of the twelve other brain regions (not significant versus adjacent Left Frontal and Right Frontal regions). The Prefrontal region of the brain is important for higher-order cognitive processes and our results align with studies that have shown neural dysfunction in the prefrontal cortex in Parkinson's Disease.
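The idea of turning raw EEG into a multivariate series of band power coefficients can be sketched as follows. This is an assumption-laden illustration rather than the paper's procedure: the abstract does not list its seven bands, so the four band edges below are common textbook ranges chosen for the example, and the windowing (non-overlapping 2-second windows with a plain FFT periodogram) is likewise hypothetical.

```python
import numpy as np

# Illustrative band edges in Hz (assumption; the paper uses seven bands).
BANDS = {
    "delta": (0.5, 4.0),
    "theta": (4.0, 8.0),
    "alpha": (8.0, 13.0),
    "beta": (13.0, 30.0),
}

def band_powers(window, fs, bands=BANDS):
    """Sum the periodogram of one window within each frequency band."""
    freqs = np.fft.rfftfreq(window.size, d=1.0 / fs)
    psd = np.abs(np.fft.rfft(window)) ** 2 / window.size
    return np.array([psd[(freqs >= lo) & (freqs < hi)].sum()
                     for lo, hi in bands.values()])

def band_power_series(signal, fs, win_sec=2.0, bands=BANDS):
    """Convert a 1D signal into a (n_windows, n_bands) series using
    non-overlapping windows; leftover samples at the end are dropped."""
    win = int(win_sec * fs)
    n = signal.size // win
    return np.stack([band_powers(signal[i * win:(i + 1) * win], fs, bands)
                     for i in range(n)])

# Usage: a pure 10 Hz sinusoid should be dominated by the alpha band.
fs = 128
t = np.arange(8 * fs) / fs
series = band_power_series(np.sin(2 * np.pi * 10.0 * t), fs)
```

Each row of `series` is one time step of the multivariate series, which is the form that general time series classifiers expect.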

Multimodal Indoor Localisation for Measuring Mobility in Parkinson's Disease using Transformers

May 12, 2022

Abstract: Parkinson's disease (PD) is a slowly progressive debilitating neurodegenerative disease which is prominently characterised by motor symptoms. Indoor localisation, including number and speed of room to room transitions, provides a proxy outcome which represents mobility and could be used as a digital biomarker to quantify how mobility changes as this disease progresses. We use data collected from 10 people with Parkinson's, and 10 controls, each of whom lived for five days in a smart home with various sensors. In order to more effectively localise them indoors, we propose a transformer-based approach utilizing two data modalities, Received Signal Strength Indicator (RSSI) and accelerometer data from wearable devices, which provide complementary views of movement. Our approach makes asymmetric and dynamic correlations by a) learning temporal correlations at different scales and levels, and b) utilizing various gating mechanisms to select relevant features within modality and suppress unnecessary modalities. On a dataset with real patients, we demonstrate that our proposed method gives an average accuracy of 89.9%, outperforming competitors. We also show that our model is able to better predict in-home mobility for people with Parkinson's with an average offset of 1.13 seconds to ground truth.
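To show how room-level predictions could be turned into a simple mobility measure, the sketch below extracts room-to-room transitions from a sequence of timestamped room labels. It is only an illustration of the general idea: the function name, the label format, and the use of a label change as the transition event are assumptions, and the paper's own definition of transition speed is not given in the abstract.

```python
def room_transitions(timestamps, rooms):
    """Return a list of (time, from_room, to_room) tuples, one for each
    point where the predicted room label changes."""
    transitions = []
    for i in range(1, len(rooms)):
        if rooms[i] != rooms[i - 1]:
            transitions.append((timestamps[i], rooms[i - 1], rooms[i]))
    return transitions

# Usage: one prediction per second over five seconds.
times = [0, 1, 2, 3, 4]
labels = ["kitchen", "kitchen", "hall", "hall", "lounge"]
events = room_transitions(times, labels)
n_transitions = len(events)
```

Counting these events per day gives the number of transitions, and the gaps between consecutive events give a crude timing signal that a downstream model could use.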
