Exploring Motion Boundaries in an End-to-End Network for Vision-based Parkinson's Severity Assessment

Dec 24, 2020

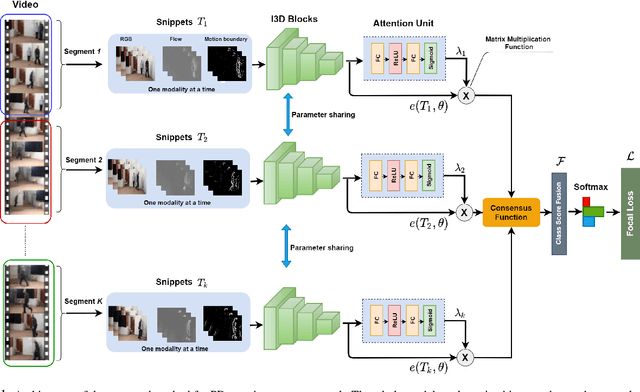

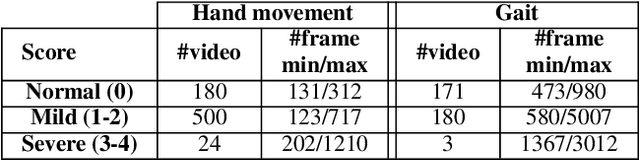

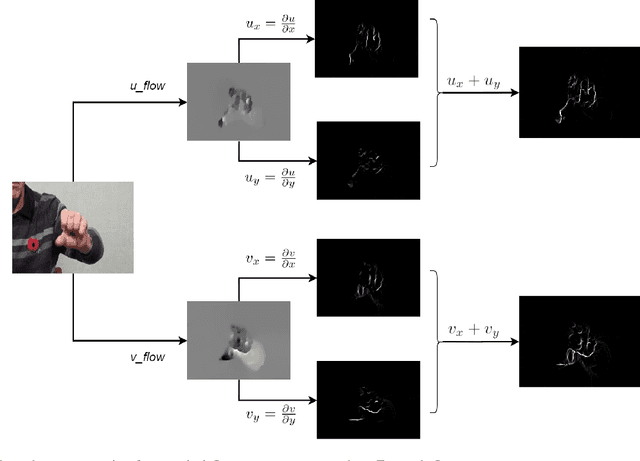

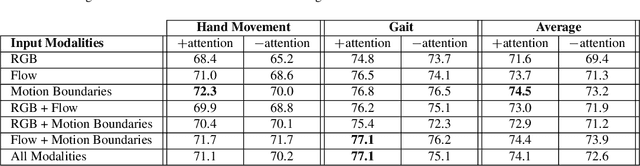

Evaluating neurological disorders such as Parkinson's disease (PD) is a challenging task that requires the assessment of several motor and non-motor functions. In this paper, we present an end-to-end deep learning framework that measures PD severity in two important components of the Unified Parkinson's Disease Rating Scale (UPDRS): hand movement and gait. Our method uses an Inflated 3D CNN trained within a temporal segment framework to learn spatial and long-range temporal structure in video data. We also employ a temporal attention mechanism to boost the performance of our model. Further, we explore motion boundaries as an additional input modality that helps suppress the effects of camera motion for better movement assessment. We ablate the effects of the different data modalities on the accuracy of the proposed network and compare it with other popular architectures. We evaluate the proposed method on a dataset of 25 PD patients, obtaining 72.3% and 77.1% top-1 accuracy on the hand movement and gait tasks, respectively.
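To illustrate why motion boundaries can reduce the influence of camera motion, the sketch below computes them in the usual sense of the term: the spatial gradients of the optical flow field. This is not the authors' implementation. The function name `motion_boundaries`, the `(H, W, 2)` flow layout, and the toy flow fields are assumptions made for the example, and the flow itself is assumed to come from any dense optical flow estimator.

```python
import numpy as np


def motion_boundaries(flow):
    """Return per-pixel gradient magnitudes of each optical flow component.

    flow: array of shape (H, W, 2) holding horizontal (u) and vertical (v)
    displacement per pixel. This layout is an assumption of the sketch.
    """
    u, v = flow[..., 0], flow[..., 1]
    # np.gradient returns derivatives along axis 0 (rows, y) then axis 1 (cols, x).
    du_dy, du_dx = np.gradient(u)
    dv_dy, dv_dx = np.gradient(v)
    mb_u = np.hypot(du_dx, du_dy)  # boundary strength of the horizontal flow
    mb_v = np.hypot(dv_dx, dv_dy)  # boundary strength of the vertical flow
    return np.stack([mb_u, mb_v], axis=-1)


# Toy case 1: a uniform camera pan, i.e. the same flow vector at every pixel.
pan = np.zeros((8, 8, 2))
pan[..., 0] = 2.0
pan_only = motion_boundaries(pan)

# Toy case 2: the same pan plus a small patch that moves independently.
pan_with_patch = pan.copy()
pan_with_patch[2:5, 2:5, 1] = 3.0
mb = motion_boundaries(pan_with_patch)
```

In this toy setting the uniform pan produces an all-zero response, while the independently moving patch yields non-zero values along its outline. Real camera motion is rarely a pure translation, so in practice the cancellation is only approximate.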
