Alan R. Wagner

Learning Event-Based Shooter Models from Virtual Reality Experiments

Feb 05, 2026

Abstract: Virtual reality (VR) has emerged as a powerful tool for evaluating school security measures in high-risk scenarios such as school shootings, offering experimental control and high behavioral fidelity. However, assessing new interventions in VR requires recruiting new participant cohorts for each condition, making large-scale or iterative evaluation difficult. These limitations are especially restrictive when attempting to learn effective intervention strategies, which typically require many training episodes. To address this challenge, we develop a data-driven discrete-event simulator (DES) that models shooter movement and in-region actions as stochastic processes learned from participant behavior in VR studies. We use the simulator to examine the impact of a robot-based shooter intervention strategy. Once shown to reproduce key empirical patterns, the DES enables scalable evaluation and learning of intervention strategies that are infeasible to train directly with human subjects. Overall, this work demonstrates a high-to-mid fidelity simulation workflow that provides a scalable surrogate for developing and evaluating autonomous school-security interventions.

That was not what I was aiming at! Differentiating human intent and outcome in a physically dynamic throwing task

Oct 26, 2024

Abstract: Recognising intent in collaborative human-robot tasks can improve team performance and human perception of robots. Intent can differ from the observed outcome in the presence of mistakes, which are likely in physically dynamic tasks. We created a dataset of 1227 throws of a ball at a target from 10 participants and observed that 47% of throws were mistakes, with 16% completely missing the target. Our research leverages facial images capturing the person's reaction to the outcome of a throw to predict when the resulting throw is a mistake, and then we determine the actual intent of the throw. The approach we propose for outcome prediction performs 38% better than the two-stream architecture used previously for this task on front-on videos. In addition, we propose a 1-D CNN model which is used in conjunction with priors learned from the frequency of mistakes to provide an end-to-end pipeline for outcome and intent recognition in this throwing task.

* Accepted October 2022 in Autonomous Robots. Published December 2022

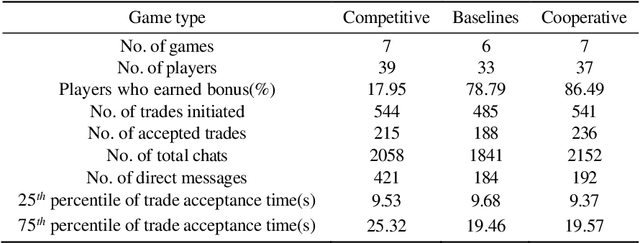

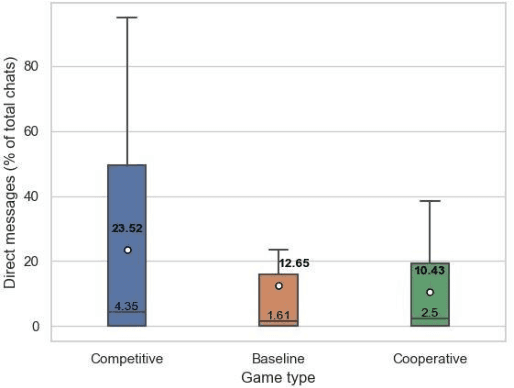

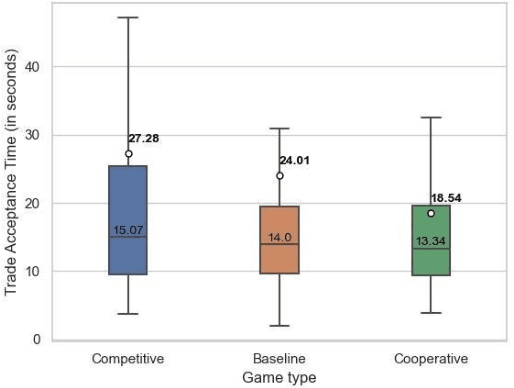

Exploring Trust and Risk during Online Bartering Interactions

Nov 27, 2023

Abstract: This paper investigates how risk influences the way people barter. We used Minecraft to create an experimental environment in which people bartered to earn a monetary bonus. Our findings reveal that subjects exhibit risk aversion in competitive bartering environments and deliberate over their trades longer than in cooperative environments. These initial experiments lay the groundwork for the development of agents capable of strategically trading with human counterparts in different environments.

CBCL-PR: A Cognitively Inspired Model for Class-Incremental Learning in Robotics

Jul 31, 2023

Abstract: For most real-world applications, robots need to adapt and learn continually with limited data in their environments. In this paper, we consider the problem of Few-Shot class Incremental Learning (FSIL), in which an AI agent is required to learn incrementally from a few data samples without forgetting the data it has previously learned. To solve this problem, we present a novel framework inspired by theories of concept learning in the hippocampus and the neocortex. Our framework represents object classes in the form of sets of clusters and stores them in memory. The framework replays data generated by the clusters of the old classes, to avoid forgetting when learning new classes. Our approach is evaluated on two object classification datasets, resulting in state-of-the-art (SOTA) performance for class-incremental learning and FSIL. We also evaluate our framework for FSIL on a robot, demonstrating that the robot can continually learn to classify a large set of household objects with limited human assistance.

Active Class Selection for Few-Shot Class-Incremental Learning

Jul 05, 2023

Abstract: For real-world applications, robots will need to continually learn in their environments through limited interactions with their users. Toward this, previous works in few-shot class incremental learning (FSCIL) and active class selection (ACS) have achieved promising results but were tested in constrained setups. Therefore, in this paper, we combine ideas from FSCIL and ACS to develop a novel framework that can allow an autonomous agent to continually learn new objects by asking its users to label only a few of the most informative objects in the environment. To this end, we build on a state-of-the-art (SOTA) FSCIL model and extend it with techniques from ACS literature. We term this model Few-shot Incremental Active class SeleCtiOn (FIASco). We further integrate a potential field-based navigation technique with our model to develop a complete framework that can allow an agent to process and reason on its sensory data through the FIASco model, navigate towards the most informative object in the environment, gather data about the object through its sensors and incrementally update the FIASco model. Experimental results on a simulated agent and a real robot show the significance of our approach for long-term real-world robotics applications.

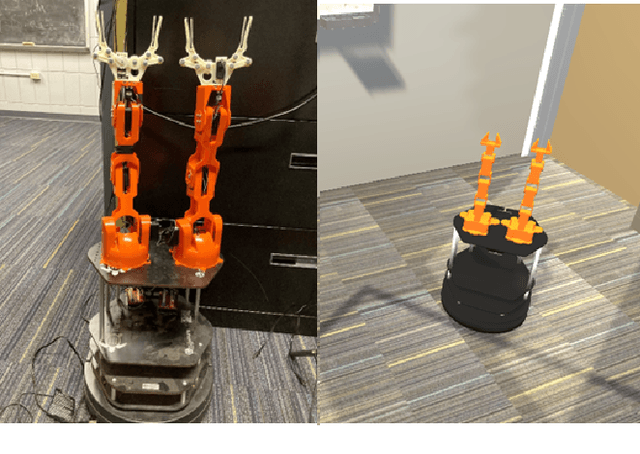

Learning Evacuee Models from Robot-Guided Emergency Evacuation Experiments

Jun 30, 2023

Abstract: Recent research has examined the possibility of using robots to guide evacuees to safe exits during emergencies. Yet, there are many factors that can impact a person's decision to follow a robot. Being able to model how an evacuee follows an emergency robot guide could be crucial for designing robots that effectively guide evacuees during an emergency. This paper presents a method for developing realistic and predictive human evacuee models from physical human evacuation experiments. The paper analyzes the behavior of 14 human subjects during physical robot-guided evacuation. We then use the video data to create evacuee motion models that predict the person's future positions during the emergency. Finally, we validate the resulting models by running a k-fold cross-validation on the data collected during physical human subject experiments. We also present performance results of the model using data from a similar simulated emergency evacuation experiment, demonstrating that these models can serve as a tool to predict evacuee behavior in novel evacuation simulations.

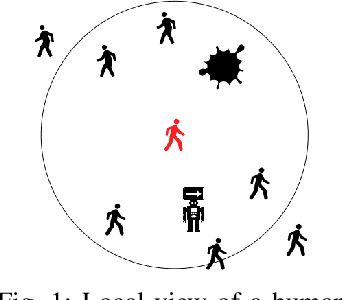

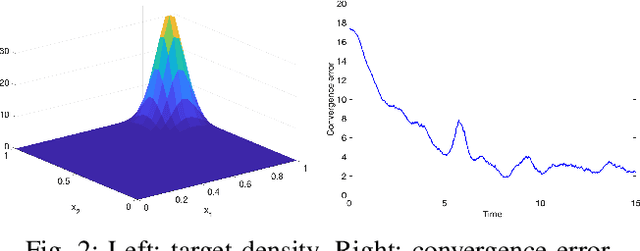

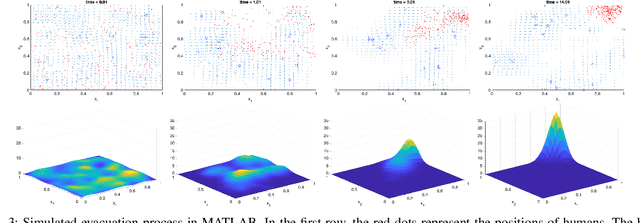

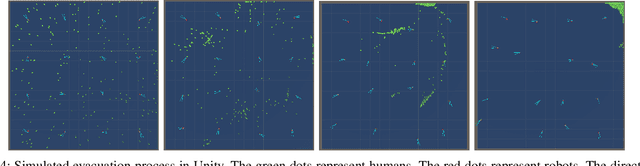

Multi-Robot-Guided Crowd Evacuation: Two-Scale Modeling and Control Based on Mean-Field Hydrodynamic Models

Feb 28, 2023

Abstract: Emergency evacuation describes a complex situation involving time-critical decision-making by evacuees. Mobile robots are being actively explored as a potential solution to provide timely guidance. In this work, we study a robot-guided crowd evacuation problem where a small group of robots is used to guide a large human crowd to safe locations. The challenge lies in how to utilize micro-level human-robot interactions to indirectly influence a population that significantly outnumbers the robots to achieve the collective evacuation objective. To address the challenge, we follow a two-scale modeling strategy and explore mean-field hydrodynamic models which consist of a family of microscopic social-force models that explicitly describe how human movements are locally affected by other humans, the environment, and the robots, and associated macroscopic equations for the temporal and spatial evolution of the crowd density and flow velocity. We design controllers for the robots such that they not only automatically explore the environment (with unknown dynamic obstacles) to cover it as much as possible but also dynamically adjust the directions of their local navigation force fields based on the real-time macro-states of the crowd to guide the crowd to a safe location. We prove the stability of the proposed evacuation algorithm and conduct a series of simulations (involving unknown dynamic obstacles) to validate the performance of the algorithm.

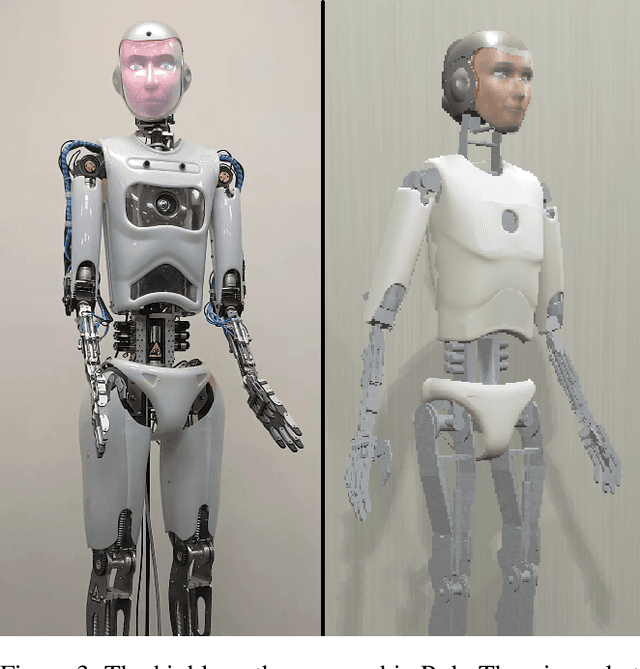

Using Virtual Reality to Simulate Human-Robot Emergency Evacuation Scenarios

Oct 16, 2022

Abstract: This paper describes our recent effort to use virtual reality to simulate threatening emergency evacuation scenarios in which a robot guides a person to an exit. Our prior work has demonstrated that people will follow a robot's guidance, even when the robot is faulty, during an emergency evacuation. Yet, because physical in-person emergency evacuation experiments are difficult and costly to conduct and because we would like to evaluate many different factors, we are motivated to develop a system that immerses people in the simulation environment to encourage genuine subject reactions. We are working to complete experiments verifying the validity of our approach.

Multi-Robot-Assisted Human Crowd Evacuation using Navigation Velocity Fields

Sep 20, 2022

Abstract: This work studies a robot-assisted crowd evacuation problem where we control a small group of robots to guide a large human crowd to safe locations. The challenge lies in how to model human-robot interactions and design robot controls to indirectly control a human population that significantly outnumbers the robots. To address the challenge, we treat the crowd as a continuum and formulate the evacuation objective as driving the crowd density to target locations. We propose a novel mean-field model which consists of a family of microscopic equations that explicitly model how human motions are locally guided by the robots and an associated macroscopic equation that describes how the crowd density is controlled by the navigation velocity fields generated by all robots. Then, we design density feedback controllers for the robots to dynamically adjust their states such that the generated navigation velocity fields drive the crowd density to a target density. Stability guarantees of the proposed controllers are proven. Agent-based simulations are included to evaluate the proposed evacuation algorithms.

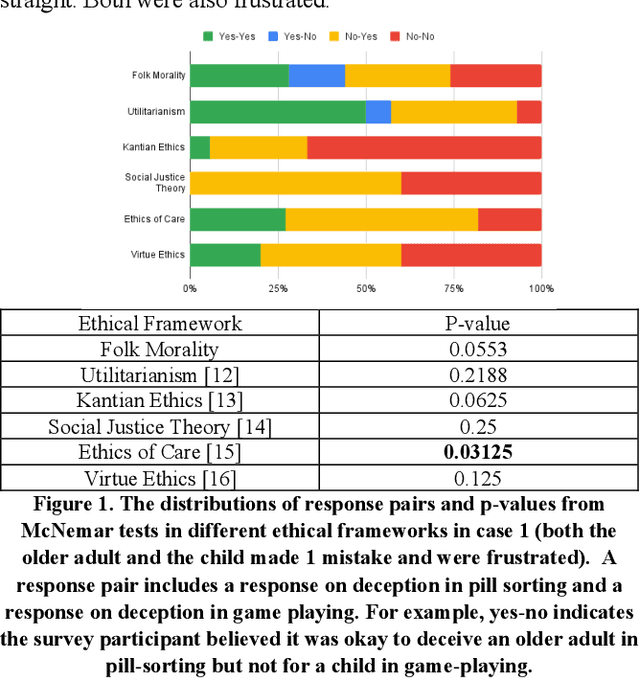

Toward Ethical Robotic Behavior in Human-Robot Interaction Scenarios

Jun 21, 2022

Abstract: This paper describes current progress on developing an ethical architecture for robots that are designed to follow human ethical decision-making processes. We surveyed both regular adults (folks) and ethics experts (experts) on what they consider to be ethical behavior in two specific scenarios: pill-sorting with an older adult and game playing with a child. A key goal of the surveys is to better understand human ethical decision-making. In the first survey, folk responses were based on the subject's ethical choices ("folk morality"); in the second survey, expert responses were based on the expert's application of different formal ethical frameworks to each scenario. We observed that most of the formal ethical frameworks we included in the survey (Utilitarianism, Kantian Ethics, Ethics of Care and Virtue Ethics) and "folk morality" were conservative toward deception in the high-risk task with an older adult when both the adult and the child had significant performance deficiencies.
