Zhenyuan Yuan

SynSHRP2: A Synthetic Multimodal Benchmark for Driving Safety-critical Events Derived from Real-world Driving Data

May 06, 2025

Abstract: Driving-related safety-critical events (SCEs), including crashes and near-crashes, provide essential insights for the development and safety evaluation of automated driving systems. However, two major challenges limit their accessibility: the rarity of SCEs and the presence of sensitive privacy information in the data. The Second Strategic Highway Research Program (SHRP 2) Naturalistic Driving Study (NDS), the largest NDS to date, collected millions of hours of multimodal, high-resolution, high-frequency driving data from thousands of participants, capturing thousands of SCEs. While this dataset is invaluable for safety research, privacy concerns and data use restrictions significantly limit public access to the raw data. To address these challenges, we introduce SynSHRP2, a publicly available, synthetic, multimodal driving dataset containing over 1874 crashes and 6924 near-crashes derived from the SHRP 2 NDS. The dataset features de-identified keyframes generated using Stable Diffusion and ControlNet, ensuring the preservation of critical safety-related information while eliminating personally identifiable data. Additionally, SynSHRP2 includes detailed annotations on SCE type, environmental and traffic conditions, and time-series kinematic data spanning 5 seconds before and during each event. Synchronized keyframes and narrative descriptions further enhance its usability. This paper presents two benchmarks for event attribute classification and scene understanding, demonstrating the potential applications of SynSHRP2 in advancing safety research and automated driving system development.

Bayesian meta learning for trustworthy uncertainty quantification

Jul 27, 2024

Abstract: We consider the problem of Bayesian regression with trustworthy uncertainty quantification. We define uncertainty quantification as trustworthy if the ground truth can be captured by intervals dependent on the predictive distributions with a pre-specified probability. Furthermore, we propose Trust-Bayes, a novel optimization framework for Bayesian meta learning which is cognizant of trustworthy uncertainty quantification without explicit assumptions on the prior model/distribution of the functions. We characterize the lower bounds of the probabilities of the ground truth being captured by the specified intervals and analyze the sample complexity with respect to the feasible probability for trustworthy uncertainty quantification. Monte Carlo simulation of a case study using Gaussian process regression is conducted for verification and comparison with the Meta-prior algorithm.
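The notion of trustworthiness in this abstract (ground truth captured by prediction-dependent intervals with a pre-specified probability) can be illustrated with a simple empirical coverage check. The sketch below is not the Trust-Bayes method itself. It assumes Gaussian-style symmetric intervals of the form mean ± z·std, and the names `empirical_coverage`, `meets_target`, and the parameter `z` are hypothetical choices for illustration only.

```python
from typing import Sequence


def empirical_coverage(
    y_true: Sequence[float],
    means: Sequence[float],
    stds: Sequence[float],
    z: float = 1.96,
) -> float:
    """Fraction of ground-truth values inside the interval mean +/- z * std.

    Assumption: symmetric intervals built from a predictive mean and standard
    deviation. The paper's intervals may be constructed differently.
    """
    if not (len(y_true) == len(means) == len(stds)):
        raise ValueError("all inputs must have the same length")
    if len(y_true) == 0:
        raise ValueError("inputs must be non-empty")
    hits = sum(
        1 for y, m, s in zip(y_true, means, stds) if abs(y - m) <= z * s
    )
    return hits / len(y_true)


def meets_target(coverage: float, target_probability: float) -> bool:
    """True if the observed coverage reaches the pre-specified probability."""
    return coverage >= target_probability


if __name__ == "__main__":
    # Toy data: the third point lies far outside its interval.
    cov = empirical_coverage([0.0, 1.0, 5.0], [0.0, 1.0, 0.0], [1.0, 1.0, 1.0], z=2.0)
    print(cov, meets_target(cov, 0.9))
```

An empirical frequency like this is only a Monte Carlo estimate of the capture probability; the lower bounds mentioned in the abstract are theoretical statements that such a check can support but not replace.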

Federated reinforcement learning for robot motion planning with zero-shot generalization

Mar 20, 2024

Abstract: This paper considers the problem of learning a control policy for robot motion planning with zero-shot generalization, i.e., no data collection or policy adaptation is needed when the learned policy is deployed in new environments. We develop a federated reinforcement learning framework that enables collaborative learning of multiple learners and a central server, i.e., the Cloud, without sharing their raw data. In each iteration, each learner uploads its local control policy and the corresponding estimated normalized arrival time to the Cloud, which then computes the global optimum among the learners and broadcasts the optimal policy to the learners. Each learner then selects between its local control policy and that from the Cloud for the next iteration. The proposed framework leverages the derived zero-shot generalization guarantees on arrival time and safety. Theoretical guarantees on almost-sure convergence, almost consensus, Pareto improvement and optimality gap are also provided. Monte Carlo simulation is conducted to evaluate the proposed framework.
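The per-iteration exchange described in this abstract (learners upload a policy and an estimated normalized arrival time, the Cloud picks the best and broadcasts it, each learner then chooses between local and broadcast) can be sketched roughly as follows. This is an illustration under stated assumptions, not the paper's algorithm: it assumes a smaller estimated arrival time is better, it uses a plain comparison as the learner's selection rule, and the names `Upload`, `cloud_select`, and `learner_select` are hypothetical.

```python
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class Upload:
    learner_id: int
    policy: str                # stand-in for policy parameters (hypothetical)
    est_arrival_time: float    # estimated normalized arrival time


def cloud_select(uploads: List[Upload]) -> Upload:
    """Cloud step: choose the upload with the smallest estimated arrival time.

    Assumption: lower arrival time means a better policy.
    """
    if not uploads:
        raise ValueError("at least one upload is required")
    return min(uploads, key=lambda u: u.est_arrival_time)


def learner_select(local: Upload, broadcast: Upload) -> Upload:
    """Learner step: keep the local policy unless the broadcast one is strictly better.

    Assumption: a simple comparison; the actual selection rule may differ.
    """
    if broadcast.est_arrival_time < local.est_arrival_time:
        return broadcast
    return local


if __name__ == "__main__":
    uploads = [
        Upload(0, "policy-a", 0.8),
        Upload(1, "policy-b", 0.5),
        Upload(2, "policy-c", 0.9),
    ]
    best = cloud_select(uploads)
    next_round = [learner_select(u, best) for u in uploads]
    print(best.learner_id, [u.policy for u in next_round])
```

Only policies and scalar estimates cross the network in this sketch, which mirrors the abstract's point that raw data are never shared.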

Learning Evacuee Models from Robot-Guided Emergency Evacuation Experiments

Jun 30, 2023

Abstract: Recent research has examined the possibility of using robots to guide evacuees to safe exits during emergencies. Yet, there are many factors that can impact a person's decision to follow a robot. Being able to model how an evacuee follows an emergency robot guide could be crucial for designing robots that effectively guide evacuees during an emergency. This paper presents a method for developing realistic and predictive human evacuee models from physical human evacuation experiments. The paper analyzes the behavior of 14 human subjects during physical robot-guided evacuation. We then use the video data to create evacuee motion models that predict the person's future positions during the emergency. Finally, we validate the resulting models by running a k-fold cross-validation on the data collected during physical human subject experiments. We also present performance results of the model using data from a similar simulated emergency evacuation experiment, demonstrating that these models can serve as a tool to predict evacuee behavior in novel evacuation simulations.

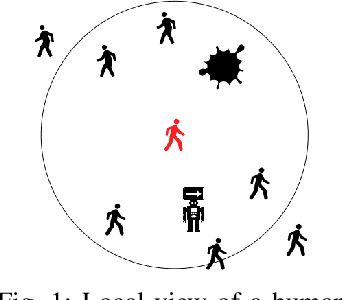

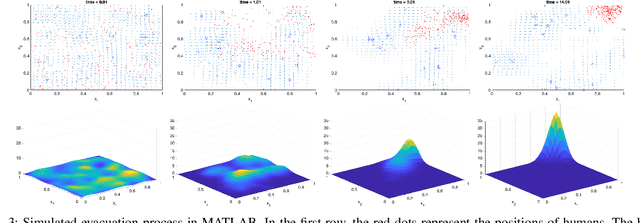

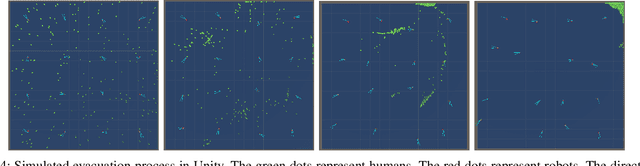

Multi-Robot-Guided Crowd Evacuation: Two-Scale Modeling and Control Based on Mean-Field Hydrodynamic Models

Feb 28, 2023

Abstract: Emergency evacuation describes a complex situation involving time-critical decision-making by evacuees. Mobile robots are being actively explored as a potential solution to provide timely guidance. In this work, we study a robot-guided crowd evacuation problem where a small group of robots is used to guide a large human crowd to safe locations. The challenge lies in how to utilize micro-level human-robot interactions to indirectly influence a population that significantly outnumbers the robots to achieve the collective evacuation objective. To address the challenge, we follow a two-scale modeling strategy and explore mean-field hydrodynamic models which consist of a family of microscopic social-force models that explicitly describe how human movements are locally affected by other humans, the environment, and the robots, and associated macroscopic equations for the temporal and spatial evolution of the crowd density and flow velocity. We design controllers for the robots such that they not only automatically explore the environment (with unknown dynamic obstacles) to cover it as much as possible but also dynamically adjust the directions of their local navigation force fields based on the real-time macro-states of the crowd to guide the crowd to a safe location. We prove the stability of the proposed evacuation algorithm and conduct a series of simulations (involving unknown dynamic obstacles) to validate the performance of the algorithm.

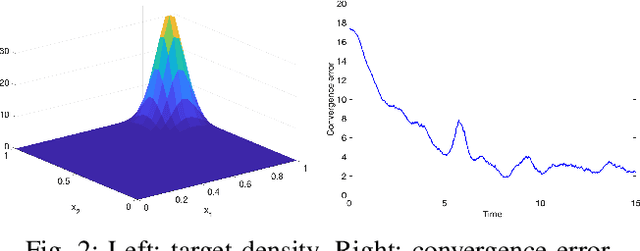

Multi-Robot-Assisted Human Crowd Evacuation using Navigation Velocity Fields

Sep 20, 2022

Abstract: This work studies a robot-assisted crowd evacuation problem where we control a small group of robots to guide a large human crowd to safe locations. The challenge lies in how to model human-robot interactions and design robot controls to indirectly control a human population that significantly outnumbers the robots. To address the challenge, we treat the crowd as a continuum and formulate the evacuation objective as driving the crowd density to target locations. We propose a novel mean-field model which consists of a family of microscopic equations that explicitly model how human motions are locally guided by the robots and an associated macroscopic equation that describes how the crowd density is controlled by the navigation velocity fields generated by all robots. Then, we design density feedback controllers for the robots to dynamically adjust their states such that the generated navigation velocity fields drive the crowd density to a target density. Stability guarantees of the proposed controllers are proven. Agent-based simulations are included to evaluate the proposed evacuation algorithms.

Resource-aware Distributed Gaussian Process Regression for Real-time Machine Learning

May 13, 2021

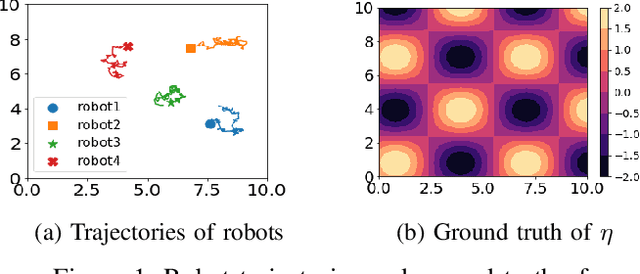

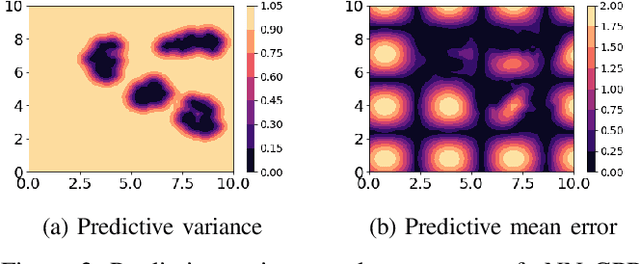

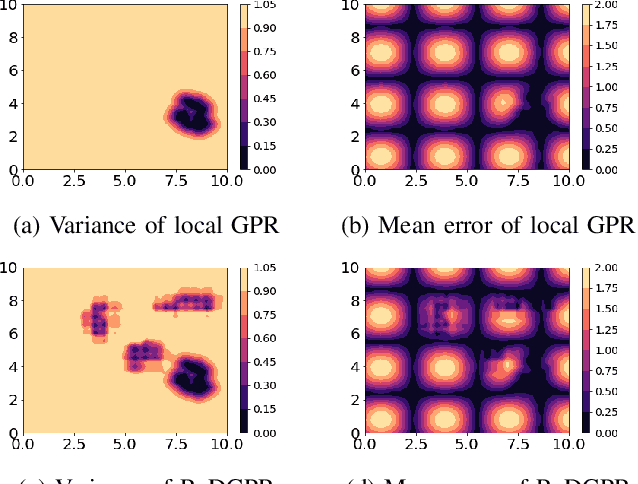

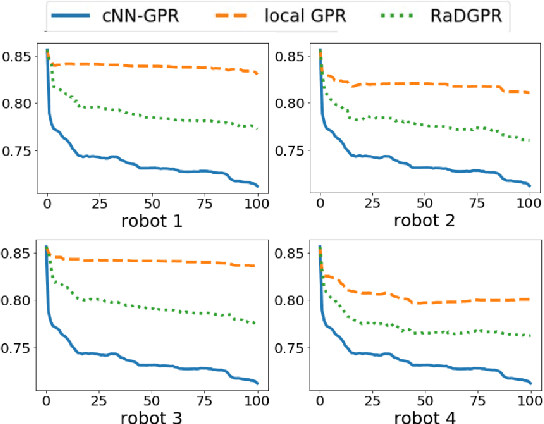

Abstract: We study the problem where a group of agents aims to collaboratively learn a common latent function through streaming data. We propose a resource-aware Gaussian process regression algorithm that is cognizant of agents' limited capabilities in communication, computation and memory. We quantify the improvement that limited inter-agent communication brings to the transient and steady-state performance in predictive variance and predictive mean. A set of simulations is conducted to evaluate the developed algorithm.

Data-Driven Distributed State Estimation and Behavior Modeling in Sensor Networks

Sep 24, 2020

Abstract: Nowadays, the prevalence of sensor networks has enabled tracking of the states of dynamic objects for a wide spectrum of applications from autonomous driving to environmental monitoring and urban planning. However, tracking real-world objects often faces two key challenges. First, due to the limitations of individual sensors, state estimation needs to be solved in a collaborative and distributed manner. Second, the objects' movement behavior is unknown and needs to be learned using sensor observations. In this work, for the first time, we formally formulate the problem of simultaneous state estimation and behavior learning in a sensor network. We then propose a simple yet effective solution to this new problem by extending the Gaussian process-based Bayes filters (GP-BayesFilters) to an online, distributed setting. The effectiveness of the proposed method is evaluated on tracking objects with unknown movement behaviors using both synthetic data and data collected from a multi-robot platform.
