Alípio Jorge

MiNER: A Two-Stage Pipeline for Metadata Extraction from Municipal Meeting Minutes

Jan 30, 2026

Abstract: Municipal meeting minutes are official documents of local governance, exhibiting heterogeneous formats and writing styles. Effective information retrieval (IR) requires identifying metadata such as meeting number, date, location, participants, and start/end times, elements that are rarely standardized or easy to extract automatically. Existing named entity recognition (NER) models are ill-suited to this task, as they are not adapted to such domain-specific categories. In this paper, we propose a two-stage pipeline for metadata extraction from municipal minutes. First, a question answering (QA) model identifies the opening and closing text segments containing metadata. Transformer-based models (BERTimbau and XLM-RoBERTa with and without a CRF layer) are then applied for fine-grained entity extraction and enhanced through delexicalization. To evaluate our proposed pipeline, we benchmark both open-weight (Phi) and closed-weight (Gemini) LLMs, assessing predictive performance, inference cost, and carbon footprint. Our results demonstrate strong in-domain performance, better than larger general-purpose LLMs. However, cross-municipality evaluation reveals reduced generalization, reflecting the variability and linguistic complexity of municipal records. This work establishes the first benchmark for metadata extraction from municipal meeting minutes, providing a solid foundation for future research in this domain.

ClaimPT: A Portuguese Dataset of Annotated Claims in News Articles

Jan 27, 2026

Abstract: Fact-checking remains a demanding and time-consuming task, still largely dependent on manual verification and unable to match the rapid spread of misinformation online. This is particularly important because debunking false information typically takes longer to reach consumers than the misinformation itself; accelerating corrections through automation can therefore help counter it more effectively. Although many organizations perform manual fact-checking, this approach is difficult to scale given the growing volume of digital content. These limitations have motivated interest in automating fact-checking, where identifying claims is a crucial first step. However, progress has been uneven across languages, with English dominating due to abundant annotated data. Portuguese, like other languages, still lacks accessible, licensed datasets, limiting research, NLP developments and applications. In this paper, we introduce ClaimPT, a dataset of European Portuguese news articles annotated for factual claims, comprising 1,308 articles and 6,875 individual annotations. Unlike most existing resources based on social media or parliamentary transcripts, ClaimPT focuses on journalistic content, collected through a partnership with LUSA, the Portuguese News Agency. To ensure annotation quality, two trained annotators labeled each article, with a curator validating all annotations according to a newly proposed scheme. We also provide baseline models for claim detection, establishing initial benchmarks and enabling future NLP and IR applications. By releasing ClaimPT, we aim to advance research on low-resource fact-checking and enhance understanding of misinformation in news media.

CitiLink: Enhancing Municipal Transparency and Citizen Engagement through Searchable Meeting Minutes

Jan 26, 2026

Abstract: City council minutes are typically lengthy and formal documents with a bureaucratic writing style. Although publicly available, their structure often makes it difficult for citizens or journalists to efficiently find information. In this demo, we present CitiLink, a platform designed to transform unstructured municipal meeting minutes into structured and searchable data, demonstrating how NLP and IR can enhance the accessibility and transparency of local government. The system employs LLMs to extract metadata, discussed subjects, and voting outcomes, which are then indexed in a database to support full-text search with BM25 ranking and faceted filtering through a user-friendly interface. The developed system was built over a collection of 120 minutes made available by six Portuguese municipalities. To assess its usability, CitiLink was tested through guided sessions with municipal personnel, providing insights into how real users interact with the system. In addition, we evaluated Gemini's performance in extracting relevant information from the minutes, highlighting its effectiveness in data extraction.
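As an illustration of the BM25 ranking mentioned in the abstract above, the following is a minimal sketch of Okapi BM25 scoring over tokenized documents. It is not CitiLink's implementation: the function name `bm25_scores`, the parameter defaults `k1=1.5` and `b=0.75`, the smoothed IDF variant, and the toy documents are all assumptions chosen for the example.

```python
import math
from collections import Counter

def bm25_scores(query, docs, k1=1.5, b=0.75):
    """Score each tokenized document against a tokenized query with Okapi BM25."""
    n_docs = len(docs)
    avg_len = sum(len(d) for d in docs) / n_docs
    # Document frequency: number of documents that contain each term.
    df = Counter(term for d in docs for term in set(d))
    scores = []
    for d in docs:
        tf = Counter(d)
        score = 0.0
        for term in query:
            if term not in tf:
                continue
            # Smoothed IDF (the "+1" variant keeps the weight non-negative).
            idf = math.log(1 + (n_docs - df[term] + 0.5) / (df[term] + 0.5))
            # Term-frequency saturation with document-length normalization.
            norm = tf[term] + k1 * (1 - b + b * len(d) / avg_len)
            score += idf * tf[term] * (k1 + 1) / norm
        scores.append(score)
    return scores

# Hypothetical toy "minutes", already lowercased and tokenized.
docs = [
    "council approved the budget by unanimous vote".split(),
    "the meeting opened at ten in the town hall".split(),
    "budget amendment rejected after budget debate".split(),
]
scores = bm25_scores("budget vote".split(), docs)
# Document indices ordered from most to least relevant.
ranking = sorted(range(len(docs)), key=lambda i: scores[i], reverse=True)
```

In this toy example the first document ranks highest because it matches both query terms, and the rarer term ("vote") carries more weight than the more common one ("budget").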

VotIE: Information Extraction from Meeting Minutes

Jan 08, 2026

Abstract: Municipal meeting minutes record key decisions in local democratic processes. Unlike parliamentary proceedings, which typically adhere to standardized formats, they encode voting outcomes in highly heterogeneous, free-form narrative text that varies widely across municipalities, posing significant challenges for automated extraction. In this paper, we introduce VotIE (Voting Information Extraction), a new information extraction task aimed at identifying structured voting events in narrative deliberative records, and establish the first benchmark for this task using Portuguese municipal minutes, building on the recently introduced CitiLink corpus. Our experiments yield two key findings. First, under standard in-domain evaluation, fine-tuned encoders, specifically XLM-R-CRF, achieve the strongest performance, reaching 93.2% macro F1, outperforming generative approaches. Second, in a cross-municipality setting that evaluates transfer to unseen administrative contexts, these models suffer substantial performance degradation, whereas few-shot LLMs demonstrate greater robustness, with significantly smaller declines in performance. Despite this generalization advantage, the high computational cost of generative models currently constrains their practicality. As a result, lightweight fine-tuned encoders remain a more practical option for large-scale, real-world deployment. To support reproducible research in administrative NLP, we publicly release our benchmark, trained models, and evaluation framework.

SegNSP: Revisiting Next Sentence Prediction for Linear Text Segmentation

Jan 07, 2026

Abstract: Linear text segmentation is a long-standing problem in natural language processing (NLP), focused on dividing continuous text into coherent and semantically meaningful units. Despite its importance, the task remains challenging due to the complexity of defining topic boundaries, the variability in discourse structure, and the need to balance local coherence with global context. These difficulties hinder downstream applications such as summarization, information retrieval, and question answering. In this work, we introduce SegNSP, framing linear text segmentation as a next sentence prediction (NSP) task. Although NSP has largely been abandoned in modern pre-training, its explicit modeling of sentence-to-sentence continuity makes it a natural fit for detecting topic boundaries. We propose a label-agnostic NSP approach, which predicts whether the next sentence continues the current topic without requiring explicit topic labels, and enhance it with a segmentation-aware loss combined with harder negative sampling to better capture discourse continuity. Unlike recent proposals that leverage NSP alongside auxiliary topic classification, our approach avoids task-specific supervision. We evaluate our model against established baselines on two datasets, CitiLink-Minutes, for which we establish the first segmentation benchmark, and WikiSection. On CitiLink-Minutes, SegNSP achieves a B-$F_1$ of 0.79, closely aligning with human-annotated topic transitions, while on WikiSection it attains a B-$F_1$ of 0.65, outperforming the strongest reproducible baseline, TopSeg, by 0.17 absolute points. These results demonstrate competitive and robust performance, highlighting the effectiveness of modeling sentence-to-sentence continuity for improving segmentation quality and supporting downstream NLP applications.
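The framing in the abstract above, where a boundary is placed wherever the next sentence is judged not to continue the current topic, can be sketched as follows. This is only an illustration of the decoding idea, not the SegNSP model: the word-overlap scorer `overlap_continuity` is a hypothetical placeholder for a fine-tuned NSP classifier, and the `threshold` value and example sentences are assumptions.

```python
def overlap_continuity(a, b):
    """Placeholder continuity score (Jaccard word overlap).

    Assumption: a real system would return the NSP model's probability
    that sentence b continues the topic of sentence a.
    """
    wa, wb = set(a.lower().split()), set(b.lower().split())
    union = wa | wb
    return len(wa & wb) / len(union) if union else 0.0

def segment(sentences, continuity, threshold=0.5):
    """Start a new segment whenever the continuity score drops below threshold."""
    segments = [[sentences[0]]] if sentences else []
    for prev, nxt in zip(sentences, sentences[1:]):
        if continuity(prev, nxt) < threshold:
            segments.append([nxt])    # topic boundary before nxt
        else:
            segments[-1].append(nxt)  # nxt continues the current segment
    return segments

# Hypothetical example sentences (lowercased, no punctuation).
sentences = [
    "the council approved the budget",
    "the budget includes road repairs",
    "a new library schedule was discussed",
    "the library schedule starts in march",
]
segments = segment(sentences, overlap_continuity, threshold=0.1)
```

Because the decoder only needs a pairwise continuity score, no topic labels are involved at any point, which mirrors the label-agnostic aspect described in the abstract.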

FRaN-X: FRaming and Narratives-eXplorer

Jul 09, 2025

Abstract: We present FRaN-X, a Framing and Narratives Explorer that automatically detects entity mentions and classifies their narrative roles directly from raw text. FRaN-X comprises a two-stage system that combines sequence labeling with fine-grained role classification to reveal how entities are portrayed as protagonists, antagonists, or innocents, using a unique taxonomy of 22 fine-grained roles nested under these three main categories. The system supports five languages (Bulgarian, English, Hindi, Russian, and Portuguese) and two domains (the Russia-Ukraine Conflict and Climate Change). It provides an interactive web interface for media analysts to explore and compare framing across different sources, tackling the challenge of automatically detecting and labeling how entities are framed. Our system allows end users to focus on a single article as well as analyze up to four articles simultaneously. We provide aggregate-level analysis, including an intuitive graph visualization that highlights the narrative a group of articles is pushing. Our system includes a search feature for users to look up entities of interest, along with a timeline view that allows analysts to track an entity's role transitions across different contexts within the article. The FRaN-X system and the trained models are licensed under an MIT License. FRaN-X is publicly accessible at https://fran-x.streamlit.app/ and a video demonstration is available at https://youtu.be/VZVi-1B6yYk.

Tradutor: Building a Variety Specific Translation Model

Feb 20, 2025

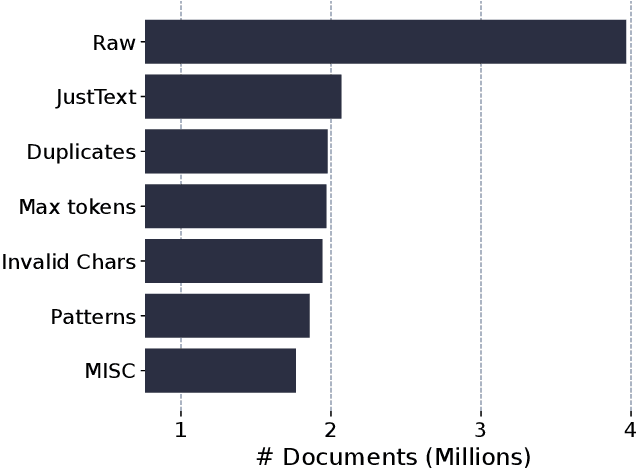

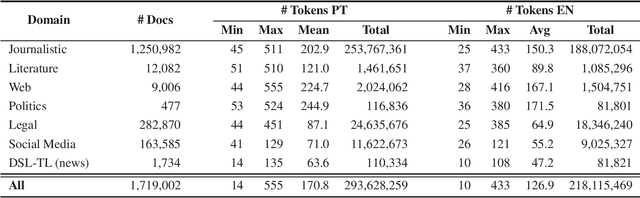

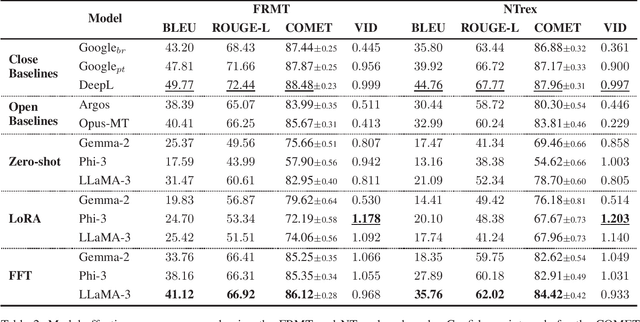

Abstract: Language models have become foundational to many widely used systems. However, these seemingly advantageous models are double-edged swords. While they excel in tasks related to resource-rich languages like English, they often lose the fine nuances of language forms, dialects, and varieties that are inherent to languages spoken in multiple regions of the world. Languages like European Portuguese are neglected in favor of their more popular counterpart, Brazilian Portuguese, leading to suboptimal performance in various linguistic tasks. To address this gap, we introduce the first open-source translation model specifically tailored for European Portuguese, along with a novel dataset specifically designed for this task. Results from automatic evaluations on two benchmark datasets demonstrate that our best model surpasses existing open-source translation systems for Portuguese and approaches the performance of industry-leading closed-source systems for European Portuguese. By making our dataset, models, and code publicly available, we aim to support and encourage further research, fostering advancements in the representation of underrepresented language varieties.

Enhancing Portuguese Variety Identification with Cross-Domain Approaches

Feb 20, 2025

Abstract: Recent advances in natural language processing have raised expectations for generative models to produce coherent text across diverse language varieties. In the particular case of the Portuguese language, the predominance of Brazilian Portuguese corpora online introduces linguistic biases in these models, limiting their applicability outside of Brazil. To address this gap and promote the creation of European Portuguese resources, we developed a cross-domain language variety identifier (LVI) to discriminate between European and Brazilian Portuguese. Motivated by the findings of our literature review, we compiled the PtBrVarId corpus, a cross-domain LVI dataset, and studied the effectiveness of transformer-based LVI classifiers for cross-domain scenarios. Although this research focuses on two Portuguese varieties, our contribution can be extended to other varieties and languages. We open-source the code, corpus, and models to foster further research in this task.

ACE-2005-PT: Corpus for Event Extraction in Portuguese

Aug 29, 2024

Abstract: Event extraction is an NLP task that commonly involves identifying the central word (trigger) for an event and its associated arguments in text. ACE-2005 is widely recognised as the standard corpus in this field. While other corpora, like PropBank, primarily focus on annotating predicate-argument structure, ACE-2005 provides comprehensive information about the overall event structure and semantics. However, its limited language coverage restricts its usability. This paper introduces ACE-2005-PT, a corpus created by translating ACE-2005 into Portuguese, with European and Brazilian variants. To speed up the process of obtaining ACE-2005-PT, we rely on automatic translators. This, however, poses some challenges related to automatically identifying the correct alignments between multi-word annotations in the original text and in the corresponding translated sentence. To achieve this, we developed an alignment pipeline that incorporates several alignment techniques: lemmatization, fuzzy matching, synonym matching, multiple translations and a BERT-based word aligner. To measure the alignment effectiveness, a subset of annotations from the ACE-2005-PT corpus was manually aligned by an expert linguist. This subset was then compared against our pipeline results, which achieved exact and relaxed match scores of 70.55% and 87.55%, respectively. As a result, we successfully generated a Portuguese version of the ACE-2005 corpus, which has been accepted for publication by LDC.
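Of the alignment techniques listed in the abstract above, fuzzy matching is the simplest to illustrate. The sketch below locates the token span in a translated sentence that best matches a separately translated annotation, using Python's standard `difflib.SequenceMatcher`. It is an assumption-laden illustration, not the authors' pipeline: the helper `best_span`, the `max_extra` window parameter, and the example sentence are hypothetical.

```python
from difflib import SequenceMatcher

def best_span(annotation, sentence_tokens, max_extra=1):
    """Return (ratio, start, end) of the token window most similar to annotation.

    Windows whose length differs from the annotation's by up to max_extra
    tokens are considered, since translation can add or drop words.
    """
    target = annotation.lower()
    n = len(annotation.split())
    best = (0.0, 0, 0)
    for size in range(max(1, n - max_extra), n + max_extra + 1):
        for start in range(len(sentence_tokens) - size + 1):
            candidate = " ".join(sentence_tokens[start:start + size]).lower()
            # Character-level similarity in [0, 1].
            ratio = SequenceMatcher(None, target, candidate).ratio()
            if ratio > best[0]:
                best = (ratio, start, start + size)
    return best

# Hypothetical example: the annotation was translated in the singular,
# while the translated sentence uses the plural form.
tokens = "O ataque das tropas americanas ocorreu ontem".split()
ratio, start, end = best_span("tropa americana", tokens)
aligned = tokens[start:end]
```

Here the inexact match still recovers the span "tropas americanas". A pipeline like the one described would presumably fall back to other techniques when the best ratio is too low; any such threshold is left out of this sketch.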

Event Extraction for Portuguese: A QA-driven Approach using ACE-2005

Aug 29, 2024

Abstract: Event extraction is an Information Retrieval task that commonly consists of identifying the central word for the event (trigger) and the event's arguments. This task has been extensively studied for English but lags behind for Portuguese, partly due to the lack of task-specific annotated corpora. This paper proposes a framework in which two separate BERT-based models are fine-tuned to identify and classify events in Portuguese documents. We decompose this task into two sub-tasks. First, we use a token classification model to detect event triggers. To extract event arguments, we train a Question Answering model that queries the triggers about their corresponding event argument roles. Given the lack of event-annotated corpora in Portuguese, we translated the original version of the ACE-2005 dataset (a reference in the field) into Portuguese, producing a new corpus for Portuguese event extraction. To accomplish this, we developed an automatic translation pipeline. Our framework obtains F1 scores of 64.4 for trigger classification and 46.7 for argument classification, setting a new state-of-the-art reference for these tasks in Portuguese.
