Sérgio Nunes

NLP for Local Governance Meeting Records: A Focus Article on Tasks, Datasets, Metrics and Benchmark

Feb 08, 2026

Abstract:Local governance meeting records are official documents, in the form of minutes or transcripts, documenting how proposals, discussions, and procedural actions unfold during institutional meetings. While generally structured, these documents are often dense, bureaucratic, and highly heterogeneous across municipalities, exhibiting significant variation in language, terminology, structure, and overall organization. This heterogeneity makes them difficult for non-experts to interpret and challenging for intelligent automated systems to process, limiting public transparency and civic engagement. To address these challenges, computational methods can be employed to structure and interpret such complex documents. In particular, Natural Language Processing (NLP) offers well-established methods that can enhance the accessibility and interpretability of governmental records. In this focus article, we review foundational NLP tasks that support the structuring of local governance meeting documents. Specifically, we review three core tasks: document segmentation, domain-specific entity extraction and automatic text summarization, which are essential for navigating lengthy deliberations, identifying political actors and personal information, and generating concise representations of complex decision-making processes. In reviewing these tasks, we discuss methodological approaches, evaluation metrics, and publicly available resources, while highlighting domain-specific challenges such as data scarcity, privacy constraints, and source variability. By synthesizing existing work across these foundational tasks, this article provides a structured overview of how NLP can enhance the structuring and accessibility of local governance meeting records.

ClaimPT: A Portuguese Dataset of Annotated Claims in News Articles

Jan 27, 2026

Abstract:Fact-checking remains a demanding and time-consuming task, still largely dependent on manual verification and unable to match the rapid spread of misinformation online. This is particularly important because debunking false information typically takes longer to reach consumers than the misinformation itself; accelerating corrections through automation can therefore help counter it more effectively. Although many organizations perform manual fact-checking, this approach is difficult to scale given the growing volume of digital content. These limitations have motivated interest in automating fact-checking, where identifying claims is a crucial first step. However, progress has been uneven across languages, with English dominating due to abundant annotated data. Portuguese, like other languages, still lacks accessible, licensed datasets, limiting research, NLP developments and applications. In this paper, we introduce ClaimPT, a dataset of European Portuguese news articles annotated for factual claims, comprising 1,308 articles and 6,875 individual annotations. Unlike most existing resources based on social media or parliamentary transcripts, ClaimPT focuses on journalistic content, collected through a partnership with LUSA, the Portuguese News Agency. To ensure annotation quality, two trained annotators labeled each article, with a curator validating all annotations according to a newly proposed scheme. We also provide baseline models for claim detection, establishing initial benchmarks and enabling future NLP and IR applications. By releasing ClaimPT, we aim to advance research on low-resource fact-checking and enhance understanding of misinformation in news media.

CitiLink: Enhancing Municipal Transparency and Citizen Engagement through Searchable Meeting Minutes

Jan 26, 2026

Abstract:City council minutes are typically lengthy and formal documents with a bureaucratic writing style. Although publicly available, their structure often makes it difficult for citizens or journalists to efficiently find information. In this demo, we present CitiLink, a platform designed to transform unstructured municipal meeting minutes into structured and searchable data, demonstrating how NLP and IR can enhance the accessibility and transparency of local government. The system employs LLMs to extract metadata, discussed subjects, and voting outcomes, which are then indexed in a database to support full-text search with BM25 ranking and faceted filtering through a user-friendly interface. The developed system was built over a collection of 120 minutes made available by six Portuguese municipalities. To assess its usability, CitiLink was tested through guided sessions with municipal personnel, providing insights into how real users interact with the system. In addition, we evaluated Gemini's performance in extracting relevant information from the minutes, highlighting its effectiveness in data extraction.
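
For readers unfamiliar with BM25 ranking, the following is a minimal sketch of Okapi BM25 scoring over pre-tokenized text. It is not the CitiLink implementation: the function name `bm25_scores`, the parameter defaults `k1=1.5` and `b=0.75`, the smoothed IDF variant, and the toy documents are all assumptions chosen for illustration.

```python
import math
from collections import Counter


def bm25_scores(query, docs, k1=1.5, b=0.75):
    """Score each tokenized document against a tokenized query with Okapi BM25.

    k1 and b are common default values, assumed here for illustration.
    """
    n_docs = len(docs)
    avg_len = sum(len(d) for d in docs) / n_docs
    # Document frequency: number of documents that contain each term.
    df = Counter(term for d in docs for term in set(d))
    scores = []
    for d in docs:
        tf = Counter(d)
        score = 0.0
        for term in query:
            if term not in tf:
                continue
            # Smoothed IDF that stays non-negative for very common terms.
            idf = math.log(1 + (n_docs - df[term] + 0.5) / (df[term] + 0.5))
            # Term-frequency saturation with document-length normalization.
            norm = tf[term] + k1 * (1 - b + b * len(d) / avg_len)
            score += idf * tf[term] * (k1 + 1) / norm
        scores.append(score)
    return scores


# Toy collection (invented sentences, not taken from the CitiLink corpus).
docs = [
    "council approves budget for road repairs".split(),
    "vote on school budget postponed".split(),
    "minutes of the previous meeting approved".split(),
]
scores = bm25_scores("budget vote".split(), docs)
ranking = sorted(range(len(docs)), key=lambda i: scores[i], reverse=True)
```

In a deployed system this scoring would normally be delegated to the search engine or database rather than computed by hand; the sketch only shows what the ranking function rewards.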

VotIE: Information Extraction from Meeting Minutes

Jan 08, 2026

Abstract:Municipal meeting minutes record key decisions in local democratic processes. Unlike parliamentary proceedings, which typically adhere to standardized formats, they encode voting outcomes in highly heterogeneous, free-form narrative text that varies widely across municipalities, posing significant challenges for automated extraction. In this paper, we introduce VotIE (Voting Information Extraction), a new information extraction task aimed at identifying structured voting events in narrative deliberative records, and establish the first benchmark for this task using Portuguese municipal minutes, building on the recently introduced CitiLink corpus. Our experiments yield two key findings. First, under standard in-domain evaluation, fine-tuned encoders, specifically XLM-R-CRF, achieve the strongest performance, reaching 93.2% macro F1, outperforming generative approaches. Second, in a cross-municipality setting that evaluates transfer to unseen administrative contexts, these models suffer substantial performance degradation, whereas few-shot LLMs demonstrate greater robustness, with significantly smaller declines in performance. Despite this generalization advantage, the high computational cost of generative models currently constrains their practicality. As a result, lightweight fine-tuned encoders remain a more practical option for large-scale, real-world deployment. To support reproducible research in administrative NLP, we publicly release our benchmark, trained models, and evaluation framework.

SegNSP: Revisiting Next Sentence Prediction for Linear Text Segmentation

Jan 07, 2026

Abstract:Linear text segmentation is a long-standing problem in natural language processing (NLP), focused on dividing continuous text into coherent and semantically meaningful units. Despite its importance, the task remains challenging due to the complexity of defining topic boundaries, the variability in discourse structure, and the need to balance local coherence with global context. These difficulties hinder downstream applications such as summarization, information retrieval, and question answering. In this work, we introduce SegNSP, framing linear text segmentation as a next sentence prediction (NSP) task. Although NSP has largely been abandoned in modern pre-training, its explicit modeling of sentence-to-sentence continuity makes it a natural fit for detecting topic boundaries. We propose a label-agnostic NSP approach, which predicts whether the next sentence continues the current topic without requiring explicit topic labels, and enhance it with a segmentation-aware loss combined with harder negative sampling to better capture discourse continuity. Unlike recent proposals that leverage NSP alongside auxiliary topic classification, our approach avoids task-specific supervision. We evaluate our model against established baselines on two datasets, CitiLink-Minutes, for which we establish the first segmentation benchmark, and WikiSection. On CitiLink-Minutes, SegNSP achieves a B-$F_1$ of 0.79, closely aligning with human-annotated topic transitions, while on WikiSection it attains a B-$F_1$ of 0.65, outperforming the strongest reproducible baseline, TopSeg, by 0.17 absolute points. These results demonstrate competitive and robust performance, highlighting the effectiveness of modeling sentence-to-sentence continuity for improving segmentation quality and supporting downstream NLP applications.
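
The general idea of turning sentence-to-sentence continuity predictions into segments can be sketched as below. This is not the SegNSP model: the continuity probabilities are assumed to come from some NSP-style classifier that is not shown, and the function name `segment`, the `threshold` of 0.5, and the example values are hypothetical.

```python
def segment(sentences, continue_probs, threshold=0.5):
    """Group sentences into segments using pairwise continuity scores.

    continue_probs[i] is an assumed probability that sentences[i + 1]
    continues the topic of sentences[i]; a value below the threshold is
    treated as a topic boundary.
    """
    if not sentences:
        return []
    if len(continue_probs) != len(sentences) - 1:
        raise ValueError("need exactly one probability per adjacent sentence pair")
    segments = [[sentences[0]]]
    for sentence, prob in zip(sentences[1:], continue_probs):
        if prob < threshold:
            segments.append([sentence])    # boundary: start a new segment
        else:
            segments[-1].append(sentence)  # continuation: extend the current one
    return segments


# Hypothetical sentences and scores, for illustration only.
sentences = [
    "The council opened the session.",
    "Attendance was recorded.",
    "The budget proposal was presented.",
    "Members voted on the proposal.",
]
result = segment(sentences, [0.9, 0.2, 0.8])
```

With these scores, the single low value between the second and third sentence produces two segments of two sentences each.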

Establishing a Foundation for Tetun Text Ad-Hoc Retrieval: Indexing, Stemming, Retrieval, and Ranking

Dec 16, 2024

Abstract:Searching for information on the internet and digital platforms to satisfy an information need requires effective retrieval solutions. However, such solutions are not yet available for Tetun, making it challenging to find relevant documents for text-based search queries in this language. To address these challenges, this study investigates Tetun text retrieval with a focus on the ad-hoc retrieval task. It begins by developing essential language resources -- including a list of stopwords, a stemmer, and a test collection -- which serve as foundational components for solutions tailored to Tetun text retrieval. Various strategies are then explored using both document titles and content to evaluate retrieval effectiveness. The results show that retrieving document titles, after removing hyphens and apostrophes without applying stemming, significantly improves retrieval performance compared to the baseline. Efficiency increases by 31.37%, while effectiveness achieves an average gain of 9.40% in MAP@10 and 30.35% in nDCG@10 with DFR BM25. Beyond the top-10 cutoff point, Hiemstra LM demonstrates strong performance across various retrieval strategies and evaluation metrics. Contributions of this work include the development of Labadain-Stopwords (a list of 160 Tetun stopwords), Labadain-Stemmer (a Tetun stemmer with three variants), and Labadain-Avaliadór (a Tetun test collection containing 59 topics, 33,550 documents, and 5,900 qrels).
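
As a reminder of what the two reported effectiveness metrics measure, here is a minimal sketch of average precision and nDCG at a cutoff of 10 for a single topic. The function names, document identifiers, and gain values are hypothetical, and the normalization of AP@k by `min(number of relevant documents, k)` is one common convention, assumed here rather than taken from the paper. MAP@10 is then the mean of the per-topic AP@10 values.

```python
import math


def average_precision_at_k(ranked_ids, relevant_ids, k=10):
    """AP@k for one topic, normalized by min(|relevant|, k) (assumed convention)."""
    hits = 0
    total = 0.0
    for rank, doc_id in enumerate(ranked_ids[:k], start=1):
        if doc_id in relevant_ids:
            hits += 1
            total += hits / rank  # precision at this rank
    denom = min(len(relevant_ids), k)
    return total / denom if denom else 0.0


def ndcg_at_k(ranked_ids, gains, k=10):
    """nDCG@k for one topic, with linear gains and a log2 rank discount."""
    dcg = sum(
        gains.get(doc_id, 0) / math.log2(rank + 1)
        for rank, doc_id in enumerate(ranked_ids[:k], start=1)
    )
    ideal = sorted(gains.values(), reverse=True)[:k]
    idcg = sum(g / math.log2(rank + 1) for rank, g in enumerate(ideal, start=1))
    return dcg / idcg if idcg else 0.0


# Hypothetical run and judgments for a single topic.
ranked = ["d1", "d2", "d3"]
ap = average_precision_at_k(ranked, {"d1", "d3"})
ndcg = ndcg_at_k(ranked, {"d1": 1, "d3": 1})
```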

Exploring Large Language Models for Relevance Judgments in Tetun

Jun 11, 2024

Abstract:The Cranfield paradigm has served as a foundational approach for developing test collections, with relevance judgments typically conducted by human assessors. However, the emergence of large language models (LLMs) has introduced new possibilities for automating these tasks. This paper explores the feasibility of using LLMs to automate relevance assessments, particularly within the context of low-resource languages. In our study, LLMs are employed to automate relevance judgment tasks, by providing a series of query-document pairs in Tetun as the input text. The models are tasked with assigning relevance scores to each pair, where these scores are then compared to those from human annotators to evaluate the inter-annotator agreement levels. Our investigation reveals results that align closely with those reported in studies of high-resource languages.
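
One common way to quantify agreement between two sets of relevance labels is Cohen's kappa, sketched below. The abstract does not state which agreement measure was used, so this choice is an assumption, as are the function name `cohens_kappa` and the example labels.

```python
from collections import Counter


def cohens_kappa(labels_a, labels_b):
    """Cohen's kappa between two equally long sequences of categorical labels."""
    if len(labels_a) != len(labels_b) or not labels_a:
        raise ValueError("label sequences must be non-empty and of equal length")
    n = len(labels_a)
    # Observed agreement: fraction of items with identical labels.
    observed = sum(a == b for a, b in zip(labels_a, labels_b)) / n
    # Chance agreement from each annotator's marginal label distribution.
    count_a, count_b = Counter(labels_a), Counter(labels_b)
    expected = sum(count_a[c] * count_b[c] for c in count_a) / (n * n)
    if expected == 1.0:
        return 1.0  # both annotators used a single identical label throughout
    return (observed - expected) / (1 - expected)


# Hypothetical graded relevance labels for six query-document pairs.
human = [0, 1, 2, 1, 0, 2]
llm = [0, 1, 2, 0, 0, 2]
kappa = cohens_kappa(human, llm)
```

Here the two label sets agree on five of six pairs, with a chance agreement of one third, giving a kappa of 0.75.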

Indexing Portuguese NLP Resources with PT-Pump-Up

Jan 27, 2024

Abstract:The recent advances in natural language processing (NLP) are linked to training processes that require vast amounts of corpora. Access to this data is commonly not a trivial process due to resource dispersion and the need to maintain these infrastructures online and up-to-date. New developments in NLP are often compromised due to the scarcity of data or lack of a shared repository that works as an entry point to the community. This is especially true in low and mid-resource languages, such as Portuguese, which lack data and proper resource management infrastructures. In this work, we propose PT-Pump-Up, a set of tools that aim to reduce resource dispersion and improve the accessibility to Portuguese NLP resources. Our proposal is divided into four software components: a) a web platform to list the available resources; b) a client-side Python package to simplify the loading of Portuguese NLP resources; c) an administrative Python package to manage the platform and d) a public GitHub repository to foster future collaboration and contributions. All four components are accessible using: https://linktr.ee/pt_pump_up

* Demo Track, 3 pages

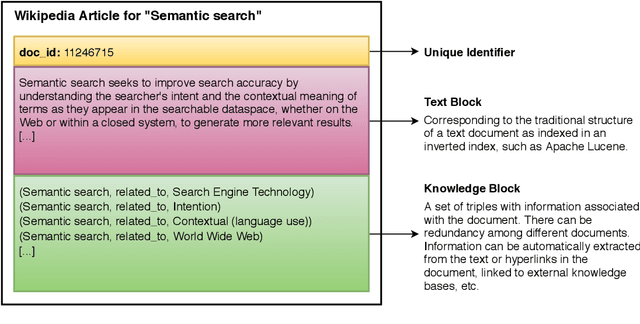

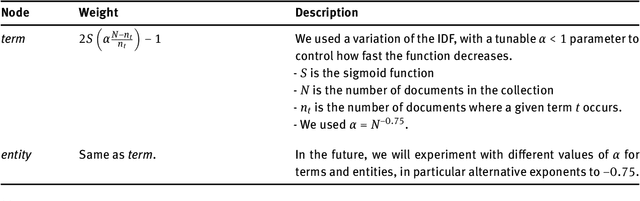

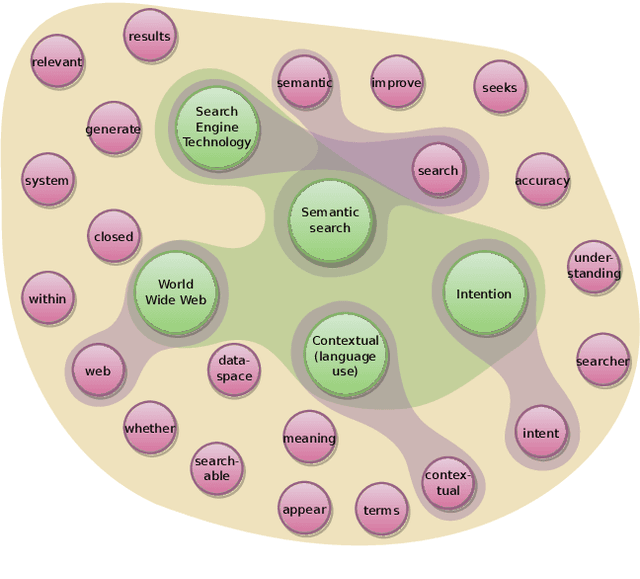

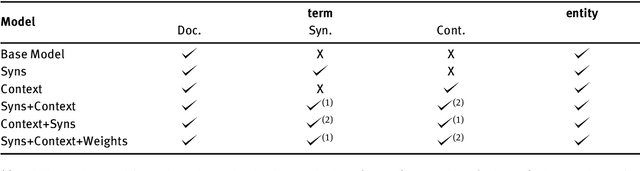

Hypergraph-of-Entity: A General Model for Entity-Oriented Search

Sep 01, 2021

Abstract:The hypergraph-of-entity was conceptually proposed as a general model for entity-oriented search. However, only the performance for ad hoc document retrieval had been assessed. We continue this line of research by also evaluating ad hoc entity retrieval, and entity list completion. We also attempt to scale the model, so that it can support the complete INEX 2009 Wikipedia collection. We do this by indexing the top keywords for each document, reducing complexity by partially lowering the number of nodes and, indirectly, the number of hyperedges linking terms to entities. This enables us to compare the effectiveness of the hypergraph-of-entity with the results obtained by the participants of the INEX tracks for the considered tasks. We find this to be a viable model that is, to our knowledge, the first attempt at a generalization in information retrieval, in particular by supporting a universal ranking function for multiple entity-oriented search tasks.

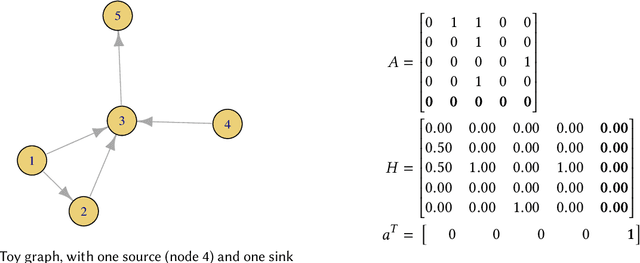

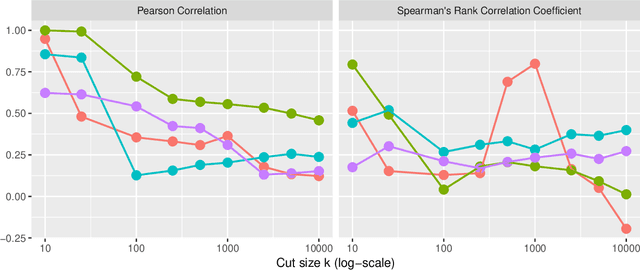

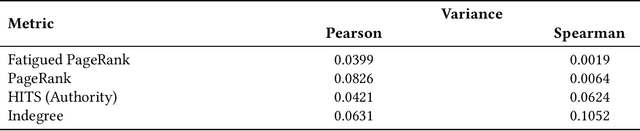

Fatigued PageRank

Apr 14, 2021

Abstract:Connections among entities are everywhere. From social media interactions to web page hyperlinks, networks are frequently used to represent such complex systems. Node ranking is a fundamental task that provides the strategy to identify central entities according to multiple criteria. Popular node ranking metrics include degree, closeness or betweenness centralities, as well as HITS authority or PageRank. In this work, we propose a novel node ranking metric, where we combine PageRank and the idea of node fatigue, in order to model a random explorer who wants to optimize coverage - it gets fatigued and avoids previously visited nodes. We formalize and exemplify the computation of Fatigued PageRank, evaluating it as a node ranking metric, as well as query-independent evidence in ad hoc document retrieval. Based on the Simple English Wikipedia link graph with clickstream transitions from the English Wikipedia, we find that Fatigued PageRank is able to surpass both indegree and HITS authority, but only for the top ranking nodes. On the other hand, based on the TREC Washington Post Corpus, we were unable to outperform the BM25 baseline, obtaining similar performance for all graph-based metrics, except for indegree, which lowered GMAP and MAP, but increased NDCG@10 and P@10.
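
For context, the standard PageRank that this work builds on can be sketched with a short power iteration. The sketch deliberately does not model node fatigue, whose formulation is not given in the abstract. The function name `pagerank`, the damping factor of 0.85, the iteration count, and the toy graph are assumptions for illustration.

```python
def pagerank(graph, damping=0.85, iterations=50):
    """Plain PageRank by power iteration (no fatigue mechanism).

    graph maps every node to a list of its out-neighbours; each neighbour
    must also appear as a key of graph.
    """
    nodes = list(graph)
    n = len(nodes)
    rank = {v: 1.0 / n for v in nodes}
    for _ in range(iterations):
        # Rank held by nodes without out-links is spread uniformly.
        dangling = sum(rank[v] for v in nodes if not graph[v])
        new_rank = {v: (1 - damping) / n + damping * dangling / n for v in nodes}
        for v in nodes:
            for w in graph[v]:
                new_rank[w] += damping * rank[v] / len(graph[v])
        rank = new_rank
    return rank


# Toy link graph (hypothetical): node "d" has no in-links.
toy_graph = {"a": ["b"], "b": ["c"], "c": ["a"], "d": ["a"]}
ranks = pagerank(toy_graph)
```

In this toy graph, node "d" receives only the teleportation share, so it ends up with the lowest score, while "a" benefits from two in-links.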
