Aaron Kline

Stanford University

Ensemble Modeling of Multiple Physical Indicators to Dynamically Phenotype Autism Spectrum Disorder

Aug 23, 2024

Abstract:Early detection of autism, a neurodevelopmental disorder marked by social communication challenges, is crucial for timely intervention. Recent advancements have utilized naturalistic home videos captured via the mobile application GuessWhat. Through interactive games played between children and their guardians, GuessWhat has amassed over 3,000 structured videos from 382 children, both with and without an Autism Spectrum Disorder (ASD) diagnosis. This collection provides a robust dataset for training computer vision models to detect ASD-related phenotypic markers, including variations in emotional expression, eye contact, and head movements. We have developed a protocol to curate high-quality videos from this dataset, forming a comprehensive training set. Utilizing this set, we trained individual LSTM-based models using eye gaze, head positions, and facial landmarks as input features, achieving test AUCs of 86%, 67%, and 78%, respectively. To boost diagnostic accuracy, we applied late fusion techniques to create ensemble models, improving the overall AUC to 90%. This approach also yielded more equitable results across different genders and age groups. Our methodology offers a significant step forward in the early detection of ASD by potentially reducing the reliance on subjective assessments and making early identification more accessible and equitable.
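The abstract does not say which late fusion rule was used, so the following is only a minimal sketch of one common variant: a (optionally weighted) average of the per-video probabilities produced by each single-modality model. The function name `late_fusion`, the model keys, and all scores are hypothetical and are not taken from the paper.

```python
from typing import Dict, List, Optional


def late_fusion(
    probs_by_model: Dict[str, List[float]],
    weights: Optional[Dict[str, float]] = None,
) -> List[float]:
    """Fuse per-video probabilities from several models by weighted averaging."""
    names = list(probs_by_model)
    if not names:
        raise ValueError("at least one model is required")
    n_videos = len(probs_by_model[names[0]])
    if any(len(probs_by_model[name]) != n_videos for name in names):
        raise ValueError("all models must score the same videos")
    if weights is None:
        # Assumption: with no weights given, every model counts equally.
        weights = {name: 1.0 for name in names}
    total = sum(weights[name] for name in names)
    return [
        sum(weights[name] * probs_by_model[name][i] for name in names) / total
        for i in range(n_videos)
    ]


# Hypothetical ASD probabilities for three videos, one list per modality.
scores = {
    "eye_gaze": [0.9, 0.2, 0.6],
    "head_pose": [0.6, 0.4, 0.3],
    "facial_landmarks": [0.75, 0.3, 0.6],
}
fused = late_fusion(scores)
```

Because fusion happens on model outputs rather than on input features, each modality's model can be trained and validated on its own, and a weaker modality can be down-weighted through `weights` without retraining anything.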

An Exploration of Active Learning for Affective Digital Phenotyping

Apr 06, 2022

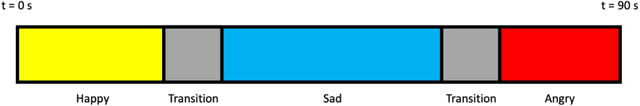

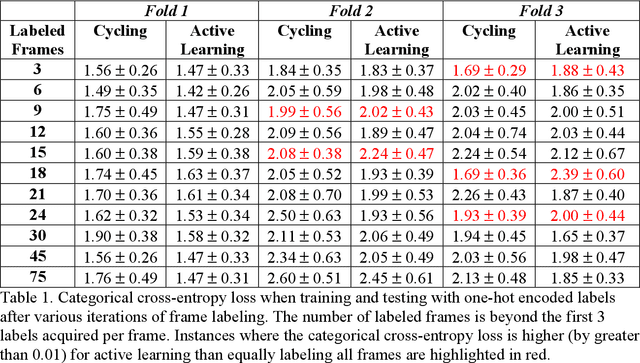

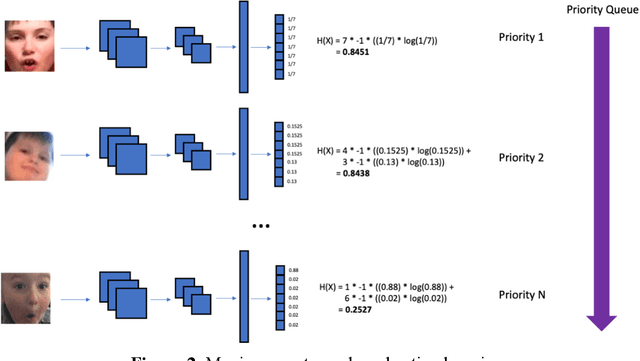

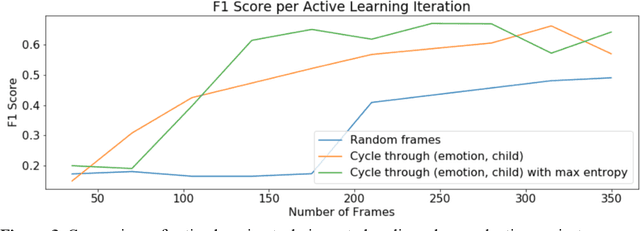

Abstract:Some of the most severe bottlenecks preventing widespread development of machine learning models for human behavior include a dearth of labeled training data and difficulty of acquiring high quality labels. Active learning is a paradigm for using algorithms to computationally select a useful subset of data points to label using metrics for model uncertainty and data similarity. We explore active learning for naturalistic computer vision emotion data, a particularly heterogeneous and complex data space due to inherently subjective labels. Using frames collected from gameplay acquired from a therapeutic smartphone game for children with autism, we run a simulation of active learning using gameplay prompts as metadata to aid in the active learning process. We find that active learning using information generated during gameplay slightly outperforms random selection of the same number of labeled frames. We next investigate a method to conduct active learning with subjective data, such as in affective computing, and where multiple crowdsourced labels can be acquired for each image. Using the Child Affective Facial Expression (CAFE) dataset, we simulate an active learning process for crowdsourcing many labels and find that prioritizing frames using the entropy of the crowdsourced label distribution results in lower categorical cross-entropy loss compared to random frame selection. Collectively, these results demonstrate pilot evaluations of two novel active learning approaches for subjective affective data collected in noisy settings.
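The entropy-based prioritization described above can be sketched as follows. This is an illustration under stated assumptions, not the authors' implementation: it assumes Shannon entropy over the label counts each frame has received so far, and it assumes that the most ambiguous (highest-entropy) frames are sent for further labeling first. The frame ids and vote counts are invented.

```python
import math
from typing import Dict, List


def label_entropy(label_counts: Dict[str, int]) -> float:
    """Shannon entropy, in bits, of one frame's crowdsourced label counts."""
    total = sum(label_counts.values())
    if total == 0:
        raise ValueError("frame has no labels")
    entropy = 0.0
    for count in label_counts.values():
        if count > 0:
            p = count / total
            entropy -= p * math.log2(p)
    return entropy


def prioritize_frames(crowd_labels: Dict[str, Dict[str, int]]) -> List[str]:
    """Return frame ids ordered from highest to lowest label entropy."""
    return sorted(
        crowd_labels,
        key=lambda frame_id: label_entropy(crowd_labels[frame_id]),
        reverse=True,
    )


# Hypothetical label counts collected so far for three frames.
crowd_labels = {
    "frame_a": {"happy": 10},                 # raters fully agree
    "frame_b": {"happy": 5, "surprised": 5},  # raters evenly split
    "frame_c": {"sad": 6, "angry": 2},        # raters partly agree
}
queue = prioritize_frames(crowd_labels)
```

In this toy example the evenly split frame is queued first and the unanimous frame last, which is the behavior that distinguishes this strategy from random frame selection.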

Challenges and Opportunities for Machine Learning Classification of Behavior and Mental State from Images

Jan 26, 2022

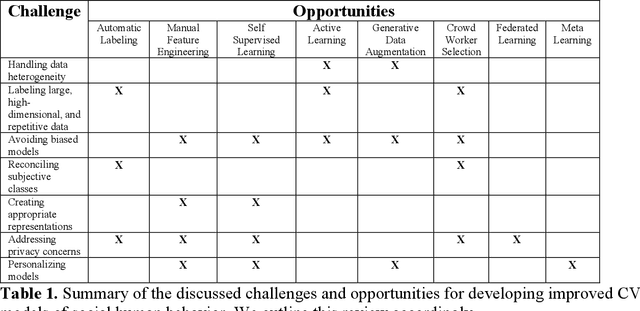

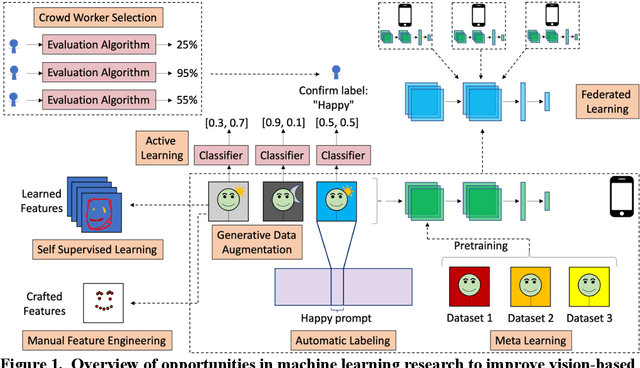

Abstract:Computer Vision (CV) classifiers which distinguish and detect nonverbal social human behavior and mental state can aid digital diagnostics and therapeutics for psychiatry and the behavioral sciences. While CV classifiers for traditional and structured classification tasks can be developed with standard machine learning pipelines for supervised learning consisting of data labeling, preprocessing, and training a convolutional neural network, there are several pain points which arise when attempting this process for behavioral phenotyping. Here, we discuss the challenges and corresponding opportunities in this space, including handling heterogeneous data, avoiding biased models, labeling massive and repetitive data sets, working with ambiguous or compound class labels, managing privacy concerns, creating appropriate representations, and personalizing models. We discuss current state-of-the-art research endeavors in CV such as data curation, data augmentation, crowdsourced labeling, active learning, reinforcement learning, generative models, representation learning, federated learning, and meta-learning. We highlight at least some of the machine learning advancements needed for imaging classifiers to detect human social cues successfully and reliably.

Classifying Autism from Crowdsourced Semi-Structured Speech Recordings: A Machine Learning Approach

Jan 04, 2022

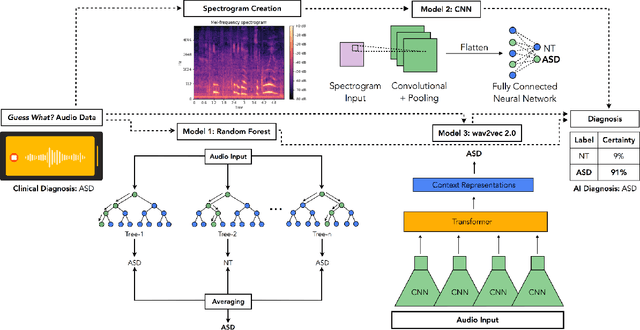

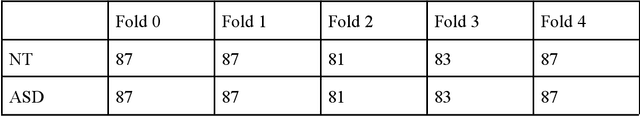

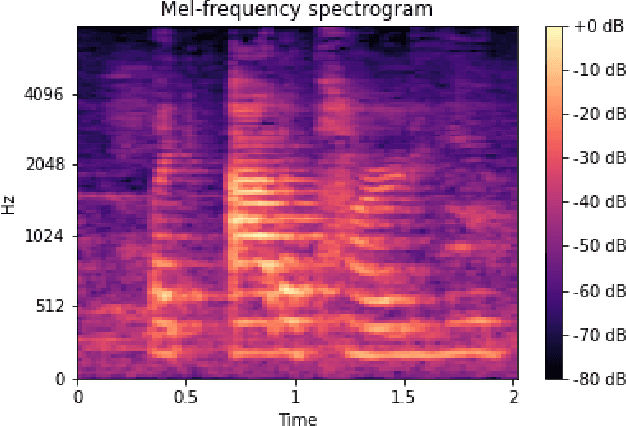

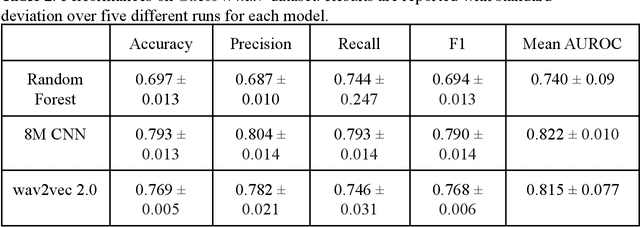

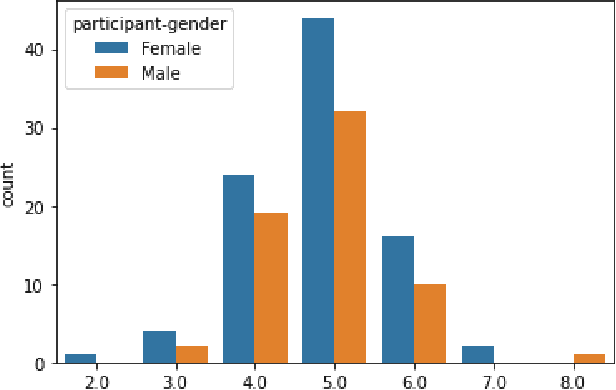

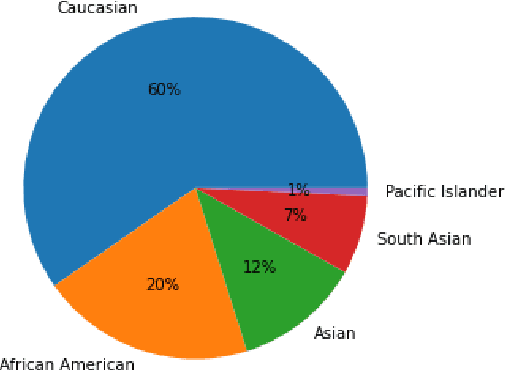

Abstract:Autism spectrum disorder (ASD) is a neurodevelopmental disorder which results in altered behavior, social development, and communication patterns. In past years, autism prevalence has tripled, with 1 in 54 children now affected. Given that traditional diagnosis is a lengthy, labor-intensive process, significant attention has been given to developing systems that automatically screen for autism. Prosody abnormalities are among the clearest signs of autism, with affected children displaying speech idiosyncrasies including echolalia, monotonous intonation, atypical pitch, and irregular linguistic stress patterns. In this work, we present a suite of machine learning approaches to detect autism in self-recorded speech audio captured from autistic and neurotypical (NT) children in home environments. We consider three methods to detect autism in child speech: first, Random Forests trained on extracted audio features (including Mel-frequency cepstral coefficients); second, convolutional neural networks (CNNs) trained on spectrograms; and third, fine-tuned wav2vec 2.0--a state-of-the-art Transformer-based ASR model. We train our classifiers on our novel dataset of cellphone-recorded child speech audio curated from Stanford's Guess What? mobile game, an app designed to crowdsource videos of autistic and neurotypical children in a natural home environment. The Random Forest classifier achieves 70% accuracy, the fine-tuned wav2vec 2.0 model achieves 77% accuracy, and the CNN achieves 79% accuracy when classifying children's audio as either ASD or NT. Our models were able to predict autism status when training on a varied selection of home audio clips with inconsistent recording quality, which may be more generalizable to real world conditions. These results demonstrate that machine learning methods offer promise in detecting autism automatically from speech without specialized equipment.

Training and Profiling a Pediatric Emotion Recognition Classifier on Mobile Devices

Aug 22, 2021

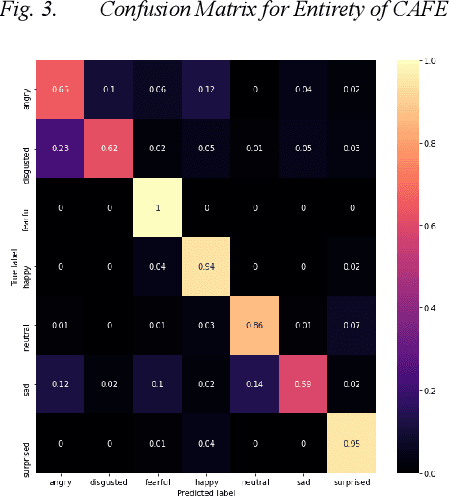

Abstract:Implementing automated emotion recognition on mobile devices could provide an accessible diagnostic and therapeutic tool for those who struggle to recognize emotion, including children with developmental behavioral conditions such as autism. Although recent advances have been made in building more accurate emotion classifiers, existing models are too computationally expensive to be deployed on mobile devices. In this study, we optimized and profiled various machine learning models designed for inference on edge devices and were able to match previous state of the art results for emotion recognition on children. Our best model, a MobileNet-V2 network pre-trained on ImageNet, achieved 65.11% balanced accuracy and 64.19% F1-score on CAFE, while achieving a 45-millisecond inference latency on a Motorola Moto G6 phone. This balanced accuracy is only 1.79% less than the current state of the art for CAFE, which used a model that contains 26.62x more parameters and was unable to run on the Moto G6, even when fully optimized. This work validates that with specialized design and optimization techniques, machine learning models can become lightweight enough for deployment on mobile devices and still achieve high accuracies on difficult image classification tasks.
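The abstract reports balanced accuracy without defining it. Assuming the usual definition (the unweighted mean of per-class recall), a minimal sketch is given below. The labels are made up, and the helper `balanced_accuracy` is illustrative rather than code from the study.

```python
from collections import defaultdict
from typing import Dict, Hashable, Sequence


def balanced_accuracy(y_true: Sequence[Hashable], y_pred: Sequence[Hashable]) -> float:
    """Mean of per-class recall, so every class counts equally."""
    if len(y_true) != len(y_pred) or not y_true:
        raise ValueError("y_true and y_pred must be non-empty and equally long")
    correct: Dict[Hashable, int] = defaultdict(int)
    total: Dict[Hashable, int] = defaultdict(int)
    for truth, pred in zip(y_true, y_pred):
        total[truth] += 1
        if truth == pred:
            correct[truth] += 1
    recalls = [correct[label] / total[label] for label in total]
    return sum(recalls) / len(recalls)


# Hypothetical imbalanced test set: a model that always predicts "neutral".
y_true = ["neutral"] * 8 + ["fearful"] * 2
y_pred = ["neutral"] * 10
plain = sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)
balanced = balanced_accuracy(y_true, y_pred)
```

Here plain accuracy is 0.8 while balanced accuracy is 0.5, which shows why the balanced metric is the more informative one when some emotion classes have far fewer images than others.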

Activity Recognition with Moving Cameras and Few Training Examples: Applications for Detection of Autism-Related Headbanging

Jan 10, 2021

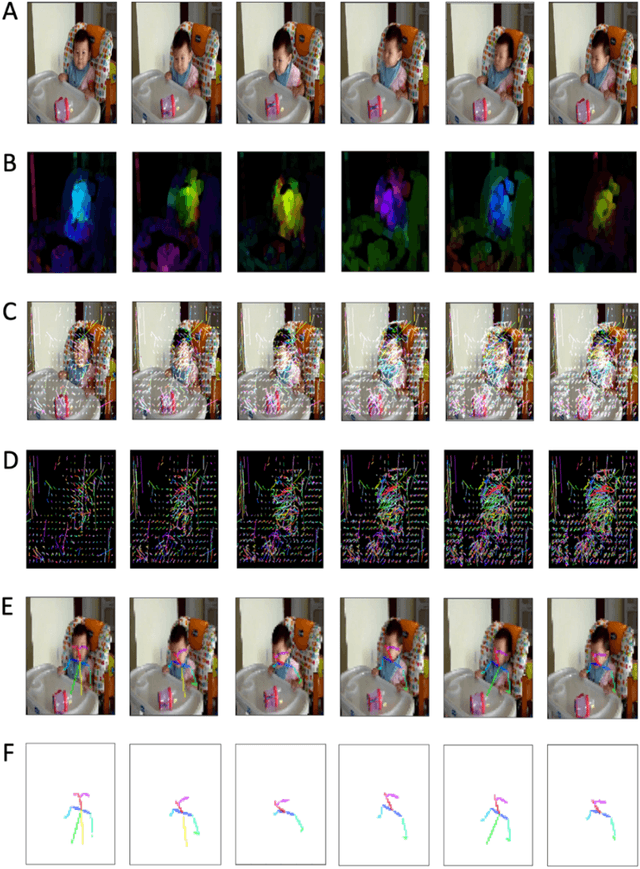

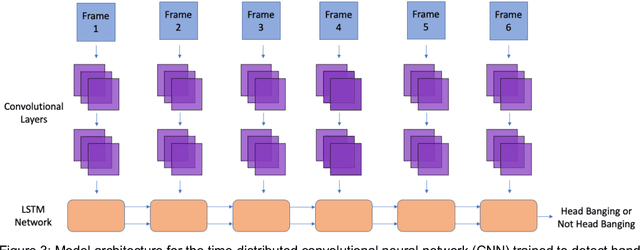

Abstract:Activity recognition computer vision algorithms can be used to detect the presence of autism-related behaviors, including what are termed "restricted and repetitive behaviors", or stimming, by diagnostic instruments. The limited data that exist in this domain are usually recorded with a handheld camera which can be shaky or even moving, posing a challenge for traditional feature representation approaches for activity detection which mistakenly capture the camera's motion as a feature. To address these issues, we first document the advantages and limitations of current feature representation techniques for activity recognition when applied to head banging detection. We then propose a feature representation consisting exclusively of head pose keypoints. We create a computer vision classifier for detecting head banging in home videos using a time-distributed convolutional neural network (CNN) in which a single CNN extracts features from each frame in the input sequence, and these extracted features are fed as input to a long short-term memory (LSTM) network. On the binary task of predicting head banging and no head banging within videos from the Self Stimulatory Behaviour Dataset (SSBD), we reach a mean F1-score of 90.77% using 3-fold cross validation (with individual fold F1-scores of 83.3%, 89.0%, and 100.0%) when ensuring that no child who appeared in the train set was in the test set for all folds. This work documents a successful technique for training a computer vision classifier which can detect human motion with few training examples and even when the camera recording the source clips is unstable. The general methods described here can be applied by designers and developers of interactive systems towards other human motion and pose classification problems used in mobile and ubiquitous interactive systems.
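The evaluation constraint described above, that no child appears in both the train and test sets of any fold, can be sketched as a child-level split. The round-robin assignment of children to folds, the function name `child_disjoint_folds`, and the video and child ids are all assumptions made for illustration. The abstract does not describe how the folds were actually built.

```python
from typing import Dict, List, Tuple


def child_disjoint_folds(
    video_to_child: Dict[str, str], n_folds: int = 3
) -> List[Tuple[List[str], List[str]]]:
    """Split videos into (train, test) folds so each child's videos stay together."""
    children = sorted(set(video_to_child.values()))
    if len(children) < n_folds:
        raise ValueError("need at least as many children as folds")
    # Assumption: children are assigned to folds round-robin in sorted order.
    fold_of_child = {child: i % n_folds for i, child in enumerate(children)}
    folds = []
    for k in range(n_folds):
        test = sorted(v for v, c in video_to_child.items() if fold_of_child[c] == k)
        train = sorted(v for v, c in video_to_child.items() if fold_of_child[c] != k)
        folds.append((train, test))
    return folds


# Hypothetical mapping from video clip to the child who appears in it.
video_to_child = {
    "clip_01": "child_a",
    "clip_02": "child_a",
    "clip_03": "child_b",
    "clip_04": "child_c",
    "clip_05": "child_c",
    "clip_06": "child_d",
}
folds = child_disjoint_folds(video_to_child, n_folds=3)
```

Splitting by child rather than by clip prevents a classifier from scoring well merely by recognizing a child it has already seen, which matters when a small dataset contains several clips of the same child.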

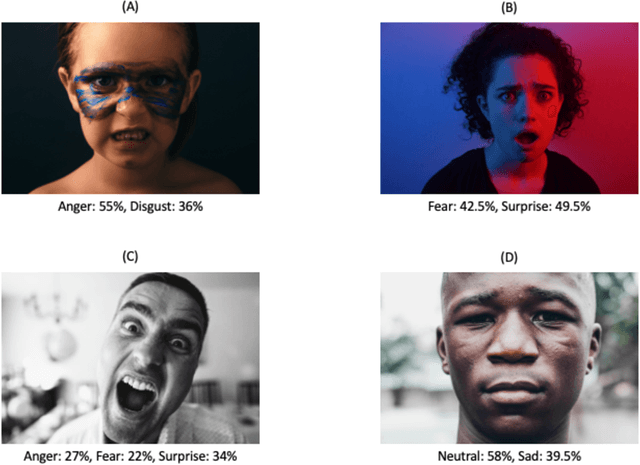

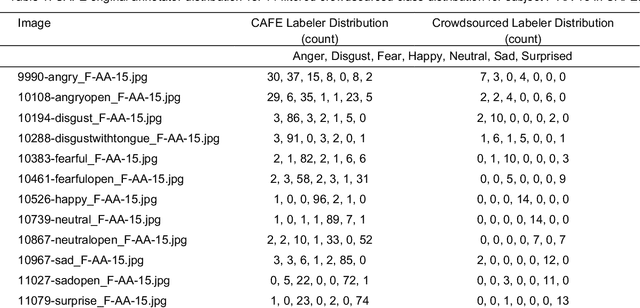

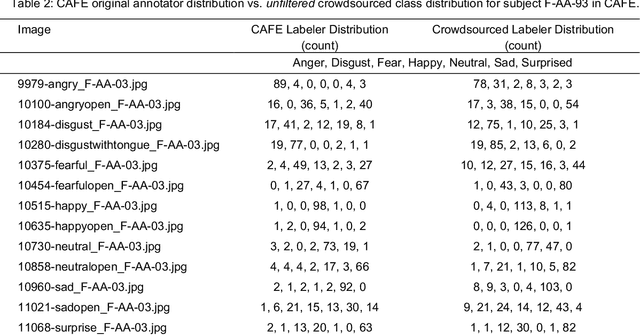

Using Crowdsourcing to Train Facial Emotion Machine Learning Models with Ambiguous Labels

Jan 10, 2021

Abstract:Current emotion detection classifiers predict discrete emotions. However, literature in psychology has documented that compound and ambiguous facial expressions are often evoked by humans. As a stride towards development of machine learning models that more accurately reflect compound and ambiguous emotions, we replace traditional one-hot encoded label representations with a crowd's distribution of labels. We center our study on the Child Affective Facial Expression (CAFE) dataset, a gold standard dataset of pediatric facial expressions which includes 100 human labels per image. We first acquire crowdsourced labels for 207 emotions from CAFE and demonstrate that the consensus labels from the crowd tend to match the consensus from the original CAFE raters, validating the utility of crowdsourcing. We then train two versions of a ResNet-152 classifier on CAFE images with two types of labels: (1) traditional one-hot encoding and (2) vector labels representing the crowd distribution of responses. We compare the resulting output distributions of the two classifiers. While the traditional F1-score for the one-hot encoding classifier is much higher (94.33% vs. 78.68%), the output probability vector of the crowd-trained classifier much more closely resembles the distribution of human labels (t=3.2827, p=0.0014). For many applications of affective computing, reporting an emotion probability distribution that more closely resembles human interpretation can be more important than traditional machine learning metrics. This work is a first step for engineers of interactive systems to account for machine learning cases with ambiguous classes, and we hope it will generate a discussion about machine learning with ambiguous labels and leveraging crowdsourcing as a potential solution.
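The difference between the two label representations can be illustrated with a short sketch. The class list, the votes, and the helper names are assumptions for illustration. The abstract does not give the exact class set or the loss used for training, so categorical cross-entropy against the target vector is assumed here.

```python
import math
from collections import Counter
from typing import List, Sequence

# Assumed class set for illustration only.
EMOTIONS = ["happy", "sad", "surprised", "fearful", "angry", "disgust", "neutral"]


def one_hot_label(votes: Sequence[str], classes: Sequence[str]) -> List[float]:
    """Traditional target: 1.0 for the crowd's most common label, 0.0 elsewhere."""
    winner = Counter(votes).most_common(1)[0][0]
    return [1.0 if c == winner else 0.0 for c in classes]


def crowd_label(votes: Sequence[str], classes: Sequence[str]) -> List[float]:
    """Soft target: the fraction of raters who chose each class."""
    counts = Counter(votes)
    return [counts[c] / len(votes) for c in classes]


def cross_entropy(target: Sequence[float], predicted: Sequence[float]) -> float:
    """Categorical cross-entropy between a target vector and a prediction."""
    eps = 1e-12  # guards against log(0)
    return -sum(t * math.log(max(p, eps)) for t, p in zip(target, predicted))


# Hypothetical image on which ten raters split between two emotions.
votes = ["surprised"] * 6 + ["fearful"] * 4
hard = one_hot_label(votes, EMOTIONS)
soft = crowd_label(votes, EMOTIONS)

# A prediction that mirrors the crowd is penalized less under the soft
# target than a fully confident "surprised" prediction.
loss_mirror = cross_entropy(soft, soft)
loss_confident = cross_entropy(soft, hard)
```

Under the one-hot target the 40% of raters who chose "fearful" are discarded, whereas the soft target keeps that ambiguity and rewards a classifier whose output distribution resembles it.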

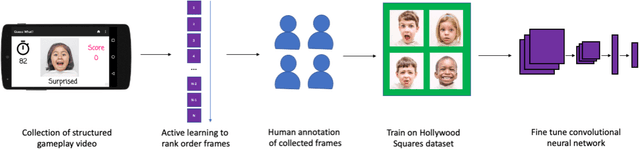

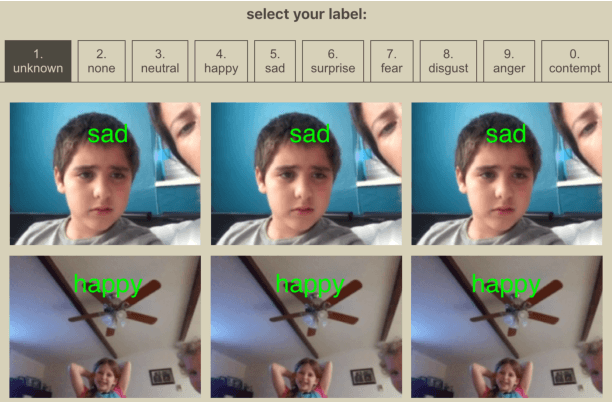

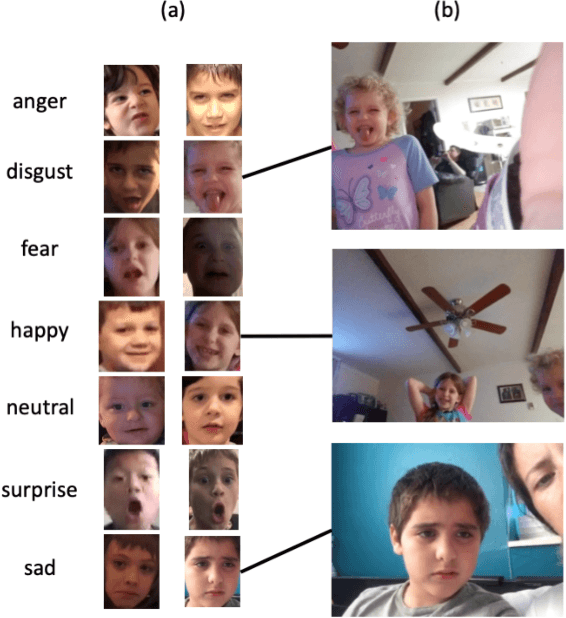

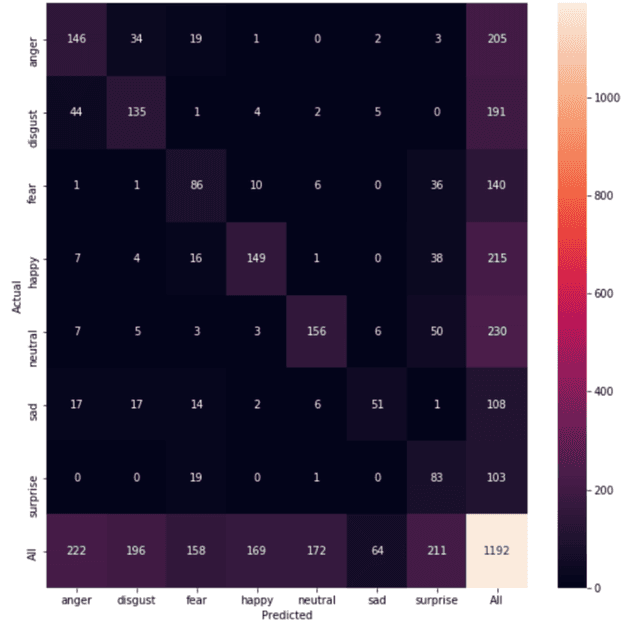

Training an Emotion Detection Classifier using Frames from a Mobile Therapeutic Game for Children with Developmental Disorders

Dec 16, 2020

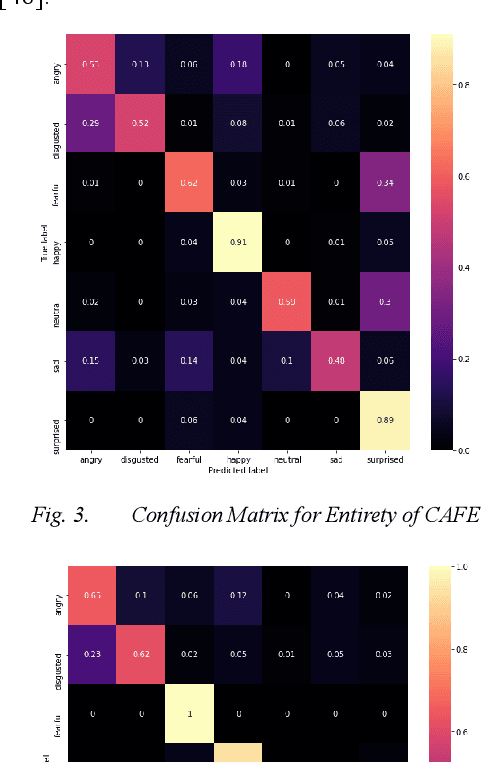

Abstract:Automated emotion classification could aid those who struggle to recognize emotion, including children with developmental behavioral conditions such as autism. However, most computer vision emotion models are trained on adult affect and therefore underperform on child faces. In this study, we designed a strategy to gamify the collection and the labeling of child affect data in an effort to boost the performance of automatic child emotion detection to a level closer to what will be needed for translational digital healthcare. We leveraged our therapeutic smartphone game, GuessWhat, which was designed in large part for children with developmental and behavioral conditions, to gamify the secure collection of video data of children expressing a variety of emotions prompted by the game. Through a secure web interface gamifying the human labeling effort, we gathered and labeled 2,155 videos, 39,968 emotion frames, and 106,001 labels on all images. With this drastically expanded pediatric emotion-centric database (>30x larger than existing public pediatric affect datasets), we trained a pediatric emotion classification convolutional neural network (CNN) classifier of happy, sad, surprised, fearful, angry, disgust, and neutral expressions in children. The classifier achieved 66.9% balanced accuracy and 67.4% F1-score on the entirety of CAFE as well as 79.1% balanced accuracy and 78.0% F1-score on CAFE Subset A, a subset containing at least 60% human agreement on emotion labels. This performance is at least 10% higher than all previously published classifiers, the best of which reached 56.% balanced accuracy even when combining "anger" and "disgust" into a single class. This work validates that mobile games designed for pediatric therapies can generate high volumes of domain-relevant datasets to train state-of-the-art classifiers to perform tasks highly relevant to precision health efforts.

A Wearable Social Interaction Aid for Children with Autism

Apr 19, 2020

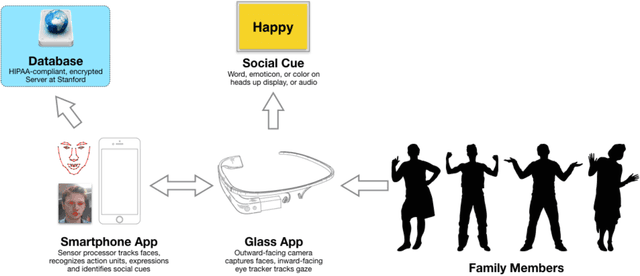

Abstract:With the most recent estimates giving an incidence rate of 1 in 68 children in the United States, autism spectrum disorder (ASD) is a growing public health crisis. Many of these children struggle to make eye contact, recognize facial expressions, and engage in social interactions. Today the standard for treatment of the core autism-related deficits focuses on a form of behavior training known as Applied Behavioral Analysis (ABA). To address perceived deficits in expression recognition, ABA approaches routinely involve the use of prompts such as flash cards for repetitive emotion recognition training via memorization. These techniques must be administered by trained practitioners, often at clinical centers that are far outnumbered by, and out of reach of, the many children and families in need of attention. Waitlists for access are up to 18 months long, and this wait may lead to children regressing down a path of isolation that worsens their long-term prognosis. There is an urgent need to innovate new methods of care delivery that can appropriately empower caregivers of children at risk or with a diagnosis of autism, and that capitalize on mobile tools and wearable devices for use outside of clinical settings.
