Agnik Banerjee

Training and Profiling a Pediatric Emotion Recognition Classifier on Mobile Devices

Aug 22, 2021

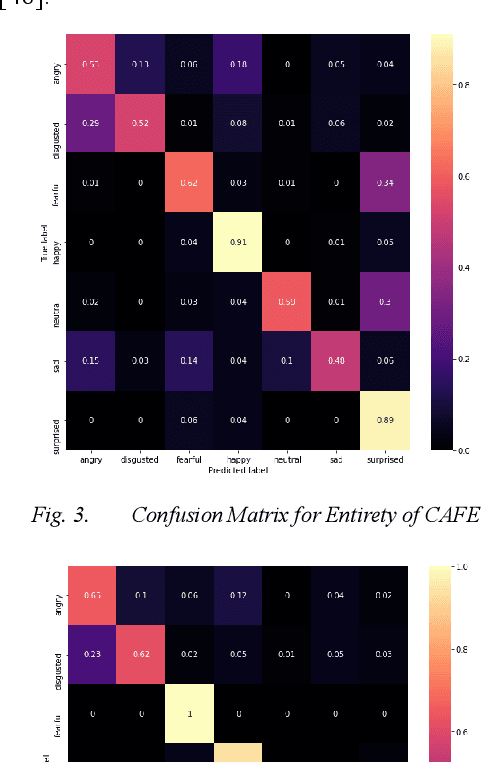

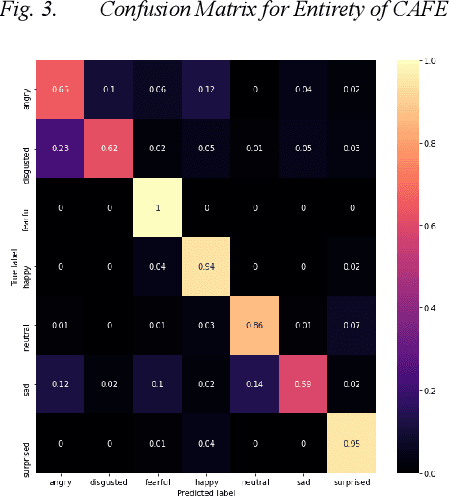

Abstract:Implementing automated emotion recognition on mobile devices could provide an accessible diagnostic and therapeutic tool for those who struggle to recognize emotion, including children with developmental behavioral conditions such as autism. Although recent advances have been made in building more accurate emotion classifiers, existing models are too computationally expensive to be deployed on mobile devices. In this study, we optimized and profiled various machine learning models designed for inference on edge devices and were able to match previous state of the art results for emotion recognition on children. Our best model, a MobileNet-V2 network pre-trained on ImageNet, achieved 65.11% balanced accuracy and 64.19% F1-score on CAFE, while achieving a 45-millisecond inference latency on a Motorola Moto G6 phone. This balanced accuracy is only 1.79% less than the current state of the art for CAFE, which used a model that contains 26.62x more parameters and was unable to run on the Moto G6, even when fully optimized. This work validates that with specialized design and optimization techniques, machine learning models can become lightweight enough for deployment on mobile devices and still achieve high accuracies on difficult image classification tasks.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge