A. N. Rajagopalan

NTIRE 2025 Challenge on Day and Night Raindrop Removal for Dual-Focused Images: Methods and Results

Apr 19, 2025

Abstract: This paper reviews the NTIRE 2025 Challenge on Day and Night Raindrop Removal for Dual-Focused Images. This challenge received a wide range of impressive solutions, which were developed and evaluated using our collected real-world Raindrop Clarity dataset. Unlike existing deraining datasets, our Raindrop Clarity dataset is more diverse and challenging in degradation types and contents, covering day raindrop-focused, day background-focused, night raindrop-focused, and night background-focused degradations. This dataset is divided into three subsets for competition: 14,139 images for training, 240 images for validation, and 731 images for testing. The primary objective of this challenge is to establish a new and powerful benchmark for the task of removing raindrops under varying lighting and focus conditions. The competition drew a total of 361 participants, and 32 teams submitted valid solutions and fact sheets for the final testing phase. These submissions achieved state-of-the-art (SOTA) performance on the Raindrop Clarity dataset. The project can be found at https://lixinustc.github.io/CVPR-NTIRE2025-RainDrop-Competition.github.io/.

PNeRV: A Polynomial Neural Representation for Videos

Jun 27, 2024

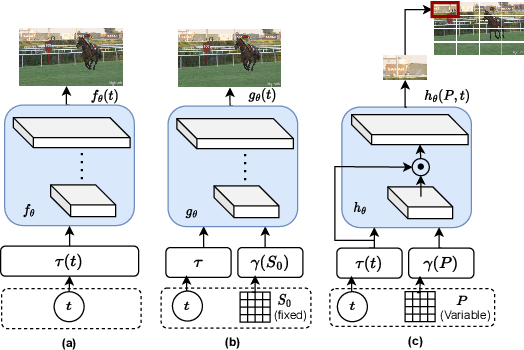

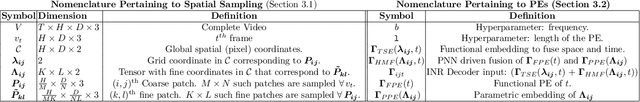

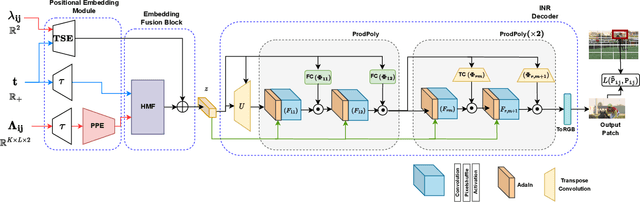

Abstract: Extracting Implicit Neural Representations (INRs) on video data poses unique challenges due to the additional temporal dimension. In the context of videos, INRs have predominantly relied on a frame-only parameterization, which sacrifices the spatiotemporal continuity observed in pixel-level (spatial) representations. To mitigate this, we introduce Polynomial Neural Representation for Videos (PNeRV), a parameter-wise efficient, patch-wise INR for videos that preserves spatiotemporal continuity. PNeRV leverages the modeling capabilities of Polynomial Neural Networks to perform the modulation of a continuous spatial (patch) signal with a continuous time (frame) signal. We further propose a custom Hierarchical Patch-wise Spatial Sampling Scheme that ensures spatial continuity while retaining parameter efficiency. We also employ a carefully designed Positional Embedding methodology to further enhance PNeRV's performance. Our extensive experimentation demonstrates that PNeRV outperforms the baselines in conventional Implicit Neural Representation tasks such as compression, as well as in downstream applications that require spatiotemporal continuity in the underlying representation. PNeRV not only addresses the challenges posed by video data in the realm of INRs but also opens new avenues for advanced video processing and analysis.

Spatially-Attentive Patch-Hierarchical Network with Adaptive Sampling for Motion Deblurring

Feb 09, 2024

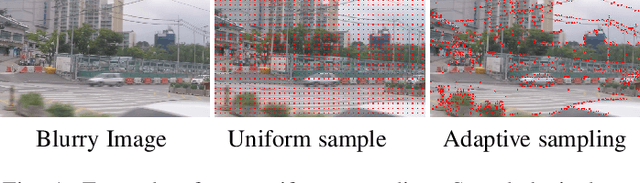

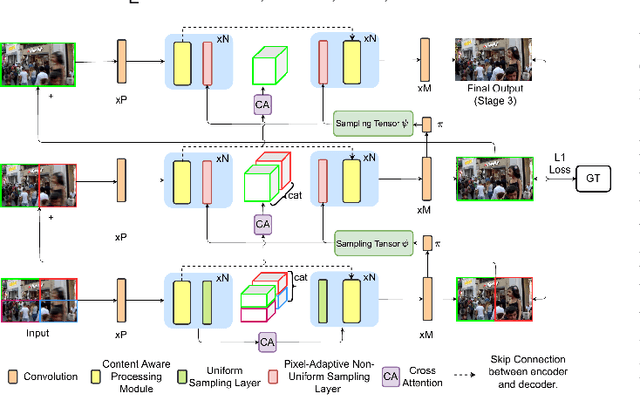

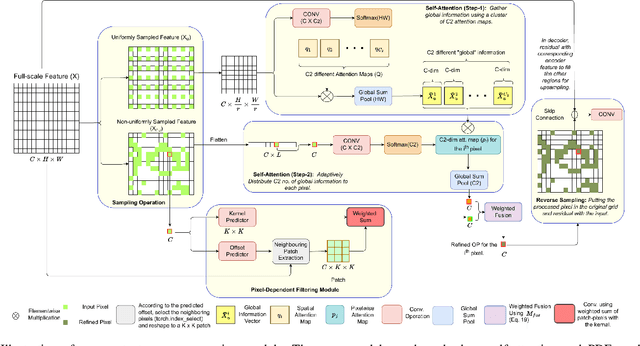

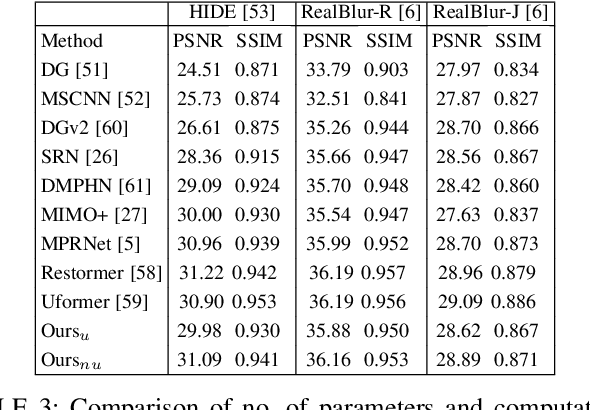

Abstract: This paper tackles the problem of motion deblurring of dynamic scenes. Although end-to-end fully convolutional designs have recently advanced the state-of-the-art in non-uniform motion deblurring, their performance-complexity trade-off is still sub-optimal. Most existing approaches achieve a large receptive field by increasing the number of generic convolution layers and kernel size. In this work, we propose a pixel-adaptive and feature-attentive design for handling large blur variations across different spatial locations and processing each test image adaptively. We design a content-aware global-local filtering module that significantly improves performance not only by considering global dependencies but also by dynamically exploiting neighboring pixel information. We further introduce a pixel-adaptive non-uniform sampling strategy that implicitly discovers the difficult-to-restore regions present in the image and, in turn, performs fine-grained refinement in a progressive manner. Extensive qualitative and quantitative comparisons with prior art on deblurring benchmarks demonstrate that our approach performs favorably against the state-of-the-art deblurring algorithms.

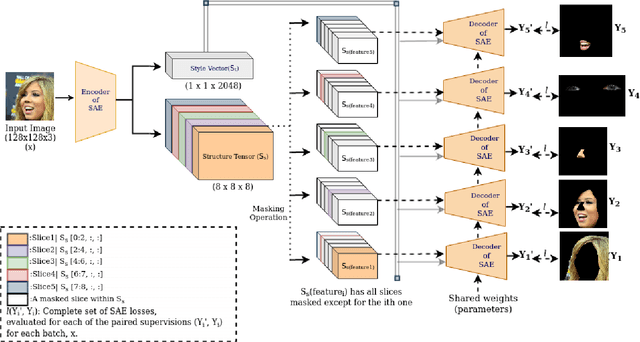

Latents2Semantics: Leveraging the Latent Space of Generative Models for Localized Style Manipulation of Face Images

Dec 22, 2023

Abstract: With the metaverse slowly becoming a reality and given the rapid pace of developments toward the creation of digital humans, the need for a principled style editing pipeline for human faces is bound to increase manifold. We cater to this need by introducing the Latents2Semantics Autoencoder (L2SAE), a Generative Autoencoder model that facilitates highly localized editing of style attributes of several Regions of Interest (ROIs) in face images. The L2SAE learns separate latent representations for encoded images' structure and style information, thus allowing for structure-preserving style editing of the chosen ROIs. The encoded structure representation is a multichannel 2D tensor with reduced spatial dimensions, which captures both local and global structure properties. The style representation is a 1D tensor that captures global style attributes. In our framework, we slice the structure representation to build strong and disentangled correspondences with different ROIs. Consequently, style editing of the chosen ROIs amounts to a simple combination of (a) the ROI-mask generated from the sliced structure representation and (b) the decoded image with global style changes, generated from the manipulated (using Gaussian noise) global style and unchanged structure tensor. Style editing sans additional human supervision is a significant win over SOTA style editing pipelines because most existing works require additional human effort (supervision) post-training for attributing semantic meaning to style edits. We also do away with iterative-optimization-based inversion or determining controllable latent directions post-training, which requires additional computationally expensive operations. We provide qualitative and quantitative results for the same over multiple applications, such as selective style editing and swapping using test images sampled from several datasets.
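
The "simple combination" of an ROI mask with a globally restyled image can be illustrated with a minimal sketch. The abstract does not state the exact blending rule, so the per-pixel alpha compositing below is an assumption, and the function name `composite_roi_edit` and the toy arrays are hypothetical stand-ins for the model's mask and decoder outputs.

```python
import numpy as np


def composite_roi_edit(original, globally_edited, roi_mask):
    """Keep the restyled pixels inside the ROI and the original pixels elsewhere.

    Assumed blending rule (not taken from the paper):
        out = mask * globally_edited + (1 - mask) * original
    """
    mask = np.clip(roi_mask.astype(np.float32), 0.0, 1.0)
    if mask.ndim == 2:
        # Add a channel axis so an (H, W) mask broadcasts over (H, W, C) images.
        mask = mask[..., None]
    return mask * globally_edited + (1.0 - mask) * original


# Toy stand-ins: a black "original", a white "globally restyled" image,
# and a 2x2 ROI in the centre of a 4x4 frame.
original = np.zeros((4, 4, 3), dtype=np.float32)
edited = np.ones((4, 4, 3), dtype=np.float32)
mask = np.zeros((4, 4), dtype=np.float32)
mask[1:3, 1:3] = 1.0

result = composite_roi_edit(original, edited, mask)
```

In this toy case only the four ROI pixels take the restyled values; a soft mask with values between 0 and 1 would blend the two images at the ROI boundary.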

Zero shot framework for satellite image restoration

Jun 05, 2023

Abstract: Satellite images are typically subject to multiple distortions. Different factors affect the quality of satellite images, including changes in atmosphere, surface reflectance, sun illumination, viewing geometries, etc., limiting their application to downstream tasks. In supervised networks, the availability of paired datasets is a strong assumption. Consequently, many unsupervised algorithms have been proposed to address this problem. These methods synthetically generate a large dataset of degraded images using image formation models. A neural network is then trained with an adversarial loss to discriminate between images from distorted and clean domains. However, these methods yield suboptimal performance when tested on real images that do not necessarily conform to the generation mechanism. Also, they require a large amount of training data and are rendered unsuitable when only a few images are available. We propose a distortion disentanglement and knowledge distillation framework for satellite image restoration to address these important issues. Our algorithm requires only two images: the distorted satellite image to be restored and a reference image with similar semantics. Specifically, we first propose a mechanism to disentangle distortion. This enables us to generate images with varying degrees of distortion using the disentangled distortion and the reference image. We then propose the use of knowledge distillation to train a restoration network using the generated image pairs. As a final step, the distorted image is passed through the restoration network to get the final output. Ablation studies show that our proposed mechanism successfully disentangles distortion.

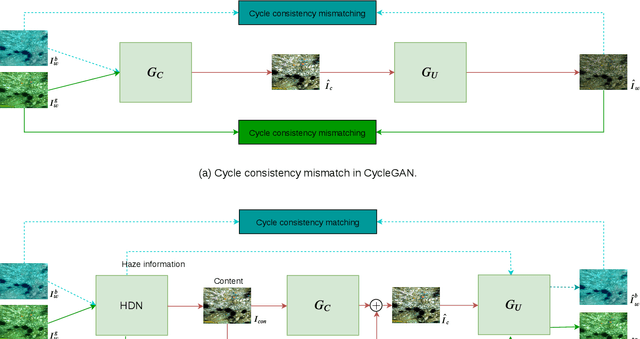

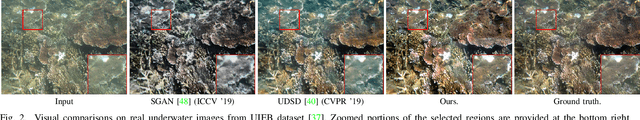

Unsupervised haze removal from underwater images

Jun 05, 2023

Abstract: Several supervised networks exist that remove haze information from underwater images using paired datasets and pixel-wise loss functions. However, training these networks requires large amounts of paired data, which is cumbersome, complex and time-consuming. Also, directly using adversarial and cycle consistency loss functions for unsupervised learning is inaccurate as the underlying mapping from clean to underwater images is one-to-many, resulting in an inaccurate constraint on the cycle consistency loss. To address these issues, we propose a new method to remove haze from underwater images using unpaired data. Our model disentangles haze and content information from underwater images using a Haze Disentanglement Network (HDN). The disentangled content is used by a restoration network to generate a clean image using adversarial losses. The disentangled haze is then used as a guide for underwater image regeneration, resulting in a strong constraint on cycle consistency loss and improved performance. Different ablation studies show that the haze and content from underwater images are effectively separated. Exhaustive experiments reveal that an accurate cycle consistency constraint and the proposed network architecture play an important role in yielding enhanced results. Experiments on UFO-120, UWNet, UWScenes, and UIEB underwater datasets indicate that the results of our method outperform prior art both visually and quantitatively.

Unsupervised network for low-light enhancement

Jun 05, 2023

Abstract: Supervised networks address the task of low-light enhancement using paired images. However, collecting a wide variety of low-light/clean paired images is tedious as the scene needs to remain static during imaging. In this paper, we propose an unsupervised low-light enhancement network using context-guided illumination-adaptive norm (CIN). Inspired by coarse-to-fine methods, we propose to address this task in two stages. In stage-I, a pixel amplifier module (PAM) is used to generate a coarse estimate with an overall improvement in visibility and aesthetic quality. Stage-II further enhances the saturated dark pixels and scene properties of the image using CIN. Different ablation studies show the importance of PAM and CIN in improving the visible quality of the image. Next, we propose a region-adaptive single input multiple output (SIMO) model that can generate multiple enhanced images from a single low-light image. The objective of SIMO is to let users choose the image of their liking from a pool of enhanced images. Human subjective analysis of SIMO results shows that the distribution of preferred images varies, endorsing the importance of SIMO-type models. Lastly, we propose a low-light road scene (LLRS) dataset having an unpaired collection of low-light and clean scenes. Unlike existing datasets, the clean and low-light scenes in LLRS are real and captured using fixed camera settings. Exhaustive comparisons on publicly available datasets and the proposed dataset reveal that the results of our model outperform prior art quantitatively and qualitatively.

Exploring the Effectiveness of Mask-Guided Feature Modulation as a Mechanism for Localized Style Editing of Real Images

Dec 10, 2022

Abstract: The success of Deep Generative Models at high-resolution image generation has led to their extensive utilization for style editing of real images. Most existing methods work on the principle of inverting real images onto their latent space, followed by determining controllable directions. Both inversion of real images and determination of controllable latent directions are computationally expensive operations. Moreover, the determination of controllable latent directions requires additional human supervision. This work aims to explore the efficacy of mask-guided feature modulation in the latent space of a Deep Generative Model as a solution to these bottlenecks. To this end, we present the SemanticStyle Autoencoder (SSAE), a deep Generative Autoencoder model that leverages semantic mask-guided latent space manipulation for highly localized photorealistic style editing of real images. We present qualitative and quantitative results for the same and their analysis. This work shall serve as a guiding primer for future work.

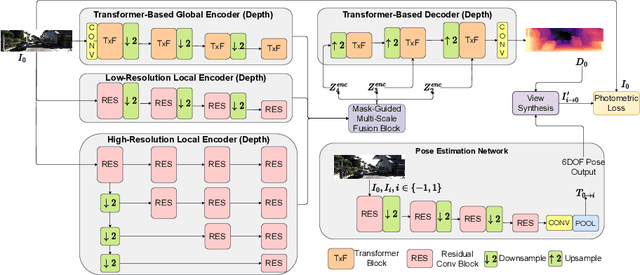

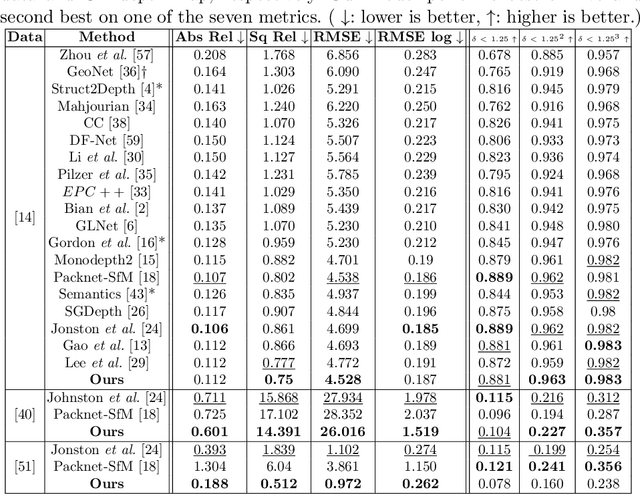

Hybrid Transformer Based Feature Fusion for Self-Supervised Monocular Depth Estimation

Nov 20, 2022

Abstract: With an unprecedented increase in the number of agents and systems that aim to navigate the real world using visual cues and the rising impetus for 3D Vision Models, the importance of depth estimation is hard to overstate. While supervised methods remain the gold standard in the domain, the copious amount of paired stereo data required to train such models makes them impractical. Most State of the Art (SOTA) works in the self-supervised and unsupervised domain employ a ResNet-based encoder architecture to predict disparity maps from a given input image, which are eventually used alongside a camera pose estimator to predict depth without direct supervision. The fully convolutional nature of ResNets makes them susceptible to capturing per-pixel local information only, which is suboptimal for depth prediction. Our key insight for doing away with this bottleneck is to use Vision Transformers, which employ self-attention to capture the global contextual information present in an input image. Our model fuses per-pixel local information learned using two fully convolutional depth encoders with global contextual information learned by a transformer encoder at different scales. It does so using a mask-guided multi-stream convolution in the feature space to achieve state-of-the-art performance on most standard benchmarks.

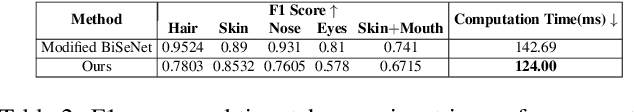

Latents2Segments: Disentangling the Latent Space of Generative Models for Semantic Segmentation of Face Images

Jul 06, 2022

Abstract: With the advent of an increasing number of Augmented and Virtual Reality applications that aim to perform meaningful and controlled style edits on images of human faces, the impetus for the task of parsing face images to produce accurate and fine-grained semantic segmentation maps is greater than ever before. A few State of the Art (SOTA) methods that solve this problem do so by incorporating priors with respect to facial structure or other face attributes such as expression and pose in their deep classifier architecture. Our endeavour in this work is to do away with the priors and complex pre-processing operations required by SOTA multi-class face segmentation models by reframing this operation as a downstream task post infusion of disentanglement with respect to facial semantic regions of interest (ROIs) in the latent space of a Generative Autoencoder model. We present results for our model's performance on the CelebAMask-HQ and HELEN datasets. The encoded latent space of our model achieves significantly higher disentanglement with respect to semantic ROIs than that of other SOTA works. Moreover, it achieves a 13% faster inference rate and comparable accuracy with respect to the publicly available SOTA for the downstream task of semantic segmentation of face images.
