Ziping Liu

Observer study-based evaluation of TGAN architecture used to generate oncological PET images

Nov 28, 2023

Abstract:The application of computer-vision algorithms in medical imaging has increased rapidly in recent years. However, algorithm training is challenging due to limited sample sizes, a lack of labeled samples, and privacy concerns regarding data sharing. To address these issues, we previously developed (Bergen et al. 2022) a synthetic PET dataset for head and neck (H&N) cancer using the temporal generative adversarial network (TGAN) architecture and evaluated its performance in segmenting lesions and identifying radiomics features in synthesized images. In this work, a two-alternative forced-choice (2AFC) observer study was performed to quantitatively evaluate the ability of human observers to distinguish between real and synthesized oncological PET images. In the study, eight trained readers, including two board-certified nuclear medicine physicians, read 170 real/synthetic image pairs presented as 2D transaxial images using a dedicated web app. For each image pair, the observer was asked to identify the real image and input their confidence level on a 5-point Likert scale. P-values were computed using the binomial test and Wilcoxon signed-rank test. A heat map was used to compare the response accuracy distribution for the signed-rank test. Response accuracy for all observers ranged from 36.2% [27.9-44.4] to 63.1% [54.8-71.3]. Six out of eight observers did not identify the real image with statistical significance, indicating that the synthetic dataset was reasonably representative of oncological PET images. Overall, this study adds validity to the realism of our simulated H&N cancer dataset, which may be implemented in the future to train AI algorithms while favoring patient confidentiality and privacy protection.
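The binomial test mentioned in the abstract can be illustrated with a minimal sketch. It assumes the null hypothesis that an observer is guessing (probability 0.5 of picking the real image in each pair). The function name `binomial_two_sided_p` and the example counts are hypothetical and are not taken from the study.

```python
from math import comb


def binomial_two_sided_p(correct: int, trials: int) -> float:
    """Exact two-sided binomial p-value against chance (p = 0.5).

    Assumption: each 2AFC trial is an independent guess under the null.
    """
    # Under pure guessing, P(X = k) = C(trials, k) / 2**trials.
    total = 2 ** trials
    lower = sum(comb(trials, k) for k in range(0, correct + 1)) / total
    upper = sum(comb(trials, k) for k in range(correct, trials + 1)) / total
    # The null distribution is symmetric about trials / 2, so doubling the
    # smaller tail gives the exact two-sided p-value (capped at 1).
    return min(1.0, 2.0 * min(lower, upper))


if __name__ == "__main__":
    # Hypothetical observer: 90 correct answers out of 170 image pairs.
    print(binomial_two_sided_p(90, 170))
```

A p-value above the chosen significance level means the observer's accuracy cannot be distinguished from guessing, which is the outcome that supports the realism of the synthetic images.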

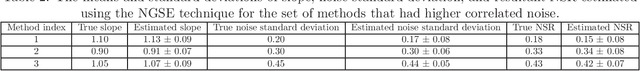

No-gold-standard evaluation of quantitative imaging methods in the presence of correlated noise

Mar 03, 2022

Abstract:Objective evaluation of quantitative imaging (QI) methods with patient data is highly desirable, but is hindered by the lack or unreliability of an available gold standard. To address this issue, techniques that can evaluate QI methods without access to a gold standard are being actively developed. These techniques assume that the true and measured values are linearly related by a slope, bias, and Gaussian-distributed noise term, where the noise terms of different methods are mutually independent. However, this noise arises in the process of measuring the same quantitative value, and thus can be correlated. To address this limitation, we propose a no-gold-standard evaluation (NGSE) technique that models this correlated noise by a multivariate Gaussian distribution parameterized by a covariance matrix. We derive a maximum-likelihood-based approach to estimate the parameters that describe the relationship between the true and measured values, without any knowledge of the true values. We then use the estimated slopes and diagonal elements of the covariance matrix to compute the noise-to-slope ratio (NSR) to rank the QI methods on the basis of precision. The proposed NGSE technique was evaluated with multiple numerical experiments. Our results showed that the technique reliably estimated the NSR values and yielded accurate rankings of the considered methods for ~ 83% of 160 trials. In particular, the technique correctly identified the most precise method for ~ 97% of the trials. Overall, this study demonstrates the efficacy of the NGSE technique to accurately rank different QI methods when correlated noise is present and without access to any knowledge of the ground truth. The results motivate further validation of this technique with realistic simulation studies and patient data.
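The ranking step can be sketched as follows, assuming the slopes and the noise covariance matrix have already been estimated. The sketch takes the NSR of method k to be the noise standard deviation (square root of the k-th diagonal covariance element) divided by the k-th slope. That formula, the function name `rank_by_nsr`, and the numbers are assumptions for illustration; the abstract does not spell them out, and the maximum-likelihood estimation itself is not shown.

```python
from math import sqrt


def rank_by_nsr(slopes, covariance):
    """Return (nsr_values, ranking), where ranking lists method indices
    from most precise (lowest NSR) to least precise.

    Assumptions: slopes are positive, and NSR_k = sqrt(covariance[k][k]) / slopes[k].
    Off-diagonal (correlation) terms are not used in the ratio itself.
    """
    nsr = [sqrt(covariance[k][k]) / slopes[k] for k in range(len(slopes))]
    ranking = sorted(range(len(slopes)), key=lambda k: nsr[k])
    return nsr, ranking


if __name__ == "__main__":
    # Hypothetical estimates for three QI methods with correlated noise.
    slopes = [1.0, 0.5, 2.0]
    covariance = [
        [0.04, 0.01, 0.00],
        [0.01, 0.04, 0.02],
        [0.00, 0.02, 0.04],
    ]
    nsr, ranking = rank_by_nsr(slopes, covariance)
    print(nsr, ranking)
```

A lower NSR indicates a more precise method under this definition, so the first index in the ranking is the method that would be preferred.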

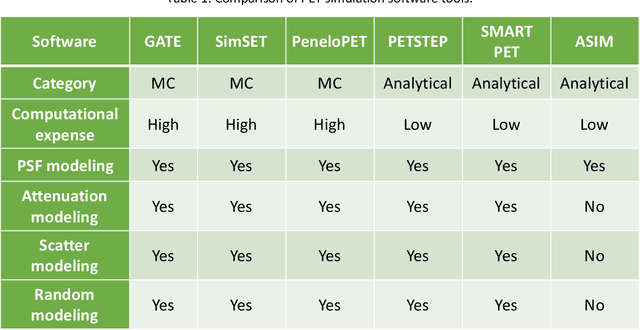

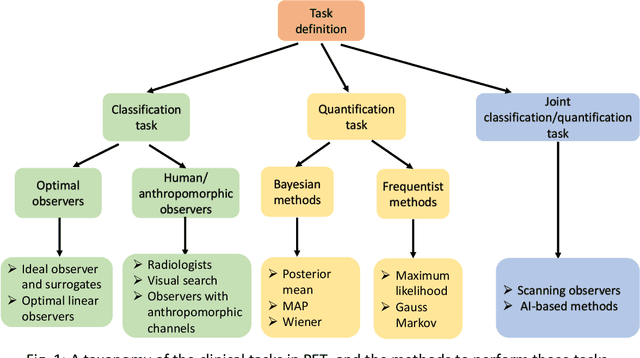

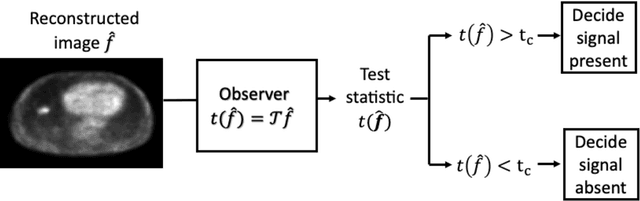

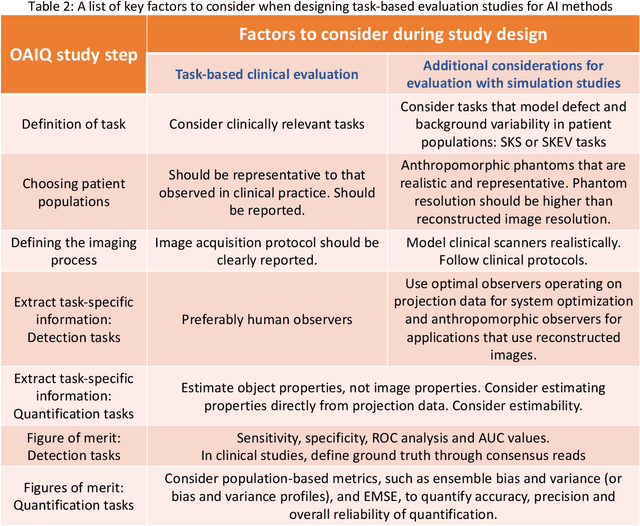

Objective task-based evaluation of artificial intelligence-based medical imaging methods: Framework, strategies and role of the physician

Jul 20, 2021

Abstract:Artificial intelligence (AI)-based methods are showing promise in multiple medical-imaging applications. Thus, there is substantial interest in clinical translation of these methods, which in turn requires that they be evaluated rigorously. In this paper, our goal is to lay out a framework for objective task-based evaluation of AI methods. We will also provide a list of tools available in the literature to conduct this evaluation. Further, we outline the important role of physicians in conducting these evaluation studies. The examples in this paper are presented in the context of PET with a focus on neural-network-based methods. However, the framework is also applicable to evaluate other medical-imaging modalities and other types of AI methods.

Observer study-based evaluation of a stochastic and physics-based method to generate oncological PET images

Feb 11, 2021

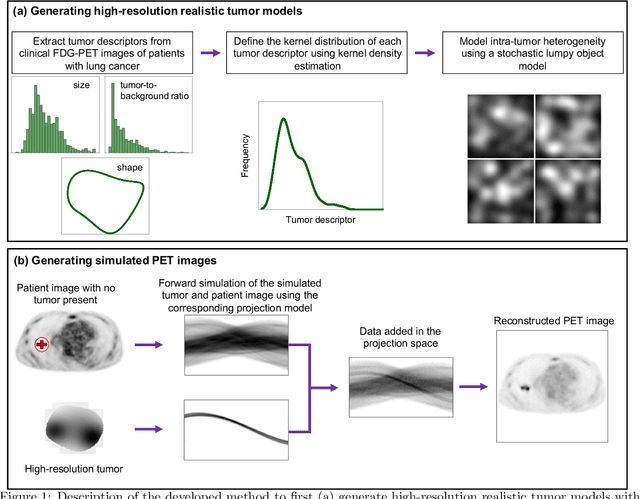

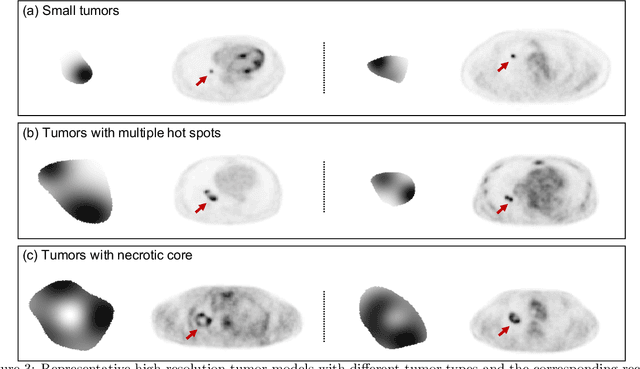

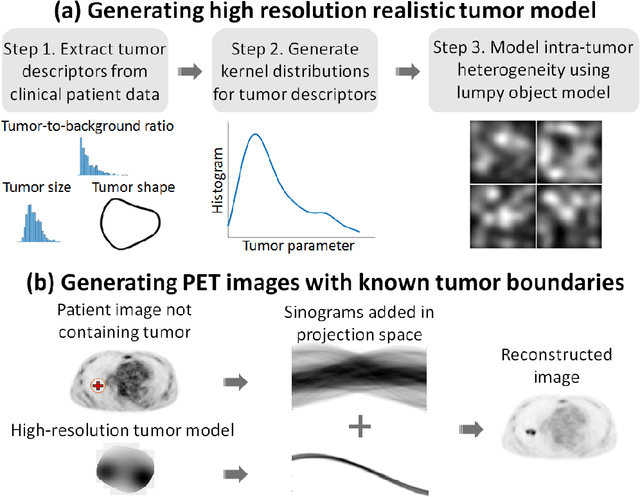

Abstract:Objective evaluation of new and improved methods for PET imaging requires access to images with ground truth, as can be obtained through simulation studies. However, for these studies to be clinically relevant, it is important that the simulated images are clinically realistic. In this study, we develop a stochastic and physics-based method to generate realistic oncological two-dimensional (2-D) PET images, where the ground-truth tumor properties are known. The developed method extends a previously proposed approach. The approach captures the observed variabilities in tumor properties from an actual patient population. Further, we extend that approach to model intra-tumor heterogeneity using a lumpy object model. To quantitatively evaluate the clinical realism of the simulated images, we conducted a human-observer study. This was a two-alternative forced-choice (2AFC) study with trained readers (five PET physicians and one PET physicist). Our results showed that the readers had an average of ~ 50% accuracy in the 2AFC study. Further, the developed simulation method was able to generate a wide variety of clinically observed tumor types. These results provide evidence for the application of this method to 2-D PET imaging applications, and motivate development of this method to generate 3-D PET images.
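The idea of a lumpy object model can be illustrated with a minimal sketch: a heterogeneous 2-D activity map built as a sum of Gaussian "lumps" at random positions. The abstract names the model but gives no parameters, so the Gaussian lump shape, the fixed lump count, and every name and value below are assumptions for illustration only. Formulations in the literature commonly draw the number of lumps at random rather than fixing it.

```python
import math
import random


def lumpy_object(size, n_lumps, amplitude, width, seed=0):
    """Return a size x size list of lists with summed Gaussian lumps.

    Assumptions: fixed number of lumps, uniformly random lump centers,
    identical amplitude and width for every lump.
    """
    rng = random.Random(seed)  # seeded so the sketch is reproducible
    centers = [(rng.uniform(0, size), rng.uniform(0, size)) for _ in range(n_lumps)]
    image = [[0.0] * size for _ in range(size)]
    for row in range(size):
        for col in range(size):
            # Each pixel accumulates the contribution of every lump.
            image[row][col] = sum(
                amplitude * math.exp(-((row - cy) ** 2 + (col - cx) ** 2) / (2 * width ** 2))
                for cy, cx in centers
            )
    return image


if __name__ == "__main__":
    tumor_texture = lumpy_object(size=16, n_lumps=5, amplitude=1.0, width=2.0, seed=1)
    print(max(max(r) for r in tumor_texture))
```

In a simulation pipeline, such a map would be restricted to the tumor region and scaled to the desired uptake before the imaging physics is modeled; those steps are outside this sketch.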

Fully automated 3D segmentation of dopamine transporter SPECT images using an estimation-based approach

Jan 17, 2021

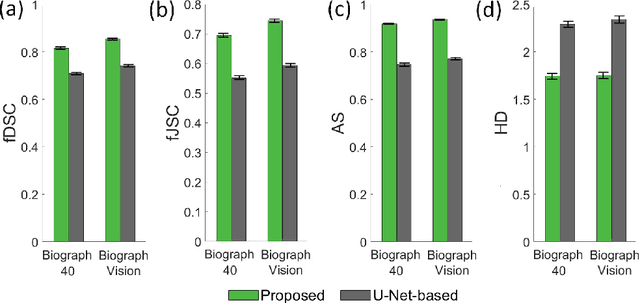

Abstract:Quantitative measures of uptake in caudate, putamen, and globus pallidus in dopamine transporter (DaT) brain SPECT have potential as biomarkers for the severity of Parkinson disease. Reliable quantification of uptake requires accurate segmentation of these regions. However, segmentation is challenging in DaT SPECT due to partial-volume effects, system noise, physiological variability, and the small size of these regions. To address these challenges, we propose an estimation-based approach to segmentation. This approach estimates the posterior mean of the fractional volume occupied by caudate, putamen, and globus pallidus within each voxel of a 3D SPECT image. The estimate is obtained by minimizing a cost function based on the binary cross-entropy loss between the true and estimated fractional volumes over a population of SPECT images, where the distribution of the true fractional volumes is obtained from magnetic resonance images from clinical populations. The proposed method accounts for both the sources of partial-volume effects in SPECT, namely the limited system resolution and tissue-fraction effects. The method was implemented using an encoder-decoder network and evaluated using realistic clinically guided SPECT simulation studies, where the ground-truth fractional volumes were known. The method significantly outperformed all other considered segmentation methods and yielded accurate segmentation with dice similarity coefficients of ~ 0.80 for all regions. The method was relatively insensitive to changes in voxel size. Further, the method was relatively robust up to +/- 10 degrees of patient head tilt along transaxial, sagittal, and coronal planes. Overall, the results demonstrate the efficacy of the proposed method to yield accurate fully automated segmentation of caudate, putamen, and globus pallidus in 3D DaT-SPECT images.
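The link between the binary cross-entropy cost and the posterior mean can be checked numerically with a small sketch. For a single voxel whose true fractional volume varies across a population, the constant estimate that minimizes the average cross-entropy is the mean of those fractional volumes. The function names and the sample values are hypothetical; the actual method uses an encoder-decoder network rather than the grid search shown here.

```python
import math


def bce(true_fraction, estimate, eps=1e-12):
    """Binary cross-entropy between a fractional target and an estimate."""
    e = min(max(estimate, eps), 1.0 - eps)  # clip to keep the logs finite
    return -(true_fraction * math.log(e) + (1.0 - true_fraction) * math.log(1.0 - e))


def mean_bce(true_fractions, estimate):
    """Average cross-entropy of one estimate over a population of targets."""
    return sum(bce(v, estimate) for v in true_fractions) / len(true_fractions)


def best_constant_estimate(true_fractions, steps=100):
    """Grid search over (0, 1) for the estimate with the lowest mean cost."""
    candidates = [i / steps for i in range(1, steps)]
    return min(candidates, key=lambda e: mean_bce(true_fractions, e))


if __name__ == "__main__":
    # Hypothetical fractional volumes of one voxel across three subjects.
    fractions = [0.2, 0.6, 0.7]
    print(best_constant_estimate(fractions), sum(fractions) / len(fractions))
```

The grid minimizer coincides with the sample mean, which is the behavior that lets a network trained with this loss approximate the posterior mean of the fractional volume.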

An estimation-based method to segment PET images

Feb 29, 2020

Abstract:Tumor segmentation in oncological PET images is challenging, a major reason being the partial-volume effects that arise from low system resolution and a finite pixel size. The latter results in pixels containing more than one region, also referred to as tissue-fraction effects. Conventional classification-based segmentation approaches are inherently limited in accounting for the tissue-fraction effects. To address this limitation, we pose the segmentation task as an estimation problem. We propose a Bayesian method that estimates the posterior mean of the tumor-fraction area within each pixel and uses these estimates to define the segmented tumor boundary. The method was implemented using an autoencoder. Quantitative evaluation of the method was performed using realistic simulation studies conducted in the context of segmenting the primary tumor in PET images of patients with lung cancer. For these studies, a framework was developed to generate clinically realistic simulated PET images. Realism of these images was quantitatively confirmed using a two-alternative forced-choice study by six trained readers with expertise in reading PET scans. The evaluation studies demonstrated that the proposed segmentation method was accurate, significantly outperformed widely used conventional methods on the tasks of tumor segmentation and estimation of tumor-fraction areas, was relatively insensitive to partial-volume effects, and reliably estimated the ground-truth tumor boundaries. Further, these results were obtained across different clinical-scanner configurations. This proof-of-concept study demonstrates the efficacy of an estimation-based approach to PET segmentation.
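Tissue-fraction effects can be made concrete with a short sketch: given a high-resolution binary tumor mask, the tumor-fraction area of each coarse PET pixel is the share of its sub-pixels that belong to the tumor. The function name, the downsampling factor, and the toy mask are hypothetical, and this shows only how ground-truth fractions might be defined in a simulation, not the Bayesian estimator itself.

```python
def tumor_fraction_areas(mask, factor):
    """Block-average a high-resolution binary mask into per-pixel fractions.

    Assumption: mask dimensions are multiples of `factor`, and each coarse
    pixel covers a factor x factor block of high-resolution sub-pixels.
    """
    rows, cols = len(mask) // factor, len(mask[0]) // factor
    fractions = []
    for r in range(rows):
        out_row = []
        for c in range(cols):
            block_sum = sum(
                mask[r * factor + i][c * factor + j]
                for i in range(factor)
                for j in range(factor)
            )
            out_row.append(block_sum / (factor * factor))
        fractions.append(out_row)
    return fractions


if __name__ == "__main__":
    # Toy 4 x 4 tumor mask reduced to a 2 x 2 pixel grid.
    high_res_mask = [
        [1, 1, 0, 0],
        [1, 1, 1, 0],
        [0, 0, 0, 0],
        [0, 0, 0, 0],
    ]
    print(tumor_fraction_areas(high_res_mask, 2))
```

A pixel with a fraction strictly between 0 and 1 contains both tumor and background, which is the case that a hard per-pixel classification cannot represent.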
