Md Ashequr Rahman

DEMIST: A deep-learning-based task-specific denoising approach for myocardial perfusion SPECT

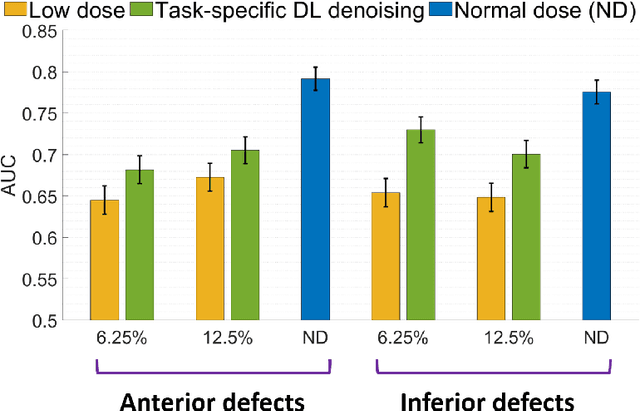

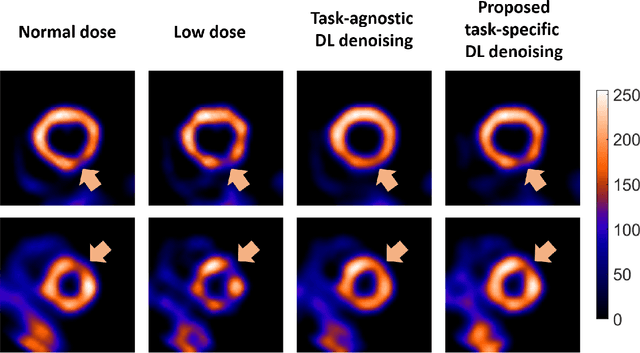

Jun 14, 2023

Abstract:There is an important need for methods to process myocardial perfusion imaging (MPI) SPECT images acquired at lower radiation dose and/or acquisition time such that the processed images improve observer performance on the clinical task of detecting perfusion defects. To address this need, we build upon concepts from model-observer theory and our understanding of the human visual system to propose a Detection task-specific deep-learning-based approach for denoising MPI SPECT images (DEMIST). The approach, while performing denoising, is designed to preserve features that influence observer performance on detection tasks. We objectively evaluated DEMIST on the task of detecting perfusion defects using a retrospective study with anonymized clinical data in patients who underwent MPI studies across two scanners (N = 338). The evaluation was performed at low-dose levels of 6.25%, 12.5% and 25% and using an anthropomorphic channelized Hotelling observer. Performance was quantified using area under the receiver operating characteristic curve (AUC). Images denoised with DEMIST yielded significantly higher AUC compared to corresponding low-dose images and images denoised with a commonly used task-agnostic DL-based denoising method. Similar results were observed with stratified analysis based on patient sex and defect type. Additionally, DEMIST improved visual fidelity of the low-dose images as quantified using root mean squared error and structural similarity index metric. A mathematical analysis revealed that DEMIST preserved features that assist in detection tasks while improving the noise properties, resulting in improved observer performance. The results provide strong evidence for further clinical evaluation of DEMIST to denoise low-count images in MPI SPECT.
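As an illustration of the AUC figure of merit mentioned in the abstract above, the following is a minimal sketch of an empirical (Wilcoxon-Mann-Whitney) AUC estimate computed from observer test statistics. The function name `empirical_auc` and its inputs are hypothetical and chosen for illustration; the abstract does not state which AUC estimator the authors used.

```python
import numpy as np

def empirical_auc(stat_present, stat_absent):
    """Wilcoxon-Mann-Whitney estimate of AUC (illustrative sketch).

    stat_present: observer test statistics for defect-present images.
    stat_absent:  observer test statistics for defect-absent images.
    """
    present = np.asarray(stat_present, dtype=float)
    absent = np.asarray(stat_absent, dtype=float)
    # Compare every defect-present statistic with every defect-absent one.
    diff = present[:, None] - absent[None, :]
    # A correctly ordered pair counts 1, a tie counts 0.5; average over pairs.
    return float(np.mean((diff > 0) + 0.5 * (diff == 0)))
```

An AUC of 0.5 corresponds to chance-level detection and 1.0 to perfect separation of the two classes.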

Development and task-based evaluation of a scatter-window projection and deep learning-based transmission-less attenuation compensation method for myocardial perfusion SPECT

Mar 19, 2023

Abstract:Attenuation compensation (AC) is beneficial for visual interpretation tasks in single-photon emission computed tomography (SPECT) myocardial perfusion imaging (MPI). However, traditional AC methods require the availability of a transmission scan, most often a CT scan. This approach has the disadvantages of increased radiation dose, increased scanner cost, and the possibility of inaccurate diagnosis in cases of misregistration between the SPECT and CT images. Further, many SPECT systems do not include a CT component. To address these issues, we developed a Scatter-window projection and deep Learning-based AC (SLAC) method to perform AC without a separate transmission scan. To investigate the clinical efficacy of this method, we then objectively evaluated the performance of this method on the clinical task of detecting perfusion defects on MPI in a retrospective study with anonymized clinical SPECT/CT stress MPI images. The proposed method was compared with CT-based AC (CTAC) and no-AC (NAC) methods. Our results showed that the SLAC method yielded an almost overlapping receiver operating characteristic (ROC) plot and a similar area under the ROC (AUC) to the CTAC method on this task. These results demonstrate the capability of the SLAC method for transmission-less AC in SPECT and motivate further clinical evaluation.

Need for Objective Task-based Evaluation of Deep Learning-Based Denoising Methods: A Study in the Context of Myocardial Perfusion SPECT

Mar 16, 2023

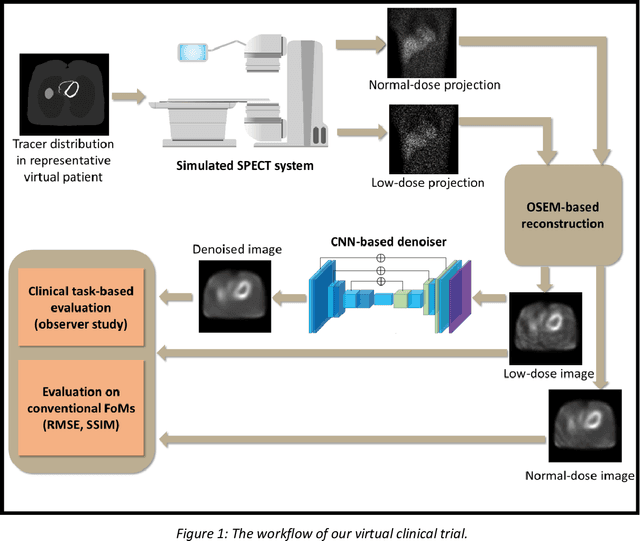

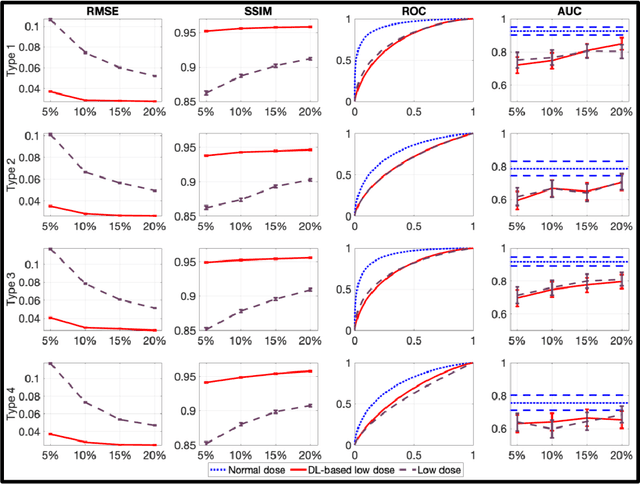

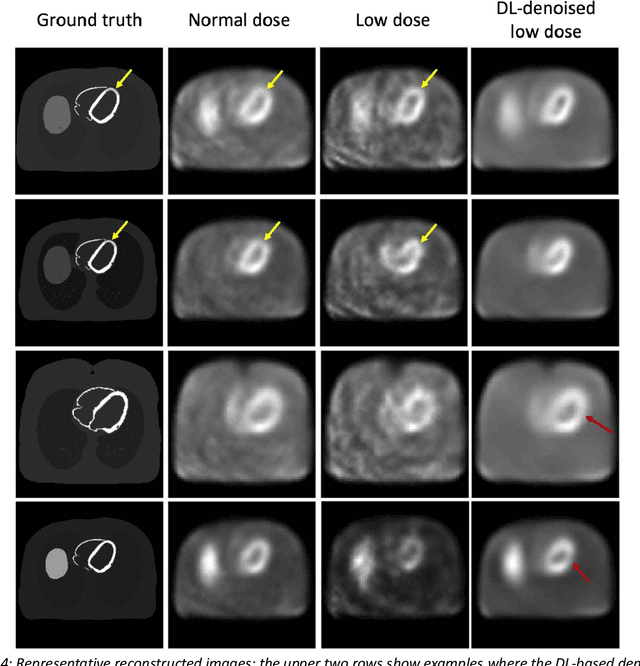

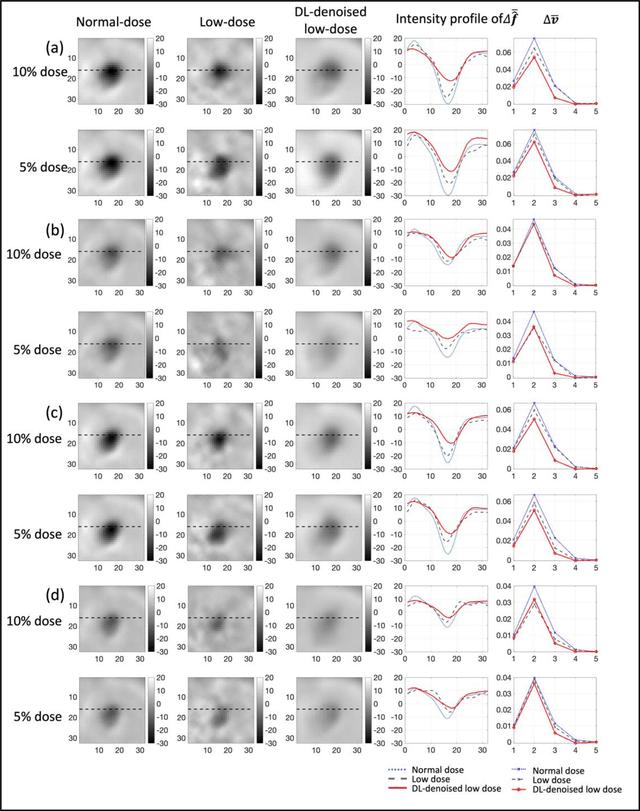

Abstract:Artificial intelligence-based methods have generated substantial interest in nuclear medicine. An area of significant interest has been using deep-learning (DL)-based approaches for denoising images acquired with lower doses, shorter acquisition times, or both. Objective evaluation of these approaches is essential for clinical application. DL-based approaches for denoising nuclear-medicine images have typically been evaluated using fidelity-based figures of merit (FoMs) such as RMSE and SSIM. However, these images are acquired for clinical tasks and thus should be evaluated based on their performance in these tasks. Our objectives were to (1) investigate whether evaluation with these FoMs is consistent with objective clinical-task-based evaluation; (2) provide a theoretical analysis for determining the impact of denoising on signal-detection tasks; and (3) demonstrate the utility of virtual clinical trials (VCTs) to evaluate DL-based methods. A VCT to evaluate a DL-based method for denoising myocardial perfusion SPECT (MPS) images was conducted. The impact of DL-based denoising was evaluated using fidelity-based FoMs and AUC, which quantified performance on detecting perfusion defects in MPS images as obtained using a model observer with anthropomorphic channels. Based on fidelity-based FoMs, denoising using the considered DL-based method led to significantly superior performance. However, based on ROC analysis, denoising did not improve, and in fact often degraded, detection-task performance. The results motivate the need for objective task-based evaluation of DL-based denoising approaches. Further, this study shows how VCTs provide a mechanism to conduct such evaluations. Finally, our theoretical treatment reveals insights into the reasons for the limited performance of the denoising approach.

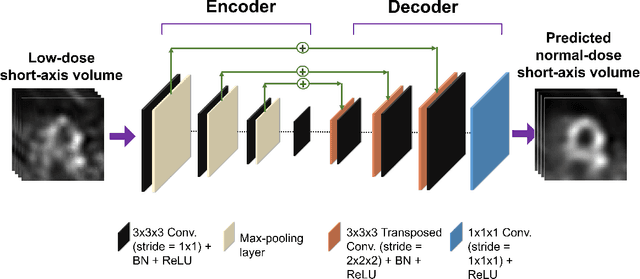

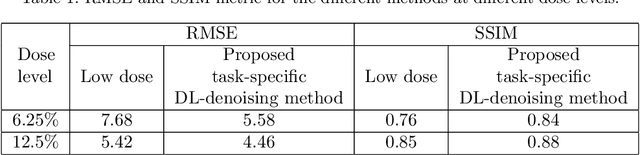

A task-specific deep-learning-based denoising approach for myocardial perfusion SPECT

Mar 01, 2023

Abstract:Deep-learning (DL)-based methods have shown significant promise in denoising myocardial perfusion SPECT images acquired at low dose. For clinical application of these methods, evaluation on clinical tasks is crucial. Typically, these methods are designed to minimize some fidelity-based criterion between the predicted denoised image and some reference normal-dose image. However, while promising, studies have shown that these methods may have limited impact on the performance of clinical tasks in SPECT. To address this issue, we use concepts from the literature on model observers and our understanding of the human visual system to propose a DL-based denoising approach designed to preserve observer-related information for detection tasks. The proposed method was objectively evaluated on the task of detecting perfusion defects in myocardial perfusion SPECT images using a retrospective study with anonymized clinical data. Our results demonstrate that the proposed method yields improved performance on this detection task compared to using low-dose images. The results show that by preserving task-specific information, DL may provide a mechanism to improve observer performance in low-dose myocardial perfusion SPECT.

Investigating the limited performance of a deep-learning-based SPECT denoising approach: An observer-study-based characterization

Mar 03, 2022

Abstract:Multiple studies based on objective assessment of image quality have reported that several deep-learning (DL)-based denoising methods show limited performance on signal-detection tasks. Our goal was to investigate the reasons for this limited performance. To achieve this goal, we conducted a task-based characterization of a DL-based denoising approach for individual signal properties. We conducted this study in the context of evaluating a DL-based approach for denoising SPECT images. The training data consisted of signals of different sizes and shapes within a clustered-lumpy background, imaged with a 2D parallel-hole-collimator SPECT system. The projections were generated at normal and 20% low-count levels, and both were reconstructed using an ordered-subset expectation-maximization (OSEM) algorithm. A convolutional neural network (CNN)-based denoiser was trained to process the low-count images. The performance of this CNN was characterized for five different signal sizes and four different signal-to-background ratios (SBRs) by designing each evaluation as a signal-known-exactly/background-known-statistically (SKE/BKS) signal-detection task. Performance on this task was evaluated using an anthropomorphic channelized Hotelling observer (CHO). As in previous studies, we observed that the DL-based denoising method did not improve performance on signal-detection tasks. The observer-study-based characterization further demonstrated that the DL-based denoising approach did not improve performance on the signal-detection task for any of the signal types. Overall, these results provide new insights on the performance of the DL-based denoising approach as a function of signal size and contrast. More generally, the observer-study-based characterization provides a mechanism to evaluate the sensitivity of the method to specific object properties and may be explored as analogous to characterizations such as modulation transfer function for linear systems. Finally, this work underscores the need for objective task-based evaluation of DL-based denoising approaches.
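To make the channelized Hotelling observer used in the abstract above more concrete, here is a minimal sketch of the standard textbook CHO: images are reduced to channel outputs, a Hotelling template is estimated from training data, and the template is applied to produce a scalar test statistic per image. The function names, the array layout, and the use of a generic channel matrix are assumptions for illustration; the anthropomorphic channels and training details used in the study are not given in the abstract.

```python
import numpy as np

def cho_template(channels, train_present, train_absent):
    """Estimate a Hotelling template in channel space (illustrative sketch).

    channels:      (n_pixels, n_channels) channel matrix (assumed given).
    train_present: (n_images, n_pixels) signal-present training images.
    train_absent:  (n_images, n_pixels) signal-absent training images.
    """
    # Project each image onto the channels to get channel-output vectors.
    v_present = train_present @ channels
    v_absent = train_absent @ channels
    # Difference of the class means in channel space.
    delta = v_present.mean(axis=0) - v_absent.mean(axis=0)
    # Average of the two class covariance matrices of the channel outputs.
    cov = 0.5 * (np.atleast_2d(np.cov(v_present, rowvar=False))
                 + np.atleast_2d(np.cov(v_absent, rowvar=False)))
    # Hotelling template: w = K^{-1} (mean_present - mean_absent).
    return np.linalg.solve(cov, delta)

def cho_statistics(channels, template, images):
    """Apply the template to images, returning one test statistic per image."""
    return (images @ channels) @ template
```

The resulting test statistics for signal-present and signal-absent images can then be compared through ROC analysis to obtain an AUC.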

Objective task-based evaluation of artificial intelligence-based medical imaging methods: Framework, strategies and role of the physician

Jul 20, 2021

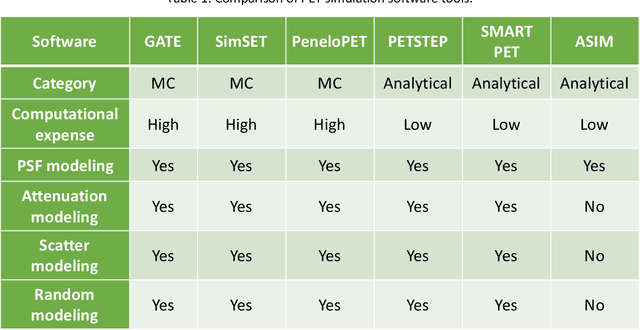

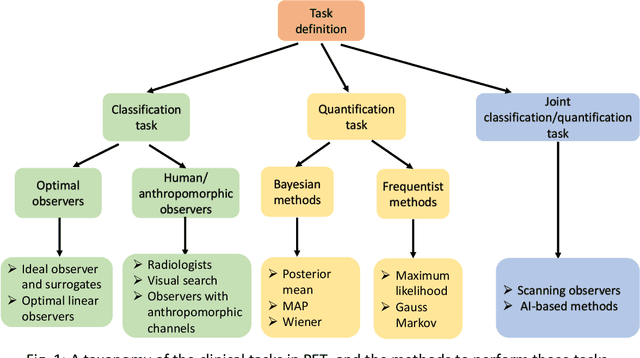

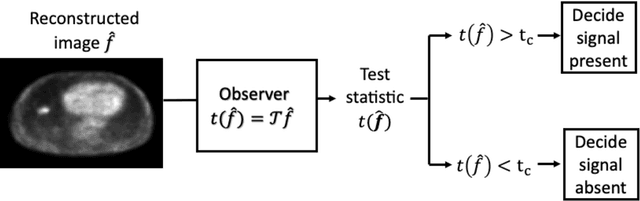

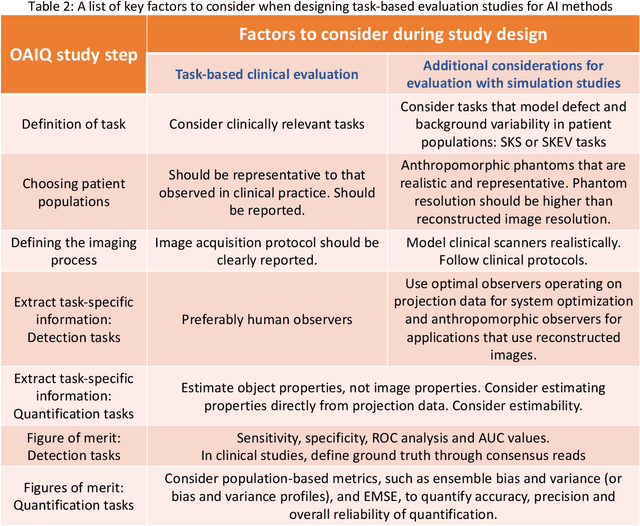

Abstract:Artificial intelligence (AI)-based methods are showing promise in multiple medical-imaging applications. Thus, there is substantial interest in clinical translation of these methods, requiring, in turn, that they be evaluated rigorously. In this paper, our goal is to lay out a framework for objective task-based evaluation of AI methods. We also provide a list of tools available in the literature to conduct this evaluation. Further, we outline the important role of physicians in conducting these evaluation studies. The examples in this paper are presented in the context of PET with a focus on neural-network-based methods. However, the framework is also applicable to evaluate other medical-imaging modalities and other types of AI methods.

Task-based assessment of binned and list-mode SPECT systems

Feb 11, 2021

Abstract:In SPECT, list-mode (LM) format allows storing data at higher precision compared to binned data. There is significant interest in investigating whether this higher precision translates to improved performance on clinical tasks. Towards this goal, in this study, we quantitatively investigated whether processing data in LM format, and in particular, the energy attribute of the detected photon, provides improved performance on the task of absolute quantification of region-of-interest (ROI) uptake in comparison to processing the data in binned format. We conducted this evaluation study using a DaTscan brain SPECT acquisition protocol, conducted in the context of imaging patients with Parkinson's disease. This study was conducted with a synthetic phantom. A signal-known exactly/background-known-statistically (SKE/BKS) setup was considered. An ordered-subset expectation-maximization algorithm was used to reconstruct images from data acquired in LM format, including the scatter-window data, and including the energy attribute of each LM event. Using a realistic 2-D SPECT system simulation, quantification tasks were performed on the reconstructed images. The results demonstrated improved quantification performance when LM data was used compared to binning the attributes in all the conducted evaluation studies. Overall, we observed that LM data, including the energy attribute, yielded improved performance on absolute quantification tasks compared to binned data.

Native Language Identification using i-vector

Nov 09, 2018

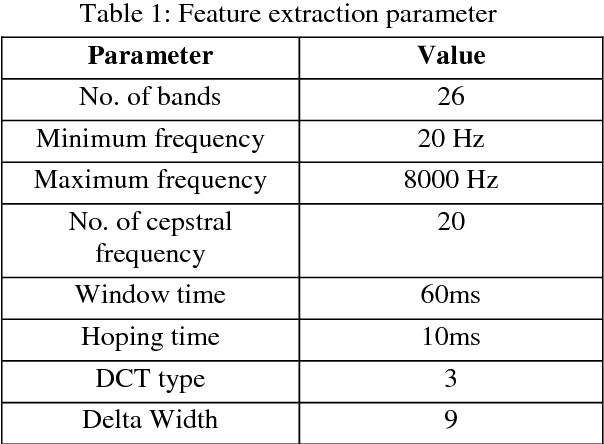

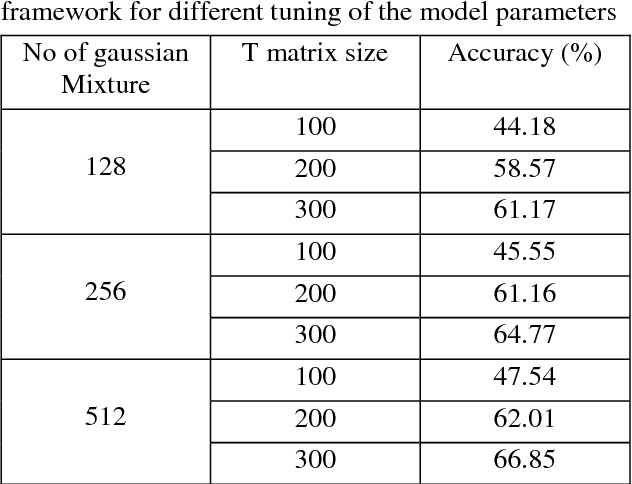

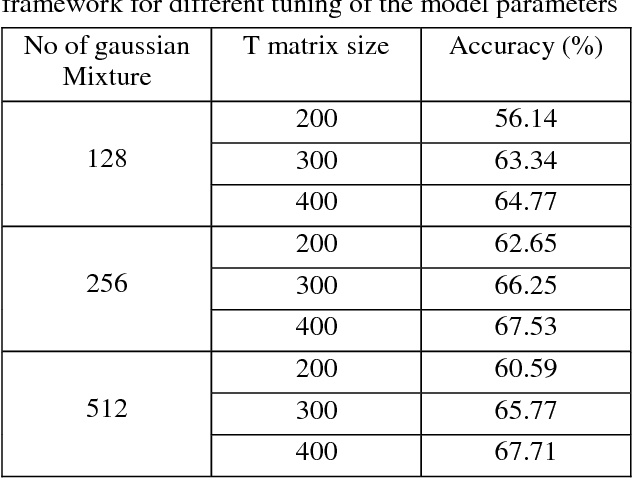

Abstract:The task of determining a speaker's native language based only on their speech in a second language is known as Native Language Identification (NLI). Due to its increasing applications in various domains of speech signal processing, this has emerged as an important research area in recent times. In this paper, we propose an i-vector-based approach to develop an automatic NLI system using MFCC and GFCC features. To evaluate our approach, we tested our framework on the 2016 ComParE Native Language Sub-Challenge dataset, which has English-language speakers from 11 different native language backgrounds. Our proposed method outperforms the baseline system, improving accuracy by 21.95% for the MFCC-feature-based i-vector framework and 22.81% for the GFCC-feature-based i-vector framework.
