Zhuoping Yu

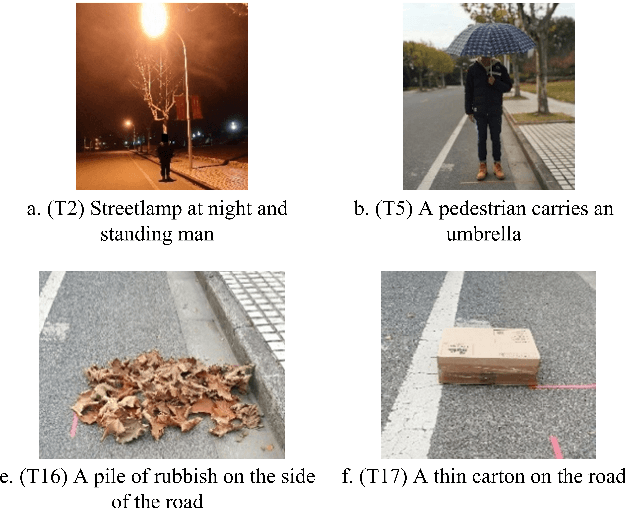

An Ontology-based Method to Identify Triggering Conditions for Perception Insufficiency of Autonomous Vehicles

Oct 17, 2022

Abstract: The autonomous vehicle (AV) is a safety-critical system that relies on complex sensors and algorithms. An AV may encounter risky conditions if these sensors and algorithms misinterpret the environment and situation, even when all components are fault-free. ISO 21448 defines the safety of the intended functionality (SOTIF), which aims to enhance AV safety by specifying the development and validation process. As ISO 21448 requires, the triggering conditions that may lead to functional insufficiencies of the vehicle should be analyzed and verified. However, no method yet exists for identifying triggering conditions comprehensively and systematically. This paper proposes an analysis framework of triggering conditions for the perception system, based on a propagation-chain-of-events model consisting of the triggering source, the influenced perception stage, and the triggering effect. Following this framework, ontologies of triggering sources and perception stages are constructed, and the relationships between concepts in the ontologies are defined. From these ontologies, triggering conditions can be generated comprehensively and systematically. The proposed method was applied to an L3 autonomous vehicle: 87 triggering conditions were identified, 20 of them were tested in the field, and eight of those triggered risky behaviors of the vehicle.
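
The generation step can be pictured as a constrained combination of ontology concepts along the chain source, stage, effect. The following is a minimal sketch under stated assumptions: the concept lists `TRIGGERING_SOURCES`, `PERCEPTION_STAGES` and `TRIGGERING_EFFECTS` are hypothetical toy stand-ins, not the paper's ontologies, and the "relationships between concepts" are modeled simply as which sensors each source can affect.

```python
from itertools import product

# Hypothetical toy concepts; the paper's actual ontologies are not reproduced here.
# Each triggering source maps to the sensors it is assumed to be able to affect.
TRIGGERING_SOURCES = {
    "rain": {"camera", "lidar"},
    "low sun glare": {"camera"},
    "retroreflective sign": {"lidar", "radar"},
}
# Perception stages per sensor (assumed, simplified).
PERCEPTION_STAGES = {
    "camera": ["signal acquisition", "object detection"],
    "lidar": ["signal acquisition", "point cloud clustering"],
    "radar": ["signal acquisition"],
}
TRIGGERING_EFFECTS = ["missed detection", "false detection"]


def generate_triggering_conditions():
    """Enumerate (source, sensor, stage, effect) chains permitted by the relations."""
    conditions = []
    for source, sensors in TRIGGERING_SOURCES.items():
        for sensor in sorted(sensors):
            # Only stages of sensors the source can affect are combined with effects.
            for stage, effect in product(PERCEPTION_STAGES[sensor], TRIGGERING_EFFECTS):
                conditions.append((source, sensor, stage, effect))
    return conditions


if __name__ == "__main__":
    for condition in generate_triggering_conditions():
        print(condition)
```

Even in this toy form, the relations are what keep the candidate set from becoming an unfiltered Cartesian product of all sources, stages and effects.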

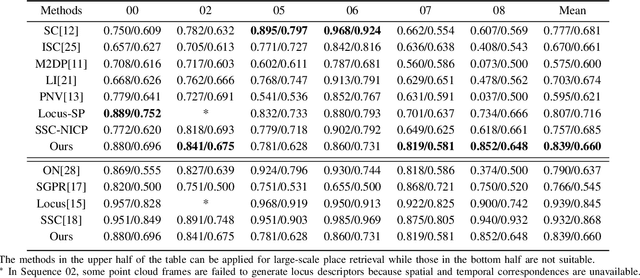

DSC: Deep Scan Context Descriptor for Large-Scale Place Recognition

Nov 27, 2021

Abstract: LiDAR-based place recognition is an essential and challenging task in both loop closure detection and global relocalization. We propose Deep Scan Context (DSC), a general and discriminative global descriptor that captures the relationships among segments of a point cloud. Unlike previous methods that use semantics or a sequence of adjacent point clouds to improve place recognition, we use only raw point clouds and still obtain competitive results. Concretely, we first segment the point cloud egocentrically to obtain the centroids and eigenvalues of the segments. We then introduce a graph neural network to aggregate these features into an embedding representation. Extensive experiments on the KITTI dataset show that DSC is robust to scene variations and outperforms existing methods.
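
The per-segment features mentioned above can be computed with basic linear algebra. This is a minimal sketch assuming segment labels have already been assigned by some segmentation step (not shown), and assuming a k-nearest-neighbor graph over segment centroids as the input to a graph neural network; `segment_features` and `knn_edges` are hypothetical names, and the paper's exact graph construction may differ.

```python
import numpy as np


def segment_features(points: np.ndarray, labels: np.ndarray) -> np.ndarray:
    """One row per segment: [cx, cy, cz, l1, l2, l3], eigenvalues in descending order.

    Each segment needs at least two points for a meaningful covariance.
    """
    features = []
    for label in np.unique(labels):
        seg = points[labels == label]
        centroid = seg.mean(axis=0)
        # 3x3 covariance of the segment; eigvalsh returns ascending eigenvalues.
        cov = np.cov(seg, rowvar=False)
        eigvals = np.linalg.eigvalsh(cov)[::-1]
        features.append(np.concatenate([centroid, eigvals]))
    return np.vstack(features)


def knn_edges(centroids: np.ndarray, k: int) -> list:
    """Directed edges (i, j) from each segment to its k nearest other segments (assumed graph)."""
    dists = np.linalg.norm(centroids[:, None, :] - centroids[None, :, :], axis=-1)
    np.fill_diagonal(dists, np.inf)  # exclude self-loops
    neighbors = np.argsort(dists, axis=1)[:, :k]
    return [(i, int(j)) for i in range(len(centroids)) for j in neighbors[i]]
```

The eigenvalues summarize each segment's shape (linear, planar or scattered), while the centroids fix its position relative to the sensor, which is what makes the resulting graph a usable input for aggregation.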

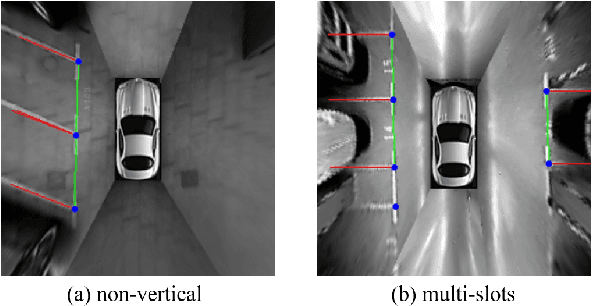

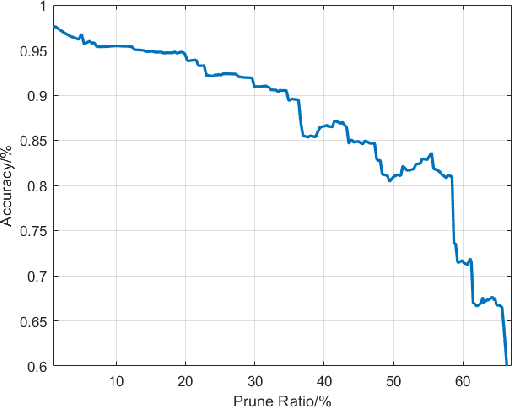

SPFCN: Select and Prune the Fully Convolutional Networks for Real-time Parking Slot Detection

Mar 25, 2020

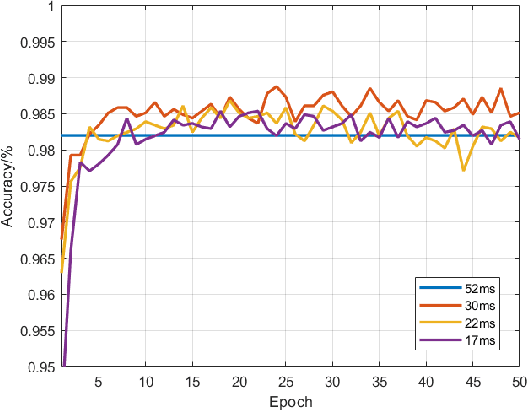

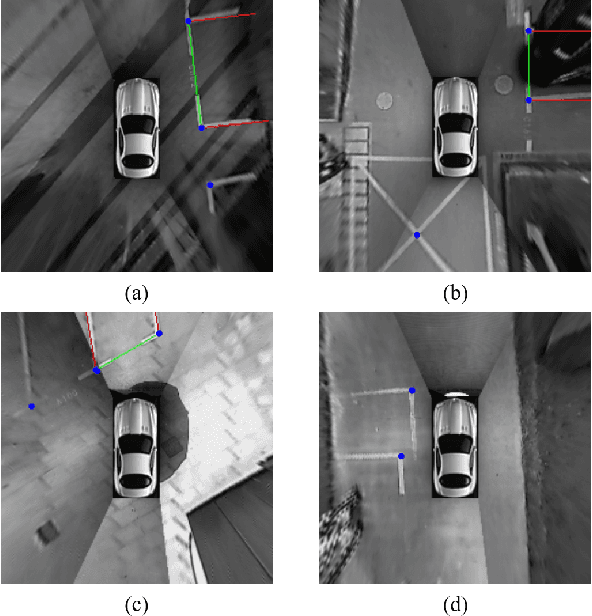

Abstract: For passenger cars equipped with an automatic parking function, convolutional neural networks are employed to detect parking slots on the panoramic surround view, an overhead image synthesized from four calibrated fish-eye images. Existing detectors obtain their accuracy at the price of low speed or expensive computing equipment, both of which are cost concerns for many car manufacturers. In this paper, the proposed parking slot detector aims to match that accuracy with deep convolutional networks that are faster and smaller, preserving accuracy by training and pruning the network simultaneously. To achieve the optimal trade-off, we developed a strategy that automatically selects the best receptive fields and prunes redundant channels during training. The proposed model jointly detects corners and line features of parking slots while running efficiently in real time on an average CPU. Even without any dedicated computing devices, the model outperforms existing counterparts, running at about 30 FPS on a 2.3 GHz CPU core, with a parking slot corner localization error of 1.51$\pm$2.14 cm (std. err.) and a slot detection accuracy of 98%, which generally satisfies both the speed and accuracy requirements of on-board mobile terminals.
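
The abstract does not state which pruning criterion SPFCN uses. As an assumption for illustration only, the sketch scores each output channel of a convolution by the L1 norm of its filter weights, a common criterion in the channel-pruning literature, and keeps the highest-scoring fraction; `prune_channels` and `keep_ratio` are hypothetical names, and receptive-field selection is not covered.

```python
import numpy as np


def prune_channels(weight: np.ndarray, keep_ratio: float):
    """Keep the output channels with the largest L1 filter norms (assumed criterion).

    weight: convolution kernel of shape (out_channels, in_channels, kh, kw).
    Returns the pruned kernel and the sorted indices of the kept channels.
    """
    scores = np.abs(weight).sum(axis=(1, 2, 3))  # L1 norm per output channel
    n_keep = max(1, int(round(keep_ratio * weight.shape[0])))
    # Take the n_keep highest scores, then restore the original channel order.
    keep = np.sort(np.argsort(scores)[::-1][:n_keep])
    return weight[keep], keep
```

In a full network, the input channels of the following layer must be sliced with the same `keep` indices; in SPFCN, pruning is interleaved with training rather than applied once afterward.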

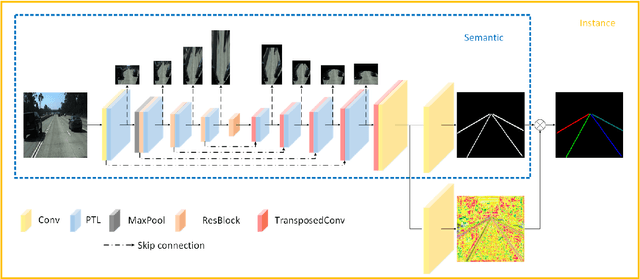

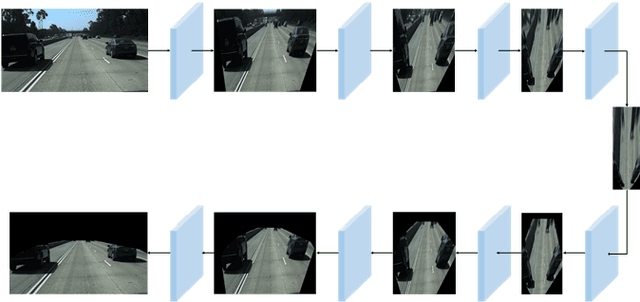

Detecting Lane and Road Markings at A Distance with Perspective Transformer Layers

Mar 19, 2020

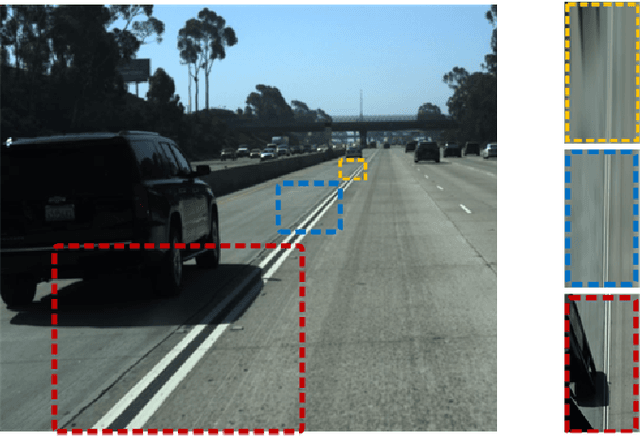

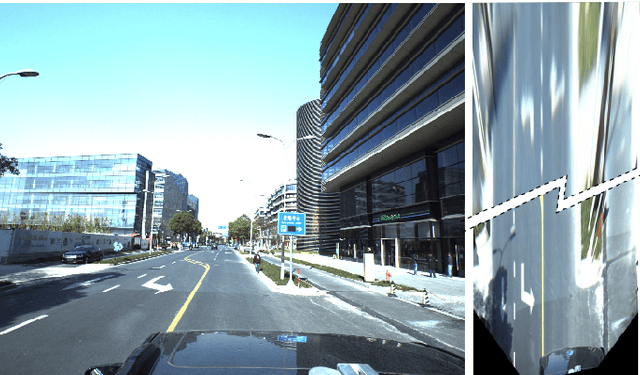

Abstract: Accurate detection of lane and road markings is a task of great importance for intelligent vehicles. In existing approaches, detection accuracy often degrades with increasing distance, because distant lane and road markings occupy only a small number of pixels in the image and their scales are inconsistent across distances and perspectives. Inverse Perspective Mapping (IPM) can eliminate the perspective distortion, but its inherent interpolation can introduce artifacts, especially around distant lane and road markings, which harms the accuracy of lane marking detection and segmentation. To solve this problem, we adopt the encoder-decoder architecture of Fully Convolutional Networks and leverage the idea of Spatial Transformer Networks to introduce a novel semantic segmentation neural network. This approach decomposes the IPM process into multiple consecutive differentiable homographic transform layers, called "Perspective Transformer Layers". Furthermore, the interpolated feature maps are refined by subsequent convolutional layers, which reduces the artifacts and improves accuracy. The effectiveness of the proposed method in lane marking detection is validated on two public datasets: TuSimple and ApolloScape.
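
The decomposition works because consecutive homographies compose into a single homography. The sketch below assumes one simple way to split a target IPM homography `H` into `num_layers` steps, by interpolating linearly between the identity and `H` and taking each step as the map between neighboring intermediate views; the paper's actual decomposition may differ, and `decompose_homography` and `warp_points` are hypothetical names. Only the geometry is shown: in the network, each step would warp a feature map with differentiable sampling, followed by convolutions that refine the interpolated features.

```python
import numpy as np


def decompose_homography(H: np.ndarray, num_layers: int) -> list:
    """Split H into num_layers homographies whose product (last @ ... @ first) equals H.

    Assumes every interpolated matrix (1 - t) * I + t * H is invertible.
    """
    H = H / H[2, 2]  # normalize the projective scale
    identity = np.eye(3)
    # Intermediate views, linearly interpolated from the identity to H (an assumption).
    targets = [(1 - t) * identity + t * H for t in np.linspace(0.0, 1.0, num_layers + 1)]
    # Step i maps intermediate view i to view i + 1; the product telescopes to H.
    return [targets[i + 1] @ np.linalg.inv(targets[i]) for i in range(num_layers)]


def warp_points(H: np.ndarray, pts: np.ndarray) -> np.ndarray:
    """Apply a homography to an (N, 2) array of pixel coordinates."""
    homog = np.hstack([pts, np.ones((len(pts), 1))]) @ H.T
    return homog[:, :2] / homog[:, 2:3]
```

Because the product telescopes to the identity-normalized `H`, warping points step by step gives the same result as a single IPM warp. The intermediate stages are what leave room for convolutional refinement between steps.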
