Zhiqiang Gong

National Innovation Institute of Defense Technology, Chinese Academy of Military Science

SynergyWarpNet: Attention-Guided Cooperative Warping for Neural Portrait Animation

Dec 19, 2025

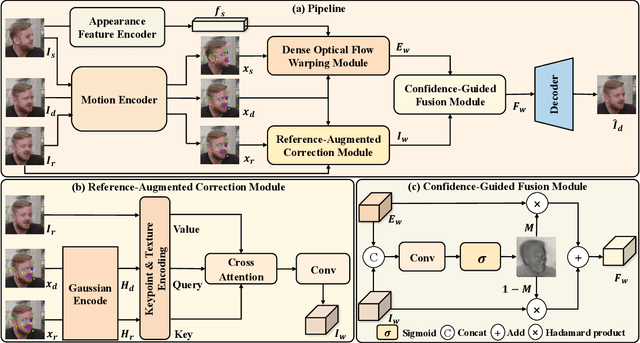

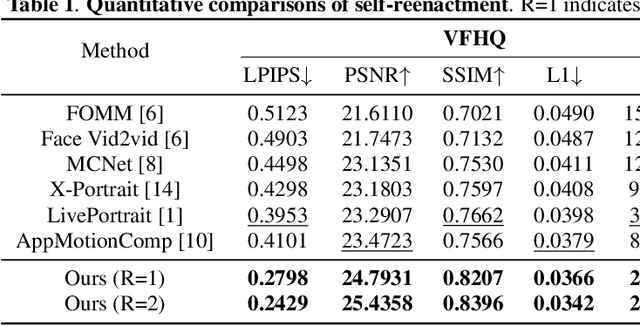

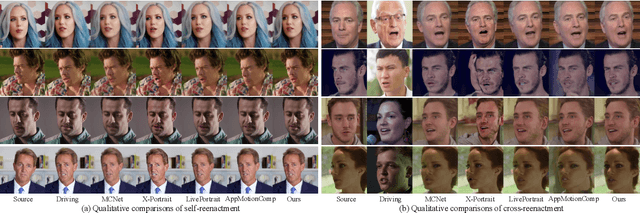

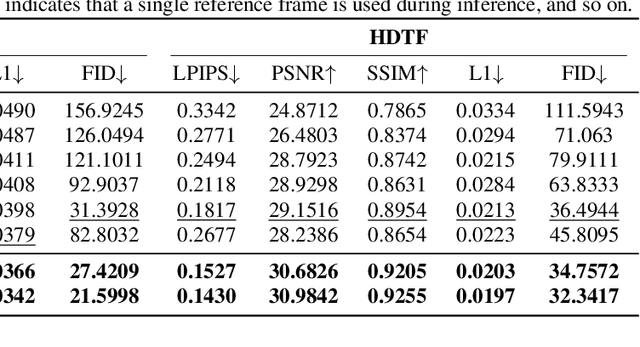

Abstract: Recent advances in neural portrait animation have demonstrated remarkable potential for applications in virtual avatars, telepresence, and digital content creation. However, traditional explicit warping approaches often struggle with accurate motion transfer or with recovering missing regions, while recent attention-based warping methods, though effective, frequently suffer from high complexity and weak geometric grounding. To address these issues, we propose SynergyWarpNet, an attention-guided cooperative warping framework designed for high-fidelity talking head synthesis. Given a source portrait, a driving image, and a set of reference images, our model progressively refines the animation in three stages. First, an explicit warping module performs coarse spatial alignment between the source and driving image using 3D dense optical flow. Next, a reference-augmented correction module leverages cross-attention across 3D keypoints and texture features from multiple reference images to semantically complete occluded or distorted regions. Finally, a confidence-guided fusion module integrates the warped outputs through spatially adaptive fusion, using a learned confidence map to balance structural alignment and visual consistency. Comprehensive evaluations on benchmark datasets demonstrate state-of-the-art performance.
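
The abstract does not give the fusion formula, so the following is only a minimal sketch of what a confidence-guided fusion step could look like, assuming a per-pixel convex combination of two candidate images. The function name `confidence_fusion` and the toy arrays are hypothetical and are not taken from the paper.

```python
import numpy as np

def confidence_fusion(warped, corrected, confidence):
    """Blend two H x W x C images using a per-pixel confidence map.

    Assumption: the fused pixel is a convex combination, where a confidence
    of 1 keeps the explicitly warped pixel and 0 keeps the corrected pixel.
    """
    c = np.clip(confidence, 0.0, 1.0)[..., None]  # add a channel axis for broadcasting
    return c * warped + (1.0 - c) * corrected

# Toy inputs: the "warped" image is all ones, the "corrected" image all zeros.
warped = np.full((2, 2, 3), 1.0)
corrected = np.zeros((2, 2, 3))
confidence = np.array([[1.0, 0.0], [0.5, 0.25]])

fused = confidence_fusion(warped, corrected, confidence)
```

With these toy inputs each fused pixel simply equals its confidence value, which makes the spatially adaptive weighting easy to inspect.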

HyperDID: Hyperspectral Intrinsic Image Decomposition with Deep Feature Embedding

Nov 25, 2023

Abstract: Decomposing hyperspectral images into intrinsic components through hyperspectral intrinsic image decomposition (HIID) enhances the interpretability of hyperspectral data and provides a foundation for more accurate classification. However, the classification performance of HIID is constrained by the model's representational ability. To address this limitation, this study rethinks hyperspectral intrinsic image decomposition for classification tasks by introducing deep feature embedding. The proposed framework, HyperDID, incorporates the Environmental Feature Module (EFM) and the Categorical Feature Module (CFM) to extract intrinsic features. Additionally, a Feature Discrimination Module (FDM) is introduced to separate environment-related and category-related features. Experimental results on three commonly used datasets validate the effectiveness of HyperDID in improving hyperspectral image classification performance. This approach holds promise for advancing hyperspectral image analysis by leveraging deep feature embedding principles. The implementation of the proposed method will be made available at https://github.com/shendu-sw/HyperDID for the sake of reproducibility.

MultiScale Spectral-Spatial Convolutional Transformer for Hyperspectral Image Classification

Oct 28, 2023

Abstract: Owing to its powerful ability to capture global information, the Transformer has become an alternative architecture to CNNs for hyperspectral image classification. However, a general Transformer mainly considers the global spectral information while ignoring the multiscale spatial information of the hyperspectral image. In this paper, we propose a multiscale spectral-spatial convolutional Transformer (MultiscaleFormer) for hyperspectral image classification. First, the developed method uses multiscale spatial patches as tokens to formulate the spatial Transformer and generates a multiscale spatial representation of each band in each pixel. Second, the spatial representations of all the bands in a given pixel are used as tokens to formulate the spectral Transformer and generate the multiscale spectral-spatial representation of each pixel. In addition, a modified spectral-spatial CAF module is constructed in the MultiFormer to fuse cross-layer spectral and spatial information. The proposed MultiFormer can therefore capture multiscale spectral-spatial information and provide better performance than most other architectures for hyperspectral image classification. Experiments are conducted on commonly used real-world datasets, and the comparison results show the superiority of the proposed method.
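
As a rough illustration of the "multiscale spatial patches as tokens" idea, the sketch below extracts square patches of several sizes around one pixel of a hyperspectral cube. The patch sizes, the reflect padding at image borders, and the function name `multiscale_patches` are assumptions made for this example; the abstract does not specify them.

```python
import numpy as np

def multiscale_patches(cube, row, col, sizes=(3, 5, 7)):
    """Return one (s, s, B) patch per odd size s, centered on pixel (row, col).

    cube has shape (H, W, B). Borders are handled with reflect padding,
    which is an assumption of this sketch.
    """
    pad = max(sizes) // 2
    padded = np.pad(cube, ((pad, pad), (pad, pad), (0, 0)), mode="reflect")
    r, c = row + pad, col + pad  # pixel position inside the padded cube
    patches = []
    for s in sizes:
        h = s // 2
        patches.append(padded[r - h:r + h + 1, c - h:c + h + 1, :])
    return patches

# Toy cube: 10 x 10 pixels, 4 spectral bands; query a corner pixel.
cube = np.arange(10 * 10 * 4, dtype=float).reshape(10, 10, 4)
patches = multiscale_patches(cube, row=0, col=9)
```

Each patch keeps the queried pixel at its center, so the patches can be treated as tokens that describe the same location at different spatial scales.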

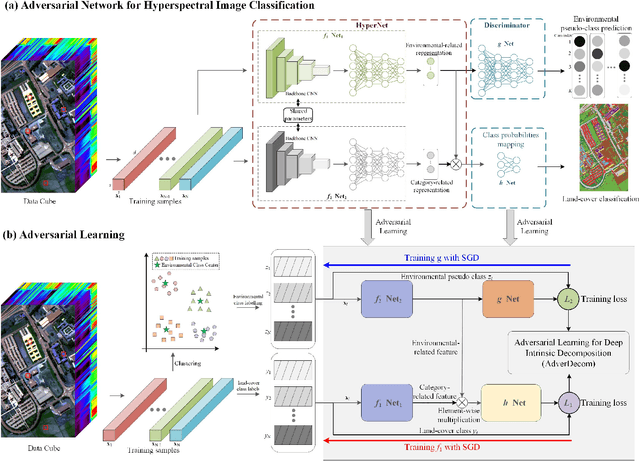

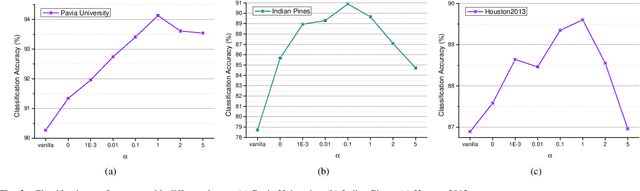

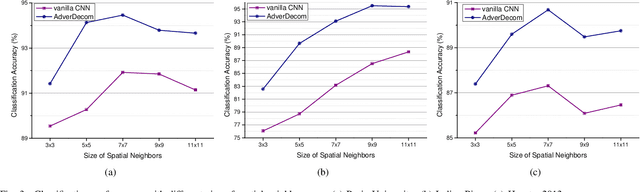

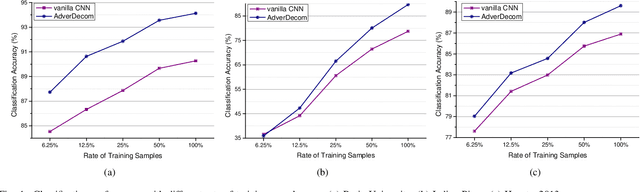

Deep Intrinsic Decomposition with Adversarial Learning for Hyperspectral Image Classification

Oct 28, 2023

Abstract: Convolutional neural networks (CNNs) have demonstrated a powerful ability to extract discriminative features for hyperspectral image classification. However, general CNN-based deep learning methods ignore the influence of complex environmental factors, which enlarge the intra-class variance and decrease the inter-class variance. This makes discriminative features harder to extract. To overcome this problem, this work develops a novel deep intrinsic decomposition with adversarial learning, namely AdverDecom, for hyperspectral image classification to mitigate the negative impact of environmental factors on classification performance. First, we develop a generative network for hyperspectral images (HyperNet) to extract the environment-related feature and the category-related feature from the image. Then, a discriminative network is constructed to distinguish different environmental categories. Finally, an environmental and category joint learning loss is developed for adversarial learning so that the deep model learns discriminative features. Experiments are conducted on three commonly used real-world datasets, and the comparison results show the superiority of the proposed method. The implementation of the proposed method and the compared methods can be accessed at https://github.com/shendu-sw/Adversarial Learning Intrinsic Decomposition for the sake of reproducibility.

Multi-fidelity prediction of fluid flow and temperature field based on transfer learning using Fourier Neural Operator

Apr 14, 2023

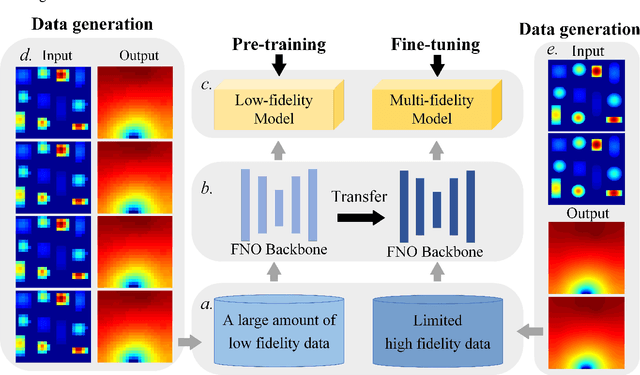

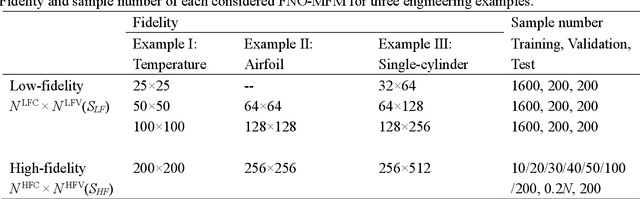

Abstract: Data-driven prediction of fluid flow and temperature distribution in marine and aerospace engineering has been studied extensively and has recently demonstrated its potential for real-time prediction. However, large amounts of high-fidelity data are usually required to describe and accurately predict the complex physical information, while in practice only limited high-fidelity data is available because of the high experimental and computational cost. Therefore, this work proposes a novel multi-fidelity learning method based on the Fourier Neural Operator that combines abundant low-fidelity data with limited high-fidelity data under the transfer learning paradigm. First, as a resolution-invariant operator, the Fourier Neural Operator is applied, for the first time, to integrate multi-fidelity data directly, which allows the scarce high-fidelity data and the abundant low-fidelity data to be used simultaneously. Then, a transfer learning framework is developed for the task, extracting the knowledge in the rich low-fidelity data to assist high-fidelity model training and further improve data-driven prediction accuracy. Finally, three typical fluid and temperature prediction problems are chosen to validate the accuracy of the proposed multi-fidelity model. The results demonstrate that the proposed method is highly effective compared with other high-fidelity models and achieves a modeling accuracy of 99% for all the selected physical field problems. Notably, the proposed multi-fidelity learning method combines a simple structure with high precision, which can provide a reference for the construction of subsequent models.

Uncertainty Guided Ensemble Self-Training for Semi-Supervised Global Field Reconstruction

Feb 23, 2023

Abstract: Recovering a globally accurate complex physics field from limited sensors is critical to measurement and control in aerospace engineering. General reconstruction methods for recovering the field, especially deep learning models with more parameters and better representational ability, usually require large amounts of labeled data, which is unaffordable. To solve this problem, this paper proposes Uncertainty Guided Ensemble Self-Training (UGE-ST), which uses plentiful unlabeled data to improve reconstruction performance. First, a novel self-training framework with an ensemble teacher and a pretraining student is proposed to improve the accuracy of the pseudo-labels and remedy the impact of noise. Second, uncertainty-guided learning is proposed to encourage the model to focus on the highly confident regions of the pseudo-labels and to mitigate the effects of wrong pseudo-labeling in self-training, improving the performance of the reconstruction model. Experiments, including the pressure and velocity field reconstruction of an airfoil and the temperature field reconstruction of an aircraft system, indicate that UGE-ST can save up to 90% of the data while matching the accuracy of supervised learning.
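
The abstract does not state how uncertainty enters the loss, so the following is only a sketch of one plausible form of uncertainty-guided learning: the ensemble mean serves as the pseudo-label, the ensemble variance as the uncertainty, and each pixel's squared error is down-weighted by `exp(-variance)`. The weighting function and all names here are assumptions, not the UGE-ST formulation.

```python
import numpy as np

def uncertainty_weighted_loss(student_pred, teacher_preds):
    """Pseudo-label loss that trusts low-variance regions more.

    teacher_preds: (K, H, W) outputs of K ensemble members.
    student_pred:  (H, W) output of the student model.
    Assumption: weight = exp(-variance), normalized over the field.
    """
    pseudo_label = teacher_preds.mean(axis=0)   # ensemble mean as the pseudo-label
    uncertainty = teacher_preds.var(axis=0)     # ensemble disagreement per pixel
    weight = np.exp(-uncertainty)               # confident pixels get weight close to 1
    sq_err = (student_pred - pseudo_label) ** 2
    return float((weight * sq_err).sum() / weight.sum())

# Two teachers agree on the first pixel and disagree on the second.
teacher_preds = np.array([[[1.0, 0.0]], [[1.0, 2.0]]])
student_pred = np.array([[1.0, 3.0]])

loss = uncertainty_weighted_loss(student_pred, teacher_preds)
plain_mse = float(((student_pred - teacher_preds.mean(axis=0)) ** 2).mean())
```

In this toy case the student's only error falls on the pixel where the teachers disagree, so the weighted loss is smaller than the plain mean squared error against the pseudo-label.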

RecFNO: a resolution-invariant flow and heat field reconstruction method from sparse observations via Fourier neural operator

Feb 20, 2023

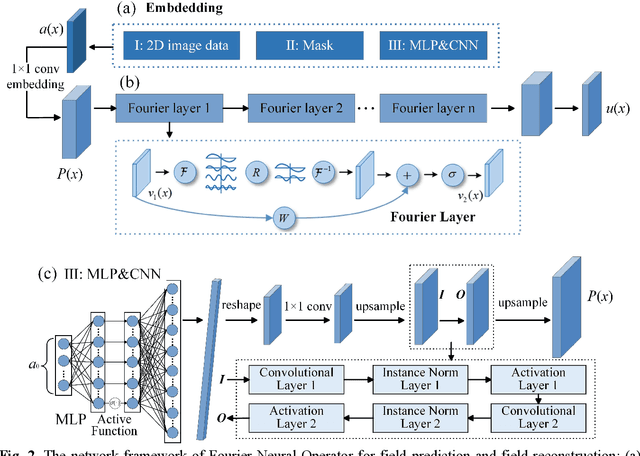

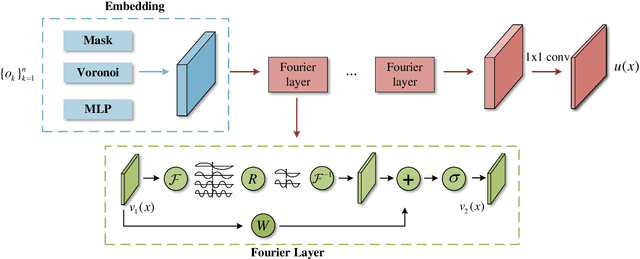

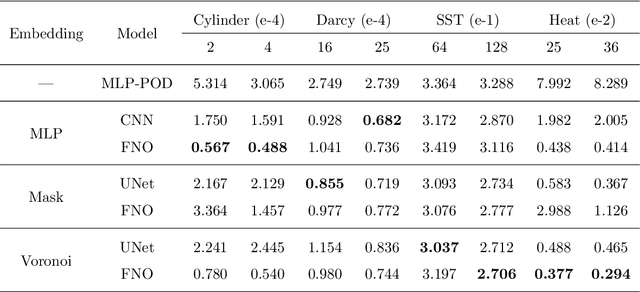

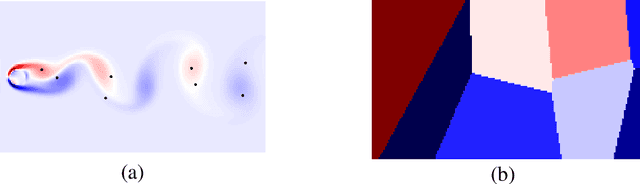

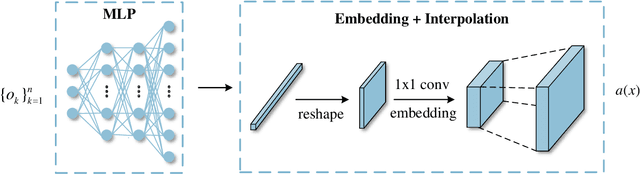

Abstract: Perception of the full state is an essential technology for supporting the monitoring, analysis, and design of physical systems, and one of its challenges is recovering the global field from sparse observations. Known for their strong approximation ability, deep neural networks have attracted attention in data-driven flow and heat field reconstruction studies. However, limited by network structure, existing studies mostly learn the reconstruction mapping in finite-dimensional space and transfer poorly to outputs of variable resolution. In this paper, we extend the new paradigm of the neural operator and propose RecFNO, an end-to-end physical field reconstruction method with both excellent performance and mesh transferability. The proposed method aims to learn the mapping from sparse observations to the flow and heat field in infinite-dimensional space, which contributes to a more powerful nonlinear fitting capacity and a resolution-invariant characteristic. First, according to different usage scenarios, we develop three types of embeddings to model the sparse observation inputs: MLP, mask, and Voronoi embeddings. The MLP embedding is well suited to sparser inputs, while the others benefit from spatial information preservation and perform better as the amount of observation data increases. Then, we adopt stacked Fourier layers to reconstruct the physical field in Fourier space, which regularizes the overall recovered field through the superposition of Fourier modes. Benefiting from the operator in infinite-dimensional space, the proposed method obtains remarkable accuracy and better resolution transferability among meshes. Experiments conducted on fluid mechanics and thermology problems show that the proposed method outperforms existing POD-based and CNN-based methods in most cases and is capable of zero-shot super-resolution.
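
To make the resolution-invariance claim concrete, here is a minimal single-channel, 1D sketch of the spectral multiplication at the core of a Fourier layer: transform to Fourier space, scale the lowest few modes by complex weights, and transform back. A full Fourier Neural Operator layer also mixes channels, adds a pointwise linear path, and applies a nonlinearity; those parts are omitted, and the fixed weights stand in for learned ones.

```python
import numpy as np

def spectral_conv1d(u, weights):
    """Scale the lowest len(weights) Fourier modes of a periodic 1D signal.

    norm="forward" makes the coefficients independent of the grid size,
    so the same weights can be applied at any resolution.
    """
    n = u.shape[-1]
    modes = len(weights)
    u_hat = np.fft.rfft(u, norm="forward")
    out_hat = np.zeros_like(u_hat)              # higher modes are truncated to zero
    out_hat[:modes] = u_hat[:modes] * weights
    return np.fft.irfft(out_hat, n=n, norm="forward")

def field(x):
    """Toy band-limited field on the unit interval."""
    return 1.0 + np.sin(2 * np.pi * x) + np.cos(6 * np.pi * x)

# Stand-in for learned weights on modes 0..3.
weights = np.array([1.0, 0.5, 0.0, 2.0], dtype=complex)

x_coarse = np.arange(32) / 32
x_fine = np.arange(64) / 64
y_coarse = spectral_conv1d(field(x_coarse), weights)
y_fine = spectral_conv1d(field(x_fine), weights)
```

Because the weights act on Fourier modes and not on grid points, the fine-grid output sampled at every second point coincides with the coarse-grid output, which is the property that allows evaluation on a finer mesh than the one used for training.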

Multi-fidelity surrogate modeling for temperature field prediction using deep convolution neural network

Jan 17, 2023

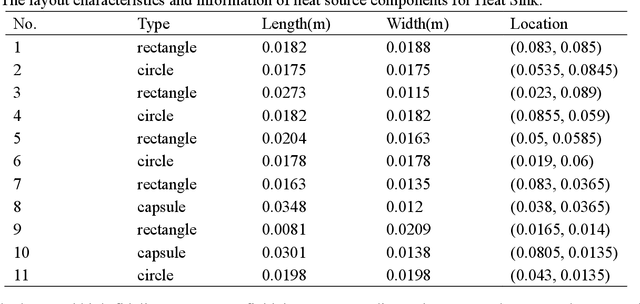

Abstract: Temperature field prediction is of great importance in the thermal design of systems engineering, and building a surrogate model is an effective approach to the task. Generally, large amounts of labeled data are required to guarantee good prediction performance of the surrogate model, especially for a deep learning model, which has more parameters and better representational ability. However, labeled data, especially high-fidelity labeled data, are usually expensive and sometimes even impossible to obtain. To solve this problem, this paper proposes a concise deep multi-fidelity model (DMFM) for temperature field prediction, which takes advantage of low-fidelity data to boost performance with less high-fidelity data. First, a pre-train and fine-tune paradigm is developed in DMFM to train on the low-fidelity and high-fidelity data, which significantly reduces the complexity of the deep surrogate model. Then, a self-supervised learning method for training the physics-driven deep multi-fidelity model (PD-DMFM) is proposed, which fully utilizes the physical characteristics of the engineering system and reduces the dependence on large amounts of labeled low-fidelity data in the training process. Two diverse temperature field prediction problems are constructed to validate the effectiveness of DMFM and PD-DMFM, and the results show that the proposed method can greatly reduce the dependence of the model on high-fidelity data.

Masked Spatial-Spectral Autoencoders Are Excellent Hyperspectral Defenders

Jul 16, 2022

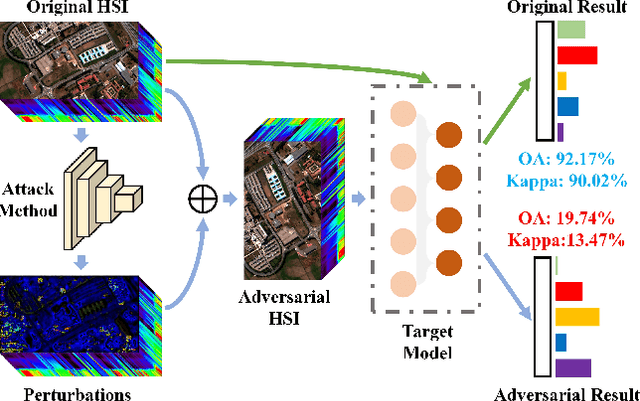

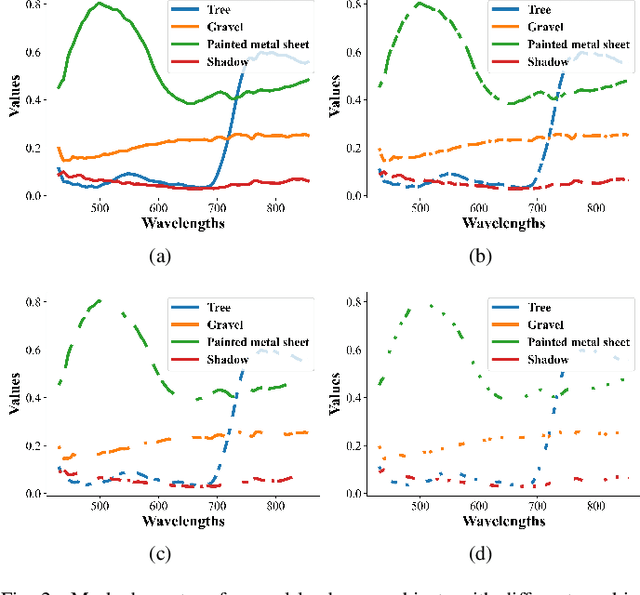

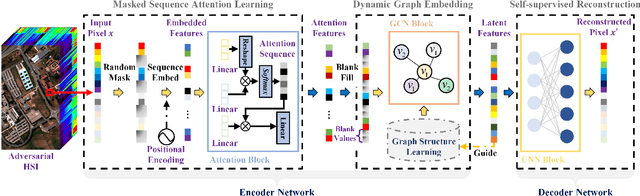

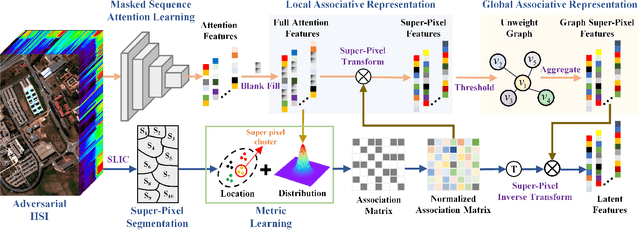

Abstract: Deep learning has contributed substantially to the development of the hyperspectral image (HSI) analysis community. However, it also makes HSI analysis systems vulnerable to adversarial attacks. To this end, we propose a masked spatial-spectral autoencoder (MSSA), built on self-supervised learning theory, for enhancing the robustness of HSI analysis systems. First, a masked sequence attention learning module is constructed to promote the inherent robustness of HSI analysis systems along the spectral channel. Then, we develop a graph convolutional network with a learnable graph structure to establish global pixel-wise combinations. In this way, the attack effect is dispersed across all the related pixels in each combination, and better defense performance is achievable in the spatial aspect. Finally, to improve defense transferability and address the problem of limited labeled samples, MSSA employs spectra reconstruction as a pretext task and fits the datasets in a self-supervised manner. Comprehensive experiments on three benchmarks verify the effectiveness of MSSA in comparison with state-of-the-art hyperspectral classification methods and representative adversarial defense strategies.
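
The spectra-reconstruction pretext task can be pictured with the sketch below: a random subset of spectral bands is hidden, and the reconstruction error is measured only on the hidden bands. The masking ratio, the zero fill value, and the function names are assumptions for illustration; the abstract does not describe MSSA's actual masking scheme or loss.

```python
import numpy as np

def mask_spectrum(spectrum, mask_ratio, rng):
    """Hide a random subset of bands; return the masked spectrum and the mask."""
    n_bands = spectrum.shape[0]
    n_masked = int(round(mask_ratio * n_bands))
    masked_idx = rng.choice(n_bands, size=n_masked, replace=False)
    mask = np.zeros(n_bands, dtype=bool)
    mask[masked_idx] = True
    visible = np.where(mask, 0.0, spectrum)     # masked bands are filled with zeros
    return visible, mask

def masked_reconstruction_loss(reconstruction, spectrum, mask):
    """Mean squared error computed on the masked bands only."""
    return float(np.mean((reconstruction[mask] - spectrum[mask]) ** 2))

rng = np.random.default_rng(0)
spectrum = np.linspace(0.1, 1.0, 20)            # toy 20-band pixel spectrum
visible, mask = mask_spectrum(spectrum, mask_ratio=0.5, rng=rng)

# A perfect reconstruction scores zero; returning the masked input does not.
perfect_loss = masked_reconstruction_loss(spectrum, spectrum, mask)
trivial_loss = masked_reconstruction_loss(visible, spectrum, mask)
```

No labels are needed to compute this loss, which is what lets a pretext task of this kind run on unlabeled pixels.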

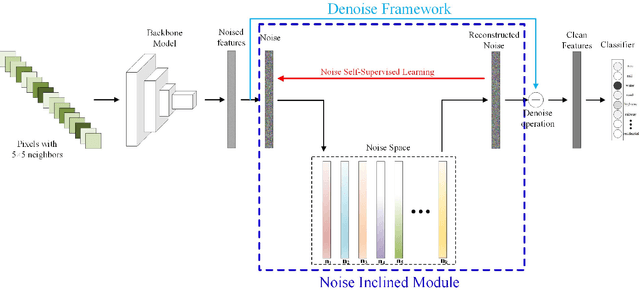

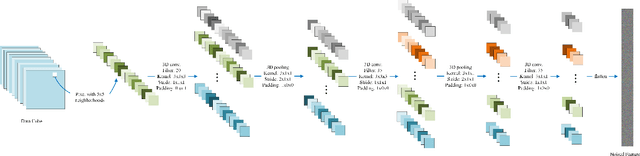

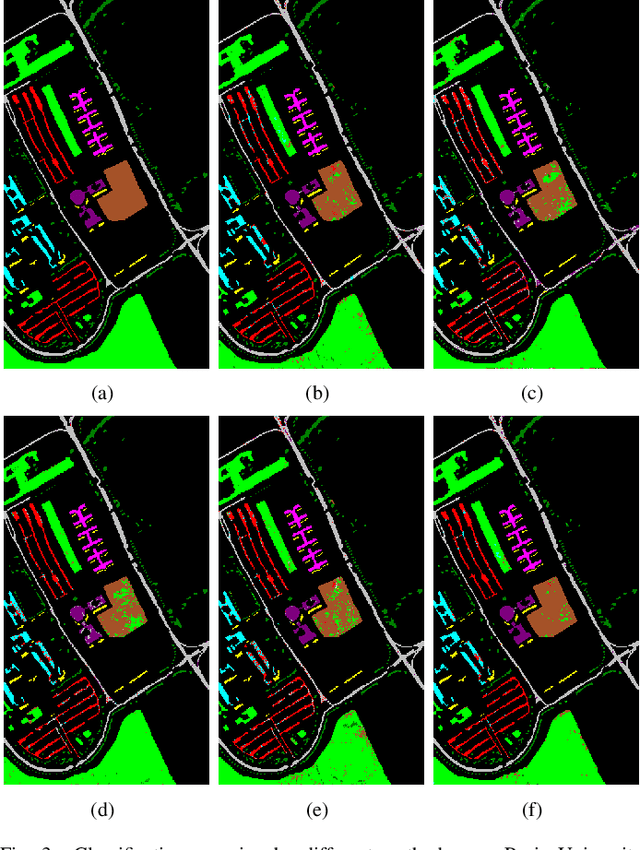

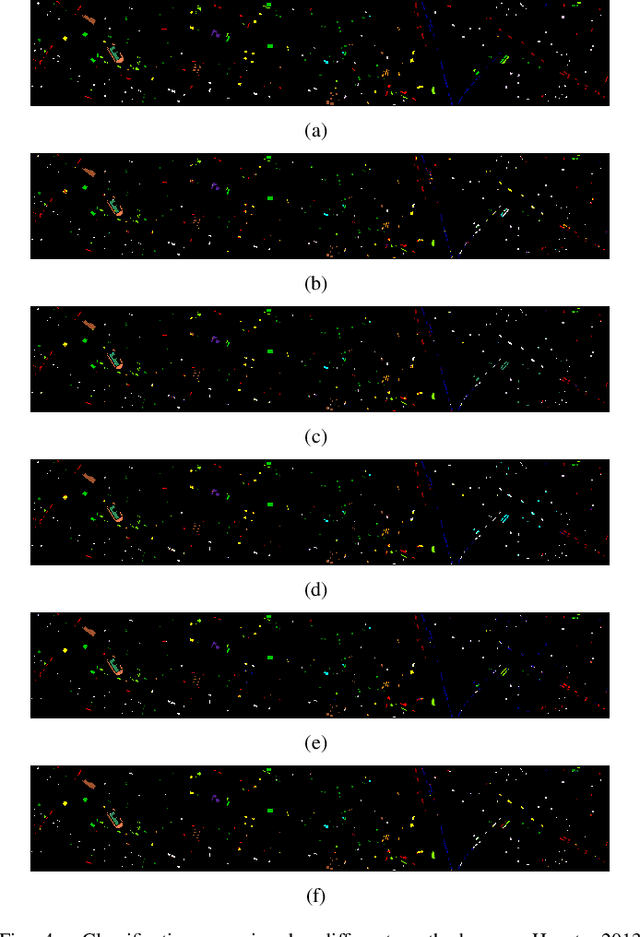

A CNN with Noise Inclined Module and Denoise Framework for Hyperspectral Image Classification

May 25, 2022

Abstract: Deep neural networks have been successfully applied to hyperspectral image classification. However, most prior works adopt general deep architectures while ignoring the intrinsic structure of the hyperspectral image, such as the physical noise generation. This prevents these deep models from generating discriminative features and achieving strong classification performance. To leverage such intrinsic information, this work develops a novel deep learning framework with a noise inclined module and a denoise framework for hyperspectral image classification. First, we model the spectral signature of the hyperspectral image with a physical noise model to describe the high intra-class variance of each class and the large overlap between different classes in the image. Then, a noise inclined module is developed to capture the physical noise within each object, followed by a denoise framework that removes such noise from the object. Finally, the CNN with the noise inclined module and the denoise framework is developed to obtain discriminative features and provide good classification performance on hyperspectral images. Experiments are conducted on two commonly used real-world datasets, and the experimental results show the effectiveness of the proposed method. The implementation of the proposed method and the compared methods can be accessed at https://github.com/shendu-sw/noise-physical-framework.
