Xiaohu Zheng

HyperDID: Hyperspectral Intrinsic Image Decomposition with Deep Feature Embedding

Nov 25, 2023

Abstract:The dissection of hyperspectral images into intrinsic components through hyperspectral intrinsic image decomposition (HIID) enhances the interpretability of hyperspectral data, providing a foundation for more accurate classification outcomes. However, the classification performance of HIID is constrained by the model's representational ability. To address this limitation, this study rethinks hyperspectral intrinsic image decomposition for classification tasks by introducing deep feature embedding. The proposed framework, HyperDID, incorporates the Environmental Feature Module (EFM) and Categorical Feature Module (CFM) to extract intrinsic features. Additionally, a Feature Discrimination Module (FDM) is introduced to separate environment-related and category-related features. Experimental results across three commonly used datasets validate the effectiveness of HyperDID in improving hyperspectral image classification performance. This novel approach holds promise for advancing the capabilities of hyperspectral image analysis by leveraging deep feature embedding principles. The implementation of the proposed method could be accessed soon at https://github.com/shendu-sw/HyperDID for the sake of reproducibility.

Multi-fidelity surrogate modeling for temperature field prediction using deep convolution neural network

Jan 17, 2023

Abstract:Temperature field prediction is of great importance in the thermal design of systems engineering, and building a surrogate model is an effective way to accomplish the task. Generally, large amounts of labeled data are required to guarantee good prediction performance of the surrogate model, especially a deep learning model, which has more parameters and better representational ability. However, labeled data, especially high-fidelity labeled data, are usually expensive and sometimes even impossible to obtain. To solve this problem, this paper proposes a pithy deep multi-fidelity model (DMFM) for temperature field prediction, which takes advantage of low-fidelity data to boost performance with less high-fidelity data. First, a pre-train and fine-tune paradigm is developed in DMFM to train on the low-fidelity and high-fidelity data, which significantly reduces the complexity of the deep surrogate model. Then, a self-supervised learning method for training the physics-driven deep multi-fidelity model (PD-DMFM) is proposed, which fully utilizes the physics characteristics of the engineering systems and reduces the dependence on large amounts of labeled low-fidelity data in the training process. Two diverse temperature field prediction problems are constructed to validate the effectiveness of DMFM and PD-DMFM, and the results show that the proposed method can greatly reduce the dependence of the model on high-fidelity data.

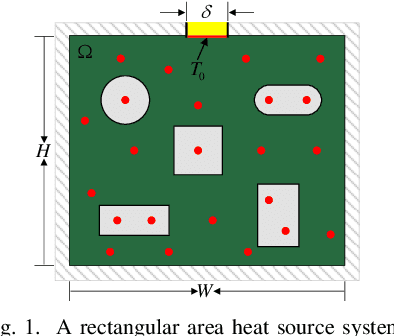

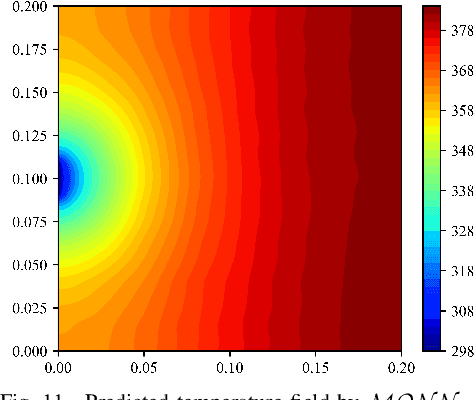

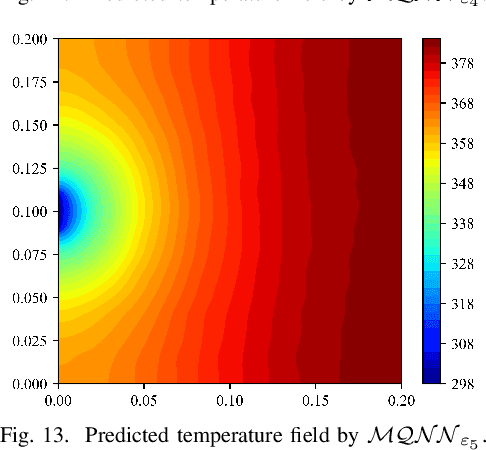

Heat Source Layout Optimization Using Automatic Deep Learning Surrogate Model and Multimodal Neighborhood Search Algorithm

May 16, 2022

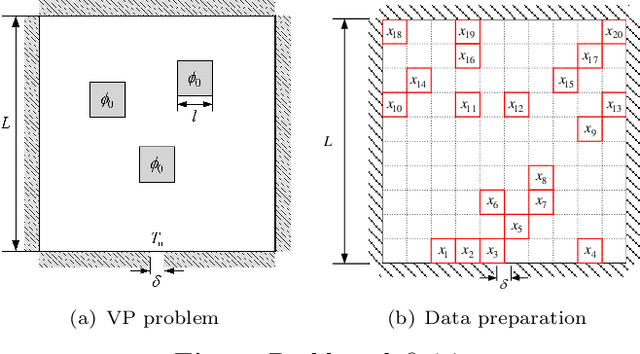

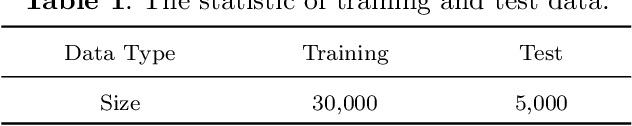

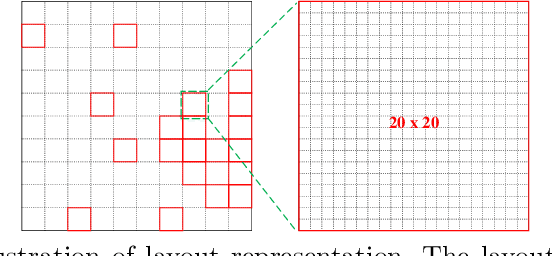

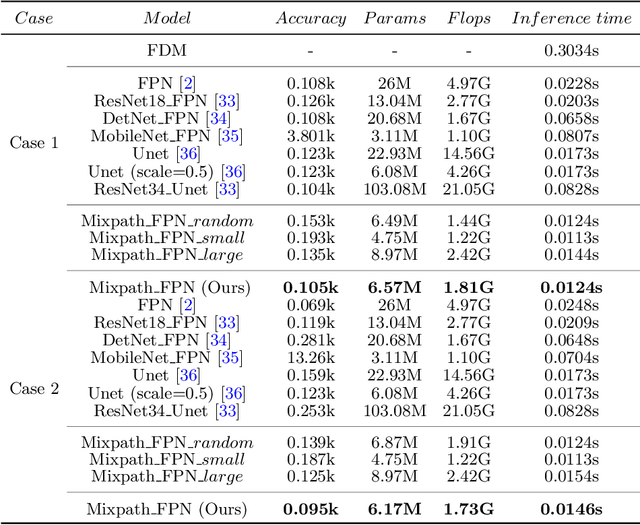

Abstract:In satellite layout design, heat source layout optimization (HSLO) is an effective technique to decrease the maximum temperature and improve the heat management of the whole system. Recently, deep learning surrogate assisted HSLO has been proposed, which learns the mapping from a layout to its corresponding temperature field, so as to substitute the simulation during optimization and greatly decrease the computational cost. However, it faces two main challenges: 1) the neural network surrogate for a given task is often manually designed to be complex and requires rich debugging experience, which is challenging for designers in the engineering field; 2) existing algorithms for HSLO can only obtain one near-optimal solution in a single optimization run and are easily trapped in local optima. To address the first challenge, with the aim of reducing the total number of parameters while maintaining similar accuracy, a neural architecture search (NAS) method combined with the Feature Pyramid Network (FPN) framework is developed to automatically search for a small deep learning surrogate model for HSLO. To address the second challenge, a multimodal neighborhood search based layout optimization algorithm (MNSLO) is proposed, which can obtain more and better approximate optimal design schemes simultaneously in a single optimization run. Finally, two typical two-dimensional heat conduction optimization problems are utilized to demonstrate the effectiveness of the proposed method. With similar accuracy, NAS finds models with 80% fewer parameters, 64% fewer FLOPs and 36% faster inference time than the original FPN. Besides, with the assistance of the automatically searched deep learning surrogate, MNSLO can achieve multiple near-optimal design schemes simultaneously to provide more design diversity for designers.

Algorithms for Bayesian network modeling and reliability inference of complex multistate systems: Part II-Dependent systems

Apr 04, 2022

Abstract:In using the Bayesian network (BN) to construct the complex multistate system's reliability model as described in Part I, the memory storage requirements of the node probability table (NPT) will exceed the random access memory (RAM) of the computer. However, the proposed inference algorithm of Part I is not suitable for the dependent system. This Part II proposes a novel method for BN reliability modeling and analysis that applies the compression idea to the complex multistate dependent system. In this Part II, the dependent nodes and their parent nodes are treated as equivalent to a block, based on which the multistate joint probability inference algorithm is proposed to calculate the joint probability distribution of all nodes in a block. Then, based on the proposed multistate compression algorithm of Part I, the dependent multistate inference algorithm is proposed for the complex multistate dependent system. The use and accuracy of the proposed algorithms are demonstrated in case 1. Finally, the proposed algorithms are applied to the reliability modeling and analysis of the satellite attitude control system. The results show that the algorithms proposed in Part I and Part II make the reliability modeling and analysis of the complex multistate system feasible.
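The memory problem mentioned in this abstract can be made concrete with a little arithmetic: a node probability table holds one probability for every combination of the node's own states and its parents' states, so its size is the product of those state counts. The sketch below is only illustrative. The function names and the 8-byte (float64) entry size are assumptions, not details from the paper.

```python
def npt_entries(node_states: int, parent_states: list[int]) -> int:
    """Number of probabilities in an uncompressed node probability table."""
    entries = node_states
    for states in parent_states:
        entries *= states  # one factor per parent node
    return entries


def npt_bytes(node_states: int, parent_states: list[int],
              bytes_per_entry: int = 8) -> int:
    """Storage needed if every entry is a float64 (assumed entry size)."""
    return npt_entries(node_states, parent_states) * bytes_per_entry


# Hypothetical system node: 3 states, 20 parents with 3 states each.
entries = npt_entries(3, [3] * 20)       # 3**21 = 10460353203 entries
gigabytes = npt_bytes(3, [3] * 20) / 1e9
print(f"{entries} entries, about {gigabytes:.1f} GB")
```

Because the table grows exponentially with the number of parents, adding even a few components pushes an uncompressed NPT past typical RAM sizes, which is the motivation for compression-based inference.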

Consistency regularization-based Deep Polynomial Chaos Neural Network Method for Reliability Analysis

Apr 04, 2022

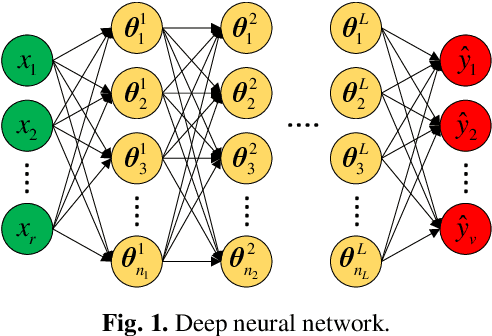

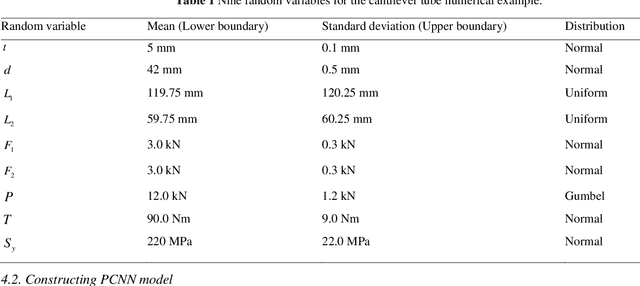

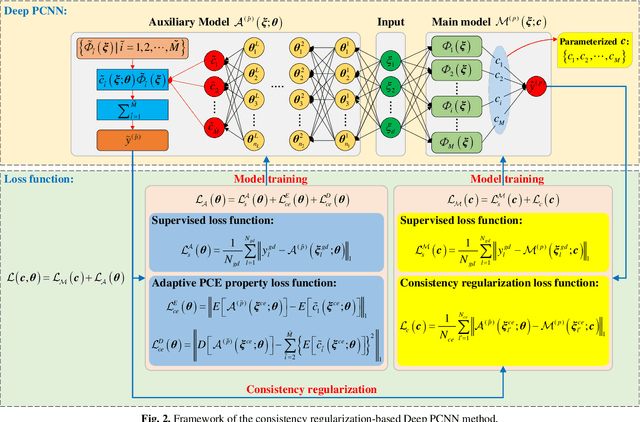

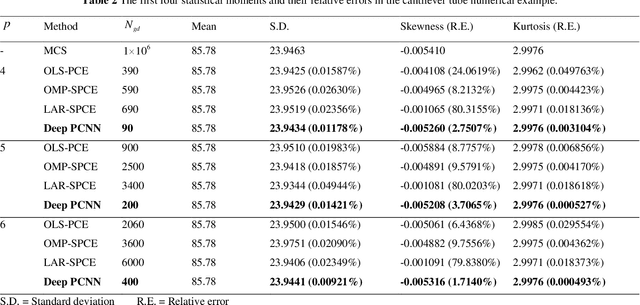

Abstract:Polynomial chaos expansion (PCE) is a powerful surrogate model-based reliability analysis method. Generally, a PCE model with a higher expansion order is usually required to obtain an accurate surrogate model for some complex non-linear stochastic systems. However, the high-order PCE increases the number of labeled data required for solving the expansion coefficients. To alleviate this problem, this paper proposes a consistency regularization-based deep polynomial chaos neural network (Deep PCNN) method, including the low-order adaptive PCE model (the auxiliary model) and the high-order polynomial chaos neural network (the main model). The expansion coefficients of the main model are parameterized into the learnable weights of the polynomial chaos neural network, realizing iterative learning of expansion coefficients to obtain more accurate high-order PCE models. The auxiliary model uses a proposed consistency regularization loss function to assist in training the main model. The consistency regularization-based Deep PCNN method can significantly reduce the number of labeled data in constructing a high-order PCE model without losing accuracy by using few labeled data and abundant unlabeled data. A numerical example validates the effectiveness of the consistency regularization-based Deep PCNN method, and then this method is applied to analyze the reliability of two aerospace engineering systems.
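The link between expansion order and data demand can be illustrated by counting coefficients. Under the common total-degree truncation, a PCE in d random variables of order p has C(d + p, p) terms, and a regression-based fit needs at least that many labeled samples. The sketch below assumes total-degree truncation, which the abstract does not specify, and the function name is hypothetical.

```python
import math


def pce_term_count(num_variables: int, order: int) -> int:
    """Number of basis terms in a total-degree-truncated PCE: C(d + p, p)."""
    return math.comb(num_variables + order, order)


# How the coefficient count grows with order for 10 random variables.
for order in (2, 3, 5):
    print(order, pce_term_count(10, order))
```

For 10 variables, moving from order 2 to order 5 raises the coefficient count from 66 to 3003, which shows why a method that can exploit unlabeled data is attractive for high-order models.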

Semi-supervision semantic segmentation with uncertainty-guided self cross supervision

Mar 15, 2022

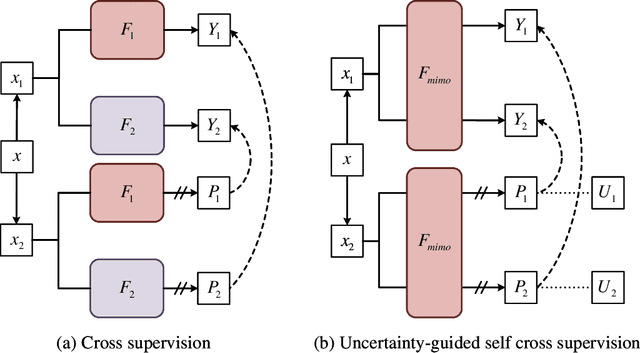

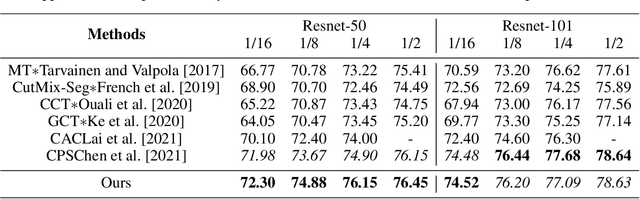

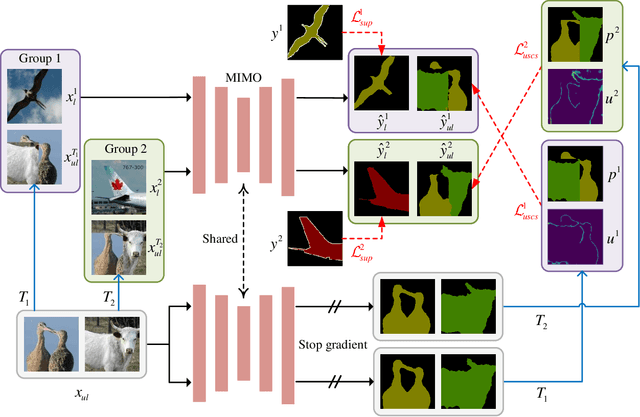

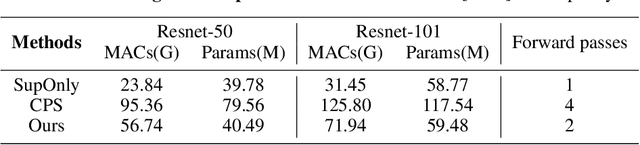

Abstract:As a powerful way of realizing semi-supervised segmentation, the cross supervision method learns cross consistency based on independent ensemble models using abundant unlabeled images. However, the wrong pseudo labeling information generated by cross supervision would confuse the training process and negatively affect the effectiveness of the segmentation model. Besides, the training process of ensemble models in such methods also multiplies the cost of computation resources and decreases the training efficiency. To solve these problems, we propose a novel cross supervision method, namely uncertainty-guided self cross supervision (USCS). In addition to ensemble models, we first design a multi-input multi-output (MIMO) segmentation model which can generate multiple outputs with a shared model and consequently impose consistency over the outputs, saving the cost on parameters and calculations. On the other hand, we employ uncertainty as guiding information to encourage the model to focus on the high-confidence regions of pseudo labels and mitigate the effects of wrong pseudo labeling in self cross supervision, improving the performance of the segmentation model. Extensive experiments show that our method achieves state-of-the-art performance while saving 40.5% and 49.1% cost on parameters and calculations.
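One simple way to picture uncertainty-guided pseudo-label supervision is to down-weight each pixel's loss by the entropy of the prediction that produced its pseudo label. The sketch below is an assumption-laden illustration and not the USCS formulation: the entropy-based weight, the function names, and the per-pixel averaging are all choices made here for clarity.

```python
import math


def normalized_entropy(probs: list[float]) -> float:
    """Shannon entropy of a class distribution, scaled to [0, 1]."""
    entropy = -sum(p * math.log(p) for p in probs if p > 0.0)
    return entropy / math.log(len(probs))


def weighted_pseudo_label_loss(student: list[list[float]],
                               teacher: list[list[float]]) -> float:
    """Cross-entropy against teacher pseudo labels, weighted by confidence.

    Each inner list is one pixel's class-probability vector. The weight
    (1 - normalized entropy) is an assumed stand-in for the paper's
    uncertainty measure.
    """
    total = 0.0
    for s, t in zip(student, teacher):
        label = max(range(len(t)), key=t.__getitem__)  # hard pseudo label
        weight = 1.0 - normalized_entropy(t)           # confident -> near 1
        total += weight * -math.log(max(s[label], 1e-12))
    return total / len(student)


student = [[0.7, 0.3], [0.4, 0.6]]
teacher = [[0.99, 0.01], [0.5, 0.5]]  # second pixel is maximally uncertain
print(weighted_pseudo_label_loss(student, teacher))
```

In this toy case the second pixel contributes almost nothing to the loss because its pseudo label comes from a uniform prediction, so only confident regions steer the update.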

Contrastive Enhancement Using Latent Prototype for Few-Shot Segmentation

Mar 08, 2022

Abstract:Few-shot segmentation enables the model to recognize unseen classes with few annotated examples. Most existing methods adopt a prototype learning architecture, where support prototype vectors are expanded and concatenated with query features to perform conditional segmentation. However, such a framework potentially focuses more on query features and may neglect the similarity between support and query features. This paper proposes a contrastive enhancement approach using latent prototypes to leverage latent classes and raise the utilization of similarity information between prototype and query features. Specifically, a latent prototype sampling module is proposed to generate pseudo-masks and novel prototypes based on feature similarity. The module conveniently conducts end-to-end learning and, unlike cluster-based methods, has no strong dependence on the number of clusters. Besides, a contrastive enhancement module is developed to drive models to provide different predictions with the same query features. Our method can be used as an auxiliary module and flexibly integrated into other baselines for better segmentation performance. Extensive experiments show our approach remarkably improves the performance of state-of-the-art methods for 1-shot and 5-shot segmentation, especially outperforming the baseline by 5.9% and 7.3% for the 5-shot task on Pascal-5^i and COCO-20^i. Source code is available at https://github.com/zhaoxiaoyu1995/CELP-Pytorch

Physics-Informed Deep Monte Carlo Quantile Regression method for Interval Multilevel Bayesian Network-based Satellite Heat Reliability Analysis

Feb 14, 2022

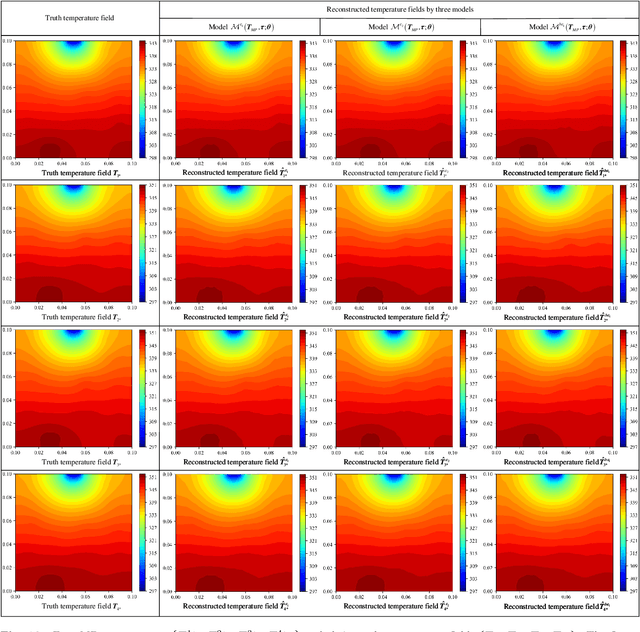

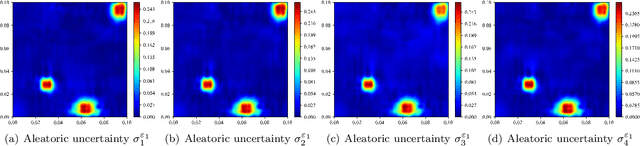

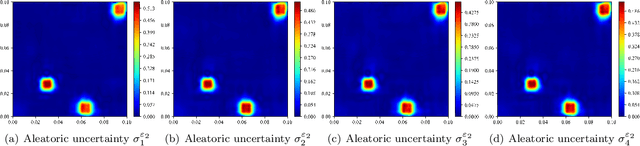

Abstract:Temperature field reconstruction is essential for analyzing satellite heat reliability. As a representative machine learning model, the deep convolutional neural network (DCNN) is a powerful tool for reconstructing the satellite temperature field. However, DCNN needs a lot of labeled data to learn its parameters, which is contrary to the fact that actual satellite engineering can only acquire noisy unlabeled data. To solve the above problem, this paper proposes an unsupervised method, i.e., the physics-informed deep Monte Carlo quantile regression method, for reconstructing temperature field and quantifying the aleatoric uncertainty caused by data noise. For one thing, the proposed method combines a deep convolutional neural network with the known physics knowledge to reconstruct an accurate temperature field using only monitoring point temperatures. For another thing, the proposed method can quantify the aleatoric uncertainty by the Monte Carlo quantile regression. Based on the reconstructed temperature field and the quantified aleatoric uncertainty, this paper models an interval multilevel Bayesian Network to analyze satellite heat reliability. Two case studies are used to validate the proposed method.

Deep Monte Carlo Quantile Regression for Quantifying Aleatoric Uncertainty in Physics-informed Temperature Field Reconstruction

Feb 14, 2022

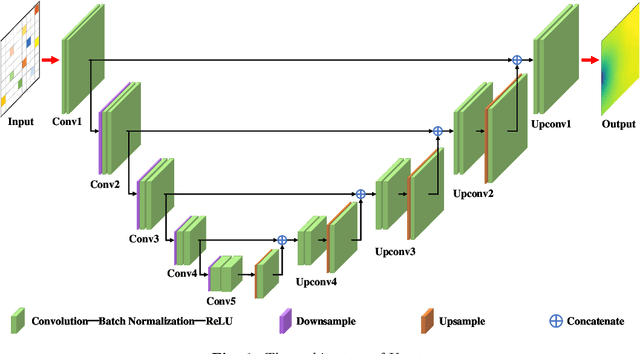

Abstract:For the temperature field reconstruction (TFR), a complex image-to-image regression problem, the convolutional neural network (CNN) is a powerful surrogate model due to the convolutional layer's good image feature extraction ability. However, a lot of labeled data is needed to train a CNN, and a common CNN cannot quantify the aleatoric uncertainty caused by data noise. In actual engineering, noiseless and labeled training data is hard to obtain for the TFR. To solve these two problems, this paper proposes a deep Monte Carlo quantile regression (Deep MC-QR) method for reconstructing the temperature field and quantifying aleatoric uncertainty caused by data noise. On the one hand, the Deep MC-QR method uses physical knowledge to guide the training of the CNN. Thereby, the Deep MC-QR method can reconstruct an accurate TFR surrogate model without any labeled training data. On the other hand, the Deep MC-QR method constructs a quantile level image for each input in each training epoch. Then, the trained CNN model can quantify aleatoric uncertainty by quantile level image sampling during the prediction stage. Finally, the effectiveness of the proposed Deep MC-QR method is validated by many experiments, and the influence of data noise on TFR is analyzed.
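Quantile regression is usually trained with the pinball (quantile) loss, and a Monte Carlo variant draws a fresh quantile level at random instead of fixing one. The sketch below shows that generic idea on flat lists of pixel values. It is not the Deep MC-QR training procedure: the abstract does not state its loss, and the function names and uniform sampling of quantile levels are assumptions.

```python
import random


def pinball_loss(y_true: list[float], y_pred: list[float],
                 taus: list[float]) -> float:
    """Mean pinball loss with one quantile level per element (per pixel)."""
    total = 0.0
    for y, q, tau in zip(y_true, y_pred, taus):
        diff = y - q
        # Under-prediction costs tau per unit, over-prediction costs 1 - tau.
        total += max(tau * diff, (tau - 1.0) * diff)
    return total / len(y_true)


def sample_quantile_levels(num_pixels: int, rng: random.Random) -> list[float]:
    """Draw a random 'quantile level image' (flattened), one level per pixel."""
    return [rng.uniform(0.01, 0.99) for _ in range(num_pixels)]


rng = random.Random(0)
observed = [300.0, 305.0, 310.0]    # hypothetical noisy temperatures (K)
predicted = [301.0, 304.0, 310.0]   # hypothetical network outputs
taus = sample_quantile_levels(len(observed), rng)
print(pinball_loss(observed, predicted, taus))
```

At prediction time, feeding several different quantile levels for the same input would yield a spread of outputs, and that spread is what serves as the aleatoric uncertainty estimate in this kind of scheme.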

Mini-data-driven Deep Arbitrary Polynomial Chaos Expansion for Uncertainty Quantification

Jul 22, 2021

Abstract:The surrogate model-based uncertainty quantification method has drawn a lot of attention in recent years. Both polynomial chaos expansion (PCE) and deep learning (DL) are powerful methods for building a surrogate model. However, the PCE needs to increase the expansion order to improve the accuracy of the surrogate model, which requires more labeled data to solve the expansion coefficients, and DL also needs a lot of labeled data to train the neural network model. This paper proposes a deep arbitrary polynomial chaos expansion (Deep aPCE) method to improve the balance between surrogate model accuracy and training data cost. On the one hand, the multilayer perceptron (MLP) model is used to solve the adaptive expansion coefficients of arbitrary polynomial chaos expansion, which can improve the Deep aPCE model accuracy with lower expansion order. On the other hand, the adaptive arbitrary polynomial chaos expansion's properties are used to construct the MLP training cost function based on only a small amount of labeled data and a large amount of unlabeled data, which can significantly reduce the training data cost. Four numerical examples and an actual engineering problem are used to verify the effectiveness of the Deep aPCE method.
